
Table of contents

- Challenges of the vSphere Standard Switch (VSS)

- What is a vSphere Distributed Switch (vDS)?

- vDS Features Missing in the VSS

- Creating a vSphere Distributed Switch (vDS)

- Creating a vSphere Distributed Switch Port Group

- Adding ESXi hosts to the vSphere Distributed Switch

- Adding VMkernel adapters

- Migrating to vSphere Distributed Switch (vDS)

- Conclusion

One of the core pillars of VMware vSphere infrastructure is the underlying virtual networking provided by the virtual switch or vSwitch. Without the vSwitch, communication would not be possible between the virtual stack’s various layers, including the physical networking layer. Back in vSphere version 4.x, VMware released a new virtual switch type – the vSphere Distributed Switch (vDS).

The vSphere Distributed Switch (vDS) has many advantages over the vSphere Standard Switch (VSS). This complete guide covering the vDS switch will examine the basic concepts of the vDS, including creation, configuration, requirements, VMkernel ports, migration, and other topics.

Challenges of the vSphere Standard Switch (VSS)

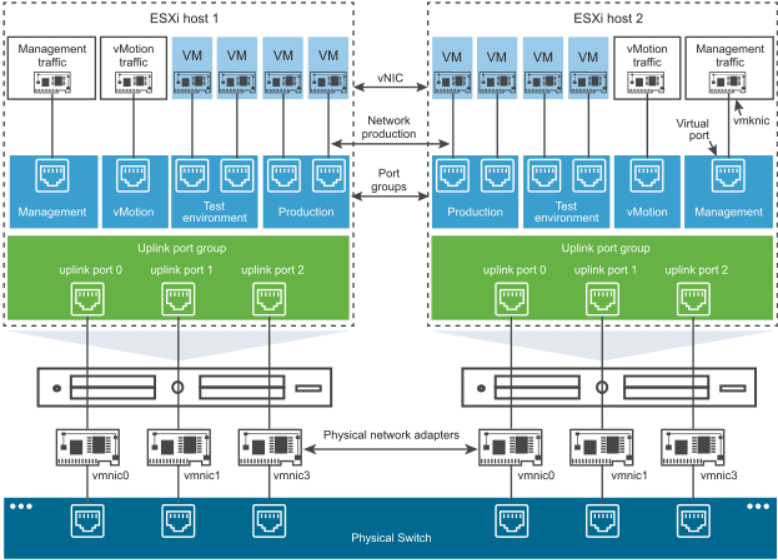

As VMware vSphere customer environments began to grow, it became readily apparent that the vSphere Standard Switch (VSS) was not well-equipped to satisfy the networking needs of large environments at scale. To understand what the vSphere Distributed Switch is, we need first to understand a bit more about the vSphere Standard Switch. The vSphere Standard Switch provides the default network connectivity to hosts and virtual machines. It can bridge traffic internally between virtual machines running on the host and link them to external networks.

This functionality is similar to a physical Ethernet switch. The virtual machine network adapters and physical NICs on the ESXi host use the switch’s logical ports as each adapter uses one port. Each logical port on the VSS is a member of a single port group. One of the key differentiators between the vSphere Standard Switch (VSS) and the vSphere Distributed Switch is where the management resides. The VSS is a host-centric vSphere virtual switch. It is created by default when you first install VMware ESXi. When you assign a management IP address to the ESXi host, this is the first VMkernel port created on the default vSwitch0 of the ESXi host.

Virtual switches contain two elements for consideration: a management plane and a data plane. The VSS includes both the management plane and the data plane. For this reason, you manage each VSS as a single, standalone entity from the perspective of the ESXi host.

The overview architecture of the vSphere Standard Switch (VSS)

Since the VSS contains both the management and data planes, any VLANs and the port groups associated with those VLANs must be managed and maintained on each specific ESXi host. Even other ESXi hosts in the same vSphere cluster are unaware of the VSS switches and port groups configured on the other ESXi hosts. If you have 100 VSS port groups created on a specific ESXi host, you must create these same VSS port groups on all other ESXi hosts to access those particular port groups.

It is essential in a vSphere cluster to have the same VSS port groups and VLAN IDs configured with the same names and exact details. Any virtual machines vMotioned between ESXi hosts in a given cluster must have the same virtual networks on the destination ESXi host, or these will risk losing network connectivity.
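The consistency requirement above can be sketched as a simple comparison. This is a minimal illustration in plain Python, not a VMware API: the host names, port group names, VLAN IDs, and the `missing_port_groups` helper are all hypothetical, and each host's VSS configuration is modeled as a set of (name, VLAN ID) pairs.

```python
# Hypothetical model: each host's VSS port groups as a set of (name, VLAN ID) pairs.
# Nothing here talks to ESXi or vCenter; it only illustrates the consistency check.

def missing_port_groups(hosts):
    """Return, per host, the port groups defined elsewhere in the cluster but absent locally."""
    # The union of every host's port groups is what a VM might need after a vMotion.
    all_groups = set().union(*hosts.values()) if hosts else set()
    return {
        host: sorted(all_groups - groups)
        for host, groups in hosts.items()
        if all_groups - groups
    }


cluster = {
    "esxi01": {("VM Network", 0), ("Prod-Web", 110), ("Prod-DB", 120)},
    "esxi02": {("VM Network", 0), ("Prod-Web", 110)},
    # Same name but a different VLAN ID: treated as a mismatch, not a match.
    "esxi03": {("VM Network", 0), ("Prod-Web", 111), ("Prod-DB", 120)},
}

for host, gaps in missing_port_groups(cluster).items():
    print(host, "is missing", gaps)
```

In this made-up cluster every host is flagged, because a single mistyped VLAN ID on one host makes its port group differ from the others. That per-host bookkeeping is exactly what becomes painful as the number of hosts grows.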

The vSphere Standard Switch works well in small and even large vSphere environments. However, the challenge with the VSS comes in the form of management at scale. Also, specific VMware solutions require the vSphere Distributed Switch (vDS), such as NSX-T when you are not using the N-VDS.

When you think about creating the same vSphere Standard Switches and port groups on a few hosts, this is not too difficult. However, what if you had hundreds or even thousands of hosts that needed the same vSwitch? What if you needed to make a simple change on the vSwitch and need this change reflected on all hosts? In these management scenarios, this is where the vSphere Standard Switch’s management woes start to show and where the vSphere Distributed Switch begins to shine. Without further ado, let’s introduce the vSphere Distributed Switch.

What is a vSphere Distributed Switch (vDS)?

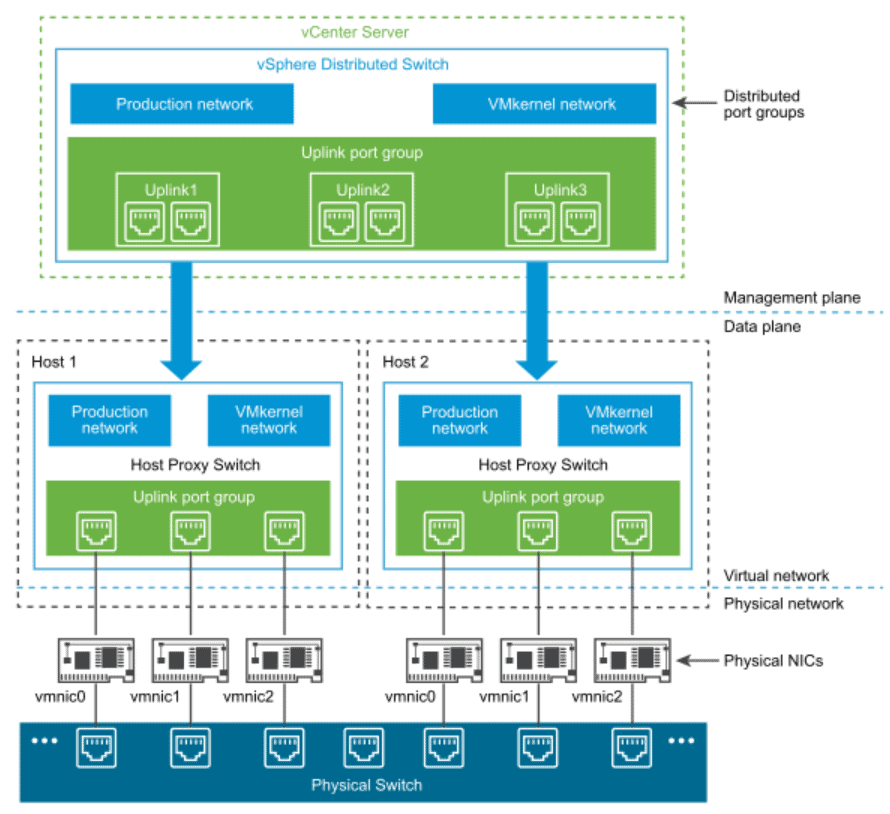

When it comes to the basics of how the vSphere Distributed Switch (vDS) passes traffic, it is very similar to the VSS. However, in terms of management and features, it is a much more powerful virtual networking construct. The vSphere Distributed Switch separates the management plane and the data plane. All management of the vSphere Distributed Switch resides on the vCenter Server while the data plane that passes traffic remains local to the ESXi host.

Overview of vSphere Distributed Switch (vDS) architecture

This separation of the management and data planes means that while all management tasks are taken care of at the vCenter Server level, no vSphere Distributed Switch traffic traverses vCenter Server. In this way, there are no network interruptions with your hosts using vSphere Distributed Switches if vCenter Server goes down. The data plane component configured on the ESXi host is known as the host proxy switch.

To effectively separate the management and data planes of the vSphere Distributed Switch, VMware introduced two new abstractions in the architecture of the vDS. These are the uplink port group and the distributed port group. Let’s see how these come into play with the vSphere Distributed Switch.

vSphere Distributed Switch Uplink Port group

The vDS uplink port group, also known as the dvuplink port group, is first created when you create the vSphere Distributed Switch to which it is associated. As mentioned, it is an abstraction that allows the distributed nature of the vDS. The vSphere Distributed Switch uplink port group is a template of sorts that allows configuring the physical uplinks on the ESXi hosts and policies that define failover and load-balancing configuration. Each of the physical uplinks on an ESXi host maps to the uplink port group ID. You configure policies on the uplink port group. The host proxy switch located on the ESXi host receives the configuration defined in the failover and load-balancing policies.

vSphere Distributed Switch Port Group

The vSphere Distributed Switch port groups are essential constructs in the vDS. These provide network connectivity to VMs and also provide the conduit for VMkernel traffic. You label the vDS port groups with a network label like you would label a VSS port group. These must be unique to each vSphere data center. The vDS port groups are also crucial as these are where the policies are applied that affect teaming, failover, load balancing, VLAN configuration, traffic shaping, and security.

You apply the configuration for the vSphere Distributed Switch port group at the vCenter Server level. The settings then propagate down to the ESXi host proxy switch. In this way, virtual machines can share the same network configuration by connecting virtual machines to the same vSphere Distributed Switch port group.
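To make the split between the management plane and the data plane concrete, here is a toy model in plain Python. It is only a sketch: the `DistributedSwitch` and `HostProxySwitch` classes, the switch name, and the VLAN ID are invented for illustration and do not correspond to any VMware SDK object.

```python
# Toy model of the vDS management/data plane split; class names are illustrative only.
from dataclasses import dataclass, field


@dataclass
class HostProxySwitch:
    """Data plane on one ESXi host: holds a local copy of the port group settings."""
    host: str
    port_groups: dict = field(default_factory=dict)

    def vlan_for(self, port_group):
        # Forwarding decisions use only the local copy, never vCenter Server.
        return self.port_groups[port_group]


@dataclass
class DistributedSwitch:
    """Management plane in vCenter Server: the single place where settings are edited."""
    name: str
    port_groups: dict = field(default_factory=dict)
    proxies: list = field(default_factory=list)
    available: bool = True

    def add_host(self, host):
        proxy = HostProxySwitch(host, dict(self.port_groups))
        self.proxies.append(proxy)
        return proxy

    def set_vlan(self, port_group, vlan_id):
        if not self.available:
            raise RuntimeError("vCenter Server is unavailable; configuration changes are blocked")
        self.port_groups[port_group] = vlan_id
        for proxy in self.proxies:  # one change, pushed to every member host
            proxy.port_groups[port_group] = vlan_id


vds = DistributedSwitch("DSwitch-Lab")
host_a = vds.add_host("esxi01")
host_b = vds.add_host("esxi02")
vds.set_vlan("DPG-vMotion", 150)

vds.available = False  # simulate a vCenter Server outage
print(host_a.vlan_for("DPG-vMotion"), host_b.vlan_for("DPG-vMotion"))  # 150 150
```

The point of the sketch is that a single `set_vlan` call reaches every host, while each host keeps answering from its own copy when the management plane is down. Only new configuration changes are blocked during the outage.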

Other Advantages of the vSphere Distributed Switch (vDS)

Aside from much more robust management of your virtual networking across your vSphere landscape, the vDS provides many other advantages and features compared to the vSphere Standard Switch. These include the following:

- Simplified virtual machine network configuration – With the vDS, you can significantly simplify VM networking configuration across your vSphere infrastructure. The vDS allows you to provide centralized control of your VM networking, including centralized control over the port group naming, VLAN configuration, security, and many other settings.

- Link Aggregation Control Protocol (LACP) – Keep in mind that the only supported way to run LACP in your vSphere environment with vSphere virtual networking is using the vSphere Distributed Switch.

- Network health-check capabilities – The vDS provides many network health check capabilities, including checks that verify the vSphere configuration against the physical network.

- Advanced network monitoring and troubleshooting – With the vDS, you have access to RSPAN, ERSPAN, IPFIX (NetFlow version 10), SNMPv3, and rollback and recovery of the network configuration

- Templates for backing up and restoring virtual machine network configuration

- Netdump for network-based host debug

- Advanced networking features – These include Network I/O Control (NIOC), SR-IOV, and BPDU filter, among others.

- Private VLANs (PVLAN) support – The vSphere Distributed Switch allows the use of Private VLANs, which provide even more security options for segmenting traffic

- Bi-directional traffic shaping – You can shape traffic policies on DV port group definitions (average bandwidth, peak bandwidth, and burst size)

Requirements of the vSphere Distributed Switch (vDS)

There are a few requirements to consider with the vSphere Distributed Switch. The first requirement is a statement of the obvious. The vSphere Distributed Switch is a vCenter Server construct, so you must by necessity be running vCenter Server. You will not be able to use the vSphere Distributed Switch if you are running standalone hosts not connected to vCenter Server.

Unlike the vSphere Standard Switch found with all vSphere license types and even with free ESXi, the vSphere Distributed Switch is only available with the vSphere Enterprise Plus license. However, many may not realize they get the vSphere Distributed Switch as part of a vSAN license. The vDS provides many advantages for performance in a vSAN environment due to using Network I/O Control (NIOC).

vDS Features Missing in the VSS

We have touched on this a bit. However, what vDS features are missing in the vSphere Standard Switch (VSS)? There are quite a few to note. These include:

- Centralized management – As described, the vDS is managed from the vCenter Server and not from the ESXi host itself.

- Port mirroring – The ability to mirror network traffic from a virtual switch port to another virtual switch port. Port mirroring is often used with troubleshooting or even capturing traffic for security or forensics purposes.

- Network I/O Control – Network I/O Control is a very robust feature that helps mitigate network contention and prioritize traffic if the network becomes saturated.

- Advanced networking features – Supported features such as Link Aggregation Control Protocol (LACP), Private VLANs (PVLANs), NetFlow, and Link Layer Discovery Protocol (LLDP) are not found on the vSphere Standard Switch

- vNetwork Switch API – This provides an interface for third-party vendors to extend the built-in vSphere Distributed Switch functionality. However, VMware has since ended support of the third-party vSwitch program. Popular third-party vSwitches such as the Cisco 1000V are no longer supported past vSphere 6.5 Update 1.

- Ability to backup and restore vSwitches – The vDS configuration can be backed up in the vSphere Client and restored. There is no built-in functionality equivalent for the vSphere Standard Switch.

- Virtual Machine port blocking – There may be cases where you want to selectively block ports from sending or receiving data using a vSphere Distributed Switch port blocking policy.

- NSX-T support – The vSphere Distributed Switch is the only vSwitch that is supported for use with NSX-T.

- vSphere with Tanzu support – Starting with vSphere 7 Update 1 and vSphere with Tanzu, customers can use native vSphere networking with the vDS to back their Tanzu Kubernetes Grid clusters. VMware has removed the requirement to have NSX-T in the environment, so you can now use a vSphere Distributed Switch for connectivity to your frontend, workload, and management interfaces.

Creating a vSphere Distributed Switch (vDS)

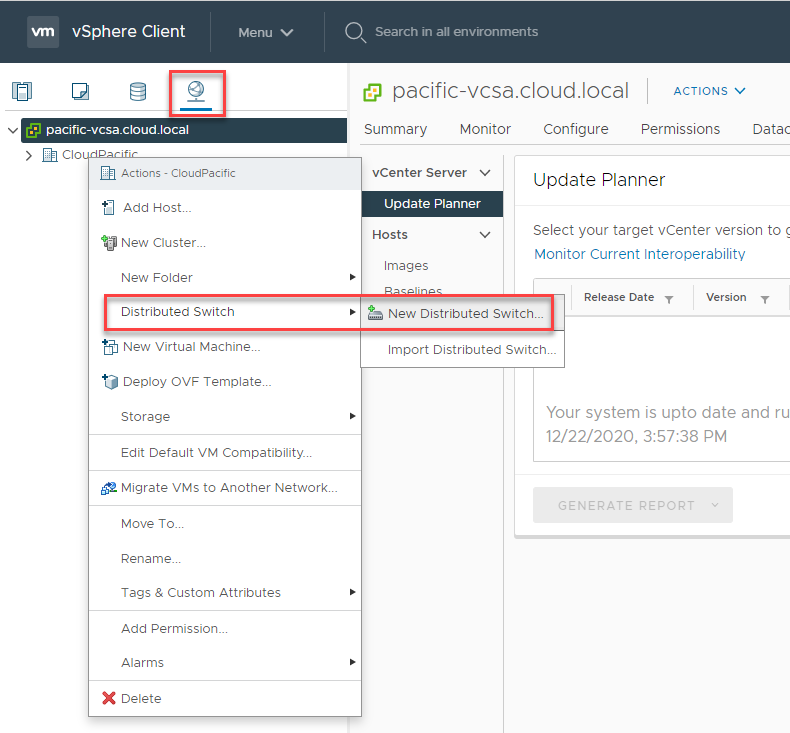

As opposed to creating the vSphere Standard Switch, the vSphere Distributed Switch is provisioned at the vCenter Server level. It is found in the Networking menu of the vSphere Client. Let’s walk through creating a new vSphere Distributed Switch in the vSphere Client. Once you click the networking menu, right-click your data center name. Click Distributed Switch > New Distributed Switch.

Creating a new vSphere Distributed Switch

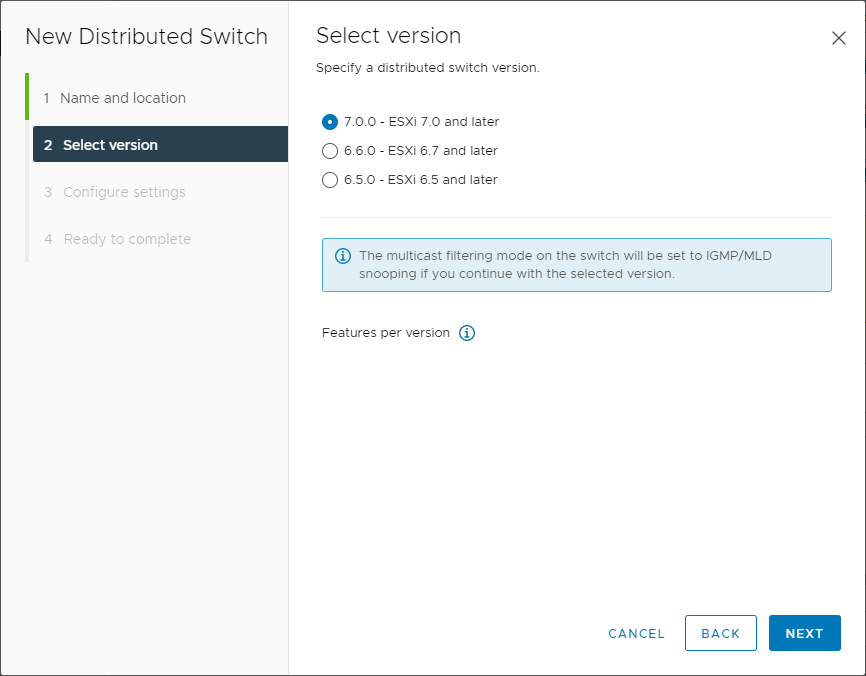

Next, you will select the version of the vSphere Distributed Switch. The version selection is an important detail. You are setting the functionality level and subsequent compatibility with the ESXi host version you can add to the vSphere Distributed Switch. Keep in mind that you cannot downgrade the version once the switch is created (a vDS can only be upgraded to a later version), and you will not be able to add a down-level host to a newer vDS. Additionally, each new version of the vSphere Distributed Switch has new capabilities not found in previous versions.

Below is a quick overview of the features added with each new version of the vDS.

New features and enhancements

- Distributed switch 7.0.0 – NSX Distributed Port Group

- Distributed switch 6.6.0 – MAC Learning

- Distributed switch 6.5.0 – Port Mirroring Enhancements

The vSphere Client UI does a good job of detailing certain things to note in the informational “bubble tips” when a specific version of the vDS is selected. You will note that if you choose version 7.0.0 – ESXi 7.0 and later, you will see the message “The multicast filtering mode on the switch will be set to IGMP/MLD snooping if you continue with the selected version.”

Selecting the version of the vSphere Distributed Switch
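Before picking a version, it can help to check which hosts would be left out. The snippet below is a rough sketch of the "no down-level hosts" rule described above, assuming it reduces to a simple numeric comparison between the vDS version and each host's ESXi version. The inventory and the helper names are hypothetical; this is not VMware tooling.

```python
# Illustrative check only: version strings mirror those shown in the wizard, but the
# helpers and the inventory below are hypothetical.

def parse_version(text):
    """Turn '6.5.0' into (6, 5, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def hosts_too_old(vds_version, host_versions):
    """Return the hosts whose ESXi version is lower than the chosen vDS version."""
    required = parse_version(vds_version)
    return sorted(
        host for host, version in host_versions.items()
        if parse_version(version) < required
    )


inventory = {"esxi01": "7.0.1", "esxi02": "6.7.0", "esxi03": "6.5.0"}

print(hosts_too_old("7.0.0", inventory))  # ['esxi02', 'esxi03']
print(hosts_too_old("6.5.0", inventory))  # []
```

In a mixed cluster like this one, the oldest host effectively sets the highest vDS version you can choose if every host must join the switch.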

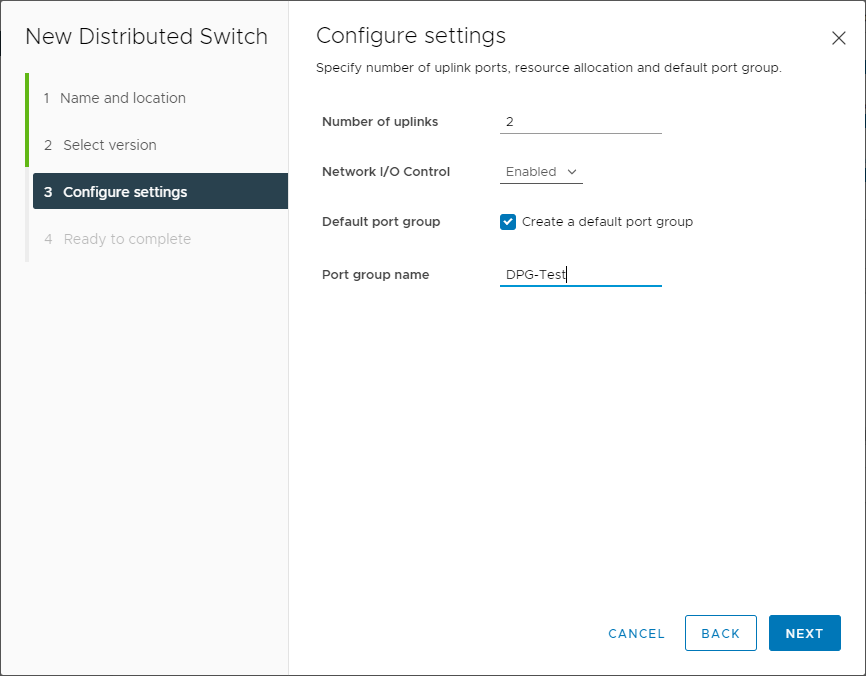

The Configure settings screen lets you set the number of uplinks for the vDS, enable or disable Network I/O Control (enabled by default), and create and name the first distributed port group.

Configuring the settings of the vSphere Distributed Switch during creation

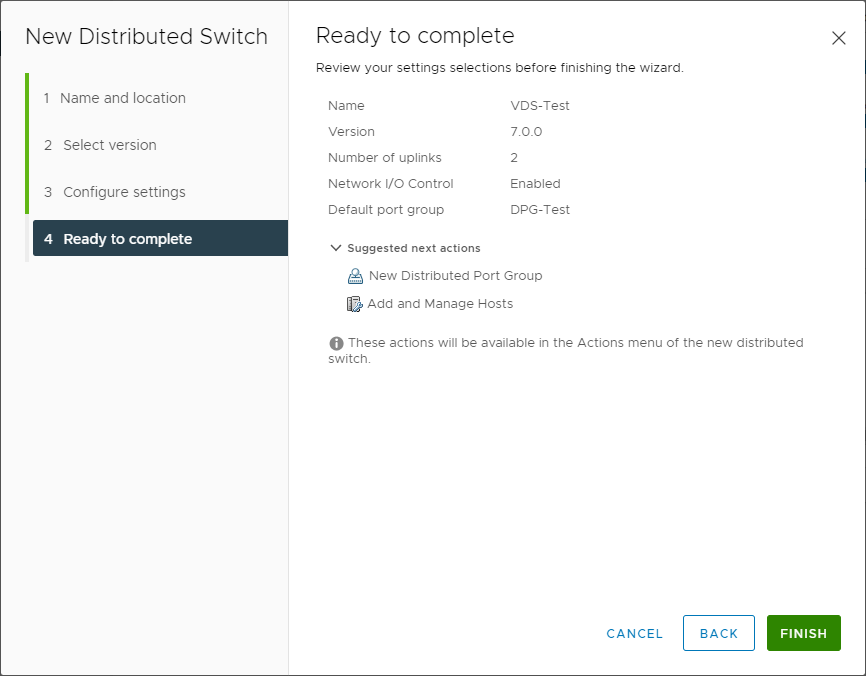

On the Ready to complete screen, review the summary of information presented. Click Finish to create the new vSphere Distributed Switch.

Finishing the creation of the new vSphere Distributed Switch

Creating a vSphere Distributed Switch Port Group

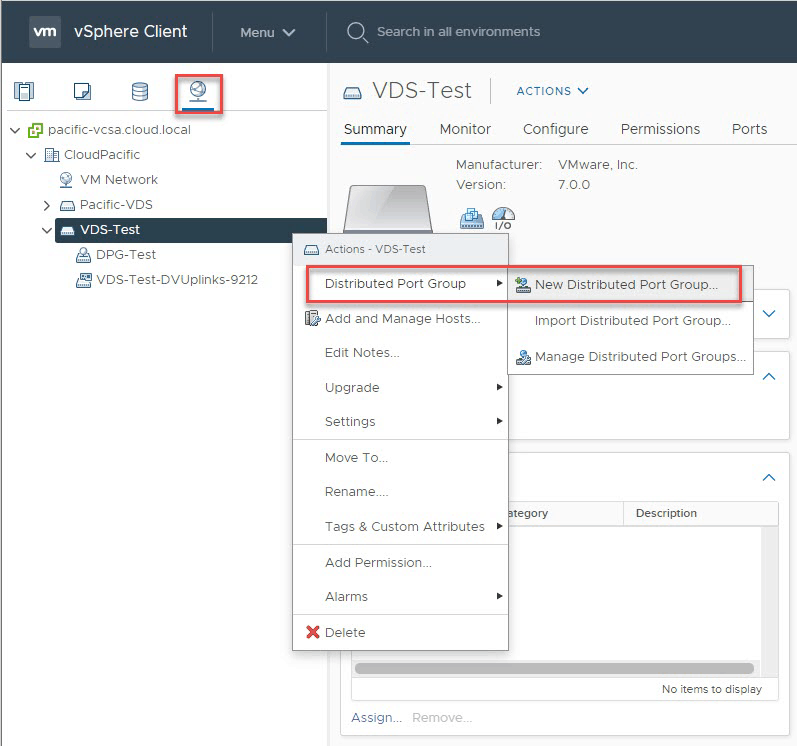

After you have created your vSphere Distributed Switch, you will most likely want to add additional port groups to your vDS. It is an easy process using the vSphere Client on the Networking tab. Right-click on your vSphere Distributed Switch and select Distributed Port Group > New Distributed Port Group.

Creating a new Distributed Port Group

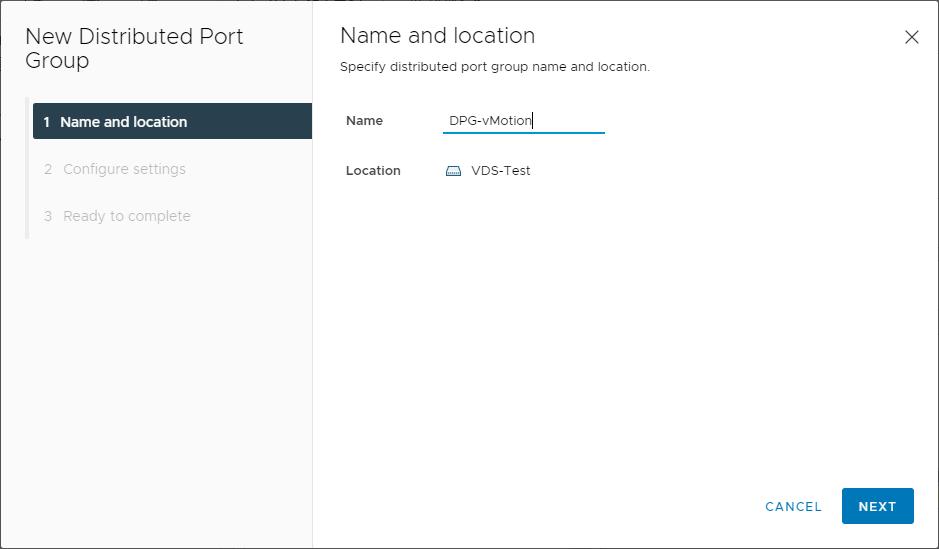

Select a name for the new Distributed Port Group. Here, we are creating a new port group for vMotion traffic.

Naming the new Distributed Port Group

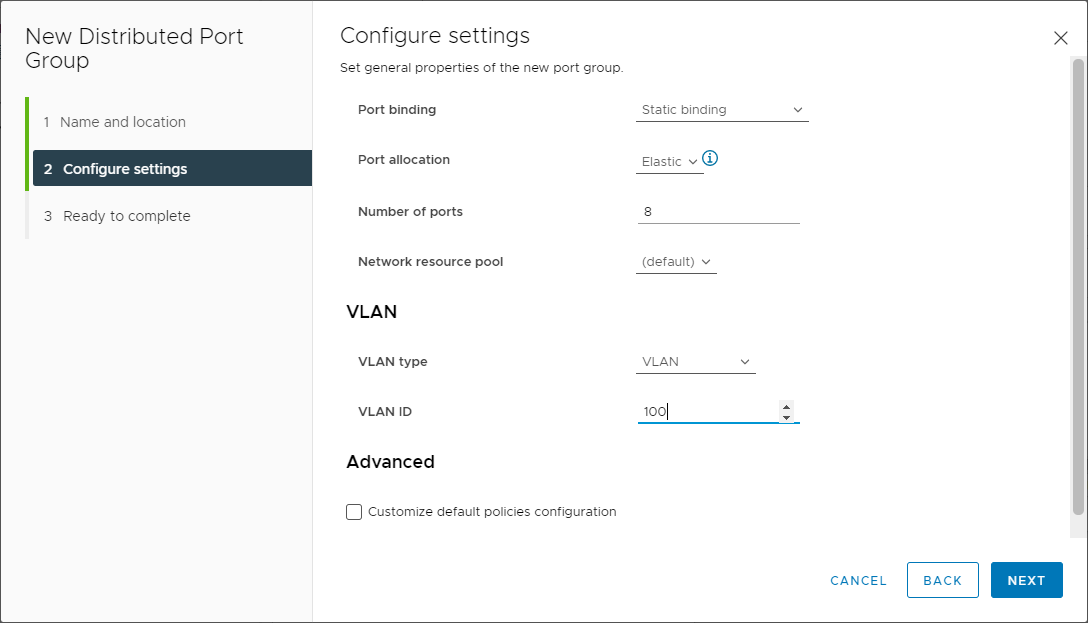

On the configure settings screen, you can configure the port binding, port allocation, number of ports, network resource pool, VLAN type, and VLAN ID. If you select the Customize default policies configuration check box, you will be able to define custom policies during the initial creation of the port group for security, traffic shaping, teaming and failover, monitoring, and others. Keep in mind that if you do not select the check box, you can still customize these settings later.

Configuring settings for the new Distributed Port Group

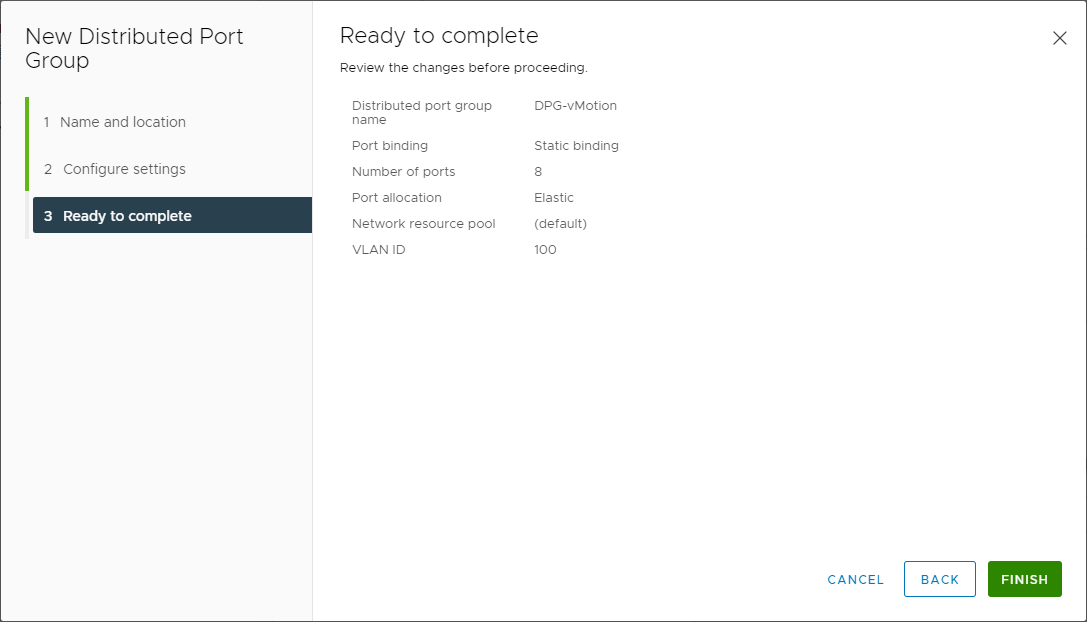

The new Distributed Port Group wizard is ready to complete.

Finishing the creation of the new Distributed Port Group

Adding ESXi hosts to the vSphere Distributed Switch

One of the fundamental differences in the vSphere Distributed Switch vs. the vSphere Standard Switch is where the management plane resides. Keep in mind that merely creating a vSphere Distributed Switch does not automatically add it to your ESXi hosts. You must add the ESXi hosts to the new vSphere Distributed Switch to place the data plane on each host. Now that we have created a new vSphere Distributed Switch, we can add it to the ESXi hosts.

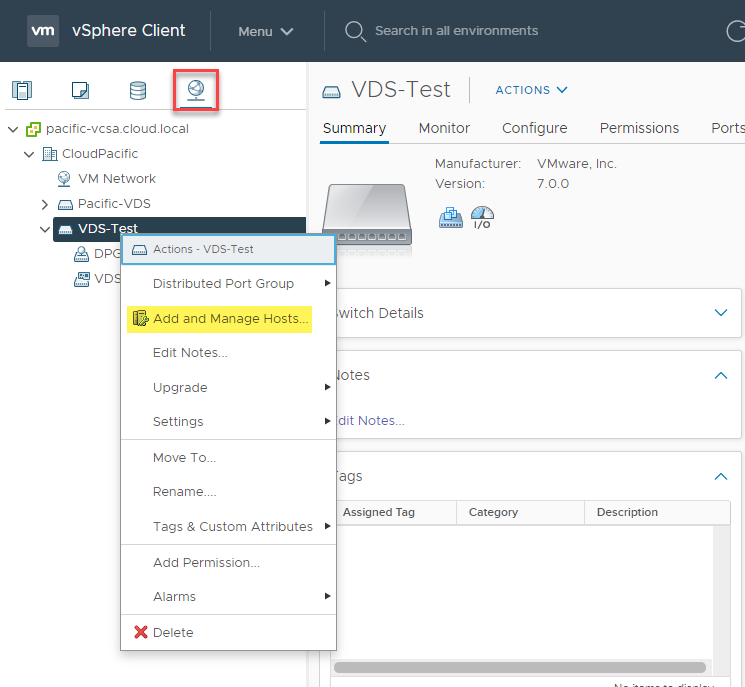

To add the vDS to your ESXi hosts, visit the networking tab, right-click the vDS that you want to add to your ESXi hosts, and select Add and Manage Hosts.

Adding an ESXi host to your vSphere Distributed Switch

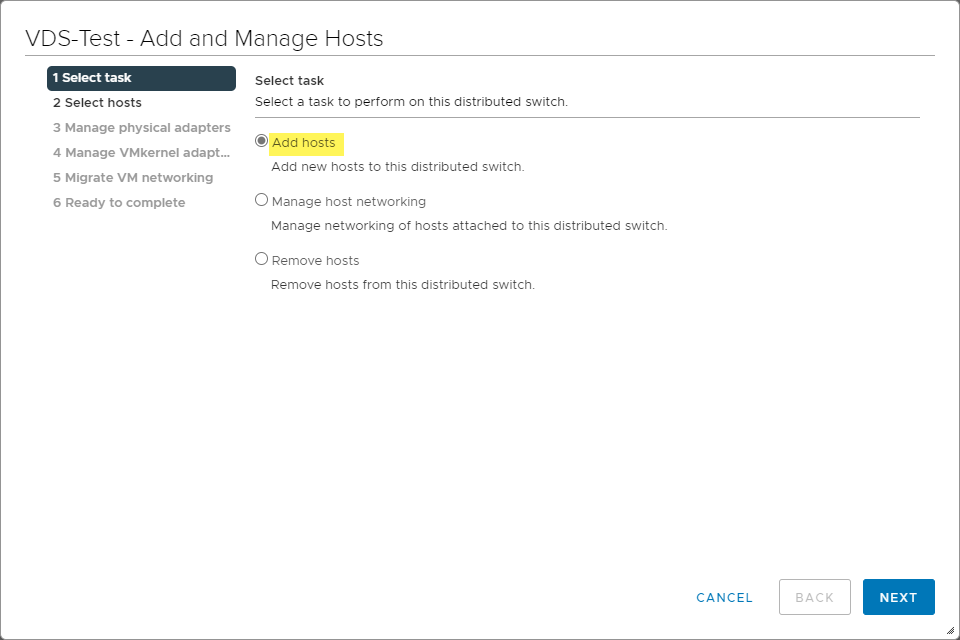

Select Add hosts and click Next.

Adding hosts on the Add and Manage Hosts screen

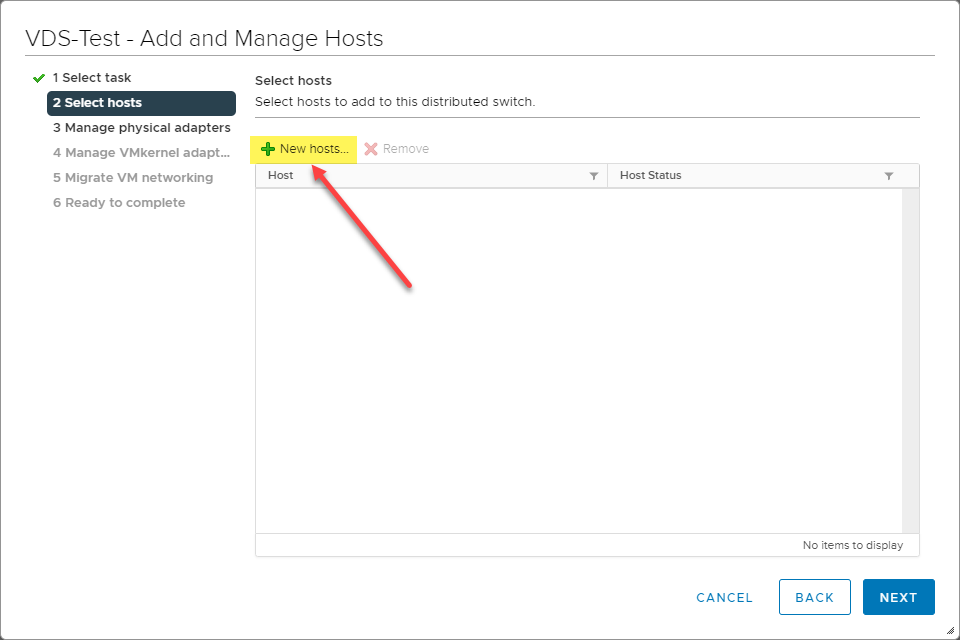

On the Select hosts screen, click the New hosts button and select Next.

Click the button to add your ESXi hosts

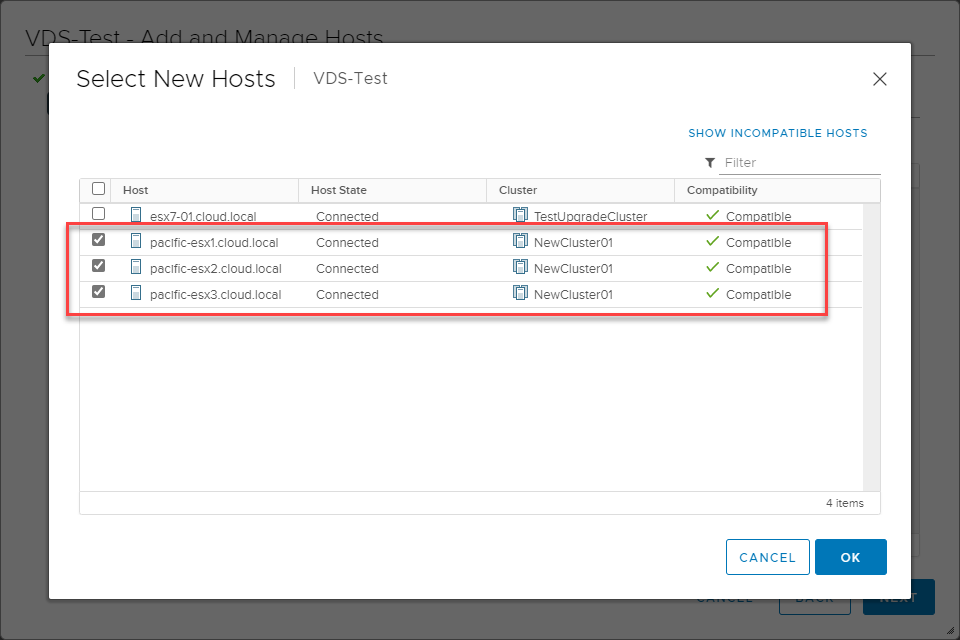

Next, select the hosts you want to add to the new vSphere Distributed Switch. You can select multiple hosts as shown. Click OK once you have the hosts you want to configure selected.

Selecting the ESXi hosts to add to the new vSphere Distributed Switch

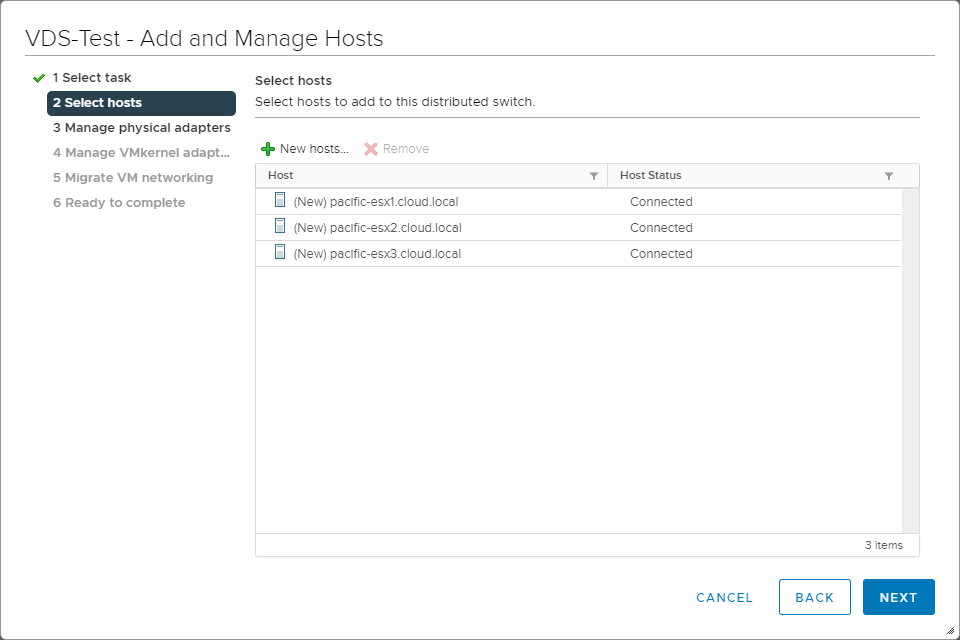

You will see the hosts added to the wizard. Notice the (New) prefix listed for the hosts.

New hosts selected to add to the vSphere Distributed Switch

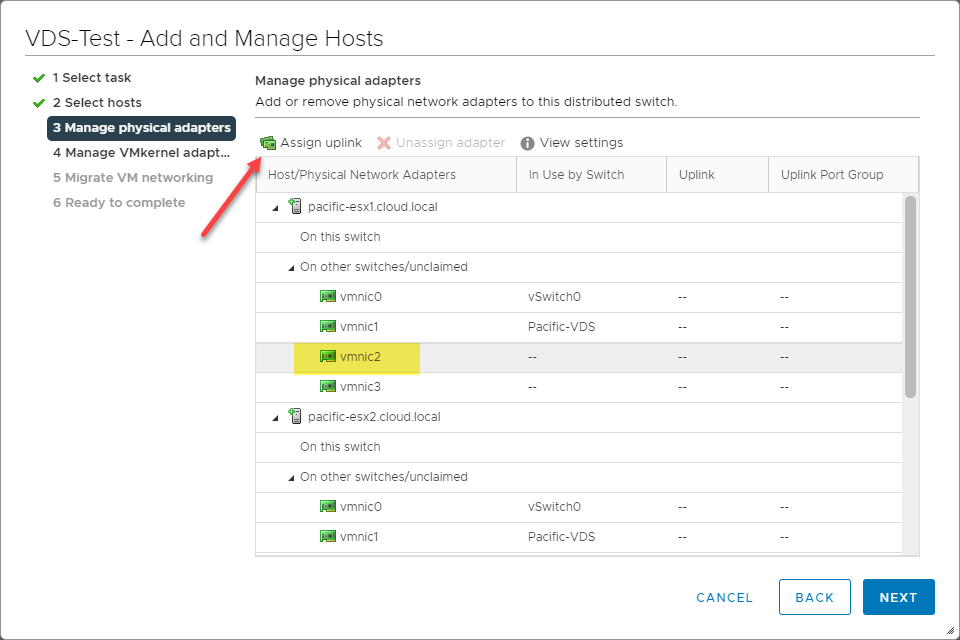

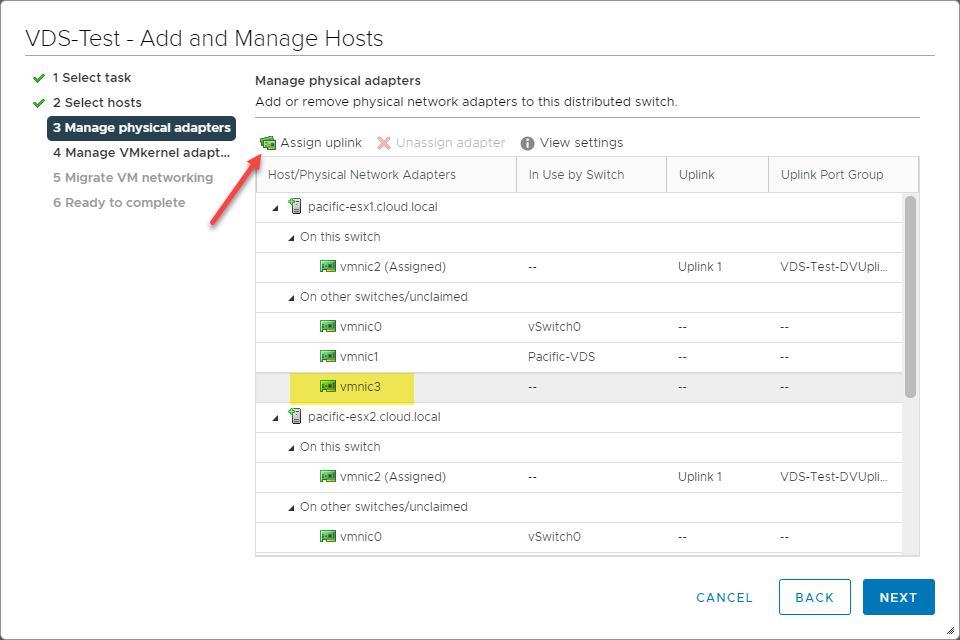

The next step in the wizard is to Manage physical adapters. In this step, you assign the physical adapters to the Uplink port group. Select the first adapter you want to assign to the uplink port group. Below, the vmnic2 adapter will be assigned to the first uplink.

Assigning the first adapter to the uplink port group

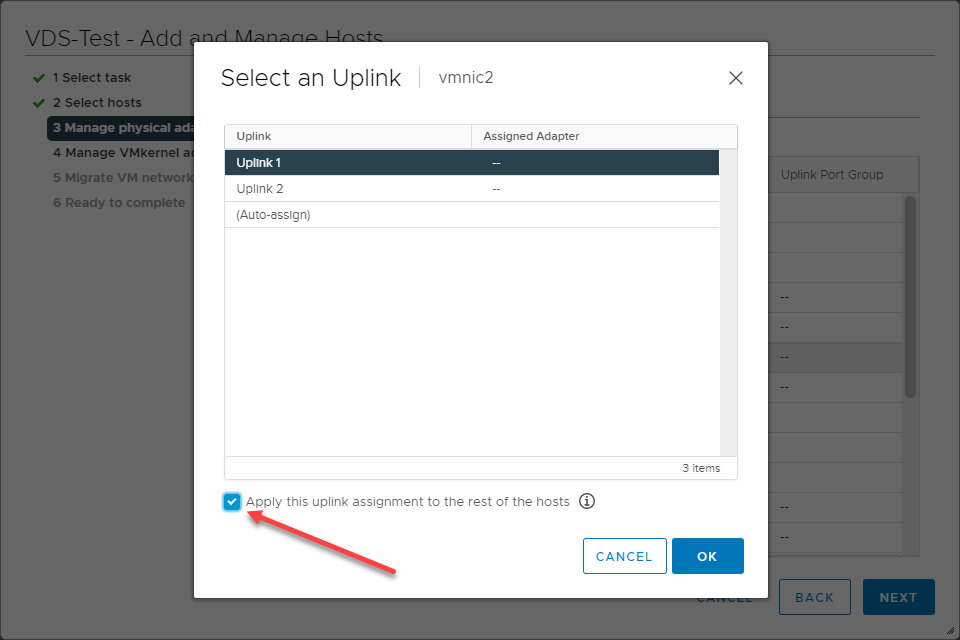

On the Select an uplink screen, select the uplink to which you want to assign the adapter. Notice the Apply this uplink assignment to the rest of the hosts checkbox. This is a huge time saver as it will apply your selections to all the other hosts you are adding to the vSphere Distributed Switch.

Assigning the first adapter to the first uplink

Next, we will assign the second adapter to the second uplink. Below, this is vmnic3.

Choose the second adapter to assign in the uplink port group

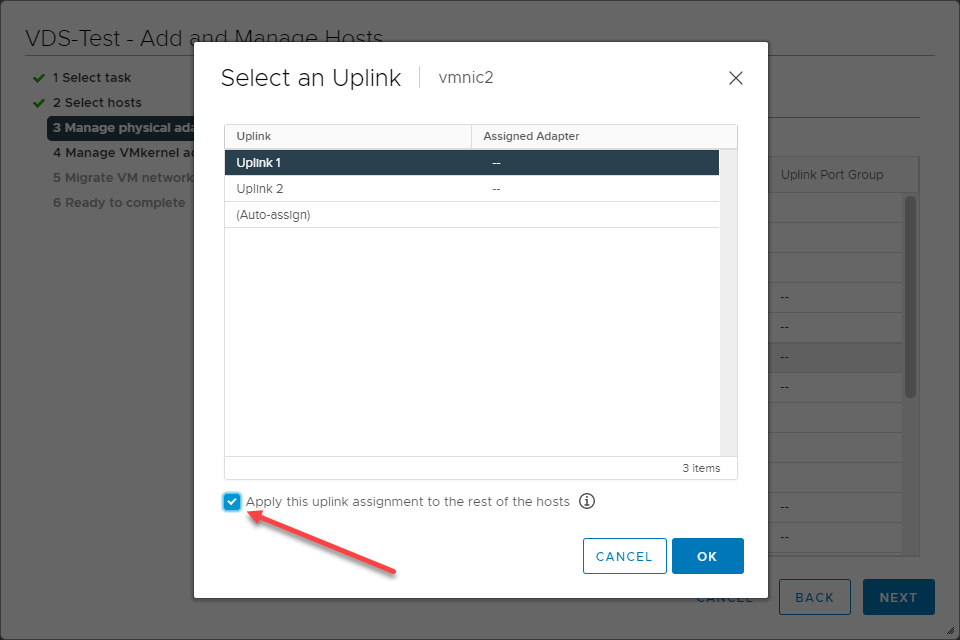

Again, using the Select an Uplink dialog box, assign the adapter to the second uplink. Also, select the Apply this uplink assignment to the rest of the hosts checkbox.

Assigning the second adapter to the second uplink

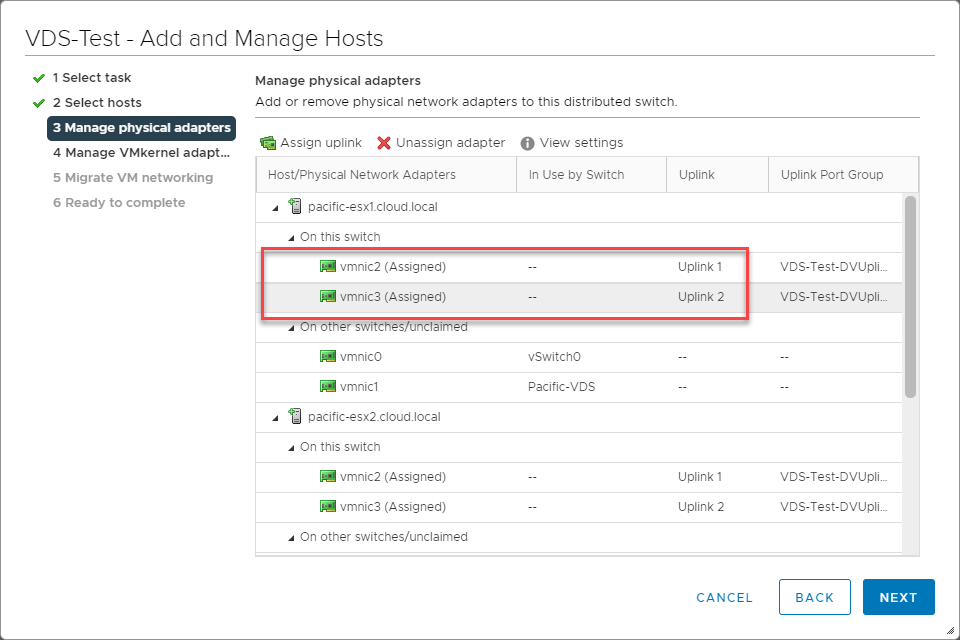

Now, you will see the adapters have been assigned for all hosts chosen to add to the vSphere Distributed Switch.

Adapters have been assigned uplinks for all hosts

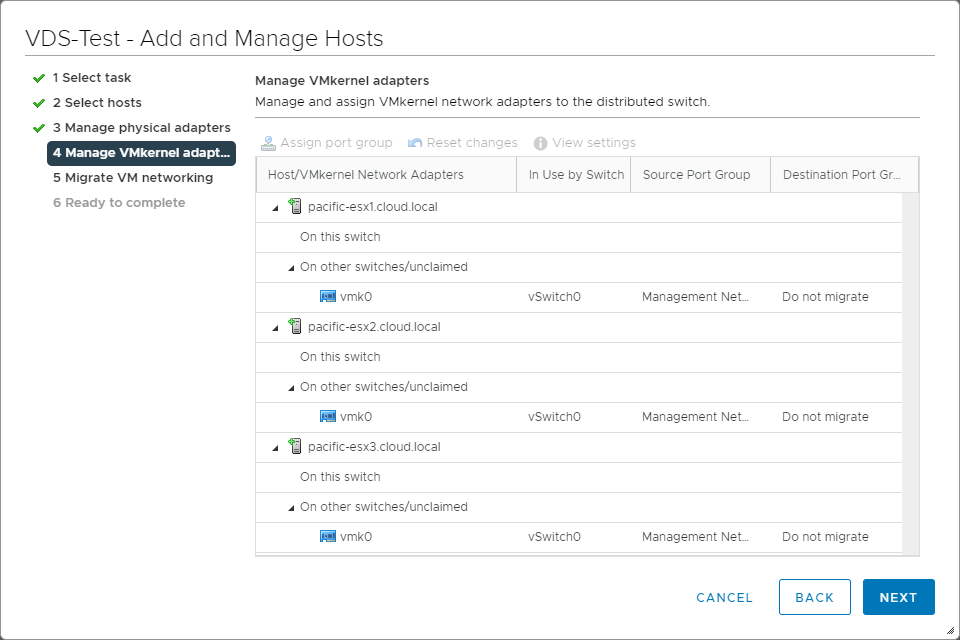

If you want to migrate existing VMkernel adapters to the new vDS, you can do this on the Manage VMkernel adapters screen.

Managing VMkernel adapters

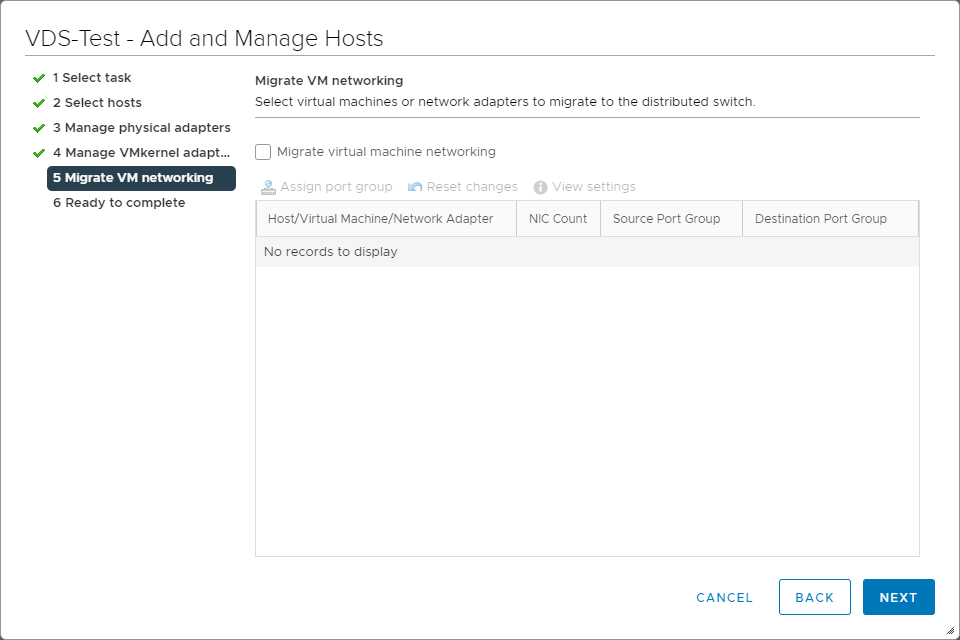

You can also migrate VM networking if you have VMs that you would like to migrate to the new vDS.

Migrate VM networking

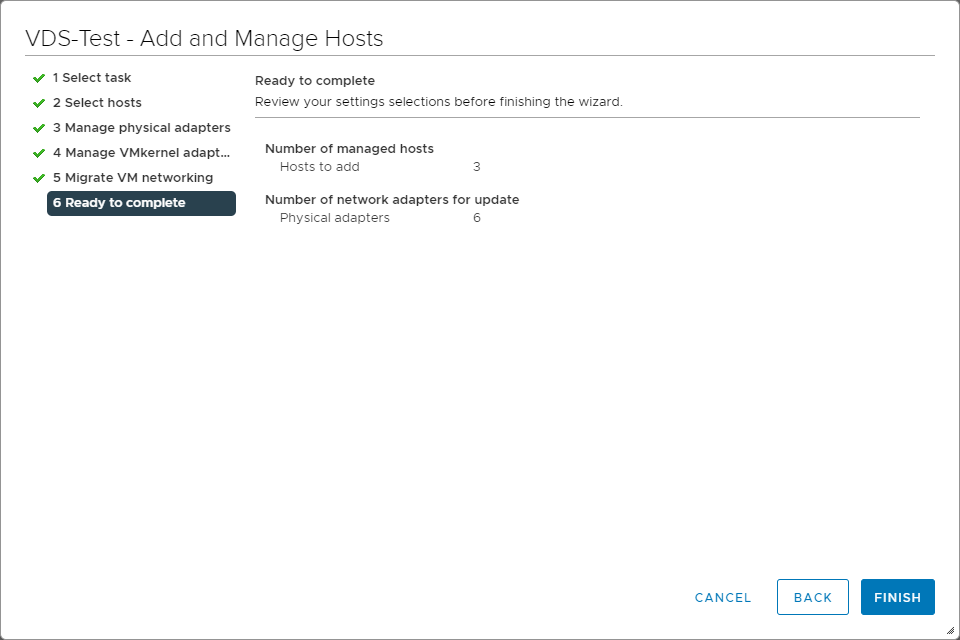

Finally, we are ready to complete adding the ESXi hosts to the new vSphere Distributed Switch. Click Finish.

Completing adding your ESXi hosts to the new vSphere Distributed Switch

Adding VMkernel adapters

VMkernel adapters are special adapters that carry specialized vSphere traffic such as vMotion, vSAN, and Fault Tolerance. Each VMkernel adapter is assigned an IP address. Now that we have added the ESXi hosts to the new vSphere Distributed Switch, we can add a VMkernel adapter to the vSphere Distributed Switch Port Group.

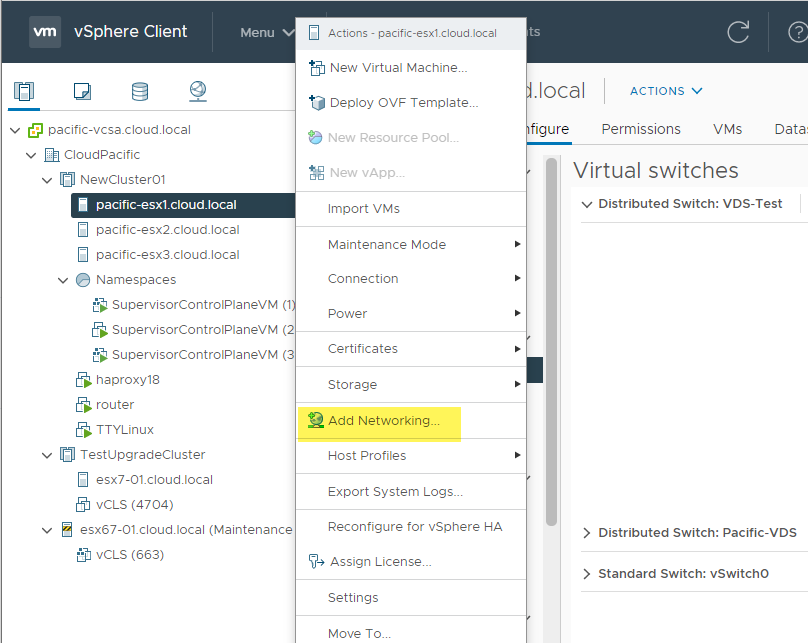

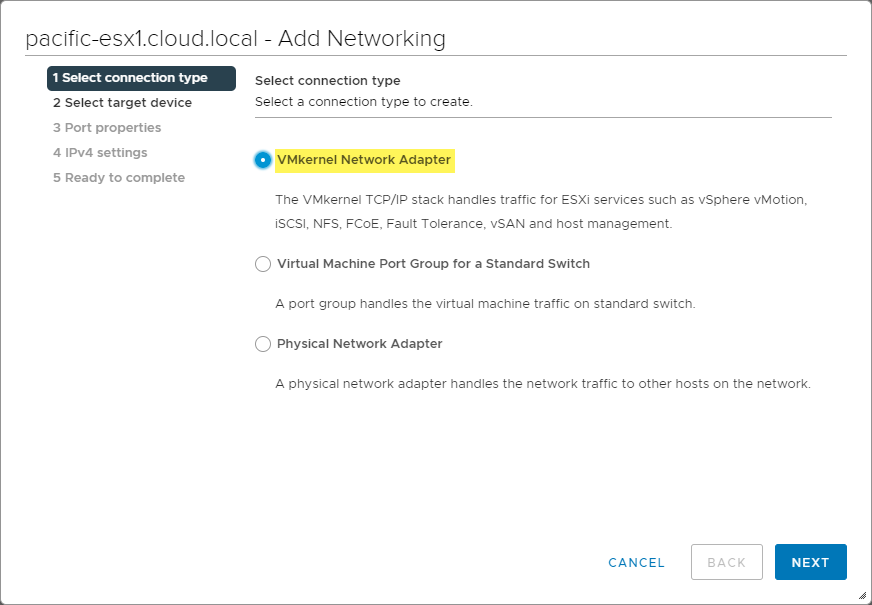

If you noticed earlier, the port group added was DPG-vMotion. So, we will use this new vDS port group to carry vMotion traffic. To do that, we need to add a VMkernel network adapter for this purpose and designate it for vMotion. To add the VMkernel network adapter, right-click on your ESXi host, and select Add networking.

Add networking for adding a VMkernel network adapter

On the Select connection type screen of the Add Networking wizard, select VMkernel Network Adapter. As shown, the VMkernel TCP/IP stack handles traffic for ESXi services such as vSphere vMotion, iSCSI, NFS, FCoE, Fault Tolerance, vSAN, and host management.

Adding a new VMkernel Network Adapter

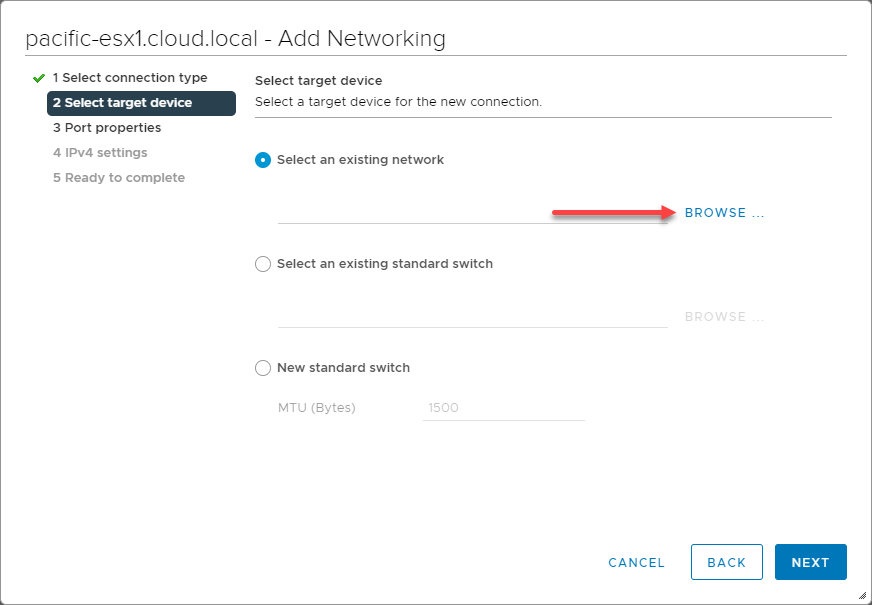

Next, select a target device. On this screen, you choose which vSwitch you will use to add the VMkernel Network Adapter. We want to choose the vSphere Distributed Switch port group created for vMotion. Select Browse.

Browse to select an existing network

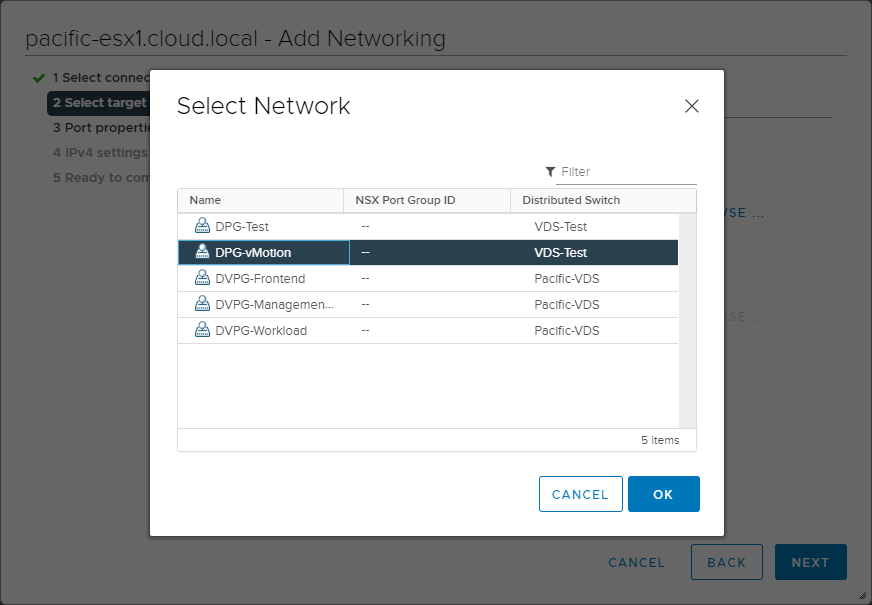

Select the network to use for vMotion. Below, the DPG-vMotion port group is selected. Notice on this screen, you are selecting port groups, not the vDS itself. The wizard shows the vDS association as well.

Select the port group to use for the VMkernel Network Adapter

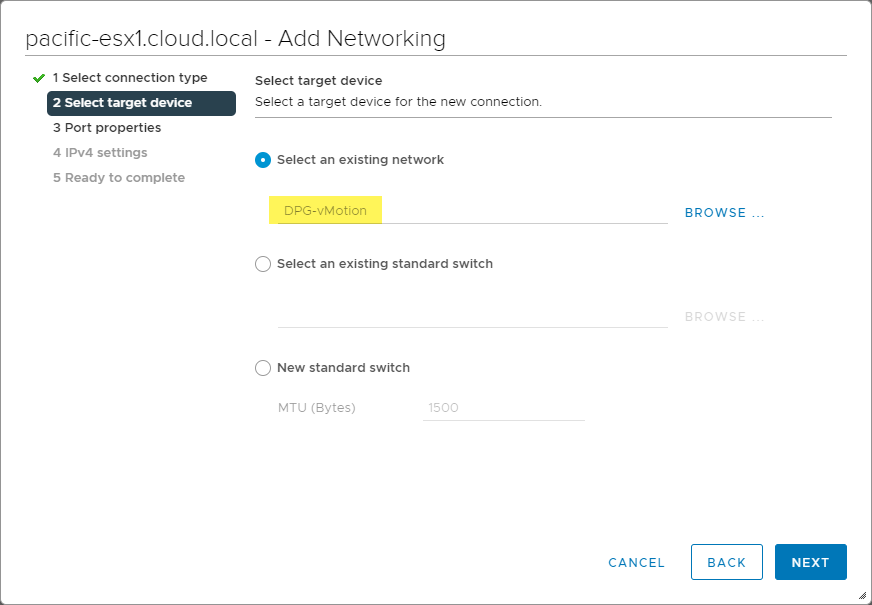

The Distributed Port Group is selected. Click Next.

The target device for the VMkernel Network Adapter is selected

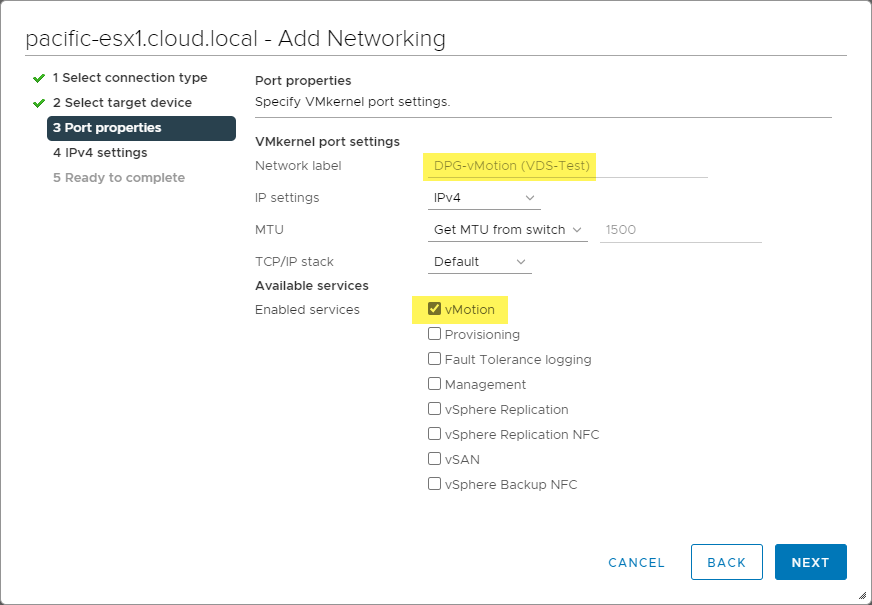

Now, configure the port properties of the VMkernel Network Adapter. You configure the VMkernel port settings, including the Available services associated with the VMkernel Network Adapter.

Configuring the port properties for the VMkernel Network Adapter

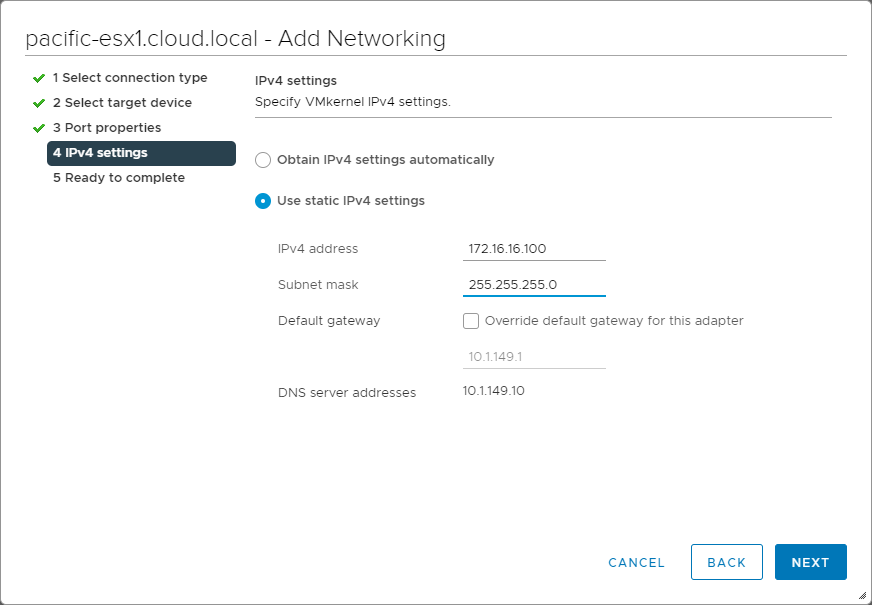

You configure the VMkernel Network Adapter with an IP address. On the IPv4 settings screen, choose how you want to provision the VMkernel Network Adapter’s IP address. A static IP is used below for the VMkernel network adapter.

Configuring the IP address settings for the VMkernel Network Adapter
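When static addresses are entered by hand on several hosts, a quick sanity check of the address plan can catch typos before they reach the wizard. The sketch below uses Python's standard `ipaddress` module. It assumes that all vMotion VMkernel adapters sit in a single subnet, which this walkthrough does not state, and the subnet, addresses, and `check_vmk_addresses` helper are invented lab values.

```python
# Sketch only: the subnet and addresses are invented lab values, and the single-subnet
# layout is an assumption rather than a vSphere requirement.
import ipaddress


def check_vmk_addresses(subnet, addresses):
    """Return a list of problems found in a per-host static IP plan."""
    network = ipaddress.ip_network(subnet)
    problems = []
    seen = {}
    for host, text in addresses.items():
        ip = ipaddress.ip_address(text)
        if ip not in network:
            problems.append(f"{host}: {ip} is outside {network}")
        if ip in (network.network_address, network.broadcast_address):
            problems.append(f"{host}: {ip} is not a usable host address")
        if ip in seen:
            problems.append(f"{host}: {ip} is already used by {seen[ip]}")
        else:
            seen[ip] = host
    return problems


plan = {
    "esxi01": "10.1.150.11",
    "esxi02": "10.1.150.12",
    "esxi03": "10.1.150.12",   # duplicate of esxi02
    "esxi04": "10.1.151.14",   # typo in the third octet
}

for problem in check_vmk_addresses("10.1.150.0/24", plan):
    print(problem)
```

With this plan, the check reports the duplicate on esxi03 and the out-of-subnet address on esxi04, which are the two mistakes most likely to show up later as failed vMotion operations.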

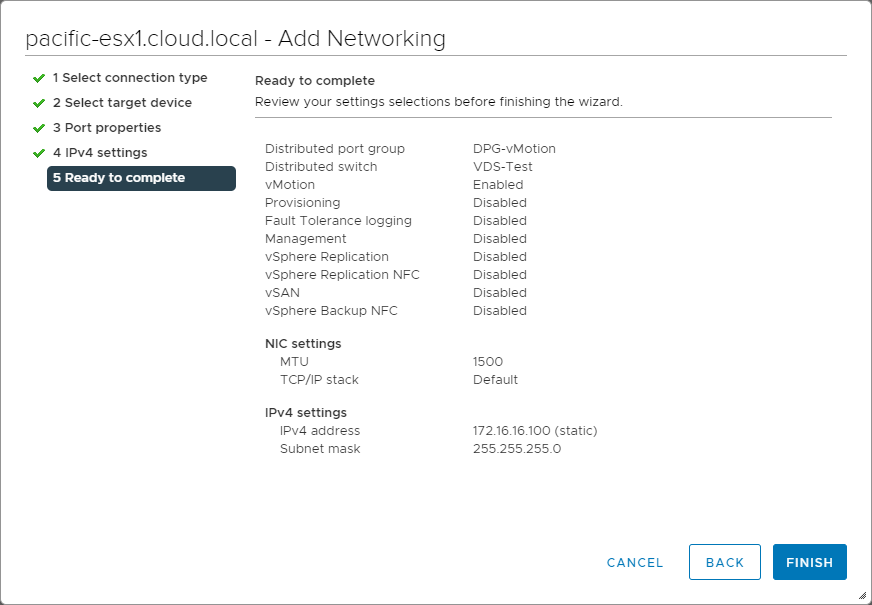

Finally, we are ready to complete the configuration of the VMkernel Network Adapter. Review the summary screen to ensure the configuration is correct and click Finish.

Finalizing the configuration of the VMkernel Network Adapter

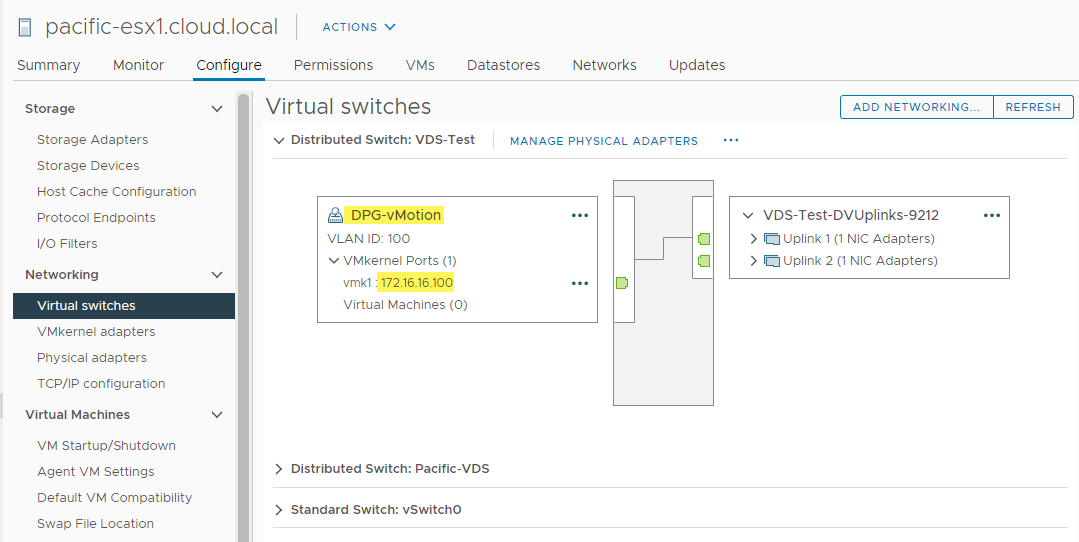

After adding the VMkernel Network Adapter, you can see the new VMkernel adapter and address under the DPG-vMotion port group.

Verifying the VMkernel Network Adapter

Migrating to vSphere Distributed Switch (vDS)

In most environments, the ESXi host’s initial configuration consists of vSphere Standard Switches that carry all traffic, including management, vMotion, vSAN, iSCSI, etc. For environments configured initially with the default vSphere Standard Switch, how do you migrate to the vSphere Distributed Switch? The process is relatively straightforward. However, the VSS-to-vDS migration calls for care and attention to detail.

While VMware has safeguards to prevent losing network connectivity, there is still the possibility of this happening with misconfigured settings or details such as VLAN IDs not being carried over to vDS configurations serving as the target of the VSS migration.

Keep in mind that if performed correctly, the VSS to vDS migration does not require a maintenance period, and there should not be any disruption of network traffic. However, some choose to establish a maintenance period for migrating production hosts from VSS to vDS to account for unforeseen issues.

When migrating from the vSphere Standard Switch, there are a few things to consider:

- What VMkernel Network Adapters currently exist on vSphere Standard Switches?

- Which ESXi host network adapters are backing the VSS?

- Will you use the same physical ESXi adapters for the vDS migration or different?

- If different physical adapters back the vDS, have the physical switch ports been tagged with appropriate VLANs backing the new adapters?

- What virtual machines connect to vSphere Standard Switches?

- If migrating to vDS, have vDS port groups with corresponding VLAN tags been created?
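The last question in the list lends itself to a quick pre-flight check. The following sketch pairs each VSS port group with a vDS port group that carries the same VLAN ID and reports anything left without a destination. The port group names, VLAN IDs, and the `plan_port_group_mapping` helper are hypothetical examples, not values read from vCenter Server.

```python
# Hypothetical data: port group names and VLAN IDs are examples, not read from vCenter.

def plan_port_group_mapping(vss_port_groups, vds_port_groups):
    """Map each VSS port group to a vDS port group carrying the same VLAN ID."""
    by_vlan = {}
    for name, vlan in vds_port_groups.items():
        by_vlan.setdefault(vlan, name)  # keep the first vDS port group seen per VLAN
    mapping, unmatched = {}, []
    for name, vlan in vss_port_groups.items():
        if vlan in by_vlan:
            mapping[name] = by_vlan[vlan]
        else:
            unmatched.append(name)
    return mapping, unmatched


vss_groups = {"Management Network": 10, "VM Network": 20, "iSCSI-A": 30}
vds_groups = {"DPG-Mgmt": 10, "DPG-Servers": 20, "DPG-vMotion": 150}

mapping, unmatched = plan_port_group_mapping(vss_groups, vds_groups)
print(mapping)
print("No matching vDS port group for:", unmatched)
```

Here the iSCSI port group has no vDS counterpart on VLAN 30, so migrating its VMkernel adapter would have nowhere correct to land until a matching distributed port group is created.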

In order of priority, you want to think about:

- VMkernel network adapter connectivity – You do not want to lose critically important connectivity such as iSCSI, vSAN, or the Management VMkernel port for the ESXi host itself.

- Virtual machine connectivity

Migration best practices

Consider the following when migrating from VSS to vDS:

- While you can migrate multiple hosts when migrating between VSS and vDS using the wizard, it is much safer to migrate a single host. In this way, you can uncover any issues with the vDS configuration or physical network infrastructure.

- Migrate production VMs off the first host to migrate from VSS to vDS. In this way, you will ensure no issues with production workload connectivity.

- Use a simple test VM as a workload to test basic network connectivity. A small Linux appliance like the TTYLinux distribution is a great tool to check VM connectivity.

- Migrate a single physical network adapter to the vDS instead of all adapters at once.

- Once you have a single physical network adapter migrated to the new vDS, migrate the VMkernel management interface.
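The reasoning behind moving one adapter at a time can be illustrated with a small simulation. This is a toy model under stated assumptions: the host has two physical adapters on its standard switch, and an endpoint counts as "stranded" when its switch has no uplink left. The `stranded` and `move` helpers are hypothetical, and the names `vmnic0`, `vmk0`, `vSwitch0`, and `DSwitch` simply follow common ESXi defaults.

```python
# Toy model of one host during migration; the functions here are purely illustrative.

def stranded(switches):
    """List endpoints attached to a switch that currently has no physical uplink."""
    return sorted(
        endpoint
        for switch in switches.values()
        if not switch["uplinks"]
        for endpoint in switch["endpoints"]
    )


def move(switches, kind, item, source, target):
    """Move an uplink or endpoint between switches (kind is 'uplinks' or 'endpoints')."""
    switches[source][kind].remove(item)
    switches[target][kind].add(item)


host = {
    "vSwitch0": {"uplinks": {"vmnic0", "vmnic1"}, "endpoints": {"vmk0", "test-vm"}},
    "DSwitch": {"uplinks": set(), "endpoints": set()},
}

move(host, "uplinks", "vmnic1", "vSwitch0", "DSwitch")     # one adapter first
print(stranded(host))                                       # []
move(host, "endpoints", "vmk0", "vSwitch0", "DSwitch")     # then the management VMkernel
move(host, "endpoints", "test-vm", "vSwitch0", "DSwitch")  # then the test VM
move(host, "uplinks", "vmnic0", "vSwitch0", "DSwitch")     # last adapter
print(stranded(host))                                       # []
```

If both adapters were moved in the first step instead, `stranded` would return `['test-vm', 'vmk0']`, which is the situation the staged order is meant to avoid.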

Migrating from a vSphere Standard Switch (VSS) to vSphere Distributed Switch (vDS)

In the walkthrough below on a specific ESXi host, the management VMkernel Network Adapter resides on a vSphere Standard Switch. Also, there is a single virtual machine connected to the VSS that needs migrating. As a starting point, the ESXi host has already been added to the vSphere Distributed Switch.

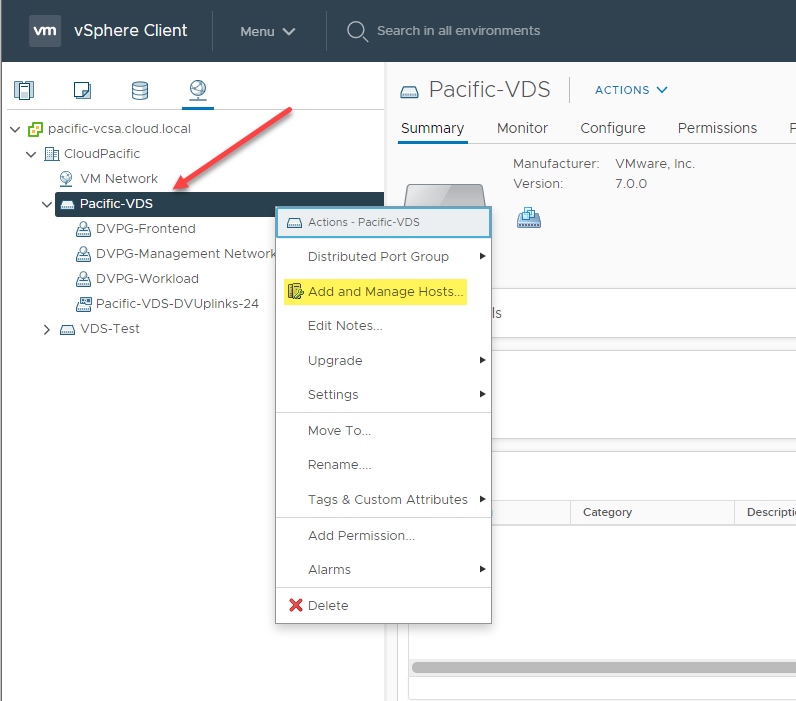

To migrate from the VSS to the vDS, right-click on the destination vSphere Distributed Switch and click Add and Manage Hosts.

Add and Manage Hosts to migrate from VSS to vDS

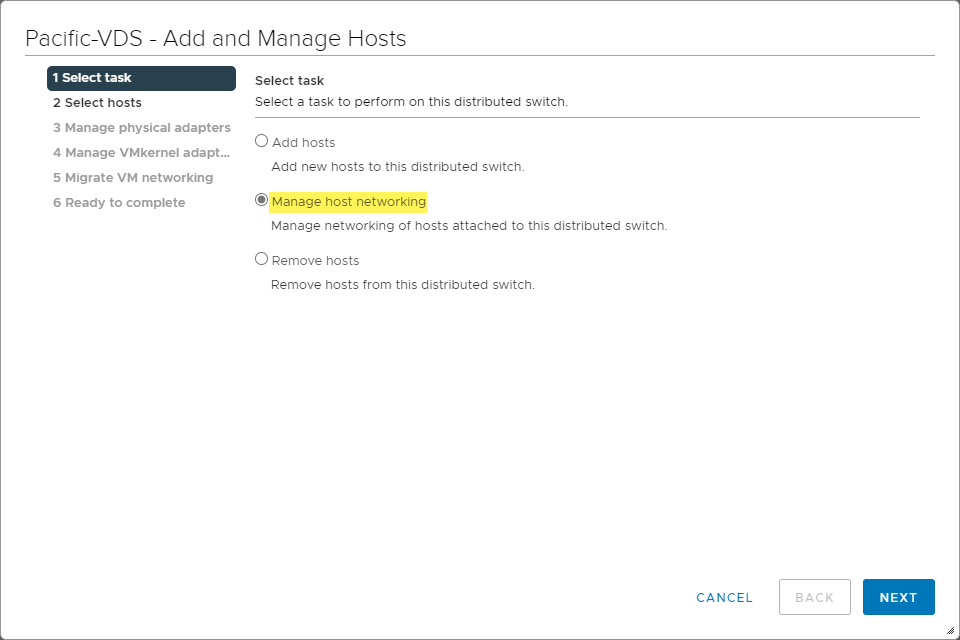

Next, choose Manage host networking.

Choosing to manage host networking

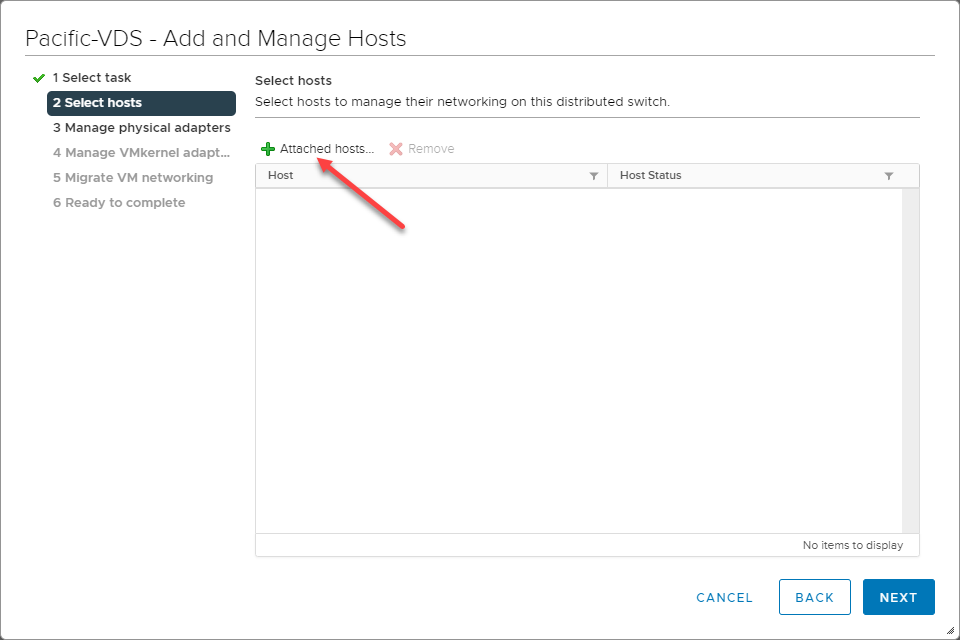

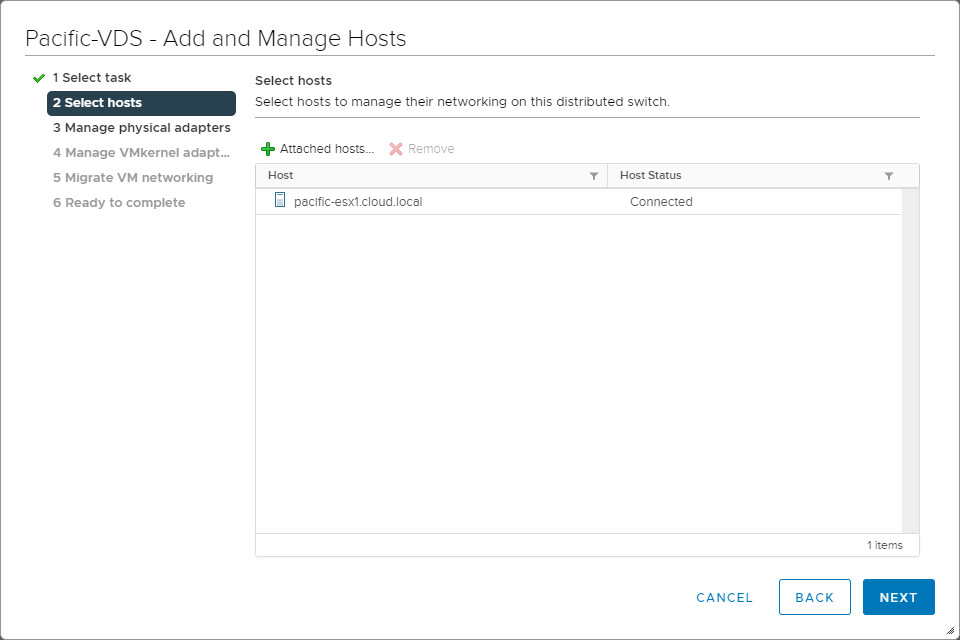

Click the Attached hosts button to choose which ESXi hosts to migrate.

Click the attached hosts button to choose hosts

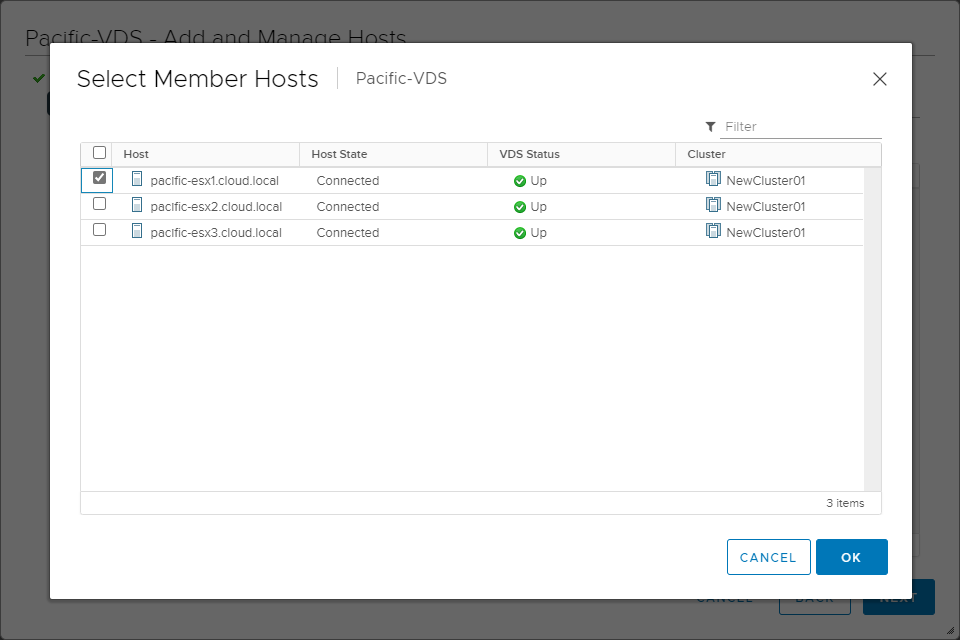

Select a single host or multiple hosts. To be safe, we are going to migrate the networking of a single host.

Choosing a host to migrate from VSS to vDS

The single ESXi host is selected.

Selecting a single ESXi host for migration from VSS to vDS

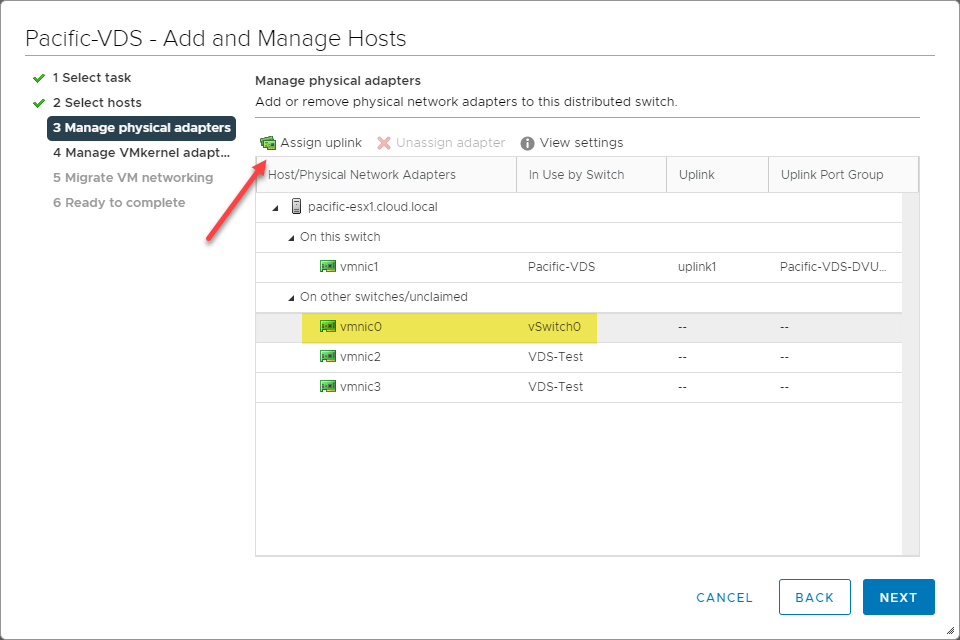

Next, on the Manage physical adapters screen, we are going to migrate the existing adapter backing the VSS to the vDS. Click the adapter and choose Assign uplink.

Assigning an existing VSS adapter to the vDS

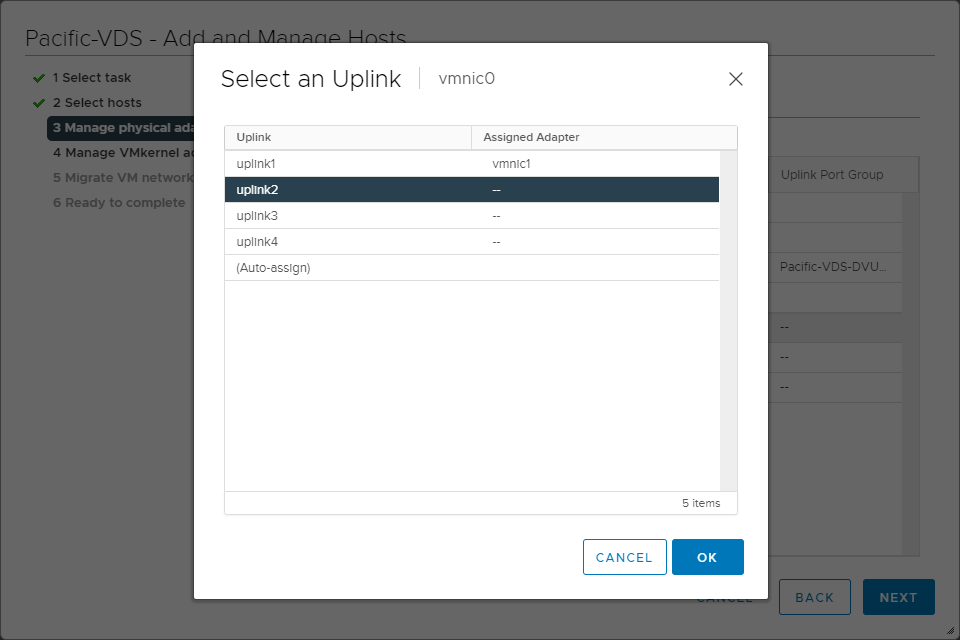

In the Select an Uplink dialog box, select the uplink to which you want to assign the adapter.

Assigning an adapter to an uplink for the vDS

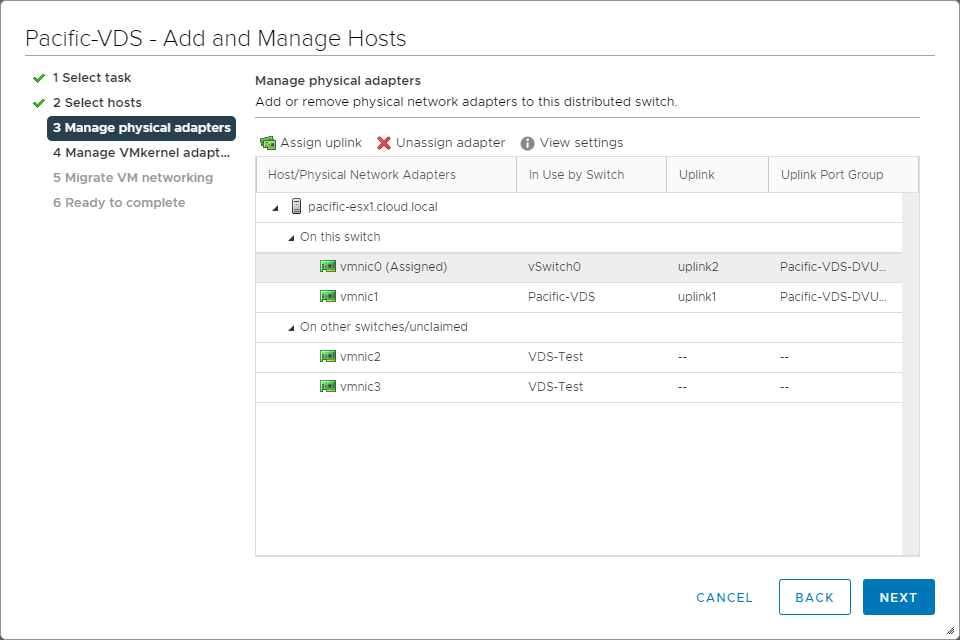

The adapter is now assigned to the vDS uplink port group.

Physical adapter is assigned to the vDS uplink port group

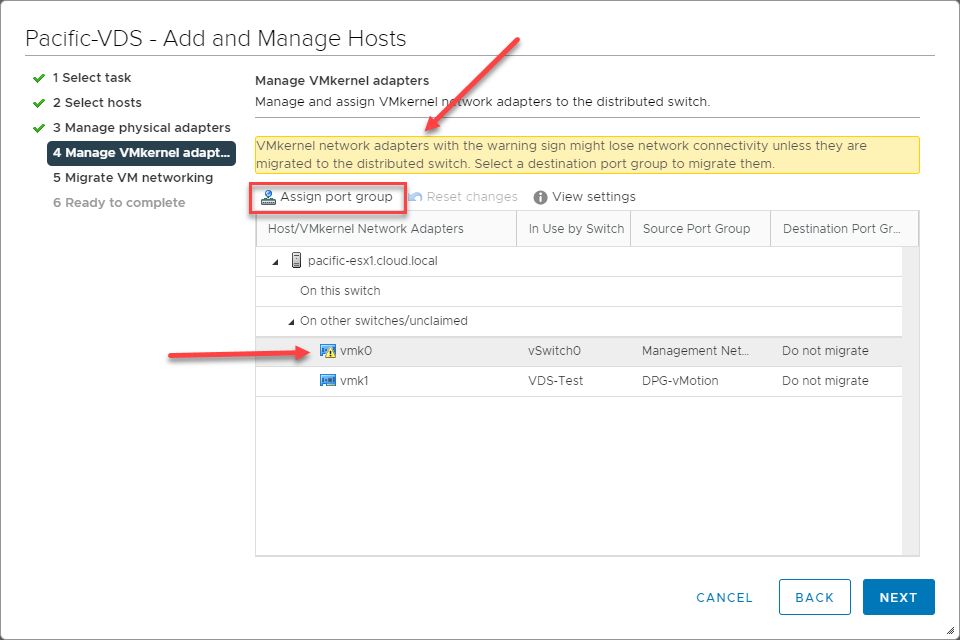

As mentioned earlier, the vSphere Client does a good job of warning you when connectivity issues may arise. The wizard tells us that since the VSS physical adapter is now moved to the vDS, the management network adapter (still residing on the VSS) may lose connectivity. We need to migrate the management VMkernel adapter. Click Assign port group.

Migrating the VMkernel adapter that is carrying management traffic

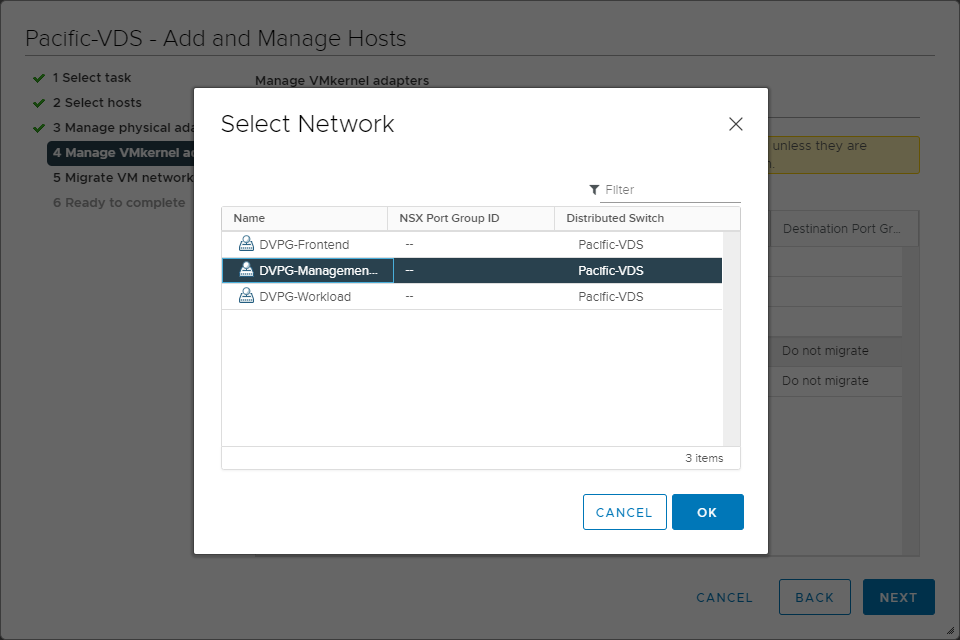

Select the vSphere Distributed Switch Port Group to which you want to reassign the management VMkernel adapter.

Select the destination vDS port group

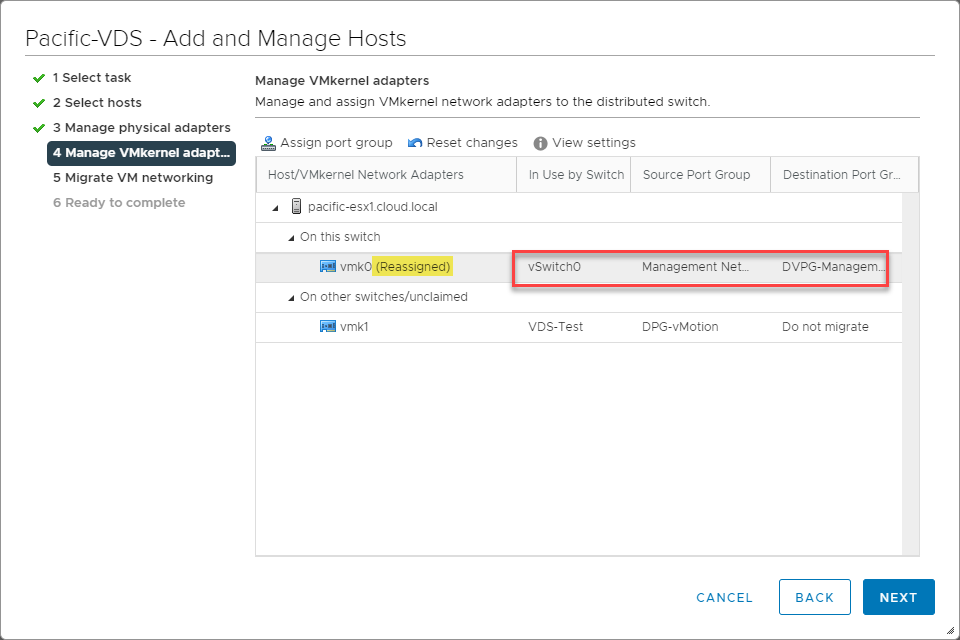

You will note the wizard now shows the vmk0 management VMkernel adapter as Reassigned.

Destination vDS port group chosen for the VMkernel management adapter

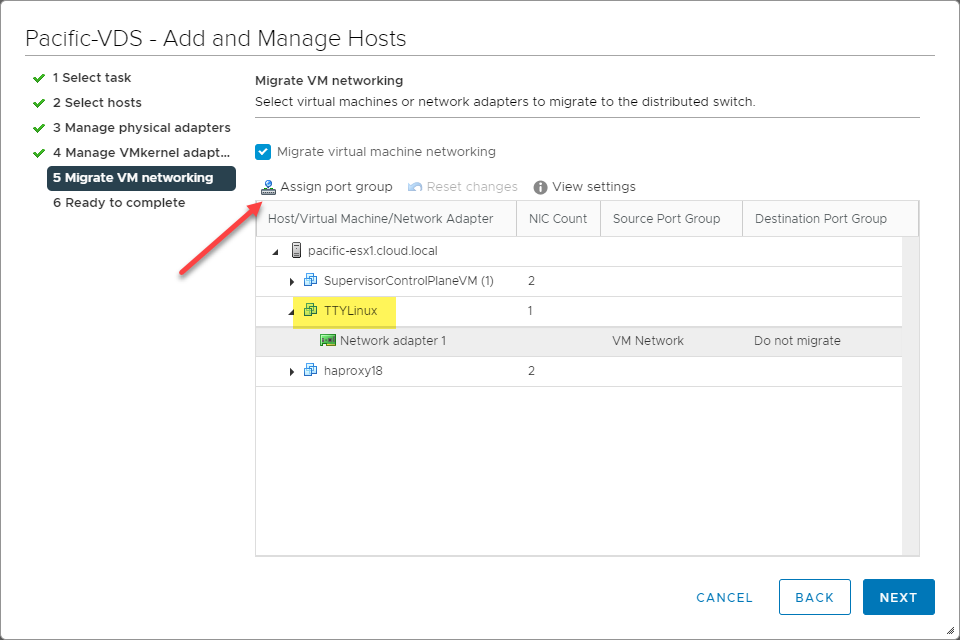

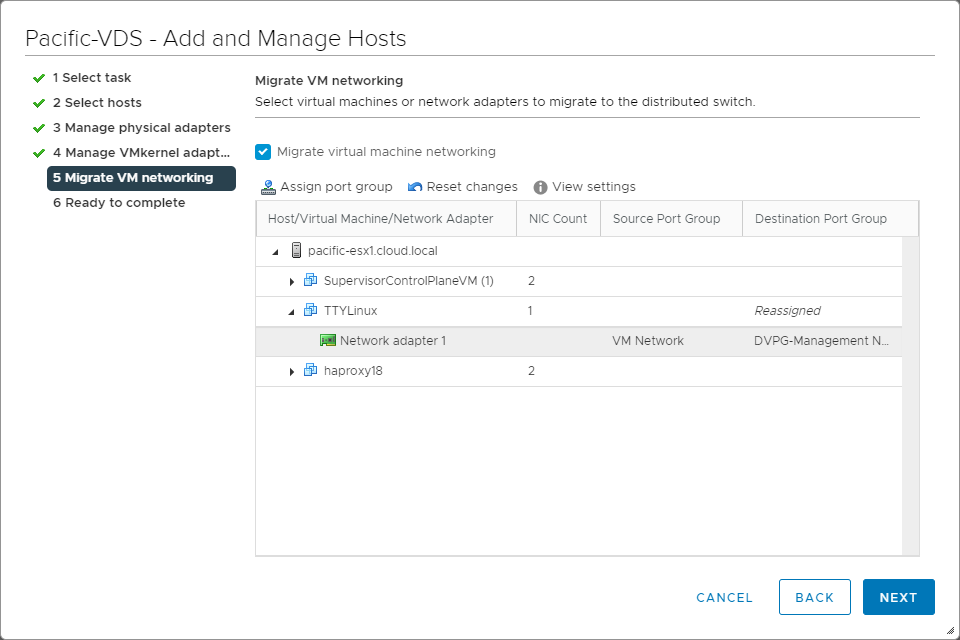

On the Migrate VM networking step, any virtual machines connected to the VSS will lose connectivity since the physical network adapters backing the VSS have been reassigned. Click the Assign port group button to reassign the VM to a different port group on the vDS.

Reassigning a virtual machine to a vDS port group

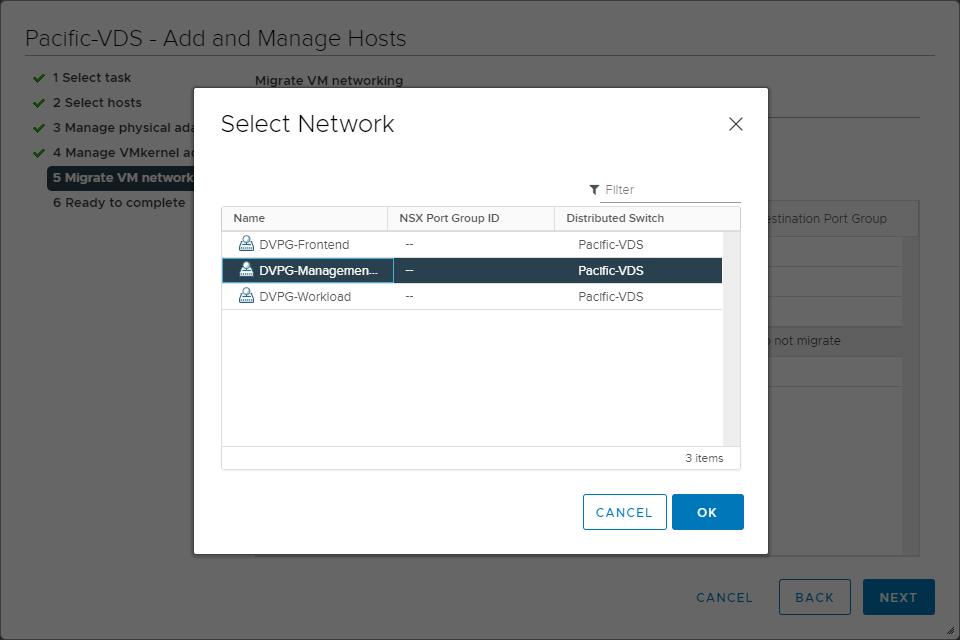

Select the destination vDS port group.

Select the vDS port group for the virtual machines

The destination vDS port group has been chosen and is ready to migrate.

The virtual machine destination port group has been assigned

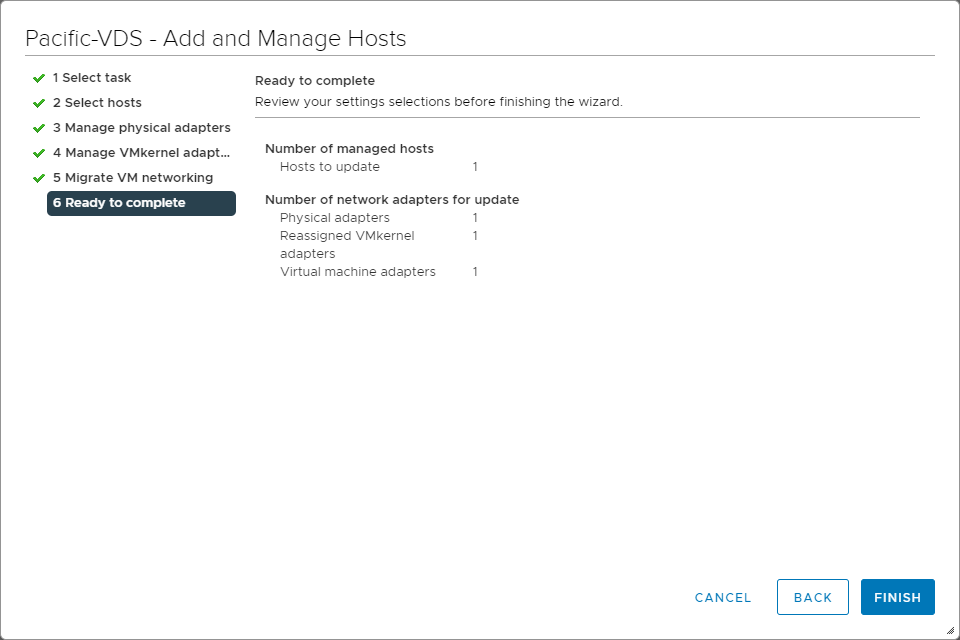

Finally, the VSS to vDS migration is ready to complete.

Completing the migration from VSS to vDS connectivity

After the migration completes, check to ensure you still have connectivity to the ESXi host and the migrated virtual machine.

Conclusion

The vSphere Distributed Switch (vDS) is a powerful virtual networking construct that allows organizations to provision and manage virtual networking at scale in a VMware vSphere environment. The vDS provides many excellent capabilities not included in the VSS. Features such as Network I/O Control are incredibly beneficial when used in conjunction with other VMware solutions such as vSAN.

The vSphere Distributed Switch does require additional licensing, either in the form of vSphere Enterprise Plus or a vSAN license. As shown, provisioning, managing, and migrating to the vSphere Distributed Switch is relatively straightforward and, when planned carefully, can be carried out without any maintenance period or connectivity disruption.
