Table of contents

- What’s new in vSphere 7.0 Update 2

- NVIDIA AI Enterprise software suite

- vSphere With Tanzu – NSX Advanced Load Balancer Essentials

- Security improvements in vSphere 7.0 Update 2

- Performance improvement

- Improvements to vSphere Lifecycle Manager (vLCM)

- Added vSphere HA support for Persistent Memory (PMEM) workloads

- New versions VMware Tools 11.2.5 and virtual hardware version 19

- vSAN 7.0 Update 2

- Wrap up

Since the release of vSphere 7.0 in April 2020, VMware moved vSphere to a shorter six-month release cycle. This faster pace allows the product to stay on track with VMware’s aggressive roadmap and latest innovations with Kubernetes, modern apps, intrinsic security, hybrid cloud as well as other advanced technologies such as AI and machine learning.

While many organizations are wary of new major versions and prefer to wait it out on the previous one, this second (or should I say third?) iteration should put their minds at ease, build confidence and drive upcoming upgrade campaigns forward. In this article, we will run through the biggest changes brought to VMware’s flagship product.

What’s new in vSphere 7.0 Update 2

Once again VMware makes a significant leap forward by delivering a plethora of improvements and new features in vSphere 7.0 Update 2. Among the most important ones we find:

- NVIDIA-backed AI & Developer-Ready Infrastructure

- Enhanced security footprint

- Simplified operations with vLCM

- Performance improvements

- vSAN 7.0 Update 2

NVIDIA AI Enterprise software suite

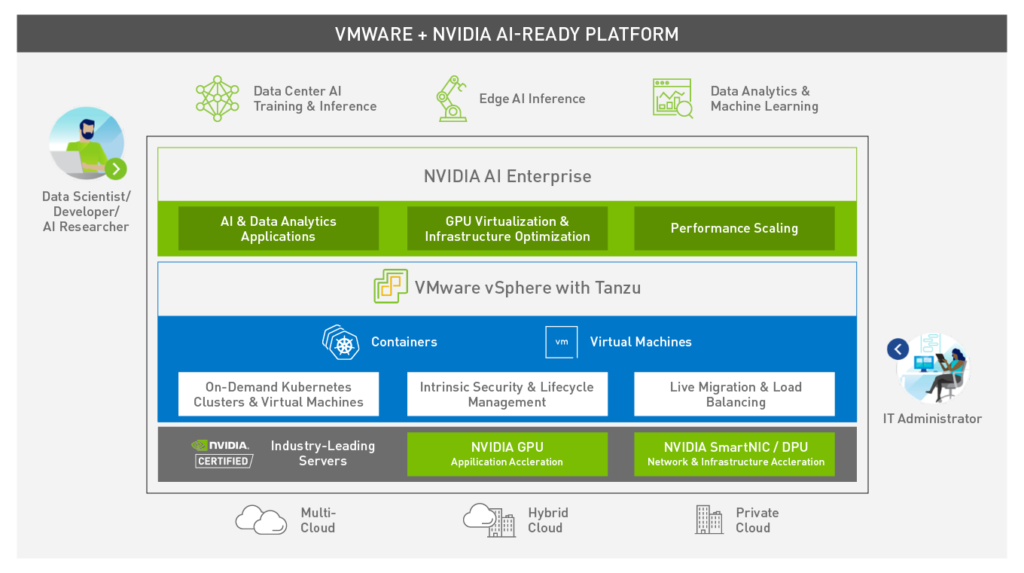

Although it wasn’t announced as part of the vSphere changelog, NVIDIA AI Enterprise was launched jointly with VMware at the same time. First announced during VMworld 2020, it is a cloud-native suite of AI and data analytics software, optimized, certified and supported by NVIDIA to run on VMware vSphere 7.0 Update 2.

The goal of the two technology giants is to facilitate the virtualization of AI workloads and data science in the hybrid cloud through a certified enterprise platform.

AI Enterprise for vSphere can run on NVIDIA-Certified mainstream servers from vendors such as Dell, HPE, Gigabyte, Supermicro and Inspur. This means even cutting-edge AI applications can be managed like any traditional enterprise workload, using infrastructure management tools such as VMware vCenter.

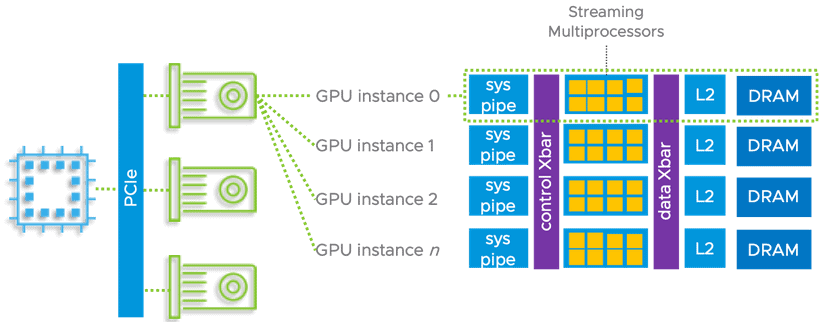

On a side note, support has been added for NVIDIA’s latest Ampere A100 Tensor Core GPUs, which deliver up to 20X better performance than the previous generation. The A100 is NVIDIA’s latest hardware accelerator for machine learning workloads and supports Multi-Instance GPU (MIG).

You can now choose between the traditional time-sliced vGPU mode and the new MIG-backed vGPU mode. Note that both modes support vMotion and DRS initial placement, which will be welcomed with open arms by companies that virtualize AI workloads.

vSphere With Tanzu – NSX Advanced Load Balancer Essentials

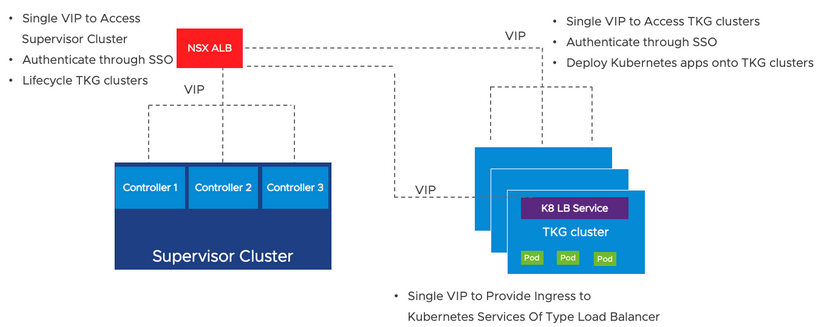

If you tinkered with Tanzu Kubernetes Grid (TKG) in vSphere 7.0 Update 1, you may know that without NSX you had to provision an HAProxy-based load balancer appliance.

The latest version of vSphere with Tanzu brings NSX Advanced Load Balancer Essentials (ALB), a production-ready load balancer that does not require NSX.

The new vSphere with Tanzu load balancer supports the Supervisor Cluster, TKG clusters and Kubernetes services. The Supervisor Cluster is accessed through a virtual IP (VIP) that spreads the load across its nodes, and additional VIPs are assigned when DevOps users create TKG clusters.

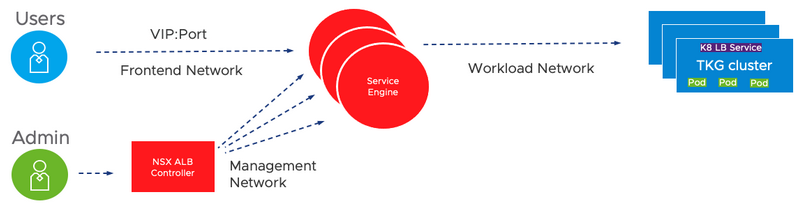

NSX ALB is made of a control plane, the controller where the load balancer is managed, and a data plane made up of service engines that receive their instructions from the controller. Users query a virtual IP and port, which are attached to the endpoint they are targeting.
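To make the control plane/data plane split more concrete, here is a minimal Python sketch. It is a conceptual model only, not the NSX ALB (Avi) API: the `Controller`, `ServiceEngine` and `VirtualService` classes, the VIP and the back-end names are all hypothetical, and round-robin stands in for whatever balancing algorithm you configure.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from itertools import cycle


@dataclass
class VirtualService:
    """A VIP:port front end and the pool of back-end endpoints behind it."""
    vip: str
    port: int
    pool: list[str]


@dataclass
class ServiceEngine:
    """Data plane: forwards requests using the config pushed by the controller."""
    name: str
    routes: dict = field(default_factory=dict)

    def apply(self, vs: VirtualService) -> None:
        # Store a round-robin iterator over the pool for this VIP:port.
        self.routes[(vs.vip, vs.port)] = cycle(vs.pool)

    def forward(self, vip: str, port: int) -> str:
        # Pick the next back end for the VIP:port the client queried.
        return next(self.routes[(vip, port)])


class Controller:
    """Control plane: owns the configuration and pushes it to every engine."""

    def __init__(self, engines: list[ServiceEngine]) -> None:
        self.engines = engines

    def publish(self, vs: VirtualService) -> None:
        for engine in self.engines:
            engine.apply(vs)


if __name__ == "__main__":
    # Hypothetical Supervisor Cluster VIP spreading API calls over three nodes.
    se = ServiceEngine("se-1")
    Controller([se]).publish(VirtualService("10.0.0.10", 6443, ["cp-1", "cp-2", "cp-3"]))
    print([se.forward("10.0.0.10", 6443) for _ in range(4)])
```

Running it prints `['cp-1', 'cp-2', 'cp-3', 'cp-1']`: the service engine never decides the pool itself, it only follows what the controller published, and the fourth request wraps back to the first node.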

Security improvements in vSphere 7.0 Update 2

A number of changes have been introduced to reduce the attack surface and strengthen the security of vSphere environments.

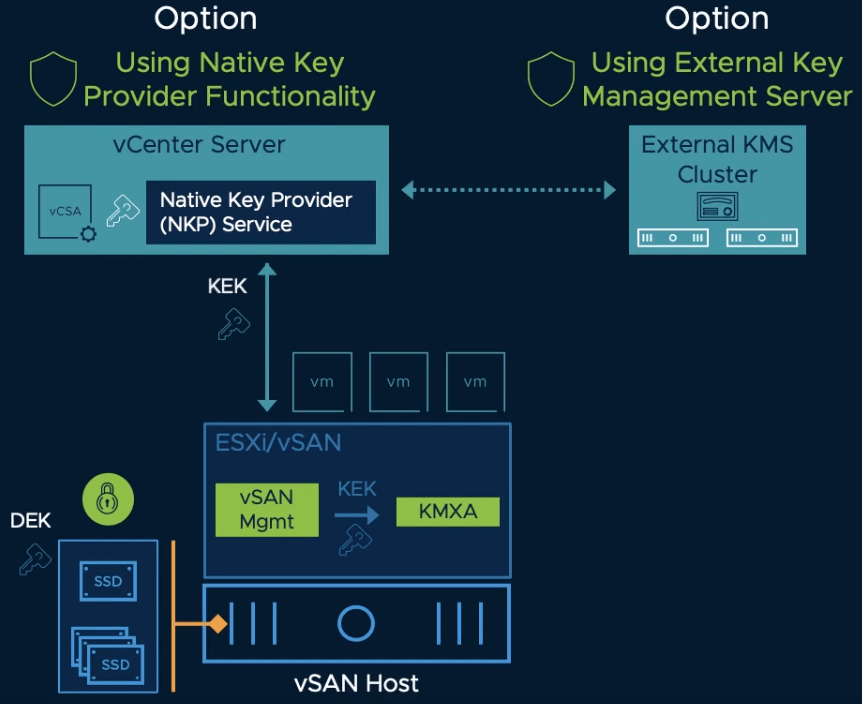

vSphere Native Key Provider

Data-at-rest encryption in vSphere covers vSAN Encryption, VM Encryption and vTPM. The encryption keys are provided by a Key Management System (KMS), so only administrators with the right privileges can act on the encrypted content.

In earlier versions, you had to set up a third-party key provider. This was a limiting factor for small companies that didn’t have a KMS server, or the resources to run one, but still wanted to benefit from data-at-rest encryption.

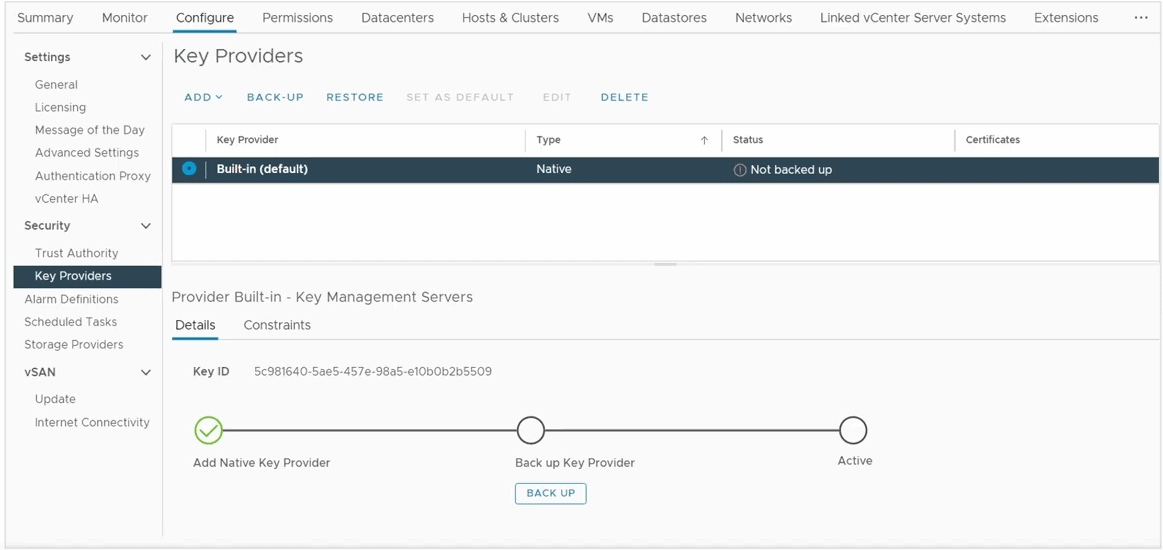

vSphere 7.0 Update 2 introduces a new Native Key Provider that is fully embedded within vCenter and clustered ESXi hosts. It enables out-of-the-box data-at-rest encryption and can leverage a hardware TPM. It is quick and easy to implement, while improving the overall security of the environment.

Once it is set up, you can back up the Native Key Provider, which exports the key derivation key (KDK) as a “.p12” file that you must store in a safe place. VMware emphasizes that, should you use encryption, vCenter backups become even more important and should be implemented as soon as possible if not already done (Do it!).

Once an ESXi host has received a KDK from vCenter Server, it no longer needs access to vCenter Server to perform encryption operations, which removes a single point of failure.
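A KDK is the kind of secret a host can turn into as many keys as it needs without calling back to vCenter. VMware’s exact derivation scheme is not described here, so the sketch below uses standard HKDF-SHA256 (RFC 5869) purely as an illustration; the `derive_vm_key` helper, the `vm-key:` context label and the VM identifiers are hypothetical.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    if length > 255 * hashlib.sha256().digest_size:
        raise ValueError("length too large for HKDF-SHA256")
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step: T(n) = HMAC(PRK, T(n-1) | info | n)
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_vm_key(kdk: bytes, vm_id: str) -> bytes:
    # Hypothetical context label: any host holding the same KDK derives the
    # same key for the same VM, with no round trip to vCenter Server.
    return hkdf_sha256(kdk, salt=b"", info=f"vm-key:{vm_id}".encode())


if __name__ == "__main__":
    kdk = os.urandom(32)  # stands in for the KDK vCenter pushes to each host
    print(derive_vm_key(kdk, "vm-42").hex())
```

Two hosts holding the same KDK compute identical keys for the same VM, which is why a host can keep performing encryption operations while vCenter Server is unreachable.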

Note that the Native Key Provider is a convenient way to set up encryption, but it is not meant to replace existing KMS servers, which offer more advanced features and are often the ones approved by the company’s security policy.

ESXi Key Persistence

In earlier versions, encrypted virtual machines and vTPM required access to the key server to work. vSphere 7.0 Update 2 removes that limitation, even across reboots, by persisting the encryption keys in the host’s TPM 2.0 module if it is equipped with one.

This means you can keep running encrypted workloads and vTPM while the key server is down.

However, be aware that key persistence is not enabled by default. You enable it manually with esxcli, which can be scripted in PowerCLI.
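PowerCLI is one option. As a minimal sketch of the same idea in Python, assuming SSH is enabled on the hosts with key-based authentication, the helper below wraps the `esxcli system security keypersistence enable` command from VMware’s vSphere Security documentation (check it against your build). The `enable_key_persistence` helper and the host names are hypothetical, and the helper defaults to a dry run that only returns the command.

```python
from __future__ import annotations

import shlex
import subprocess

# esxcli namespace for ESXi key persistence (vSphere 7.0 Update 2 and later).
ENABLE_CMD = ["esxcli", "system", "security", "keypersistence", "enable"]


def enable_key_persistence(host: str, user: str = "root", dry_run: bool = True) -> list[str]:
    """Build the SSH call that enables key persistence on one host.

    With dry_run=False the command is executed, and a non-zero exit code
    raises subprocess.CalledProcessError.
    """
    cmd = ["ssh", f"{user}@{host}", shlex.join(ENABLE_CMD)]
    if not dry_run:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    for esx in ("esx01.lab.local", "esx02.lab.local"):  # hypothetical hosts
        print(" ".join(enable_key_persistence(esx)))
```

Keep in mind that key persistence relies on the host having a TPM 2.0 module.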

ESXi Configuration Encryption (TPM Sealing Policy)

Some ESXi services store secrets in config files that are in turn archived in the boot bank. Such files could be leveraged by potential attackers to retrieve secrets and gain access to the system. As of vSphere 7.0 Update 2, the archived configuration of an ESXi host is encrypted with a key that is sealed in the TPM module if there is one (TPM 2.0).

Note that you can still benefit from configuration encryption if the server has no TPM device. In this case, ESXi does it in software, using a Key Derivation Function (KDF) to generate the encryption key for the archived configuration file. The inputs to the KDF are stored on disk in a file named encryption.info.
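The software fallback can be pictured as follows. This is a hypothetical Python sketch, not ESXi’s actual scheme: the field names, the use of PBKDF2-HMAC-SHA256 and the JSON string standing in for encryption.info are all assumptions. It shows the key property: because the KDF inputs live on disk, the same key can be re-derived at every boot without a TPM.

```python
import hashlib
import json
import os


def new_kdf_inputs(iterations: int = 100_000) -> dict:
    """Random inputs generated once and persisted (hypothetical format)."""
    return {"seed": os.urandom(32).hex(), "salt": os.urandom(16).hex(), "iterations": iterations}


def derive_config_key(inputs: dict) -> bytes:
    """Re-derive the 256-bit configuration key from the stored inputs."""
    return hashlib.pbkdf2_hmac(
        "sha256",
        bytes.fromhex(inputs["seed"]),
        bytes.fromhex(inputs["salt"]),
        inputs["iterations"],
        dklen=32,
    )


if __name__ == "__main__":
    on_disk = json.dumps(new_kdf_inputs())  # stands in for encryption.info
    key_at_boot = derive_config_key(json.loads(on_disk))
    print(len(key_at_boot), "byte key re-derived from on-disk inputs")
```

The flip side is that anyone who can read those inputs can re-derive the key as well, which is why sealing the key in a TPM 2.0 module is the stronger option.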

vSphere 7.0 Update 2 also provides a recovery mechanism: it generates a recovery key that can be used to recover the encrypted configuration. Backing this key up is obviously recommended, and vCenter will remind you to do so with an alarm when the host is in TPM mode.

Confidential vSphere Pod

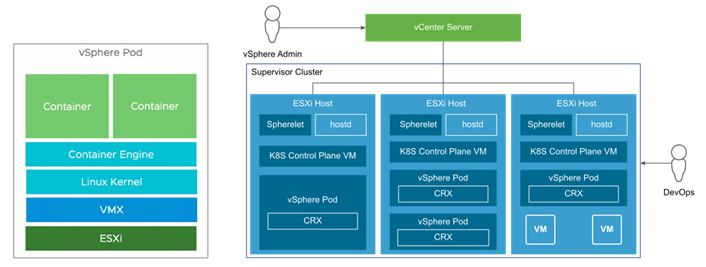

This new feature is related to environments running vSphere with Tanzu that require extra security. Let’s first describe what a vSphere Pod is in VMware’s own words:

“A vSphere Pod Service provides a purpose-built lightweight Linux kernel that is responsible for running containers inside the guest”.

In essence, DevOps engineers run their containers inside vSphere Pods.

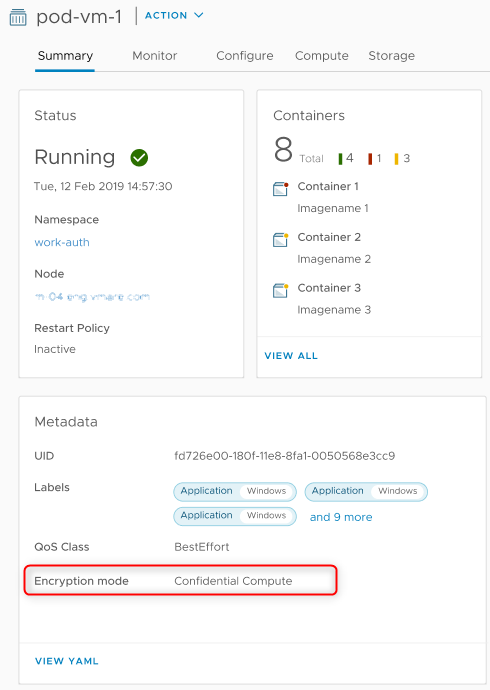

Secure Encrypted Virtualization-Encrypted State (SEV-ES) is a hardware feature of recent AMD CPUs that encrypts the guest’s memory and CPU registers to prevent the hypervisor from accessing them. It was already available for virtual machines in vSphere 7.0 Update 1.

vSphere 7.0 Update 2 brings SEV-ES to vSphere with Tanzu with Confidential vSphere Pods. In order to enable it, SEV-ES must be enabled in the system’s BIOS and configured according to the number of workloads you plan to run. An “Encryption mode” field will have the value “Confidential compute” in the properties of the pods that have it enabled.

Performance improvement

vMotion Auto Scaling

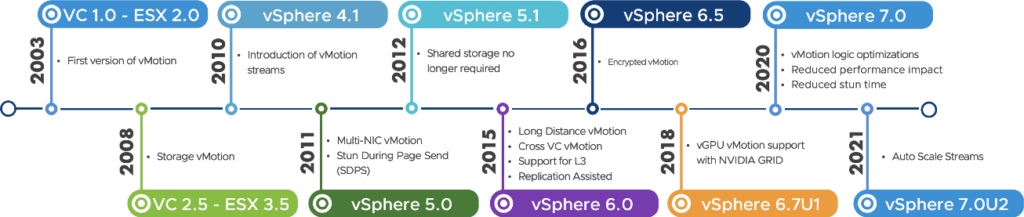

Since vMotion was introduced back in 2003, VMware has never stopped extending and improving its capabilities, and vSphere 7.0 Update 2 is no exception to the rule.

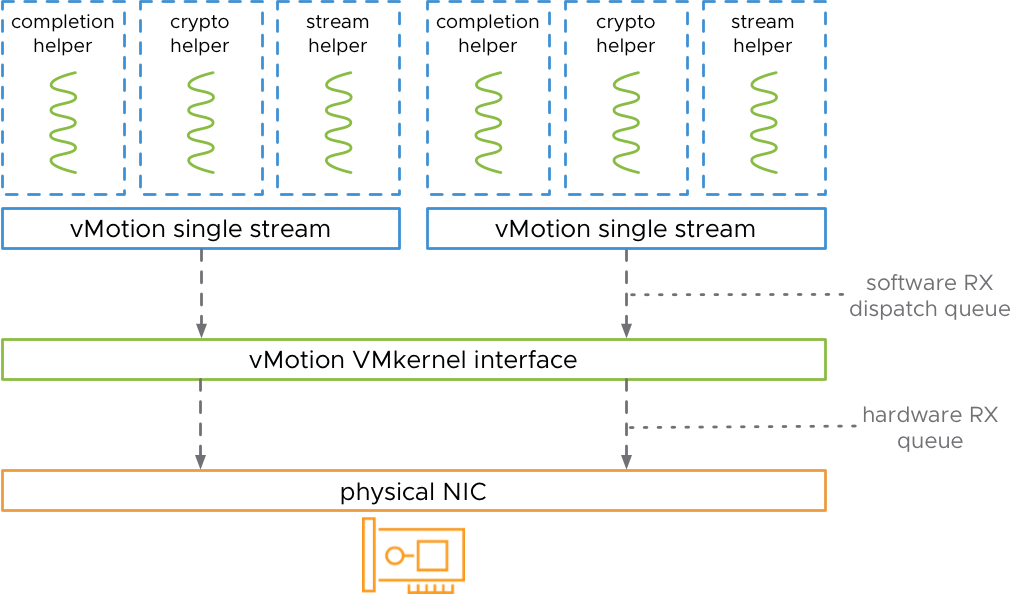

In earlier versions, making full use of high-bandwidth NICs for vMotion required multiple layers of tuning. Out of the box, vMotion uses a single stream, which saturates at around 15 Gbps. That is not a problem on 10 Gbps links, but it becomes one on 25, 40 or even 100 Gbps interfaces, whose capacity then goes largely unused.

In vSphere 7.0 Update 2, you no longer need to go through this tuning process: vMotion automatically uses multiple streams according to the bandwidth of the NIC backing the vMotion VMkernel interface.

- 25 GbE = 2 vMotion streams

- 40 GbE = 3 vMotion streams

- 100 GbE = 7 vMotion streams
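The published mapping lines up with dividing the NIC speed by the roughly 15 Gbps a single stream can push, then rounding up. The Python function below encodes that rule of thumb; it is our reading of the numbers above, not VMware’s documented algorithm, and `vmotion_streams` is a hypothetical name.

```python
def vmotion_streams(nic_gbps: int, per_stream_gbps: int = 15) -> int:
    """Number of ~15 Gbps streams needed to fill a NIC of the given speed."""
    if nic_gbps <= 0:
        raise ValueError("NIC bandwidth must be positive")
    return -(-nic_gbps // per_stream_gbps)  # ceiling division on integers


if __name__ == "__main__":
    for speed in (10, 25, 40, 100):
        print(f"{speed} GbE -> {vmotion_streams(speed)} stream(s)")
```

For a 10 GbE link it returns a single stream, consistent with the observation that one stream is enough there.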

Note that if you changed the advanced settings on your hosts for high-bandwidth vMotion, you should revert them to their default values to benefit from vSphere 7.0 Update 2’s auto-scaling feature.

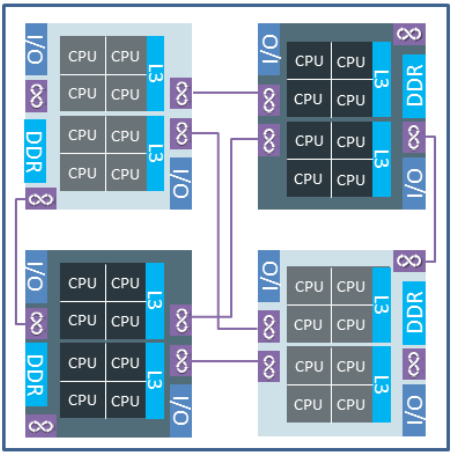

Optimizations for AMD Zen CPUs

If you currently run hosts equipped with AMD CPUs based on the Zen architecture, you will be happy to learn that vSphere 7.0 Update 2 includes out-of-the-box optimizations for this platform, which VMware says offer up to a 30% performance increase.

This magic is achieved by having the ESXi scheduler make better use of the underlying AMD NUMA architecture and make optimized VM and container placement decisions.
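VMware does not detail the scheduler changes, but the general idea behind NUMA-aware placement is to keep a VM’s vCPUs and memory on a single NUMA node so it avoids slower remote memory access. The Python sketch below illustrates a generic best-fit placement; it is not the ESXi scheduler’s logic, and `NumaNode`, `place_vm` and the node sizes are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class NumaNode:
    node_id: int
    free_cores: int
    free_mem_gb: int


def place_vm(nodes: list[NumaNode], vcpus: int, mem_gb: int) -> Optional[int]:
    """Best fit: choose the node that holds the whole VM with the fewest cores left over."""
    fits = [n for n in nodes if n.free_cores >= vcpus and n.free_mem_gb >= mem_gb]
    if not fits:
        return None  # the VM would have to span nodes and use remote memory
    best = min(fits, key=lambda n: (n.free_cores - vcpus, n.node_id))
    best.free_cores -= vcpus
    best.free_mem_gb -= mem_gb
    return best.node_id


if __name__ == "__main__":
    host = [NumaNode(0, 8, 64), NumaNode(1, 4, 32), NumaNode(2, 16, 128)]
    print(place_vm(host, vcpus=4, mem_gb=16))
```

Packing the 4-vCPU VM into the 4-core node keeps the larger nodes free for bigger VMs that would otherwise have to span nodes.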

Improvements to vSphere Lifecycle Manager (vLCM)

vSphere 7.0 brought the vSphere Lifecycle Manager (vLCM), a desired-state model that supersedes vSphere Update Manager (VUM) to simplify the vSphere update process.

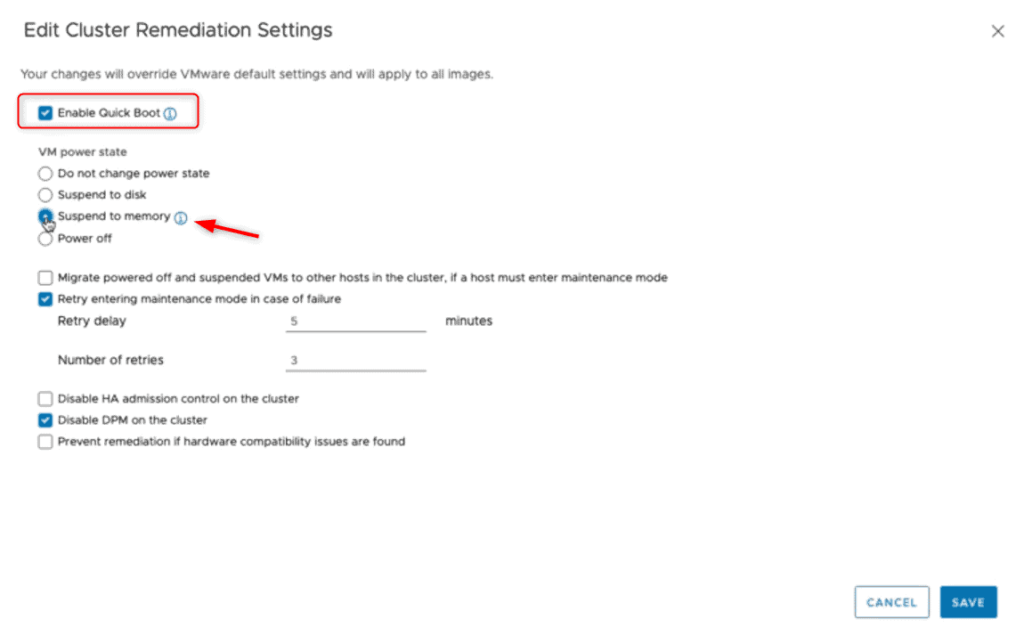

Host patching: ESXi Suspend-to-Memory (STM)

Throughout a patching campaign, upgrading the vSphere hosts in a cluster isn’t actually the time-consuming part, as installing the updates and rebooting is pretty quick. What takes the most time is evacuating the VMs from each host, using vMotion or DRS, before putting it in maintenance mode. And if you are still rocking a 1 Gbps vMotion link, boy are you in for a treat…
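A quick back-of-the-envelope calculation shows why link speed matters so much. The Python sketch below assumes a hypothetical host with 512 GB of active VM memory and ignores protocol overhead as well as the pages vMotion must re-send when VMs keep writing to memory, so treat the results as best-case figures.

```python
def transfer_seconds(memory_gb: float, link_gbps: float) -> float:
    """Best-case time to push memory_gb gigabytes over a link_gbps link (8 bits per byte)."""
    return memory_gb * 8 / link_gbps


if __name__ == "__main__":
    for gbps in (1, 10, 25):
        minutes = transfer_seconds(512, gbps) / 60
        print(f"{gbps:>2} Gbps: about {minutes:.1f} min to evacuate 512 GB")
```

At 1 Gbps, copying those 512 GB alone takes 4,096 seconds, over an hour, versus under three minutes at 25 Gbps.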

While somewhat uncommon, some of those who can afford the downtime but don’t want to lose the active state choose the “Suspend-to-disk” option, which suspends the VMs and resumes them when the host comes back. That way, there is no need to evacuate the host. However, while it may be faster than a migration, writing the active state of the VMs to disk also takes significant time, especially when many VMs are involved.

The new Suspend-to-Memory (STM) feature lets you suspend the state of the VMs into the host memory as part of the remediation process! Because the data is kept in memory, the host must not go through a full hardware restart, otherwise the volatile memory would be lost. For that reason, Quick Boot must be enabled, as it restarts the hypervisor without rebooting the hardware.

The option is located in Lifecycle Manager under image remediation and does not apply to the baseline method. With it, the VMs are only suspended while the VMkernel restarts, which should be pretty quick.

Support for vSphere with Tanzu on vLCM-enabled clusters

In vSphere 7.0 Update 2, you can now enable vSphere with Tanzu on vLCM-enabled clusters. You can upgrade a Supervisor Cluster to the latest version of vSphere with Tanzu as well as upgrade the vSphere hosts in the Supervisor Cluster.

Host seeding

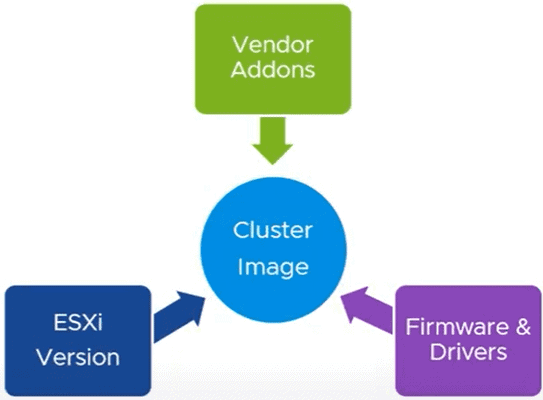

vSphere Lifecycle Manager brought Cluster Image Management, which ensures and simplifies consistency across the cluster at four levels: base image, vendor add-ons, components and firmware.
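The desired-state idea can be sketched in a few lines of Python: the cluster image declares what every host should run at each of the four levels, and a compliance check reports any drift. This is not the vLCM API; `ClusterImage`, `drift`, the field names and the version strings are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ClusterImage:
    """Software and firmware state at the four vLCM levels."""
    base_image: str
    vendor_addon: Optional[str] = None
    components: dict[str, str] = field(default_factory=dict)
    firmware: Optional[str] = None


def drift(desired: ClusterImage, actual: ClusterImage) -> dict[str, tuple]:
    """Return {level: (desired, actual)} for everything that does not match."""
    report: dict[str, tuple] = {}
    for level in ("base_image", "vendor_addon", "firmware"):
        want, have = getattr(desired, level), getattr(actual, level)
        if want != have:
            report[level] = (want, have)
    # In this sketch, components must match by name and version,
    # and extra components on the host also count as drift.
    for name in sorted(desired.components.keys() | actual.components.keys()):
        want, have = desired.components.get(name), actual.components.get(name)
        if want != have:
            report[f"component:{name}"] = (want, have)
    return report


if __name__ == "__main__":
    desired = ClusterImage("esxi-7.0u2", "oem-addon-1.2", {"nic-driver": "2.0"}, "bios-2.10")
    host = ClusterImage("esxi-7.0u1", "oem-addon-1.2", {"nic-driver": "1.9"}, "bios-2.10")
    print(drift(desired, host))
```

Remediation then means bringing each drifting host back to the declared state, rather than applying a list of individual patches.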

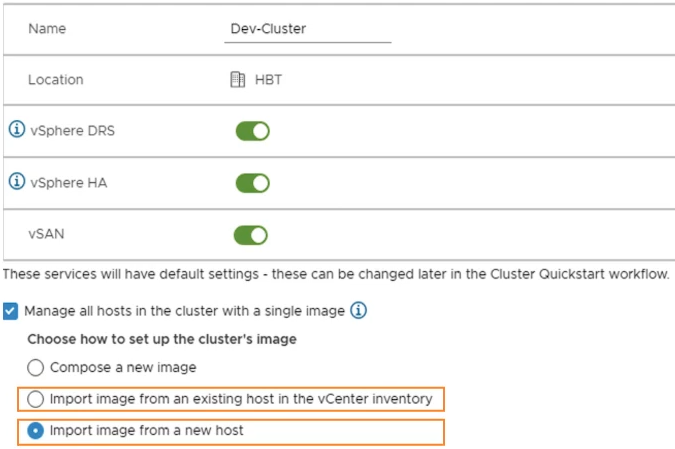

In vSphere 7.0 Update 2, you can now extract the base image from an existing ESXi host! The reference host can be managed by the same vCenter, by a different one, or even be a standalone host, as long as it runs vSphere 7.0 Update 2 or later.

This option is available in the new cluster creation process, which can now import the image from a host in the vCenter inventory or from a new one (another vCenter or a standalone host), as depicted below.

vSphere 7.0 Update 2

This feature should significantly speed up the creation of vLCM enabled clusters and will also prove useful in high security, air-gapped environments.

Parallel remediation of hosts

To speed up patching campaigns and reduce operational overhead, vSphere 7.0 Update 2 adds the ability to remediate several hosts in parallel within a cluster that uses baselines. To use this feature, you must put the hosts in maintenance mode yourself, as vLCM does not do it for you, most likely as a failsafe mechanism.

You can configure it to some degree, for example by setting the maximum number of hosts remediated in a single remediation task, or by telling vLCM to remediate all hosts that are in maintenance mode in parallel.

Note that parallel remediation is not available for vSAN clusters.
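These rules can be modeled with a small Python sketch: only hosts you already placed in maintenance mode are remediated, concurrency is capped by an optional maximum, and vSAN clusters are rejected. The `Host` class, the `remediate` placeholder and `parallel_remediate` are hypothetical; in practice vLCM does all of this for you.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Optional


@dataclass
class Host:
    name: str
    in_maintenance_mode: bool


def remediate(host: Host) -> str:
    # Placeholder for patching and rebooting a single host.
    return f"{host.name}: remediated"


def parallel_remediate(hosts: list[Host], is_vsan: bool, max_parallel: Optional[int] = None) -> list[str]:
    if is_vsan:
        raise RuntimeError("parallel remediation is not available for vSAN clusters")
    # vLCM does not enter maintenance mode for you, so only those hosts qualify.
    ready = [h for h in hosts if h.in_maintenance_mode]
    if not ready:
        return []
    # No limit set: remediate every host in maintenance mode at once.
    workers = max_parallel or len(ready)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(remediate, ready))  # results keep the input order


if __name__ == "__main__":
    cluster = [Host("esx01", True), Host("esx02", False), Host("esx03", True)]
    print(parallel_remediate(cluster, is_vsan=False, max_parallel=2))
```

Here `esx02` is simply skipped because it was never put in maintenance mode.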

Added vSphere HA support for Persistent Memory (PMEM) workloads

Persistent memory (PMem) support was released with vSphere 6.7 to make use of a new type of memory, Non-Volatile Memory (NVM). It acts as an additional storage tier that sits between disk and memory to improve performance, and it retains data even after a power outage.

In earlier versions, PMem-enabled virtual machines had several limitations, such as a lack of vSphere HA and DRS compatibility. vSphere 7.0 Update 2 brings support for vSphere HA and DRS initial VM placement (partially automated mode).

To benefit from it, the VM must use hardware version 19 and must not use vPMemDisks. Note that in case of a host failure, the data stored on the PMem device cannot be recovered.

A new “Reserve Persistent Memory failover capacity” checkbox has also been added to admission control to reserve PMem capacity for failover.

New versions VMware Tools 11.2.5 and virtual hardware version 19

As with most releases, vSphere 7.0 Update 2 brings new VMware Tools and VM compatibility versions.

VMware Tools version 11.2.5:

Back in October 2020, VMware Tools 11.2.0 was released with significant changes such as Precision Time for Windows and native Carbon Black integration. vSphere 7.0 Update 2 delivers the next iteration, version 11.2.5, which includes the following changes:

- OpenSSL updated to 1.1.1i

- PCRE (Perl Compatible Regular Expressions) updated to 8.44

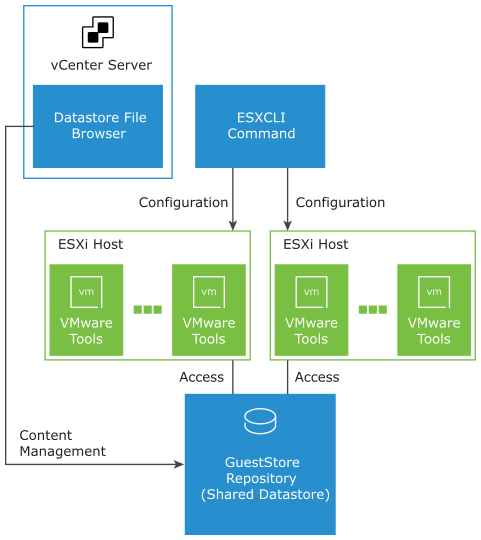

- GuestStore (Windows machines)

This new feature lets you distribute specific content (files of up to 512 MB) to multiple guest systems from a GuestStore repository. This keeps the distributed content consistent across hosts, which is otherwise harder to achieve in clusters that run multiple vSphere versions. The GuestStore repository is configured on the ESXi hosts and can then easily be accessed with the VMware Tools command-line utility.

Virtual Hardware version 19:

- Maximum number of PVRDMA network adapters goes from 1 to 10.

- Added vMotion support for PVRDMA native endpoints

- Added support for Direct3D 11.0

- Extended vTPM support to Linux machines

- Support for vSphere HA with persistent memory

vSAN 7.0 Update 2

As you could expect, VMware’s HCI storage solution received a significant facelift as well with a wealth of great new features.

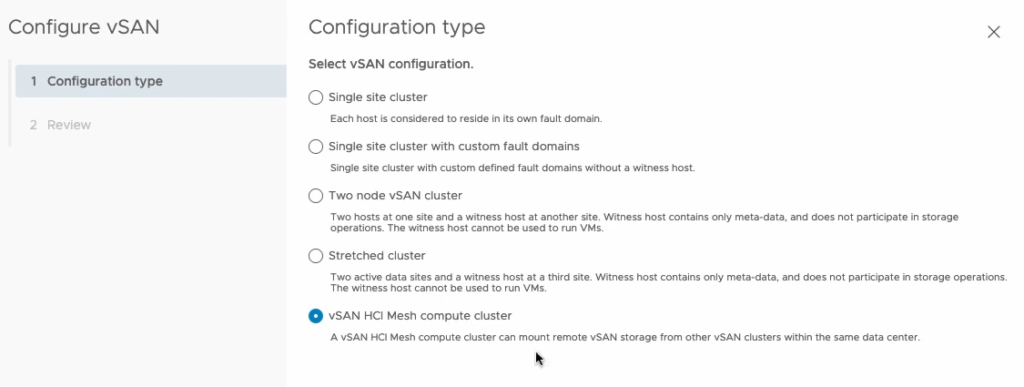

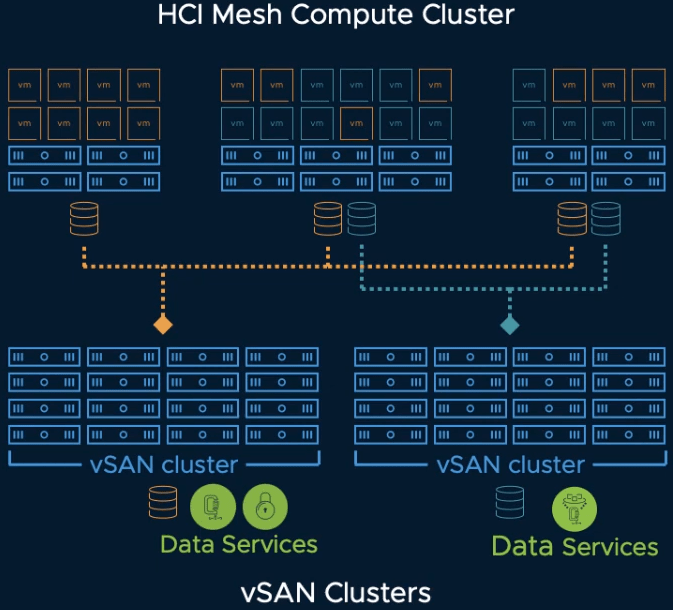

HCI Mesh Compute Clusters

A huge new capability brought by vSAN 7.0 Update 2 is definitely HCI Mesh compute clusters. In earlier versions, all participating clusters had to provide vSAN storage. In this latest iteration, it is now possible to mount a remote vSAN datastore to a traditional vSphere cluster, in the same way it would connect to a storage array!

In VMware’s words, “a vSAN HCI Mesh compute cluster is a vSAN-enabled cluster that does not have any storage capabilities itself, but mounts other vSAN datastores within the same datacenter”.

After you have configured your cluster as a vSAN HCI Mesh compute cluster, you can mount a remote vSAN datastore once a series of health checks has been performed. You can then start using it and migrate workloads between both clusters without having to move the VM files.

Note that while you need vSAN Enterprise or Enterprise Plus licenses to share storage, you do not need a vSAN license to mount a remote vSAN datastore to an HCI Mesh compute cluster.

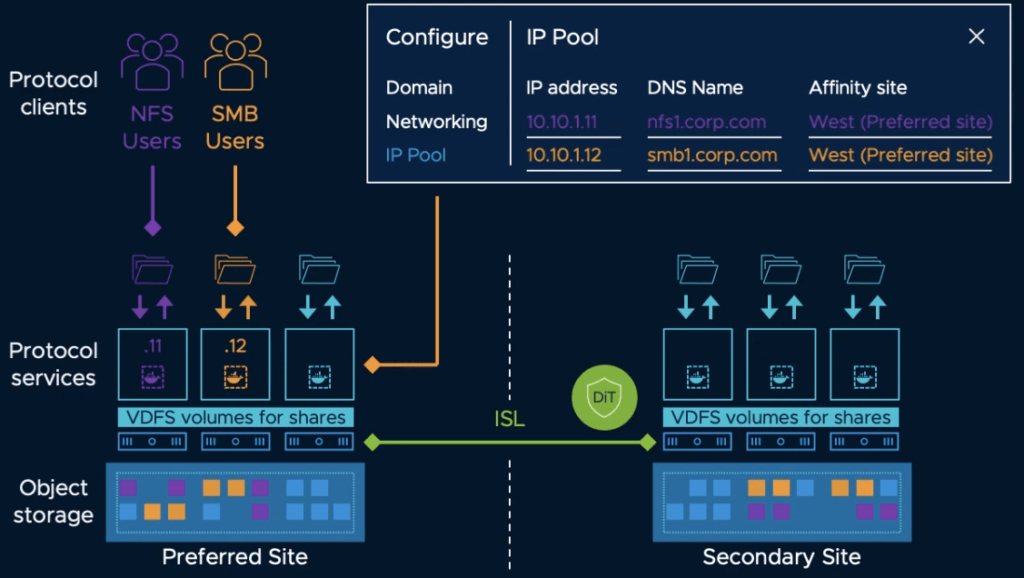

Enhanced File Service

The vSAN file service allows administrators to create file shares in the vSAN datastore that clients and VMs can access via SMB, NFSv3, and NFSv4.1.

vSAN 7.0 Update 2 extends the file service capability to stretched clusters and 2-node configurations, which can now be leveraged by edge and ROBO environments. It also improves security with support for data-in-transit encryption, and optimizes space usage thanks to new UNMAP support (a technique that reclaims unused space).

Another incredible addition is a snapshot mechanism to enable point-in-time recovery of files. It is available via API calls and will allow backup applications such as Altaro Software to protect file shares.

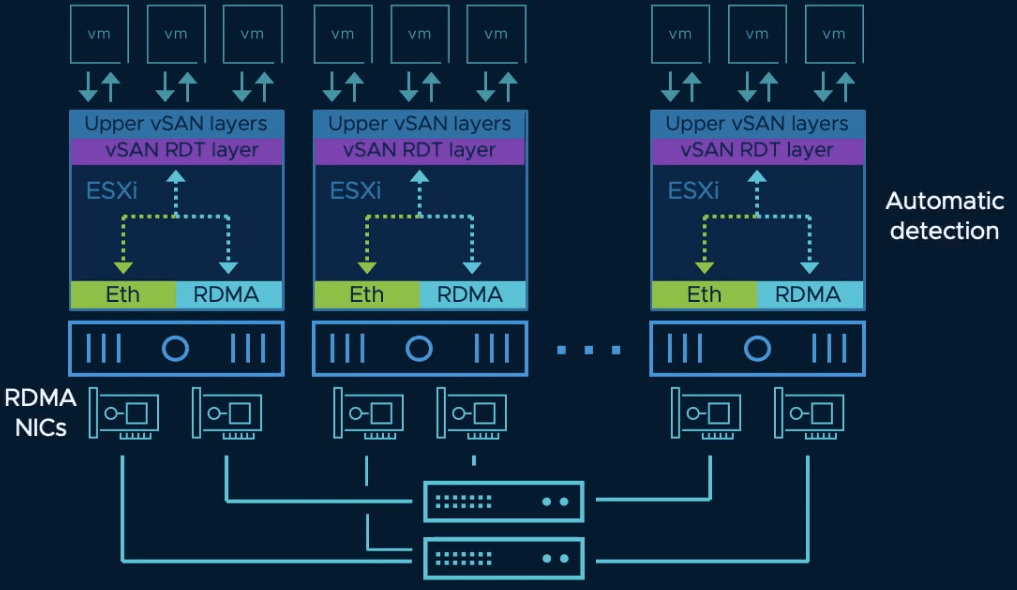

vSAN over RDMA

RDMA (Remote Direct Memory Access) lets network adapters transfer data directly between the memory of different hosts, bypassing the CPU to reduce latency and compute overhead, which improves performance. vSAN 7.0 Update 2 adds support for RDMA over Converged Ethernet version 2 (RoCEv2) for vSAN traffic, as long as the devices are listed in the VMware HCL.

Honorable mentions

A bunch of other features made their way into vSAN 7.0 Update 2 such as:

- Improved performance when using RAID-5/6 Erasure coding

- Improved CPU efficiency

- Support for the Native Key Provider in vSAN (see the previous chapter)

- Support for proactive HA

- Skyline Health Diagnostics tool for self-service troubleshooting and faster resolution times in isolated environments

- Incremental updates copied to an extra host in case of an unplanned failure, to ensure data durability

- New metrics and health checks to enhance visibility into the environment

- Optimized VM placement in stretched cluster scenarios

Wrap up

In this blog, we covered the major announcements in vSphere 7.0 Update 2, but there are obviously other minor and behind-the-scenes improvements that enhance the product. You can find the exhaustive list in the release notes for vCenter 7.0 Update 2, vSphere 7.0 Update 2 and vSAN 7.0 Update 2.

Although VMware moved to a six-month release cycle, the number of new features and improvements brought by vSphere 7.0 Update 2, and Update 1 before it, is quite incredible. Not only is VMware working on new fronts like Tanzu, AI/ML and ever-better cloud integration, but it also keeps innovating on historic core components such as vMotion and host patching.

We are all already looking forward to discovering what Update 3 will bring. In the meantime, there is plenty to do with vSphere 7.0 Update 2.
