A while ago, I wrote about the Distributed Resource Scheduler (DRS), and more specifically about how it helps you distribute workloads across ESXi hosts in a cluster while ensuring that virtual machines are allocated sufficient resources for peak performance. I also covered DRS affinity rules which, among other things, can be used to tie one or more virtual machines to a specific host.

Another classic cluster feature is High Availability (HA) which, in a nutshell, protects VMs by restarting them on a functional host when the parent one goes dark.

I recently got a question on how to disable HA for a specific VM hosted on an HA-enabled cluster that has DRS disabled. I thought, well, might as well enable DRS and create a VM-Host affinity rule so that the VM in question is always hosted on one specific host. But, memory being what it is, I couldn’t vouch for HA not overriding the DRS affinity rule and spawning the VM on another host. Either way, the client was adamant about leaving DRS disabled, and then I remembered VM Overrides, the main subject of today’s post.

A real-world example

The client deployed a pair of VMs as part of a load balancing setup with its own built-in HA mechanism. Keeping these VMs apart, with each one always running on the same host, was one of the prerequisites for this setup. To replicate it, I created 2 VMs, each one on a different host. The hosts are members of a DRS- and HA-enabled cluster, and the aim here is to ensure that neither VM gets migrated by DRS or moved elsewhere by HA in the event that one host goes down.

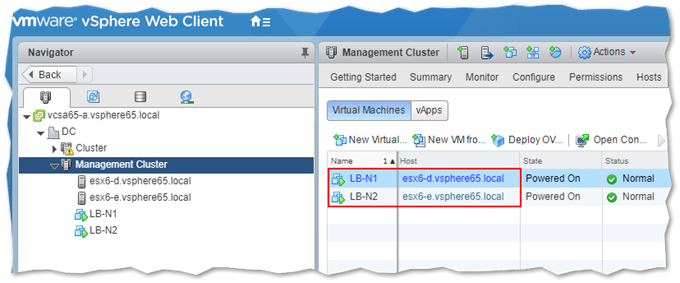

As shown next, the two load balancing nodes LB-N1 and LB-N2 are respectively hosted on esx6-d and esx6-e. We want this to be the status quo.

Two load balancers residing on different ESXi hosts

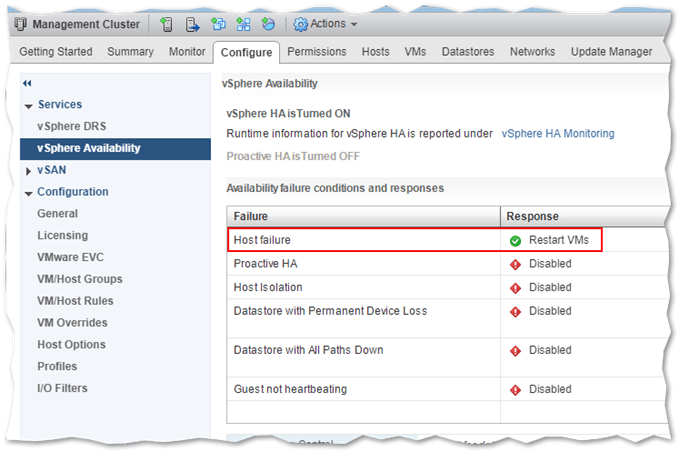

Let’s test HA first. When you enable HA in vSphere 6.5, HA-protected VMs are configured by default to restart automatically on the next available ESXi host should the parent host go down. This behavior is governed by the Host Failure response, as shown next.

Host failure response for an HA-enabled cluster

For instance, if I kill esx6-d, LB-N1 should restart on esx6-e which is exactly what happens. This also happens to be the scenario the client wants to avoid. I’m running nested ESXi so it’s very easy to simulate a host failure by simply disconnecting the vNICs on the VM running the nested ESXi instance like I demonstrate in this next video. As can be seen, LB-N1 is powered back up on esx6-e a few moments after esx6-d goes down.

Disabling HA for a specific VM via VM Overrides

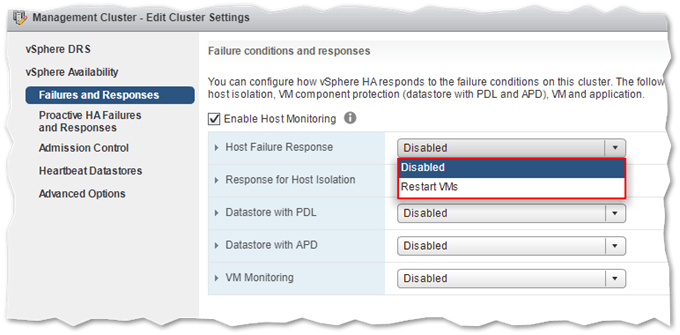

The shortcut to solving this issue is to set the Host Failure behavior to disabled. Doing this, however, renders HA pretty much pointless, all the more so if your goal is to ensure business continuity using HA as a step towards 24×7 availability. Another alternative is to simply move the VMs out of the HA/DRS cluster by placing them on hosts that are not members of a cluster. But we like doing things the hard way, so let’s try something else!

Setting the host failure response to disabled will prevent VMs from powering up on an alternative host

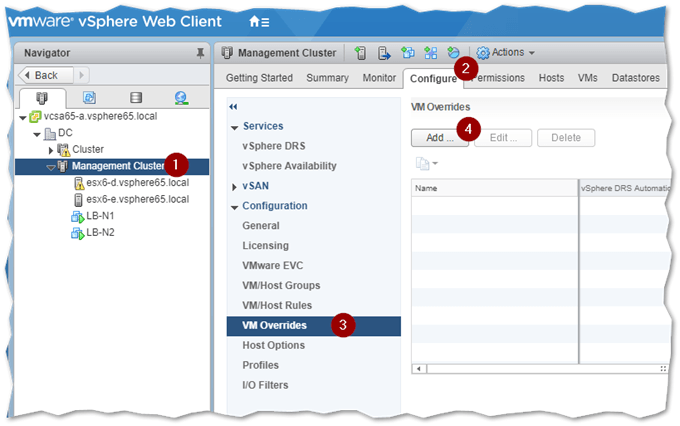

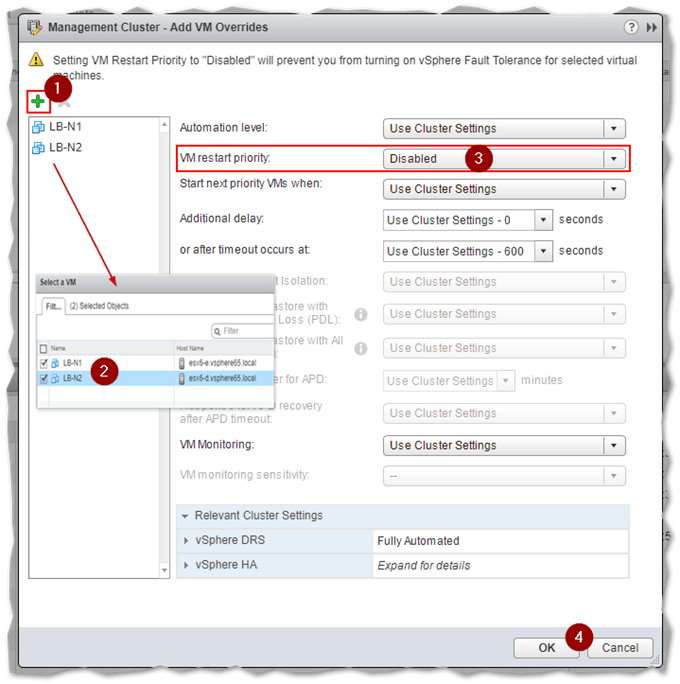

Instead, we can use VM Overrides to stop a VM from being restarted on another host following a host failure. To access this option, highlight the cluster name and click on the Configure tab. Expand Configuration and select VM Overrides. Click Add to launch the Add VM Overrides dialog.

Setting up VM Overrides

Click on the Select Virtual Machines icon (green + button) and select the VMs you wish to set overrides for. When you do, set the VM restart priority to Disabled. The chosen configuration is applied to all the selected VMs. For a granular approach, add one VM at a time and specify individual settings accordingly.

Note: While testing this out, I kept noticing that the VM was being registered on another host regardless of the override setting. As it turns out, starting with vSphere 6.0, the VM will still end up being registered on a functional host. However, as per the override setting, it will not be powered up, which is half of what we wanted to achieve. Thanks go to Yellow Bricks’ post for shedding some much-needed light on the matter.
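To make the override behavior a little more concrete, here is a toy Python model of what I observed. It is only a sketch and does not talk to vCenter or use any vSphere API. The class and function names, the extra VM called APP-1, and the cluster default restart priority of "medium" are all my own assumptions for illustration. The idea is simply that a per-VM override wins over the cluster default, and that a VM with a Disabled restart priority is still registered on a surviving host but left powered off.

```python
from dataclasses import dataclass, field
from typing import Dict, List

DISABLED = "disabled"


@dataclass
class Cluster:
    """Toy stand-in for an HA cluster (hypothetical, not a vSphere object)."""
    # Assumed cluster-wide default restart priority.
    default_restart_priority: str = "medium"
    # Per-VM overrides: VM name -> restart priority.
    overrides: Dict[str, str] = field(default_factory=dict)

    def effective_restart_priority(self, vm: str) -> str:
        # An override, when present, takes precedence over the cluster default.
        return self.overrides.get(vm, self.default_restart_priority)


@dataclass
class FailoverResult:
    vm: str
    registered_on: str
    powered_on: bool


def simulate_host_failure(
    cluster: Cluster,
    placement: Dict[str, str],
    failed_host: str,
    surviving_host: str,
) -> List[FailoverResult]:
    """Model the outcome for every VM that lived on the failed host."""
    results = []
    for vm, host in placement.items():
        if host != failed_host:
            continue  # VMs on healthy hosts are untouched
        priority = cluster.effective_restart_priority(vm)
        # The VM is registered on the surviving host either way;
        # it is only powered on if its restart priority is not Disabled.
        results.append(FailoverResult(vm, surviving_host, priority != DISABLED))
    return results


if __name__ == "__main__":
    cluster = Cluster(overrides={"LB-N1": DISABLED})
    placement = {"LB-N1": "esx6-d", "LB-N2": "esx6-e", "APP-1": "esx6-d"}
    for result in simulate_host_failure(cluster, placement, "esx6-d", "esx6-e"):
        print(result)
```

In this model, killing esx6-d leaves LB-N1 registered on esx6-e but powered off, while the hypothetical APP-1, which has no override, is powered back on.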

Configuring VM Overrides for 2 specific virtual machines

Here’s a second video illustrating the behavior. Once again, I simulated an offline host by disconnecting the NICs on the VM running the nested ESXi instance. You’ll see LB-N1 being registered on esx6-e and left powered off.

Adding a DRS VM-Host affinity rule

I avoid half-baked solutions like the plague, so I’ll try adding a VM-Host DRS rule to trick the VM into sticking to the same host. Additionally, I want to test how DRS rules play with HA and VM Overrides.

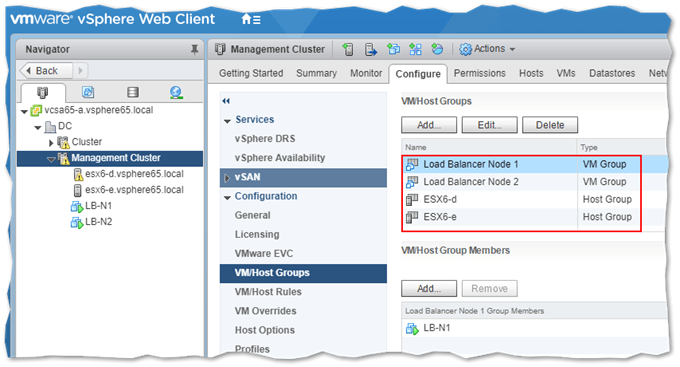

The first thing I need to do is create VM and Host groups since you cannot specify individual VMs and hosts when creating DRS rules. As per the following screenshot, I created 2 VM groups, each containing a Load Balancer node and another 2 Host groups for esx6-d and esx6-e respectively.

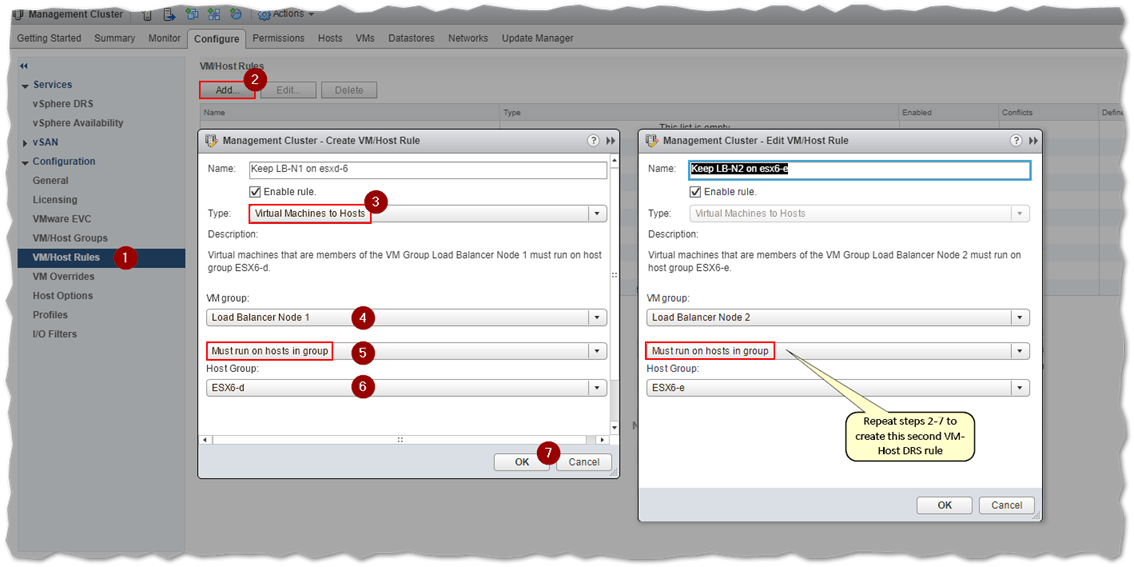

The next step is to create the DRS affinity rules. As always, I’m using the vSphere Web client.

Highlight the cluster name, select the Configure tab and click on VM/Host Rules under Configuration. Click Add and create the 2 rules as shown. Make sure you select Must run on hosts in group (5) to strictly enforce the setting.
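If it helps to picture how the groups and rules hang together, here is a small Python sketch that models them as plain data and checks a placement against the rules. Again, this is only an illustration and not the vSphere API. The group names, rule names and the `violations` helper are hypothetical names I made up for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class VmHostRule:
    """Toy model of a 'Must run on hosts in group' rule (hypothetical)."""
    name: str
    vm_group: str
    host_group: str


def violations(
    rules: List[VmHostRule],
    vm_groups: Dict[str, Set[str]],
    host_groups: Dict[str, Set[str]],
    placement: Dict[str, str],
) -> List[str]:
    """Return a message for every VM running outside its required host group."""
    problems = []
    for rule in rules:
        allowed_hosts = host_groups[rule.host_group]
        for vm in sorted(vm_groups[rule.vm_group]):
            host = placement.get(vm)
            if host is not None and host not in allowed_hosts:
                problems.append(f"{rule.name}: {vm} is on {host}")
    return problems


# Two VM groups and two host groups, one per load balancer node and host.
vm_groups = {"LB-N1-group": {"LB-N1"}, "LB-N2-group": {"LB-N2"}}
host_groups = {"esx6-d-group": {"esx6-d"}, "esx6-e-group": {"esx6-e"}}
rules = [
    VmHostRule("LB-N1-on-esx6-d", "LB-N1-group", "esx6-d-group"),
    VmHostRule("LB-N2-on-esx6-e", "LB-N2-group", "esx6-e-group"),
]

if __name__ == "__main__":
    desired = {"LB-N1": "esx6-d", "LB-N2": "esx6-e"}
    print(violations(rules, vm_groups, host_groups, desired))
```

The groups add a level of indirection: each rule points at a VM group and a host group rather than at individual objects, which is why the groups have to exist before the rules can be created.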

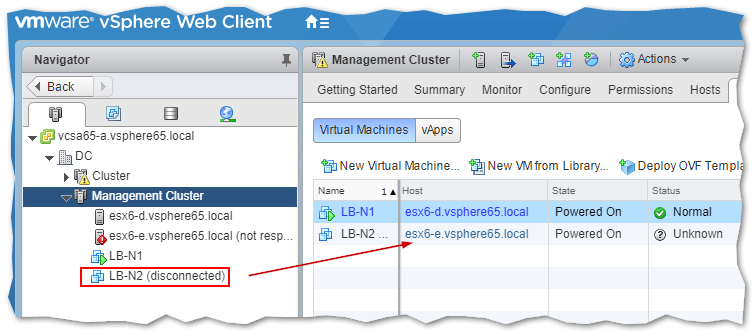

Once again, I’m going to simulate a host failure by disconnecting the NICs on a nested ESXi, this time esx6-e. The result is what I expected it to be. LB-N2, which was hosted on esx6-e, was moved to the next available host in the cluster. As per the next screenshot, LB-N2 is marked disconnected because vCenter Server is unable to assess its status, since the host where it resides is offline. This is probably why, starting with vSphere 6.0, VMs are registered by HA on a working host regardless of any override settings.

At this point, I reconnected the NICs on the host to see if LB-N2 resumes normally. Of course, if you’re doing this for load balancers in real life, make sure to test things out thoroughly to guard against any unexpected behavior. In my case, since I only disconnected the NICs on the ESXi host, the load balancer node was in fact still running in the background, albeit with no network visibility. In a real-world situation, the ESXi host would probably have crashed or suffered a hardware failure, taking down with it all the VMs unless configured for HA. Always test, test and test.

Conclusion

HA and DRS remain some of the best features you’ll find in the vSphere product suite. However, as we have seen, there are instances where it is not desirable to have HA protecting each and every VM. So rather than disabling HA – and even DRS – across the board, VM Overrides allow you to take a granular approach and disable or tweak both HA and DRS settings at the VM level.

Write to me

Are you experiencing any problems using VM Overrides and DRS affinity rules or anything else related to this how-to guide? Just write to me in the comments below and I’ll be happy to help you out! Call me a freak but I love helping you guys out 🙂
