
The various files that make up a virtual machine reside in folders on what is known as a datastore. Simply put, a datastore is a logical container that abstracts the physical storage layer from both the user and the virtual machine itself.

In Part 1 of this series, we’ll look at the types of physical storage available, some common storage protocols and the four types of datastores you can use in your VMware environments. In Part 2, I’ll show you how to create NFS shares and iSCSI LUNs on Windows Server 2012 and how to mount them as datastores on an ESXi host.

Storage Types

There are two main types of storage you’ll be dealing with when working with VMware: local storage and networked storage.

Local Storage

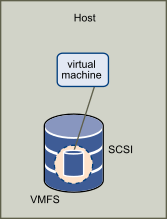

Local storage refers to any storage device that is directly attached (local) to an ESXi host, such as an internal SCSI disk or SSD. Local storage can also be an external storage system connected directly to the ESXi host using protocols such as SAS or SATA.

A VMFS Datastore created on local storage (Source: VMware)

Networked Storage

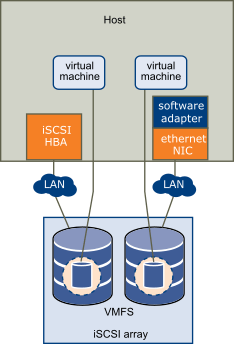

Networked storage, on the other hand, refers to any remote storage repository, typically reachable over a high-speed network. The two main contenders for networked storage are NAS (Network Attached Storage) and SAN (Storage Area Network). Although the line between the two has become somewhat blurred, some fundamental differences remain, including how data is written to and read from disk and how data is moved across the network. In a nutshell, NAS works at the file level while SAN works at the block level. Here you’ll find a list of the differences between the two. Networked storage is, of course, important because it allows you to cluster ESXi hosts, and clustering is what gives you goodies such as HA, DRS and Fault Tolerance.

A VMFS Datastore created on an iSCSI array (Source: VMware)
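To make the file-level versus block-level distinction a little more concrete, here’s a minimal Python sketch. With NAS, the host asks the storage device for a byte range of a named file, and the NAS’s own file system works out where that data lives. With SAN, the host’s file system (VMFS, in VMware’s case) must first work out which blocks hold those bytes and then ask the array for those blocks. The request types, the 512-byte block size and the assumption that the file is stored contiguously are all illustrative, not how any particular product does it.

```python
from dataclasses import dataclass

SECTOR_SIZE = 512  # bytes per logical block (assumed; many arrays also use 4096)


@dataclass(frozen=True)
class FileRead:
    """Hypothetical file-level request (NAS/NFS style): the storage side resolves the path."""
    path: str
    offset: int
    length: int


@dataclass(frozen=True)
class BlockRead:
    """Hypothetical block-level request (SAN/iSCSI style): the host addresses raw blocks."""
    lba: int    # logical block address of the first block
    count: int  # number of blocks to read


def to_block_read(req: FileRead, file_start_lba: int,
                  block_size: int = SECTOR_SIZE) -> BlockRead:
    """Translate a byte range within a file into the blocks that cover it.

    Assumes, for illustration only, that the file is stored contiguously from
    file_start_lba; a real file system such as VMFS looks up the file's extents.
    """
    if req.offset < 0 or req.length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    first = req.offset // block_size                     # block holding the first byte
    last = (req.offset + req.length - 1) // block_size   # block holding the last byte
    return BlockRead(lba=file_start_lba + first, count=last - first + 1)
```

For example, reading 100 bytes at offset 1000 of a file assumed to start at LBA 2048 becomes a request for two blocks starting at LBA 2049, since bytes 1000 to 1099 straddle the second and third 512-byte blocks of the file.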

File-Level Access

NAS operates at the file level using protocols such as NFS and CIFS. In a VMware context, we are only interested in NFS (Network File System) since it is the only file-level protocol supported. NFS was developed by Sun Microsystems back in 1984 to allow computers to access files over a network. What’s more, NFS is a distributed file system, meaning that resources can be accessed concurrently by multiple systems and presented to the user as if they were local. VMware currently supports NFS versions 3 and 4.1. The following table briefly outlines the differences between the two versions.

Block-Level Access

SAN, on the other hand, generally involves a more complex setup in which one or more protocols can be used. The more common protocols are iSCSI (Internet Small Computer Systems Interface) and FCP (Fibre Channel Protocol), the latter generally found only in enterprise setups due to its cost and implementation complexity. In both cases a dedicated network is required, more so where Fibre Channel (FC) is used.

ESXi hosts participating in an FC network are fitted with HBA (Host Bus Adapter) cards that allow them to be “plugged in” to the underlying fibre optic fabric. Note that FC can also run over copper using FCoE (Fibre Channel over Ethernet), which requires converged network adapters (CNAs) and twinaxial cabling, since standard UTP cabling is not supported.

The protocol most commonly used to access networked storage is iSCSI, which sends SCSI commands over an IP network. Since iSCSI runs over IP, you can use run-of-the-mill Ethernet cards to connect an ESXi host to an iSCSI network. That said, specialized iSCSI HBAs with embedded TCP/IP offload capabilities are available if you have cash to spend. These cards are recommended for maximum performance, but if you’re tied down by budget constraints and performance is not that much of an issue, any decent Gigabit Ethernet card (avoid unknown brands and entry-level adapters like the plague) on a dedicated network should suffice.
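Since iSCSI simply carries SCSI commands over TCP/IP, it can help to see what one of those commands looks like on the wire. The Python sketch below builds a SCSI READ(10) command descriptor block (CDB) and wraps it in the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU, following the layout in RFC 3720. It’s a simplified illustration, assuming a read-only command, no header or data digests and a LUN below 256; login, session handling and actually sending the PDU to a target on TCP port 3260 are left out, and the function names are hypothetical.

```python
import struct

READ_10 = 0x28             # SCSI READ(10) operation code
ISCSI_SCSI_COMMAND = 0x01  # iSCSI opcode for a SCSI Command PDU (RFC 3720)


def read10_cdb(lba: int, blocks: int) -> bytes:
    """Build a 10-byte SCSI READ(10) CDB (hypothetical helper name)."""
    if not 0 <= lba <= 0xFFFFFFFF or not 0 <= blocks <= 0xFFFF:
        raise ValueError("READ(10) takes a 32-bit LBA and a 16-bit block count")
    # opcode, flags, LBA (4 bytes), group number, transfer length (2 bytes), control
    return struct.pack(">BBIBHB", READ_10, 0, lba, 0, blocks, 0)


def scsi_command_bhs(cdb: bytes, lun: int, task_tag: int, expected_len: int,
                     cmd_sn: int, exp_stat_sn: int) -> bytes:
    """Wrap a CDB in the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU.

    Simplified sketch: read command, no immediate data, no AHS, no digests, and
    single-level peripheral LUN addressing (so LUNs 0-255 only).
    """
    if len(cdb) > 16 or not 0 <= lun <= 255:
        raise ValueError("CDB must fit in 16 bytes and LUN must be 0-255")
    flags = 0x80 | 0x40 | 0x01  # F (final) | R (read) | ATTR = simple task
    # opcode, flags, 2 reserved bytes, TotalAHSLength
    header = struct.pack(">BBHB", ISCSI_SCSI_COMMAND, flags, 0, 0)
    header += (0).to_bytes(3, "big")         # DataSegmentLength: no data sent with a read
    header += bytes([0, lun]) + bytes(6)     # 8-byte LUN field
    # Initiator Task Tag, Expected Data Transfer Length, CmdSN, ExpStatSN
    header += struct.pack(">IIII", task_tag, expected_len, cmd_sn, exp_stat_sn)
    header += cdb.ljust(16, b"\x00")         # the CDB area is always 16 bytes
    return header
```

As a usage example, `read10_cdb(2048, 8)` asks for eight blocks starting at LBA 2048, and `scsi_command_bhs(cdb, lun=0, task_tag=1, expected_len=8 * 512, cmd_sn=1, exp_stat_sn=1)` produces the header an initiator (the ESXi host) would send to the target for that read, assuming 512-byte blocks.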

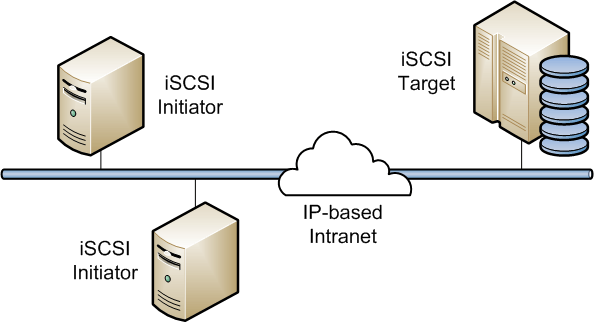

The basic terminology you need to know when setting up and using iSCSI is as follows:

- Initiator – The device that sends out SCSI requests over the network. In our case that would be the ESXi host.

- Target – The device that accepts SCSI requests over the network. In our case that would be the storage unit on which datastores have been created.

- LUN – LUN stands for Logical Unit Number, an identifier assigned to a logical unit on a SCSI device. In terms of networked storage, it’s best to think of a LUN as a volume presented to the initiator.

- IQN – This is short for iSCSI Qualified Name, a unique name that identifies a device, be it an initiator or a target, participating in an iSCSI network.

- Discovery – This is the process through which an initiator acquires a list of available targets and LUNs. You’ll need the IQN or IP address of the target. Likewise, you can optionally add the initiators’ IQNs to the target to grant them access, which is highly recommended. iSCSI typically uses TCP ports 860 and 3260, so make sure that any firewalls sitting between the initiator and target allow traffic through these ports.

A simple iSCSI network (Source: Oracle)
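Here’s a small Python sketch tying these terms together. It parses an IQN into its parts (the format is `iqn.<yyyy-mm>.<reversed domain name>[:<unique name>]`, as defined in RFC 3720), models a target that only admits initiators whose IQNs have been added to it, and checks whether a TCP port such as 3260 is reachable through any firewalls. The `Target` class and the function names are hypothetical, and the regular expression covers only the IQN format, not the other iSCSI name types such as EUI.

```python
import re
import socket
from dataclasses import dataclass, field
from typing import Optional, Set

# iqn.<yyyy-mm>.<reversed domain name of the naming authority>[:<unique string>]
IQN_RE = re.compile(
    r"^iqn\.(\d{4}-(?:0[1-9]|1[0-2]))\.([a-z0-9-]+(?:\.[a-z0-9-]+)*)(?::(.+))?$"
)
ISCSI_PORTS = (860, 3260)  # TCP ports firewalls must let through (per the text above)


@dataclass(frozen=True)
class Iqn:
    date: str                   # a year-month when the naming authority owned the domain
    naming_authority: str       # reversed domain name, e.g. "com.vmware"
    unique_name: Optional[str]  # free-form part chosen by the naming authority


def parse_iqn(name: str) -> Iqn:
    """Parse an iSCSI Qualified Name; IQNs are case-insensitive, so compare in lower case."""
    m = IQN_RE.match(name.strip().lower())
    if m is None:
        raise ValueError(f"not a valid IQN: {name!r}")
    return Iqn(*m.groups())


@dataclass
class Target:
    """Hypothetical target that only admits initiators whose IQNs were added to it."""
    iqn: str
    allowed_initiators: Set[str] = field(default_factory=set)

    def allow(self, initiator_iqn: str) -> None:
        parse_iqn(initiator_iqn)  # reject malformed names up front
        self.allowed_initiators.add(initiator_iqn.strip().lower())

    def accepts(self, initiator_iqn: str) -> bool:
        return initiator_iqn.strip().lower() in self.allowed_initiators


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out (e.g. blocked by a firewall)
        return False
```

In practice you would call something like `port_open("192.168.1.50", 3260)` from a machine on the initiator’s network, where the address is a placeholder for your own target’s IP.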

VMware File System

VMware developed its own clustered file system called VMware Virtual Machine File System, or VMFS for short. I won’t be discussing the technical aspects of VMFS, but you can read up on some of the basics here.

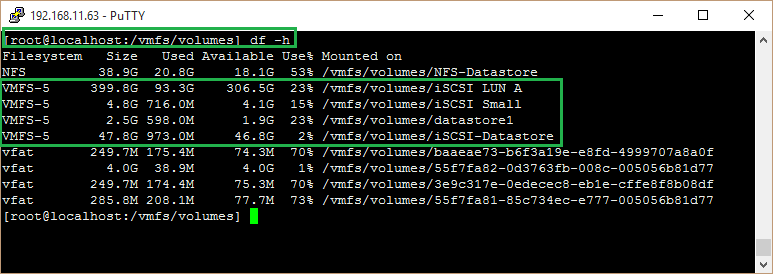

What you need to know is that VMFS is used whenever a datastore is created on local or SAN-based storage. You can verify this either in the vSphere client or by running df -h in an SSH session on an ESXi host, as shown in the following screenshot. The items boxed in green show four VMFS-5 datastores, three of which reside on iSCSI storage and one on a drive local to the ESXi host.

A list of datastores and corresponding file systems
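If you’d rather check this from a script than eyeball the output, the Python sketch below picks the VMFS datastores out of captured `df -h` output. It assumes the first column holds the file system type (such as VMFS-5) and the last column the mount point; the sample text is made up for illustration in that assumed layout, and capturing the output from the host (for example over SSH) is left out.

```python
from typing import List, Tuple

# Made-up sample in the assumed layout: file system type first, mount point last.
SAMPLE = """\
Filesystem   Size   Used Available Use% Mounted on
VMFS-5     146.5G  12.3G    134.2G   8% /vmfs/volumes/datastore1
VMFS-5     499.8G 201.0G    298.8G  40% /vmfs/volumes/iscsi-ds01
NFS        500.0G 120.0G    380.0G  24% /vmfs/volumes/nfs-ds01
vfat       249.7M 146.2M    103.5M  59% /vmfs/volumes/5a1b2c3d-11aa22bb-3344-001122334455
"""


def datastores_by_fs(df_output: str) -> List[Tuple[str, str]]:
    """Return (file system type, mount point) pairs from `df -h` style output."""
    rows = []
    for line in df_output.splitlines()[1:]:  # skip the header row
        parts = line.split(None, 5)          # keep the mount point whole even if it has spaces
        if len(parts) == 6:
            rows.append((parts[0], parts[5]))
    return rows


def vmfs_datastores(df_output: str) -> List[str]:
    """Mount points whose file system type starts with "VMFS" (e.g. VMFS-3, VMFS-5)."""
    return [mount for fs, mount in datastores_by_fs(df_output)
            if fs.upper().startswith("VMFS")]
```

Run against the sample, `vmfs_datastores(SAMPLE)` keeps the two VMFS-5 mount points and skips the NFS and vfat entries.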

At the time of writing, the VMFS versions you’re likely to come across are VMFS3 and VMFS5 (there is no VMFS4). The following lists the main features of VMFS5.

- Greater than 2TB storage devices for each VMFS5 extent.

- Support of virtual machines with large capacity virtual disks, or disks greater than 2TB.

- Increased resource limits such as file descriptors.

- Standard 1MB file system block size with support of 2TB virtual disks.

- Greater than 2TB disk size for RDMs.

- Support of small files of 1KB.

- Ability to open any file located on a VMFS5 datastore in a shared mode by a maximum of 32 hosts.

- Scalability improvements on storage devices that support hardware acceleration.

- Default use of ATS-only locking mechanisms on storage devices that support ATS.

- Ability to reclaim physical storage space on thin provisioned storage devices.

- Online upgrade process that upgrades existing datastores without disrupting hosts or virtual machines that are currently running.
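The ATS item deserves a word of explanation. ATS (Atomic Test and Set, carried out with the SCSI COMPARE AND WRITE command) lets a host update a single on-disk lock record in one atomic operation instead of placing a SCSI reservation on the entire LUN, so hosts only contend when they want the same file. The toy Python model below illustrates the idea; the class and function names are hypothetical, and a `threading.Lock` stands in for the atomicity that the storage array guarantees.

```python
import threading
from typing import Dict, Optional


class ToyLun:
    """Toy LUN holding per-file lock records (file name -> owning host, or None if free)."""

    def __init__(self) -> None:
        self._records: Dict[str, Optional[str]] = {}
        self._atomic = threading.Lock()  # stands in for the array's atomic guarantee

    def compare_and_write(self, record: str, expected: Optional[str],
                          new: Optional[str]) -> bool:
        """Atomically write `new` only if the record currently holds `expected`."""
        with self._atomic:
            if self._records.get(record) != expected:
                return False  # miscompare: another host changed the record first
            self._records[record] = new
            return True


def acquire(lun: ToyLun, name: str, host: str) -> bool:
    """Take the lock on file `name` if it is free (its record holds None)."""
    return lun.compare_and_write(name, None, host)


def release(lun: ToyLun, name: str, host: str) -> bool:
    """Free the lock, but only if `host` is the current owner."""
    return lun.compare_and_write(name, host, None)
```

Note that hosts only compete for the same record: while esxi01 holds the lock on vm1.vmdk, esxi02 can still lock vm2.vmdk on the same LUN, which a whole-LUN reservation would not allow.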

Datastore Types

We now turn our attention to the types of datastores available:

- VMFS Datastores – As already mentioned, VMFS is a file system used to format any datastores created on local and SAN based storage.

- NFS Datastores – These are created on an NFS volume residing on a NAS device.

- Virtual SAN Datastores – Using local storage, including directly attached disk arrays, you can now create a clustered datastore that spans several ESXi hosts, avoiding the need for networked storage. VSAN has been available since vSphere 5.5 and is currently at version 6.2. I wrote about VSAN a few months back, explaining how to set it up on a nested vSphere environment for testing purposes.

- VVOL Datastores – Virtual Volume datastores, introduced with vSphere 6.0, provide a whole new level of abstraction, doing away with LUNs and VMFS. There is, however, one important dependency: a storage array must be used, and it must be VASA-compliant for this type of datastore to be created. I won’t be covering these today, mainly because I haven’t yet had the opportunity to play around with them. This paper, however, is an excellent starting point which I encourage you to read.
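The four types can be summed up as a simple decision, sketched below in Python. It’s a simplification based on the descriptions above: the access labels and flag names are hypothetical, and it ignores details such as which protocols a given array uses to present Virtual Volumes.

```python
from enum import Enum


class DatastoreType(Enum):
    VMFS = "VMFS"
    NFS = "NFS"
    VSAN = "Virtual SAN"
    VVOL = "VVOL"


# Hypothetical labels for storage that VMFS can be created on (local or SAN-based)
BLOCK_ACCESS = {"local", "iscsi", "fc", "fcoe"}


def datastore_type(access: str, pooled_local_disks: bool = False,
                   vasa_virtual_volumes: bool = False) -> DatastoreType:
    """Pick the datastore type matching how the storage is presented.

    access: "local", "iscsi", "fc", "fcoe" or "nfs" (hypothetical labels).
    pooled_local_disks: local disks from several hosts pooled into one clustered datastore.
    vasa_virtual_volumes: a VASA-compliant storage array serving Virtual Volumes.
    """
    access = access.lower()
    if vasa_virtual_volumes:
        if access == "local":
            raise ValueError("Virtual Volumes require a storage array")
        return DatastoreType.VVOL
    if pooled_local_disks:
        if access != "local":
            raise ValueError("VSAN pools disks that are local to each host")
        return DatastoreType.VSAN
    if access == "nfs":
        return DatastoreType.NFS
    if access in BLOCK_ACCESS:
        return DatastoreType.VMFS
    raise ValueError(f"unknown access type: {access!r}")
```

For instance, `datastore_type("iscsi")` yields `DatastoreType.VMFS`, while `datastore_type("local", pooled_local_disks=True)` yields `DatastoreType.VSAN`.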

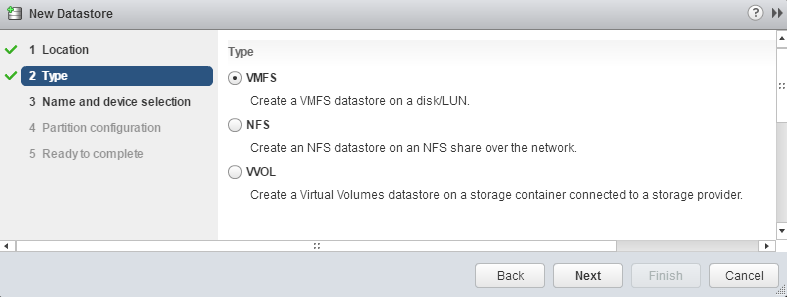

Creating a new Datastore using the vSphere Web Client

Summary

So far we’ve covered the following:

- 2 types of storage – Local and Networked Storage

- 2 main file systems – NFS and VMFS (v3 and v5). Strictly speaking, there’s a third, the VSAN file system, which, as you’ve guessed, has been used for VSAN datastores since vSphere 6.0.

- 2 main storage protocols – iSCSI and NFS (v3 and v4.1)

- 4 types of datastores – VMFS, NFS, VSAN and VVOL

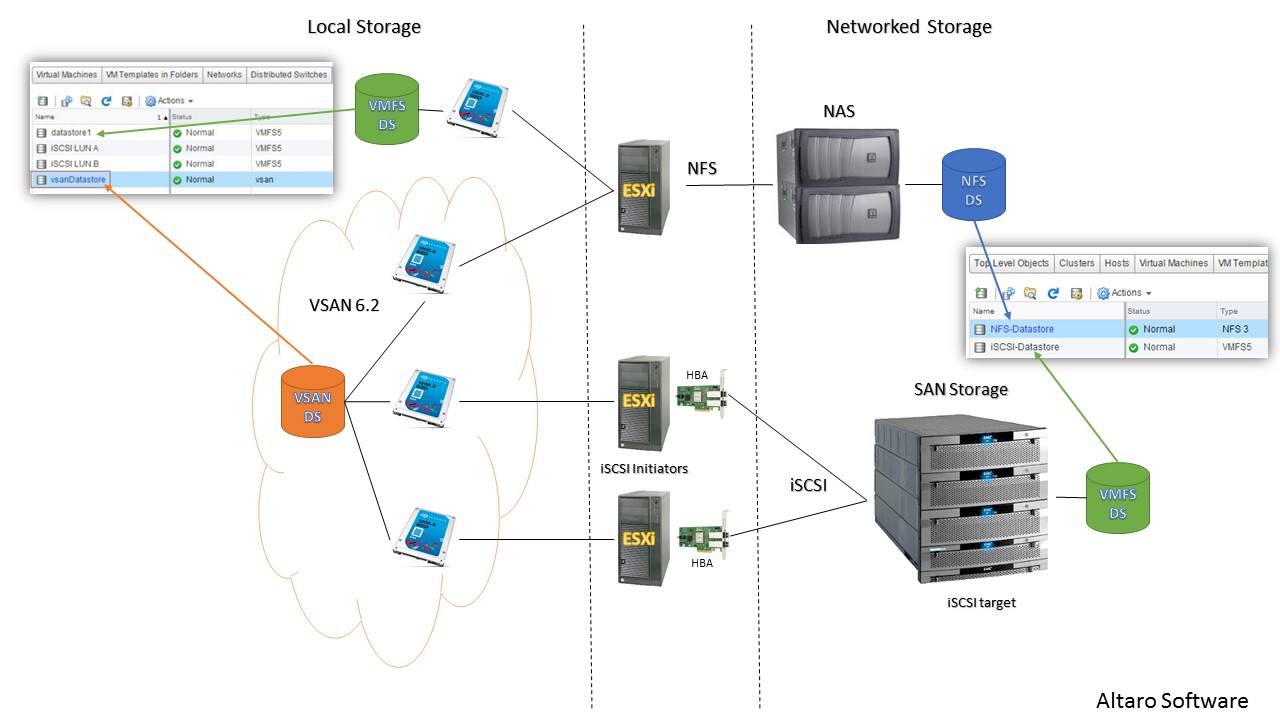

Finally, the diagram below, which I put together, summarizes the essential information presented thus far. I also included a couple of vSphere Web Client screenshots to illustrate how the various datastores are displayed in the client.

A diagram of the available types of physical storage and Datastores when working with VMware

Conclusion

In the second part of the series, I’ll show you how to create NFS shares and iSCSI LUNs on Windows Server 2012, as well as how to mount them on an ESXi host as datastores. Make sure to tune in next week for Part 2.

