
In previous posts, we covered how to get started using Packer in a VMware environment. We also reviewed how to configure our Packer builds to run Windows updates automatically, so that our templates carry the latest OS patches every time (I cannot tell you how much time this saves on patching). Now we want to take it a step further and add what we’ve created to an Azure DevOps pipeline. Here are a few of the many benefits of running our Packer build in a pipeline rather than as a scheduled task on a server:

- Build Success/Failure analytics – Azure DevOps provides built-in metrics and reporting on build failures. We can configure an email notification that fires every time our build fails. We could do the same with a scheduled task, but it would require a lot more elbow grease.

- Source Code Integration – Our Packer build now integrates directly with our source code. Azure DevOps pulls the code straight from the repo and executes it, which lets us change and test our Packer build configuration much faster, especially if we enable Continuous Integration on the pipeline so that our Packer config is deployed as soon as a change is committed to source control.

- Agile Packer Build Workflows – Integrating our Packer build into a pipeline opens up new levels of automation. We can react to build failures, for example by creating a ticket in our company ticketing system so that an engineer looks at it. We can even add another stage after a successful build where we deploy a test server from the template and scan it with a vulnerability scanner like OpenVAS, Nessus, or Nexpose. The possibilities are nearly endless, which is why pipelines are so powerful and widely talked about today.

Setting Up Azure DevOps On-Prem Agent

To run Azure Pipelines on-premises, we need to build a server or container and install the Azure DevOps Agent on it. This server lets the pipeline perform tasks on-premises, which we need in order to run our Packer build against our VMware environment. I have a post that already contains the instructions for setting this up on a Windows Server; just follow the steps under the “Setting up Azure DevOps Agent” section. You will need a Windows Server that can reach your vCenter server over the network.

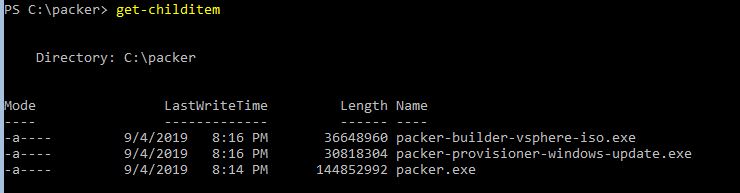

We will also need to install Packer, the vsphere-iso plugin, and the Windows Update provisioner by placing all three executables in one folder. In my example, I place them in C:\Packer. You can find the download location for each one in my previous two Packer posts, mentioned at the beginning of this article.

Then we need to add that folder to the system PATH environment variable so that the agent can find the Packer executable. From an elevated PowerShell prompt, use the following syntax:

setx PATH "$env:path;C:\Packer" -m

I would highly recommend rebooting the Azure DevOps Agent server after getting Packer configured. The agent process reads PATH when it starts, and I’ve had to troubleshoot several failed Packer builds where the Packer executable could not be found when running the pipeline; a reboot of the agent server fixed the issue.
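A quick way to confirm that the agent can resolve the Packer executable after the reboot is a throwaway pipeline that only prints the Packer version. This is a minimal sketch, assuming the agent is registered in the pool named Default as in the rest of this post; the display name is just an illustrative label.

```yaml
# Throwaway check: the step fails if packer.exe is not on the agent's PATH.
trigger: none            # no CI trigger; queue this pipeline manually

pool:
  name: Default          # assumed: the self-hosted pool that holds the on-prem agent

steps:
- script: packer version
  displayName: 'Verify Packer is on PATH'
```

If this step fails with a "not recognized" style error, the PATH change has most likely not reached the agent process yet.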

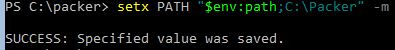

Azure DevOps Repo

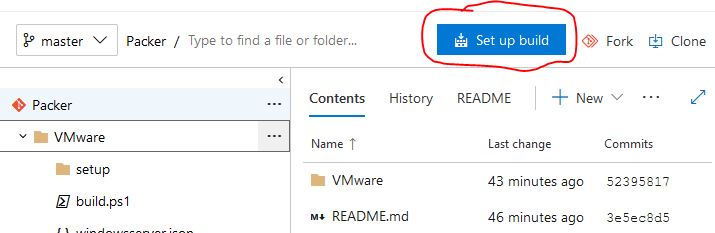

Now that we have our Azure DevOps Agent server configured, we’ll go ahead and create a repo for our Packer build configuration. I have an Azure repo set up called “Packer” with all the configuration files needed for our Packer build.

Creating the Packer Build

Now that we have our agent set up, we can create our build. You may have heard developers talk about build and release. The build is typically the stage where we are “building” our application and compiling our code. With infrastructure-as-code tools like Terraform and Packer, however, the build phase can be pretty small, since we are building infrastructure and have nothing to compile. In our example, the build phase simply copies our files from source control and publishes them as an artifact. An artifact is a temporary place to store our configuration files, which we can then retrieve and deploy during the release phase, the phase where we will actually run our Packer config.

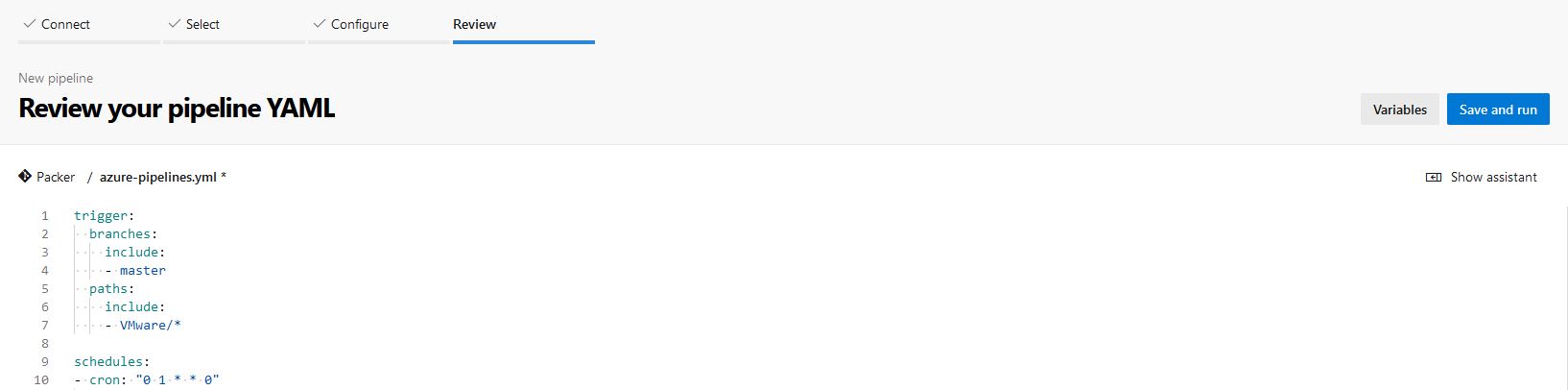

One thing to note is that Azure DevOps lets you define builds in YAML. This is awesome because it allows us to whip out these build configs in a more automated fashion. We can even create YAML templates that take parameters from other YAML files, but that’s a topic for another time. We will create our build from a YAML file with two additional settings. First, we want our Packer build to run every week to pick up the latest OS updates and whatever other components we want to update, so I have included a schedules section that tells the build to run every Sunday at 1 AM (Azure DevOps evaluates cron schedules in UTC). Second, I have included a trigger section that enables continuous integration for the VMware folder, which means that any commit to a file under the VMware folder on master automatically triggers a build. This is very useful for testing new builds, but if you’re highly dependent on your templates you may want to reserve this trigger for a “development” Packer build:

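    # CI trigger: commits to master that touch the VMware folder start a build
    trigger:
      branches:
        include:
        - master
      paths:
        include:
        - VMware/*

    # Weekly schedule: Sundays at 01:00; always: true runs even without new commits
    schedules:
    - cron: "0 1 * * 0"
      displayName: Weekly Sunday build
      branches:
        include:
        - master
      always: true

    pool:
      name: Default

    steps:
    # Stage the Packer files from the VMware folder
    - task: CopyFiles@2
      displayName: 'Copy Packer Files to Artifacts'
      inputs:
        SourceFolder: VMware
        TargetFolder: '$(Build.ArtifactStagingDirectory)'
        cleanTargetFolder: true

    # Publish the staged files as the PackerConfig artifact
    - task: PublishPipelineArtifact@1
      inputs:
        targetPath: '$(Build.ArtifactStagingDirectory)'
        artifact: PackerConfig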
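If you do keep the CI trigger for a “development” build only, the production counterpart could look roughly like the sketch below. It is the same pipeline with the CI trigger switched off via `trigger: none`, so only the weekly schedule starts it. The split into two pipelines is my assumption about how you might organize this, not something the setup requires.

```yaml
# Production variant (sketch): schedule only, commits never start this pipeline.
trigger: none

schedules:
- cron: "0 1 * * 0"            # Sundays at 01:00 UTC
  displayName: Weekly Sunday build
  branches:
    include:
    - master
  always: true                 # run even if nothing changed since the last run

pool:
  name: Default

steps:
- task: CopyFiles@2
  displayName: 'Copy Packer Files to Artifacts'
  inputs:
    SourceFolder: VMware
    TargetFolder: '$(Build.ArtifactStagingDirectory)'
    cleanTargetFolder: true

- task: PublishPipelineArtifact@1
  inputs:
    targetPath: '$(Build.ArtifactStagingDirectory)'
    artifact: PackerConfig
```

The rest of this walkthrough continues with the original pipeline, the one that has both the CI trigger and the schedule.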

We’ll copy the build pipeline YAML above (the one with both the CI trigger and the weekly schedule) and navigate to the Packer repo we created. There, we click Set Up Build to create a build from this repo:

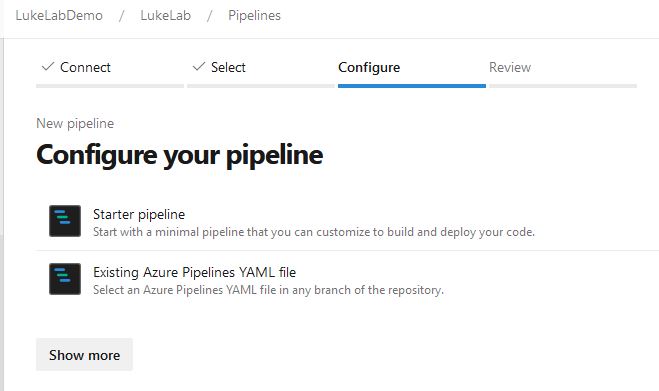

Select the option for Starter Pipeline:

Paste in the YAML code we copied and select Save and Run:

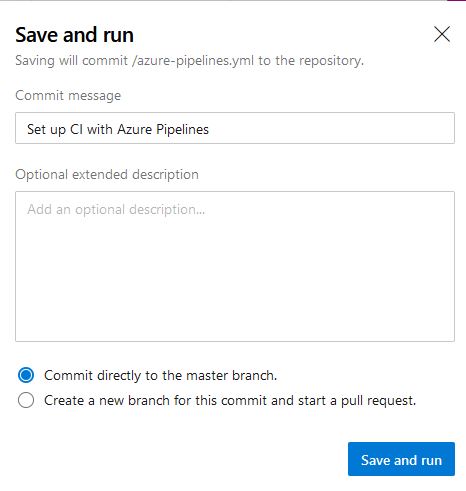

Keep the option to Commit directly to the master branch. Essentially, we are saving our pipeline YAML to our Packer repo, which provides a variety of benefits, including the ability to track changes to the pipeline in source control. Click Save and Run to continue:

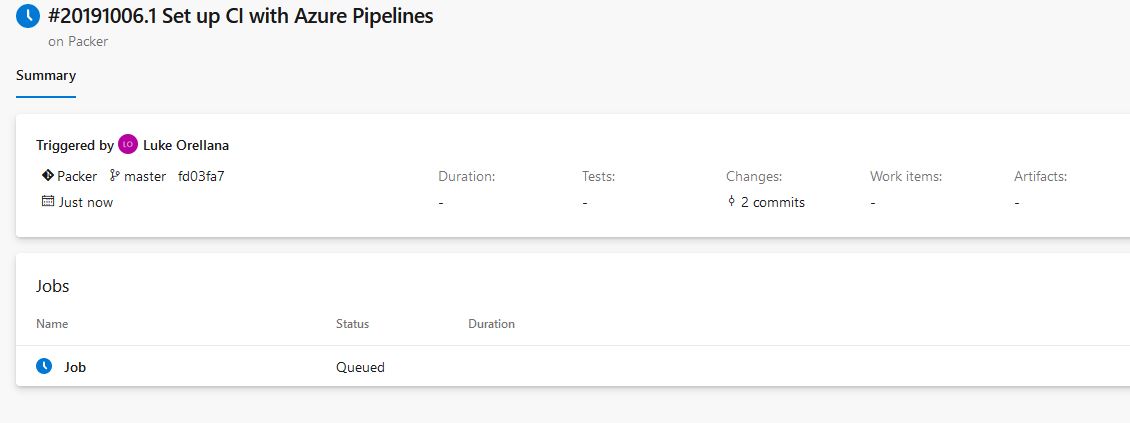

The job will be set up and queued. Once it completes, we will get a successful status message:

Now that we are done setting up the build, we need to set up a variable group to use with our Packer release.

Creating the Variable Group

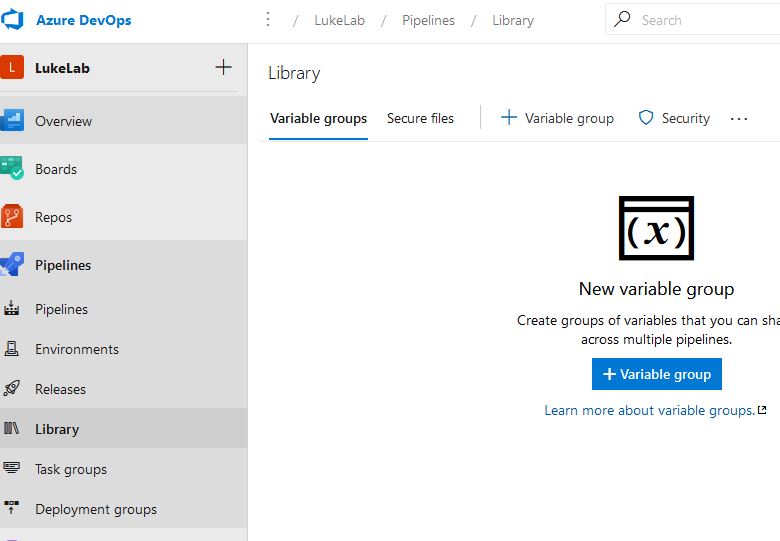

A variable group is a collection of variables that are securely stored in Azure DevOps for use within pipelines. There are many additional features we could use here, like storing our passwords in Azure Key Vault and then calling them from our pipeline. However, for simplicity’s sake, we will just create a simple variable group with our passwords. To get started, select Library under the Pipelines section. Then select +Variable Group:

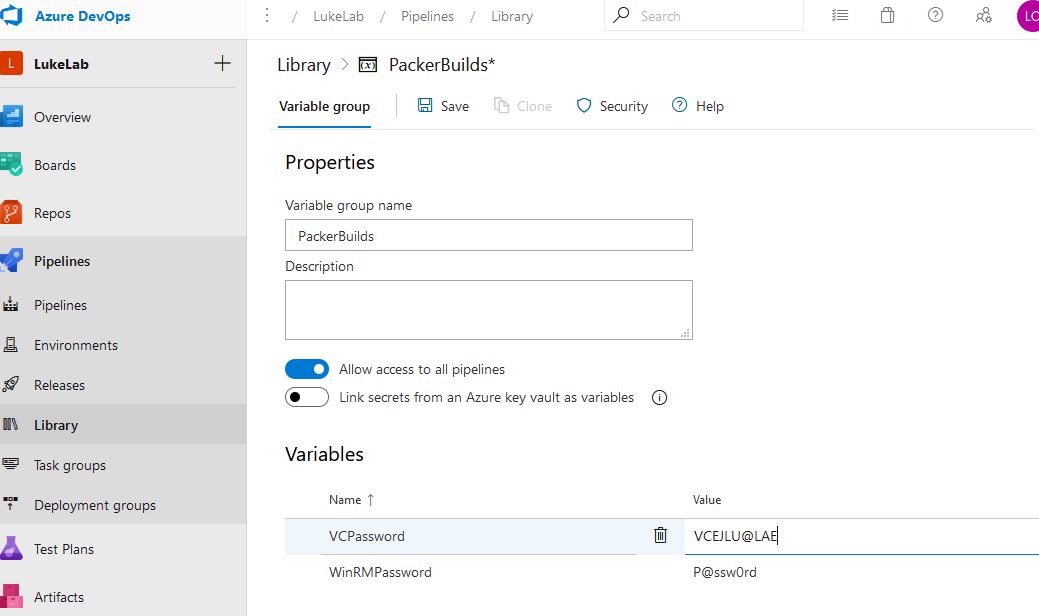

We will name our variable group “PackerBuilds” and add two variables: VCPassword (the password for vCenter) and WinRMPassword (the password for connecting via WinRM). Click the padlock icon next to each value to change the variable type to secret. Don’t worry about the values showing in clear text while you type: once we click Save, secret values are masked and cannot be displayed again.
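For reference, a YAML pipeline can consume the same variable group. The sketch below is an illustration rather than part of the setup in this post: it links the “PackerBuilds” group and passes both secrets to `packer validate`. Secret variables are not exposed to scripts as environment variables automatically, so they are mapped explicitly under `env`. The environment variable names and the assumption that windowsserver.json sits in the VMware folder of the repo are mine.

```yaml
trigger: none

variables:
- group: PackerBuilds          # the variable group created above

pool:
  name: Default

steps:
# Validate the template with the secrets supplied as Packer user variables.
# VC_PASSWORD and WINRM_PASSWORD are hypothetical names chosen for this sketch.
- script: packer validate -var "VCPassword=%VC_PASSWORD%" -var "WinRMPassword=%WINRM_PASSWORD%" windowsserver.json
  displayName: 'Validate Packer template'
  workingDirectory: VMware     # assumed location of windowsserver.json in the repo
  env:
    VC_PASSWORD: $(VCPassword)
    WINRM_PASSWORD: $(WinRMPassword)
```

The first run of a YAML pipeline that references a variable group may ask you to authorize access to the group. Passwords containing characters that cmd.exe treats specially, such as `%` or `"`, may need different handling than this simple substitution.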

Now that we have our variables we can finally build the release.

Creating the Packer Release

As I stated previously, the release phase is the part where we actually deploy our Packer config. At the time of writing, there is no YAML support for classic Releases in Azure DevOps, so we will need to build each step using the GUI. YAML support has been confirmed as coming in the future, which is great, because it will make releases much easier to create and to automate.
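Azure Pipelines has since added multi-stage YAML pipelines, so the release phase described in the GUI steps below could also be expressed as a second stage. The following is a rough sketch under several assumptions, not a drop-in replacement: it calls the Packer CLI from a script step instead of using the Build Machine Image task, the stage, job, and environment variable names are made up for illustration, and it assumes windowsserver.json sits at the top of the published VMware folder.

```yaml
trigger: none

variables:
- group: PackerBuilds

stages:
- stage: Build
  displayName: 'Publish Packer config'
  jobs:
  - job: Publish
    pool:
      name: Default
    steps:
    # Publish the VMware folder as the PackerConfig artifact
    - publish: '$(Build.SourcesDirectory)/VMware'
      artifact: PackerConfig

- stage: PackerBuild
  displayName: 'Packer Build'
  dependsOn: Build             # runs only after the Build stage succeeds
  jobs:
  - job: RunPacker
    pool:
      name: Default
    steps:
    - checkout: none           # the artifact is all this job needs
    - download: current
      artifact: PackerConfig   # lands in $(Pipeline.Workspace)/PackerConfig
    - script: packer build -var "VCPassword=%VC_PASSWORD%" -var "WinRMPassword=%WINRM_PASSWORD%" windowsserver.json
      displayName: 'Run Packer build'
      workingDirectory: '$(Pipeline.Workspace)/PackerConfig'
      env:
        VC_PASSWORD: $(VCPassword)
        WINRM_PASSWORD: $(WinRMPassword)
```

Running Packer with the artifact folder as the working directory is intended to let the template find its supporting files through relative paths, which is the same problem the copy-to-root step in the GUI release works around.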

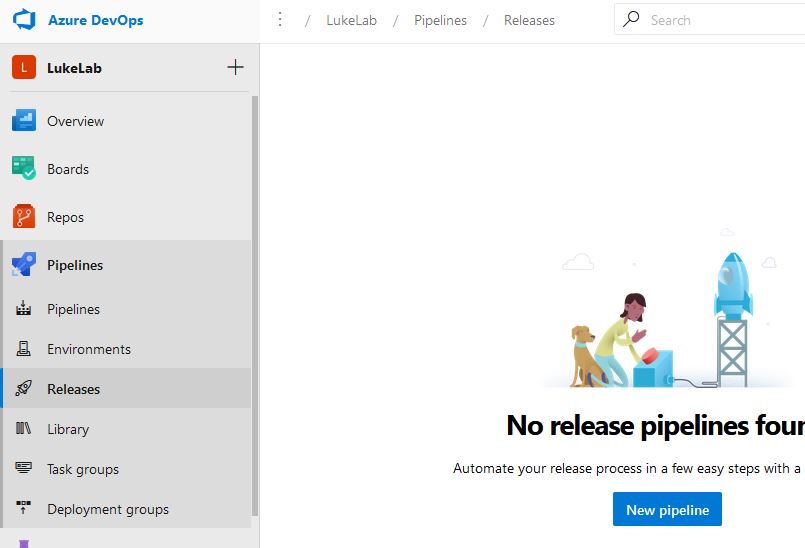

To get started, in Azure DevOps select the Releases section under Pipelines. Then select New Pipeline:

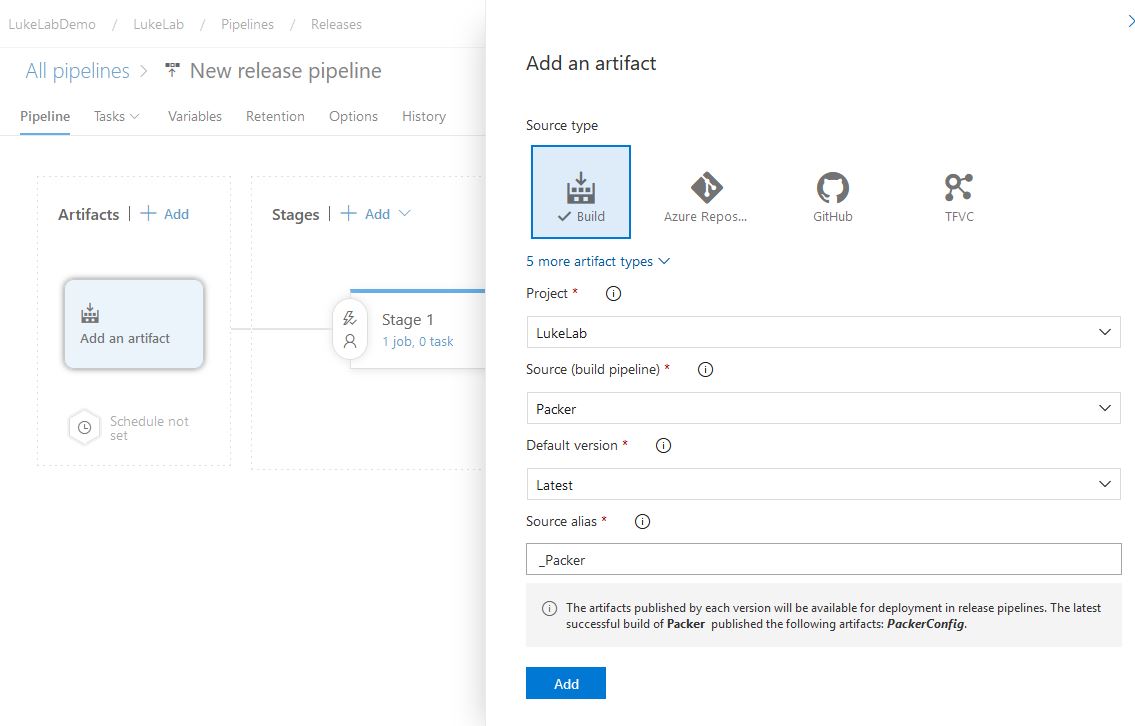

Select Empty Job to get started for now. Then click Add an Artifact, since we need to select the artifact that our build created. Choose Build as the source type and select the Packer build pipeline that we just created. Click Add to finish:

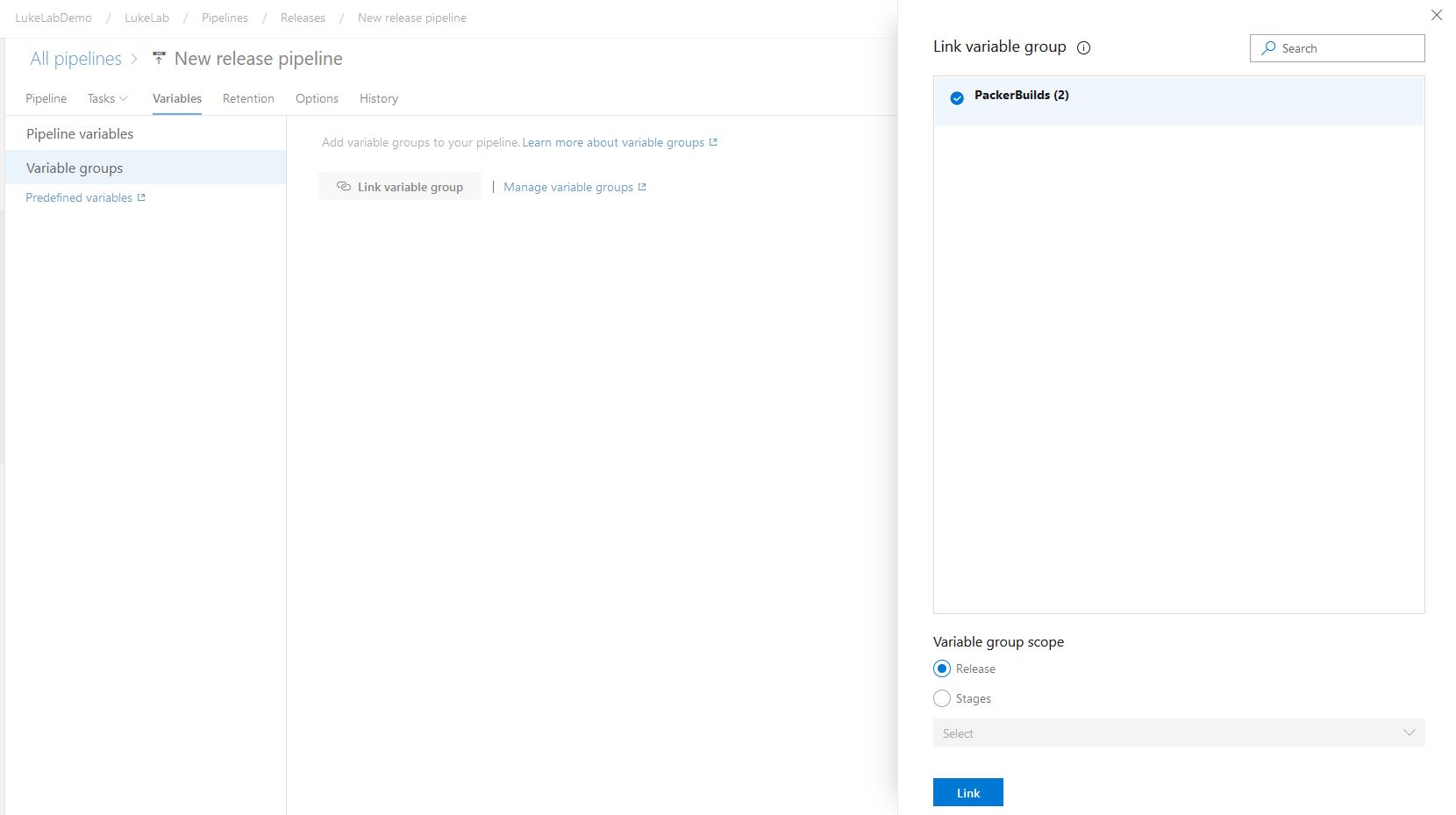

Under the Variables tab, select Variable Groups and then Link variable group. Select the “PackerBuilds” group we previously created and then select Link:

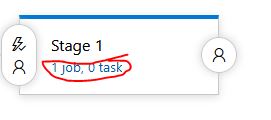

Next click on the link for 1 job, 0 task under Stage 1:

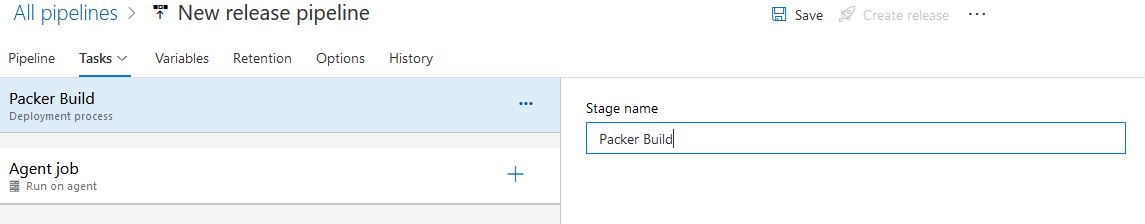

We will name our Stage “Packer Build” since this is the stage where we will be deploying our Packer config:

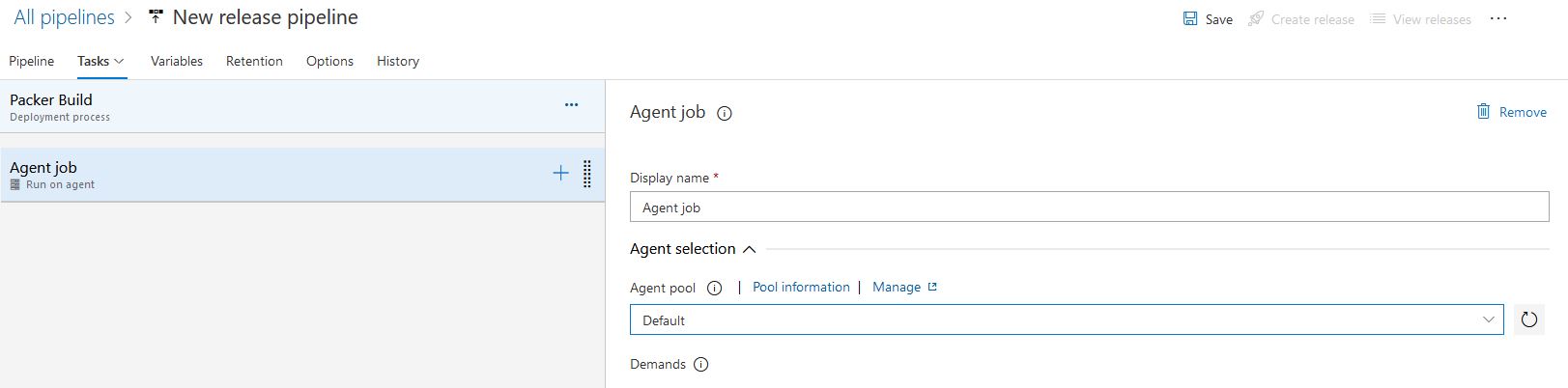

Select the Agent Job and then, under Agent Pool, select the pool that contains your on-premises server. In my example, the Default pool contains my on-premises server with the Azure DevOps Agent installed:

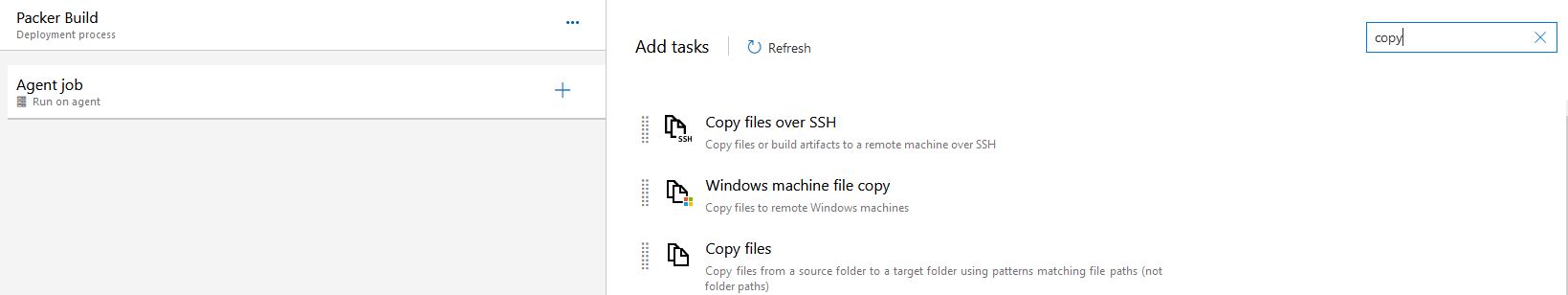

Select the + sign to add our first task, which will be a Copy Files task. Search for Copy in the search bar and select Copy Files:

Essentially, we need to copy our Packer configuration files directly into the root of the working directory. This is because of a limitation of the Packer task combined with the fact that our build uses many additional files besides the .json file. This is the easiest workaround that I’ve found so far. The source and target folders look like the following:

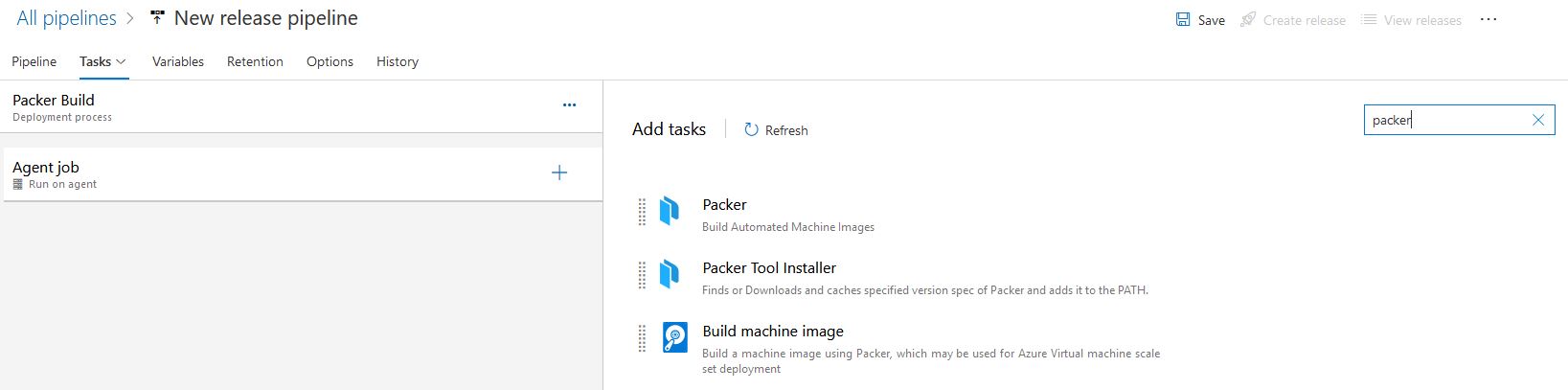

Next, click the + sign again to add our Packer task. Search for “packer” in the search bar and select Build Machine Image:

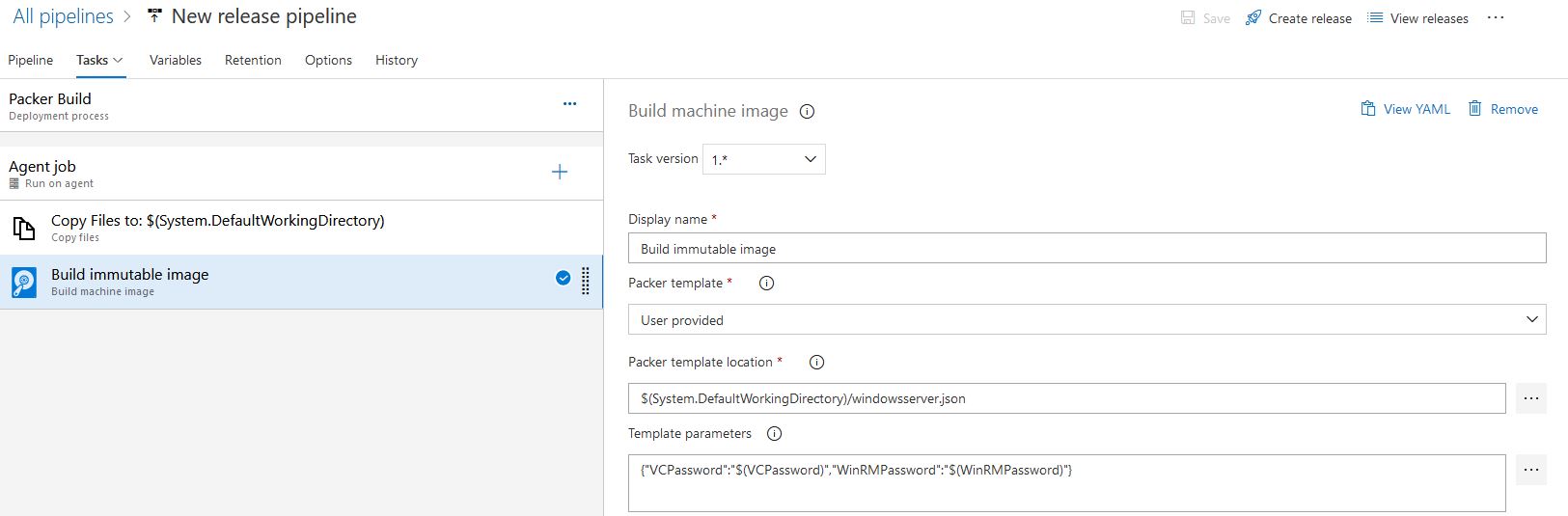

Now we will select “User-Provided” in the Packer Template dropdown and set the Packer Template Location to “$(System.DefaultWorkingDirectory)/windowsserver.json”, since we copied all of our Packer files to the root of this directory. Lastly, we need to connect the variables in our Packer configuration to the values from our variable group. Pipeline variables are referenced in the “$(VariableName)” format, so the template parameters look like the following:

{"VCPassword":"$(VCPassword)","WinRMPassword":"$(WinRMPassword)"}

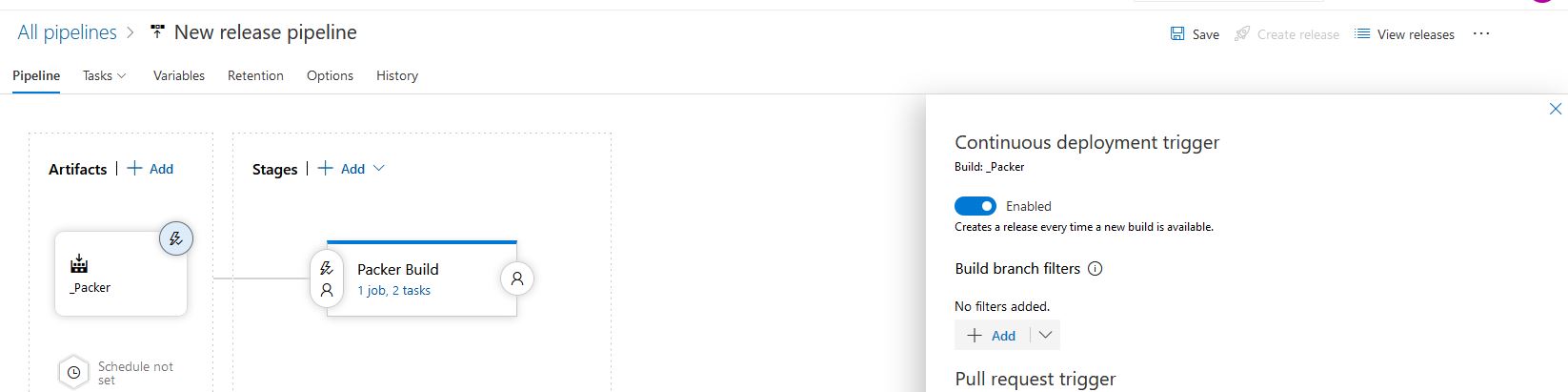

Go back to the Pipeline tab and select the lightning bolt on the artifact. Select the option to enable the Continuous Deployment trigger. This deploys our Packer build every time a new build artifact is available. Remember that we previously configured our build to run every Sunday at 1 AM and whenever a new commit is made:

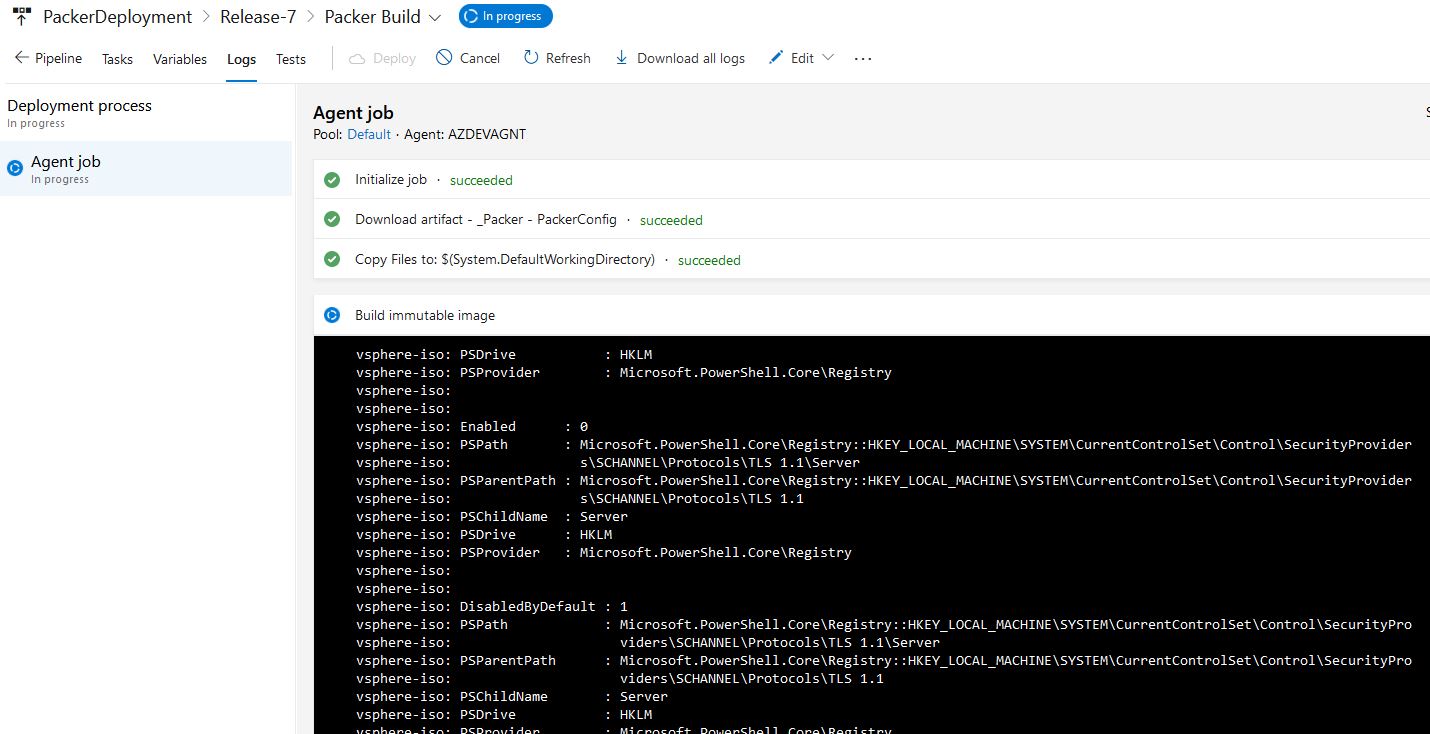

Select Save to save our release pipeline. Now we can run our build again and watch the build and release pipelines run. Once the build completes successfully, the release pipeline runs automatically because we enabled Continuous Deployment. We get a detailed log of what is occurring in our Packer build:

See it in Action

This is a nice start for setting up a basic Azure DevOps pipeline for Packer. We could add extra stages to the release pipeline to perform various security and stability tests against our template image. We could also add an Azure or AWS template build to our Packer configuration. With the rise of multi-cloud, we are starting to see even more benefits from tools like Packer and Terraform, where we can re-use the same process or code to deploy and manage infrastructure both on-premises and in the cloud. Let me know in the comments below what you’re doing with Packer and Azure DevOps.
