
New and exciting software technologies such as Artificial Intelligence (AI) and Machine Learning (ML) have emerged on the scene. These technologies enable businesses to do things considered science fiction only a few years ago. They also bring new software, hardware, and virtualization challenges, because AI and ML use cases depend on discrete hardware devices attached to physical servers in the environment.

In August 2019, VMware acquired Bitfusion, a pioneer in virtualizing hardware accelerators such as Graphics Processing Units (GPUs). What is Bitfusion? How does it allow organizations to take full advantage of hardware acceleration in new ways? Does it work with both traditional workloads and modern workloads such as containers? Why do you need a GPU acceleration platform? This post looks at VMware Bitfusion and how it helps your business use GPU processing more efficiently.

What computing challenge exists for organizations today?

To understand the problem that Bitfusion helps organizations solve, we need to look at how organizations use applications and data. For as long as computers have existed, machines that can make intelligent decisions have been the holy grail of computing. As computing power and the sheer amount of data have grown exponentially, Artificial Intelligence (AI) and Machine Learning (ML) have matured in recent years into real, viable technologies. Organizations can now apply them to real-world use cases.

With AI and ML spreading across industries, organizations face the pressing challenge of optimizing their computational resources to keep pace with these applications. As AI and ML become integral to business operations, using hardware accelerators such as GPUs efficiently becomes paramount. Traditional computing architectures often fall short here, leading to poor performance and wasted resources. VMware Bitfusion addresses this by virtualizing hardware accelerators and providing a shared pool of GPU resources, so organizations can run AI and ML workloads more efficiently and scale them more easily. Because it integrates with existing virtualized environments, businesses can consume GPU processing with much greater flexibility.

If you have used or know of Apple’s Siri, Microsoft Cortana, Google Assistant, Facebook, autonomous vehicles, and many other powerful technology solutions, you have seen AI and ML in action. However, AI and ML are no longer limited to hyper-scalers like Amazon, Google, and Microsoft. Small and medium-sized businesses (SMBs) and enterprise organizations can now use these technologies to drastically improve internal processes, software solutions, and many other areas. Businesses use AI and ML heavily in cybersecurity, logistics, and statistical analysis.

Machine Learning (ML) is a subset of AI. It gives machines the ability to access data and learn from it on their own, using advanced computational algorithms rather than explicitly programmed rules. Machine learning now drives tremendous innovation in products that perform core business functions, allowing businesses to operate with better efficiency, accuracy, effectiveness, and agility than before. Much of today’s machine learning is built on “neural networks”: layers of interconnected artificial neurons trained on the vast amounts of data available to most organizations. The idea is loosely modeled on how the human brain retrieves, stores, and learns information. Machine learning with neural networks has produced impressive capabilities in machine intelligence.

One of the challenges with AI and ML is that they require massive amounts of computing power to analyze and model the data that creates the “intelligence” these technologies provide. Traditional x86 server processors struggle to meet these demands. A paradigm shift in AI and ML has been the use of Graphics Processing Units (GPUs). Modern GPUs run thousands of operations in parallel, which suits the matrix math at the heart of neural networks. AI and ML applications can now offload processing to these GPUs, unlocking a tremendous amount of computing potential and freeing up CPUs for other tasks and processes.

Even when organizations provide modern GPUs for AI and ML offloading, implementing GPU resources cost-effectively and efficiently can be challenging. Often, GPUs end up statically allocated to specific users or applications. A statically allocated GPU sits idle whenever its owner is not using it, so the organization never realizes the GPU’s full processing potential. Many larger GPU deployments also do not share or pool GPU servers, which makes the implementation even less efficient.

What challenges surface with this “traditional” deployment of GPU processing across the environment?

- Physical and thermal restrictions limit the density of GPUs in the environment

- Increased cost

- Power supply limitations

- Limited scalability

- Multi-tenancy capabilities are limited

- Application performance suffers

What is VMware vSphere Bitfusion?

VMware vSphere Bitfusion is VMware’s solution to the GPU challenges listed above. Bitfusion lets organizations do with physical GPUs what ESX did for physical server resources. It virtualizes hardware accelerators such as GPUs and presents them as a pool of shared, network-accessible GPU resources. With this pool of virtualized GPU processing power, Bitfusion meets the demands of today’s artificial intelligence (AI) and machine learning (ML) workloads far more efficiently and effectively.

VMware Bitfusion provides a complete management solution for GPU resources in the environment. Its management features include monitoring the health, efficiency, and availability of GPU servers, as well as client consumption. Administrators can also assign quotas and time limits. Bitfusion supports leading AI frameworks, including PyTorch and TensorFlow. It is easy to deploy, and the client can reside in a traditional virtual machine or in a modern workload such as a Docker container.

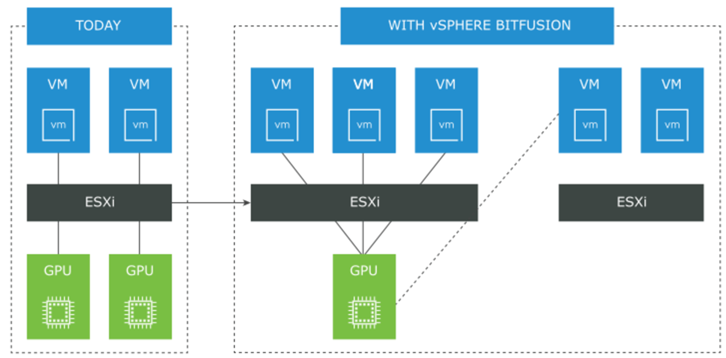

Below is a high-level overview of how VMware Bitfusion changes GPU access in the environment.

VMware vSphere Bitfusion high-level overview

Contrary to what you might expect, the workload VM has no vGPU installed. Instead, it runs the Bitfusion agent, called the FlexDirect agent.
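To make the agent’s role more concrete, here is a minimal Python sketch of the general API-remoting pattern that this kind of interception relies on: a client-side stub looks like a local library, but each call is serialized and sent to a server that executes it and returns the result. Everything here is hypothetical and illustrative (`RemotingStub`, `server_handle`, and the toy `vector_add` function stand in for real GPU API calls). It is not the FlexDirect agent’s actual protocol, and the “network” is an in-process function call rather than TCP/IP or RDMA.

```python
import json
from typing import Any, Callable, Dict, List

# --- Hypothetical "GPU server" side ---
# A toy function standing in for work a remote GPU would perform.
def _vector_add(a: List[float], b: List[float]) -> List[float]:
    return [x + y for x, y in zip(a, b)]

SERVER_FUNCTIONS: Dict[str, Callable[..., Any]] = {"vector_add": _vector_add}

def server_handle(message: str) -> str:
    """Decode a forwarded call, execute it, and encode the result."""
    request = json.loads(message)
    result = SERVER_FUNCTIONS[request["call"]](*request["args"])
    return json.dumps({"result": result})

# --- Hypothetical client-side stub ---
class RemotingStub:
    """Looks like a local library, but forwards every call through `transport`."""

    def __init__(self, transport: Callable[[str], str]) -> None:
        self._transport = transport
        self.calls_forwarded = 0

    def __getattr__(self, name: str) -> Callable[..., Any]:
        # Only invoked for attributes not defined above, i.e. the "API calls".
        def forward(*args: Any) -> Any:
            self.calls_forwarded += 1
            message = json.dumps({"call": name, "args": list(args)})
            return json.loads(self._transport(message))["result"]
        return forward

# The "network" is an in-process function call in this sketch.
gpu = RemotingStub(transport=server_handle)
print(gpu.vector_add([1.0, 2.0], [3.0, 4.0]))  # [4.0, 6.0]
```

The application code only ever calls `gpu.vector_add(...)`; whether the work happens locally or on a remote server is decided by the transport, which is the property that lets a workload VM use GPUs it does not physically contain.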

Bitfusion provides several benefits for running AI and ML applications. These include:

- Organizations can allocate and provide access to GPU resources dynamically. Because Bitfusion virtualizes and abstracts access to GPUs, applications can share GPU resources that are not dedicated to individual machines. These applications run in a configured virtual machine, a container, or another environment.

- Applications can access GPU resources provided by the pool of vSphere Bitfusion servers across the network. An application holds these resources for the length of time allocated to it. Once the application session ends, the allocated GPU resources return to the pool for use by the next application.

- Another interesting capability of Bitfusion is GPU partitioning. Through partitioning, a physical GPU’s total memory can be divided into much smaller fractions, and different applications use these smaller chunks at the same time. Remote access to these shared GPUs works because Bitfusion intercepts API calls (such as CUDA calls) destined for a local GPU accelerator and sends them across the network to the pool of GPU resources.

- It improves the economics of the many AI and ML use cases that do not need an entire GPU. Testing or validating ML algorithms, for example, may need only a fraction or “slice” of a GPU. Bitfusion can assign as little as 1/20 of a physical GPU. This fine-grained approach to managing GPU resources supports more users with the same amount of high-performance GPU hardware, reducing costs and helping to right-size the solution.

- Bitfusion aggregates and pools GPU resources in the environment, and it can also improve the performance of the AI and ML applications sharing that pool. How? The Bitfusion virtualization layer includes runtime optimizations that automatically select and use the best combination of transports, including host CPU copies, PCIe, Ethernet, InfiniBand, GPUDirect, and RDMA. The resulting performance approaches that of natively attached GPUs.
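The pooling, session release, and 1/20 partitioning described in the list can be modeled with a small piece of bookkeeping. The following Python sketch is a toy model under stated assumptions, not Bitfusion’s scheduler: `GPUPool`, `PooledGPU`, and the first-fit placement policy are hypothetical, and a share of a GPU is treated simply as the same fraction of its memory. `Fraction` keeps the 1/20 arithmetic exact.

```python
from dataclasses import dataclass, field
from fractions import Fraction
from typing import Dict, List, Optional, Tuple

MIN_SLICE = Fraction(1, 20)  # smallest GPU share the article cites

@dataclass
class PooledGPU:
    name: str
    memory_gb: int
    free: Fraction = Fraction(1)  # unallocated share of this GPU

@dataclass
class GPUPool:
    gpus: List[PooledGPU]
    leases: Dict[str, Tuple[PooledGPU, Fraction]] = field(default_factory=dict)

    def allocate(self, client: str, share: Fraction) -> Optional[str]:
        """Lease `share` of one GPU to `client` (first fit); None if nothing fits."""
        if share < MIN_SLICE or share > 1 or share % MIN_SLICE != 0:
            raise ValueError("share must be a multiple of 1/20 between 1/20 and 1")
        if client in self.leases:
            raise ValueError(f"{client} already holds a lease")
        for gpu in self.gpus:
            if gpu.free >= share:
                gpu.free -= share
                self.leases[client] = (gpu, share)
                return gpu.name
        return None  # pool exhausted: the caller waits or retries later

    def release(self, client: str) -> None:
        """Session ended: return the client's share to the pool."""
        gpu, share = self.leases.pop(client)
        gpu.free += share

    def memory_gb_for(self, client: str) -> Fraction:
        """Memory a lease represents, assuming share == fraction of GPU memory."""
        gpu, share = self.leases[client]
        return gpu.memory_gb * share

pool = GPUPool([PooledGPU("gpu0", 16), PooledGPU("gpu1", 16)])
print(pool.allocate("notebook-a", Fraction(1, 2)))  # gpu0
print(pool.allocate("notebook-b", Fraction(1, 2)))  # gpu0
print(pool.allocate("training", Fraction(1)))       # gpu1
print(pool.allocate("notebook-c", Fraction(1, 4)))  # None
pool.release("notebook-a")
print(pool.allocate("notebook-c", Fraction(1, 4)))  # gpu0
print(pool.memory_gb_for("notebook-c"))             # 4
```

First-fit placement keeps the example short. The point is the lifecycle: two half-GPU notebooks share one card, the full-GPU job takes the other, and capacity freed by a finished session is immediately reusable.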

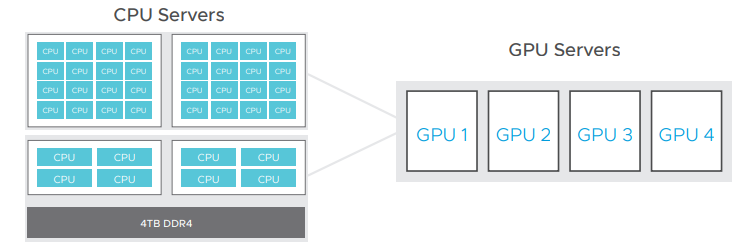

This more efficient use of your GPU environment brings significant benefits:

- 50% less cost per GPU by using smaller GPU servers

- Scalable to multiple GPU servers

- 4X more AI application throughput

- Supports GPU applications with high storage and CPU requirements

- Scalable: less global traffic

- Composable: add resources as you scale

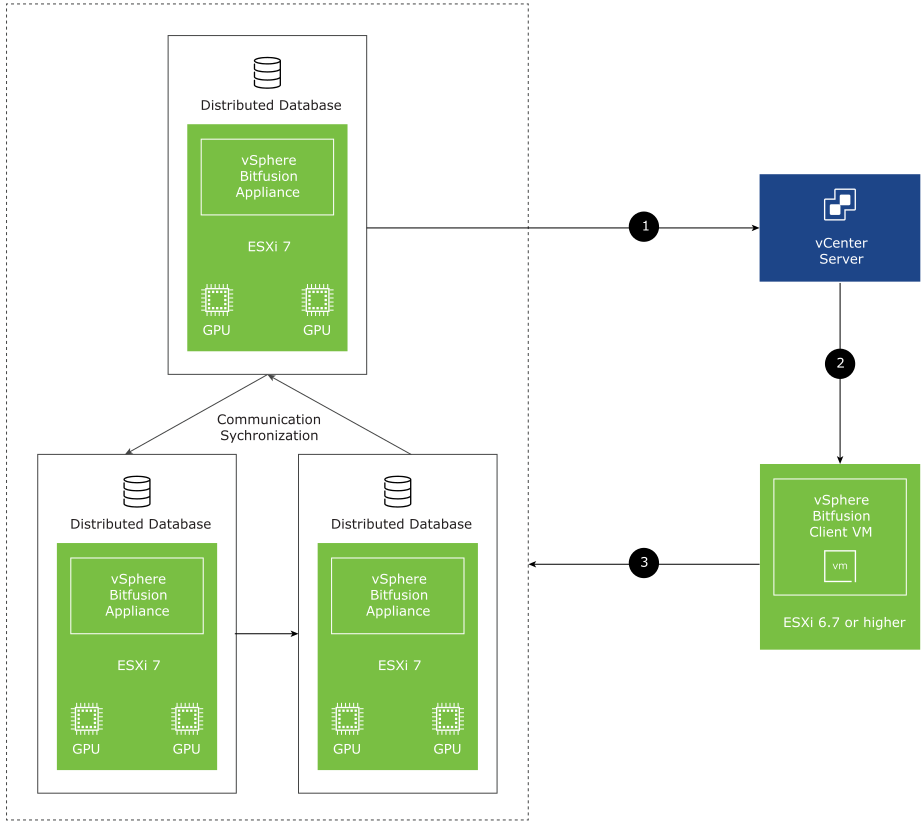

VMware vSphere Bitfusion Components and Architecture

What are the components and architecture of vSphere Bitfusion? VMware vSphere Bitfusion consists of the following:

- vSphere Bitfusion Server – The vSphere Bitfusion Server is a pre-configured VMware OVA appliance that runs as a virtual machine on an ESXi host with a locally installed GPU. It contains pre-configured software and services. The Bitfusion server accesses the local GPUs through DirectPath I/O.

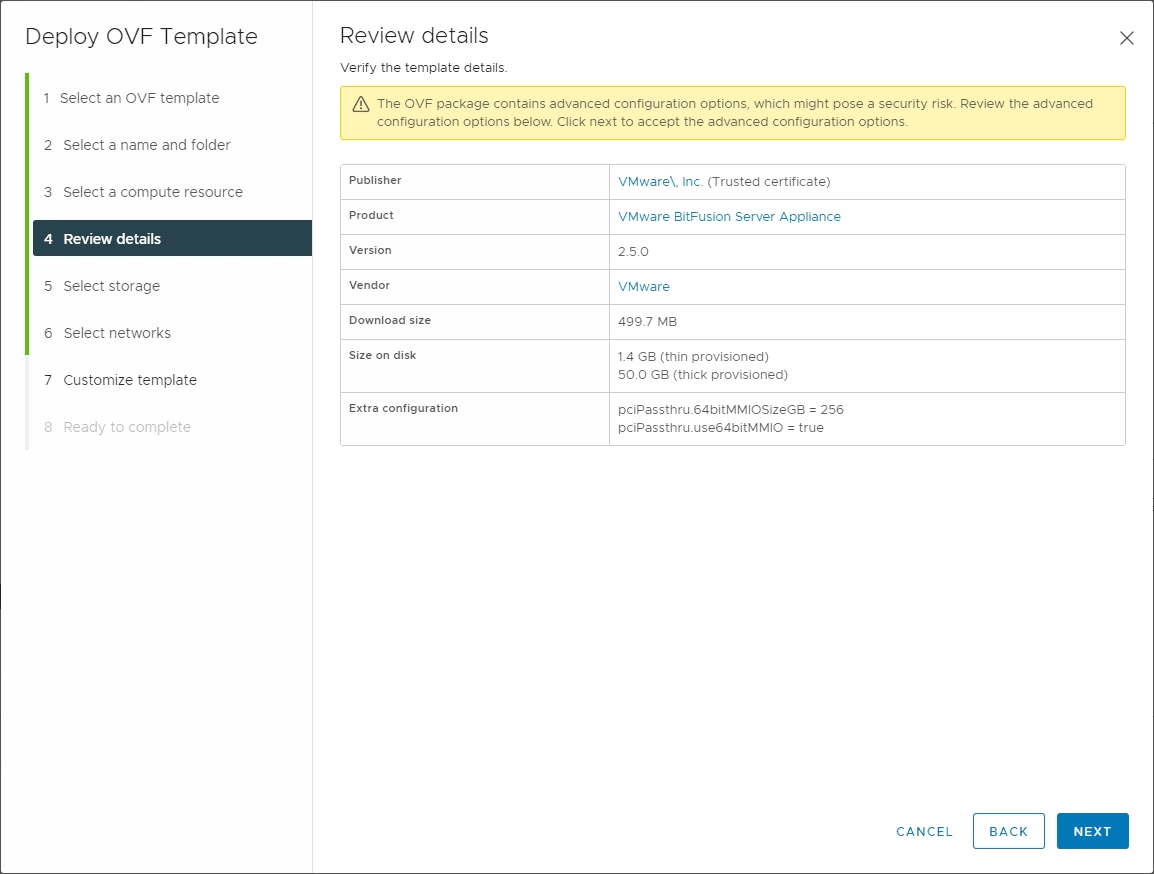

Below is a screenshot from the deployment of the Bitfusion OVA appliance. It follows the same OVA deployment process that most vSphere administrators are familiar with, configuring compute, storage, networking, and appliance details.

Deploying the Bitfusion Server OVA appliance

- vSphere Bitfusion Client – The Bitfusion Client component runs inside the VMs running the AI and ML workloads.

- vSphere Bitfusion Plug-in – Like many VMware solutions, Bitfusion uses a vCenter Server plug-in: the Bitfusion servers install and register the vSphere Bitfusion Plug-in in vCenter Server. The plug-in allows easy management and monitoring of the Bitfusion environment, including clients and servers.

- vSphere Bitfusion Cluster – The Bitfusion Cluster is made up of all vSphere Bitfusion servers and clients in a vSphere environment, managed by vCenter Server.

A small Bitfusion cluster example

- vSphere Bitfusion Group – The vSphere Bitfusion client creates a vSphere Bitfusion group when it is installed.

- vSphere Client – The VMware vSphere Client is the modern UI for interacting with Bitfusion and vCenter Server. It is an HTML5 interface that provides quick and easy access to all management, monitoring, and configuration tasks.

- Command-Line Interface (CLI) – You can manage Bitfusion servers and clients using the command-line interface (CLI).

- vCenter Server – The VMware vCenter Server is the centralized management plane for the vSphere environment. VMware vCenter Server unlocks the enterprise features and capabilities in vSphere.

Requirements for vSphere Bitfusion Server

Before installing Bitfusion

Take care of a few details before installing Bitfusion in your environment. The VMware Bitfusion documentation notes the following:

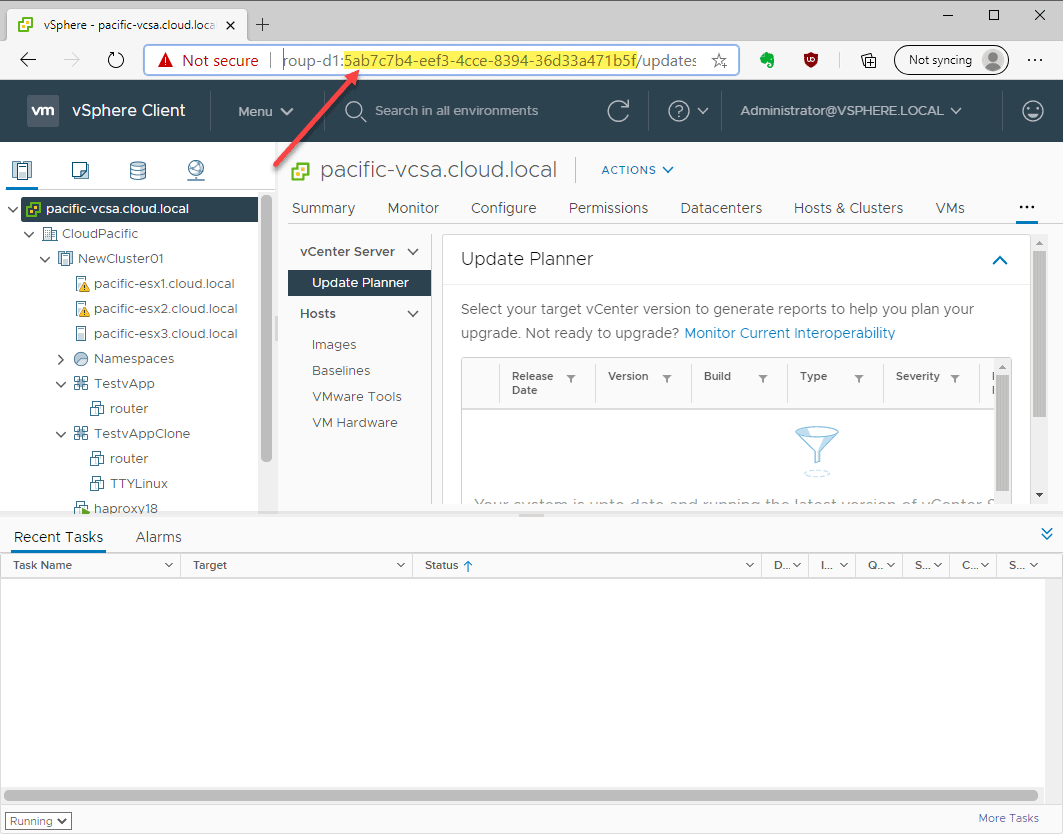

- Find the GUID for vCenter Server and the SSL Thumbprint – To quickly get the vCenter Server GUID, log in to the vSphere Client; the GUID appears in the URL string in the browser. Copy this GUID string from the browser into the OVA deployment wizard on the Customize template screen.

Finding the vCenter Server GUID in a browser session with the vSphere Client

- Enable your GPU for passthrough. To enable the GPU for passthrough on the ESXi host:

- In the vSphere Client, right-click on the ESXi host and select Settings.

- On the Configure tab, select Hardware > PCI Devices, and click Configure Passthrough.

- In the Edit PCI Device Availability dialog box, in the ID column, select the check box for the GPU device.

- Click OK.

The GPU is displayed on the Passthrough-enabled devices tab.


- Reboot the ESXi host.

- Enable UEFI or EFI in the boot options of the virtual machine.

The vSphere Bitfusion server VM must boot in EFI or UEFI mode to use the GPU correctly. To change the firmware setting:

- In the vSphere Client, right-click on the VM.

- Select Edit Settings > VM Options > Boot Options.

- From the Firmware drop-down menu, select UEFI or EFI.

- Click OK.
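The GUID and SSL thumbprint from the first prerequisite in this list can also be gathered with a short Python script. This is a sketch under assumptions: it assumes the GUID appears in the vSphere Client URL as a standard 8-4-4-4-12 hexadecimal UUID (the sample URL below is illustrative), and that the deployment wizard expects a SHA-1 thumbprint written as colon-separated uppercase hex pairs; confirm the expected format in the VMware Bitfusion documentation. Because the script accepts whatever certificate the server presents, verify the thumbprint through a trusted channel before relying on it.

```python
import hashlib
import re
import ssl

# A canonical 8-4-4-4-12 hexadecimal UUID anywhere in a string.
UUID_PATTERN = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)

def guid_from_url(url: str) -> str:
    """Return the first UUID-shaped string in a vSphere Client URL."""
    match = UUID_PATTERN.search(url)
    if match is None:
        raise ValueError("no GUID found in URL")
    return match.group(0)

def sha1_thumbprint(der_cert: bytes) -> str:
    """Format a SHA-1 digest of a DER certificate as AA:BB:... (assumed format)."""
    digest = hashlib.sha1(der_cert).hexdigest().upper()
    return ":".join(digest[i:i + 2] for i in range(0, len(digest), 2))

def fetch_vcenter_thumbprint(host: str, port: int = 443) -> str:
    """Fetch the server certificate (NOT validated) and return its thumbprint."""
    pem = ssl.get_server_certificate((host, port))
    return sha1_thumbprint(ssl.PEM_cert_to_DER_cert(pem))

# Illustrative URL shape; copy the real one from your browser.
sample_url = ("https://vcenter.example.local/ui/app/folder;nav=h/"
              "urn:vmomi:Folder:group-d1:5f0c3a9e-2b7d-4e61-9a3c-8d2e1f4b6a70:summary")
print(guid_from_url(sample_url))  # 5f0c3a9e-2b7d-4e61-9a3c-8d2e1f4b6a70

# Hypothetical hostname; requires network access to vCenter Server:
# print(fetch_vcenter_thumbprint("vcenter.example.local"))
```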

System Requirements for vSphere Bitfusion Server

The vSphere Bitfusion server must run on a vSphere deployment with the following system requirements.

- The vSphere Bitfusion server appliance requires 50 GB of disk space.

- The vSphere Bitfusion server requires an ESXi host running ESXi 7.0 or higher.

- Memory requirements for a vSphere Bitfusion server are at least 150% of the total GPU memory installed on the ESXi host.

- The minimum number of virtual CPUs (vCPUs) required for a vSphere Bitfusion server amounts to the number of GPU cards multiplied by 4.

- The Bitfusion network must support TCP/IP or RoCE (PVRDMA adapters).

- The network needs to deliver 10 Gbps of bandwidth for any machine accessing 2 or more GPUs.

- 50 microseconds of latency or less is desirable. It is not a strict requirement, but the vSphere Bitfusion deployment’s performance is better with low latency.

- Time synchronization is essential. It is necessary to connect all vSphere Bitfusion servers to the same valid NTP server.

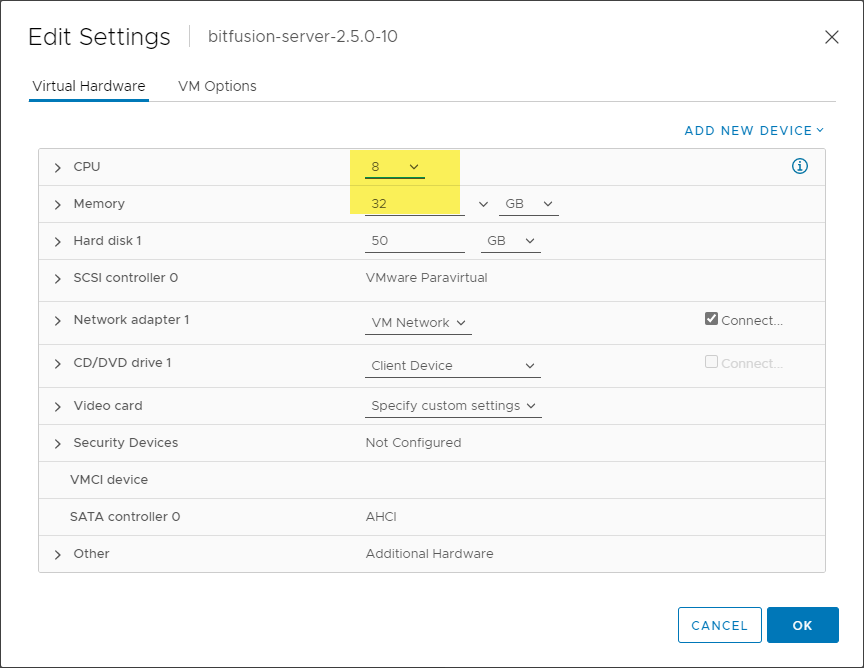

Keep in mind that the Bitfusion Server’s default deployment configuration requires a hefty amount of resources. After a default deployment, the appliance virtual machine is configured with the following resources:

- 8 vCPUs

- 32 GB of memory

The default configuration of the Bitfusion Server appliance
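The sizing rules in the system requirements are easy to turn into arithmetic. This Python sketch computes the minimum appliance resources from the rules listed above (4 vCPUs per GPU card, memory of at least 150% of total GPU memory, 50 GB of disk) and compares them with the 8 vCPU / 32 GB defaults. The function names and the example host with two 16 GB GPUs are hypothetical.

```python
import math
from typing import Dict, List

# Defaults the article reports for a freshly deployed appliance.
DEFAULT_VCPUS = 8
DEFAULT_MEMORY_GB = 32

def server_minimums(gpu_memory_gb: List[int]) -> Dict[str, int]:
    """Minimum appliance resources for the GPUs installed on one ESXi host."""
    return {
        "vcpus": 4 * len(gpu_memory_gb),                   # 4 vCPUs per GPU card
        "memory_gb": math.ceil(1.5 * sum(gpu_memory_gb)),  # 150% of total GPU memory
        "disk_gb": 50,                                     # fixed appliance disk size
    }

def shortfalls(gpu_memory_gb: List[int], vcpus: int = DEFAULT_VCPUS,
               memory_gb: int = DEFAULT_MEMORY_GB) -> List[str]:
    """List every resource where the configured VM falls below the minimum."""
    need = server_minimums(gpu_memory_gb)
    problems = []
    if vcpus < need["vcpus"]:
        problems.append(f"vCPUs: have {vcpus}, need {need['vcpus']}")
    if memory_gb < need["memory_gb"]:
        problems.append(f"memory: have {memory_gb} GB, need {need['memory_gb']} GB")
    return problems

print(server_minimums([16, 16]))  # {'vcpus': 8, 'memory_gb': 48, 'disk_gb': 50}
print(shortfalls([16, 16]))       # ['memory: have 32 GB, need 48 GB']
```

In this example the default 8 vCPUs are just enough, but the appliance memory would need to grow from 32 GB to at least 48 GB.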

Installing the vSphere Bitfusion Server

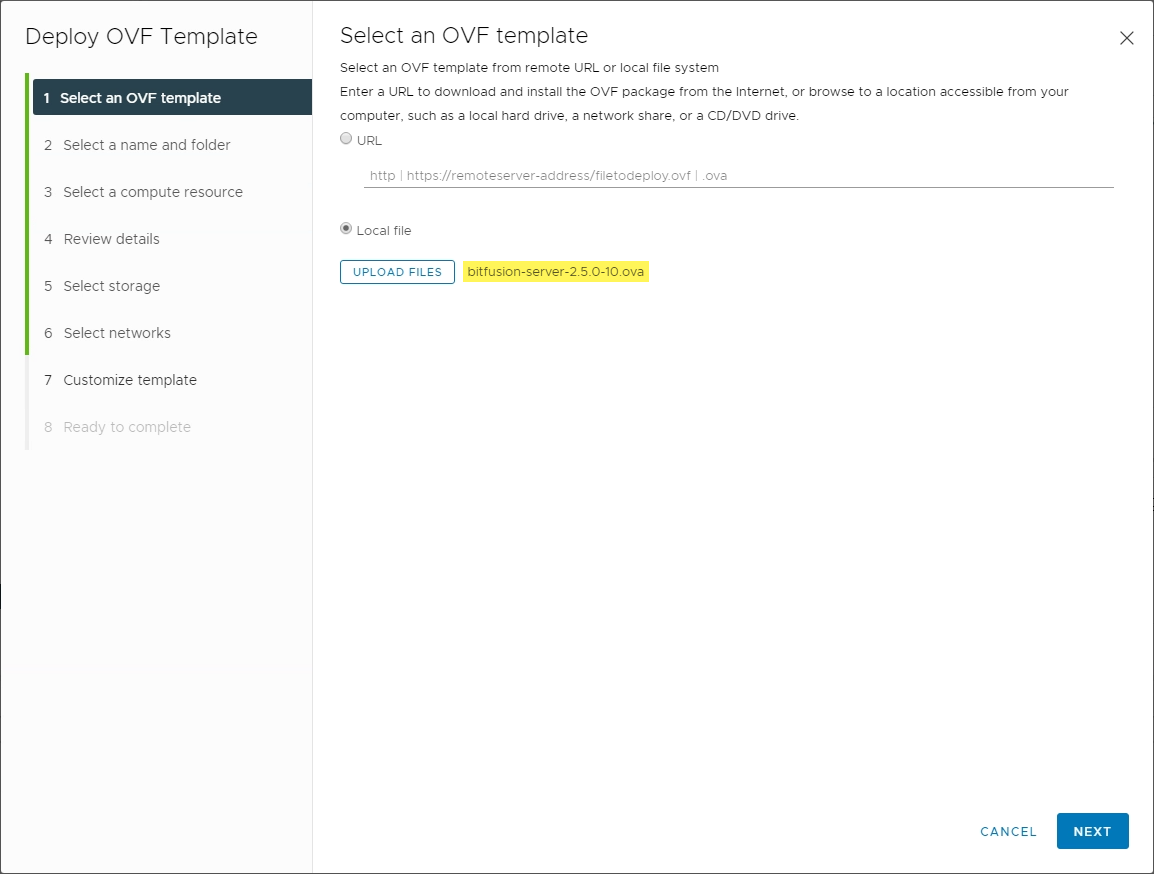

Let’s take a quick look at deploying the Bitfusion Server OVA appliance in a vSphere 7.x or later environment. Review the Bitfusion Server requirements carefully before installation. For the most part, installing the Bitfusion Server is a straightforward OVA deployment. First, choose the downloaded Bitfusion Server OVA file to upload.

Select the Bitfusion Server OVA appliance

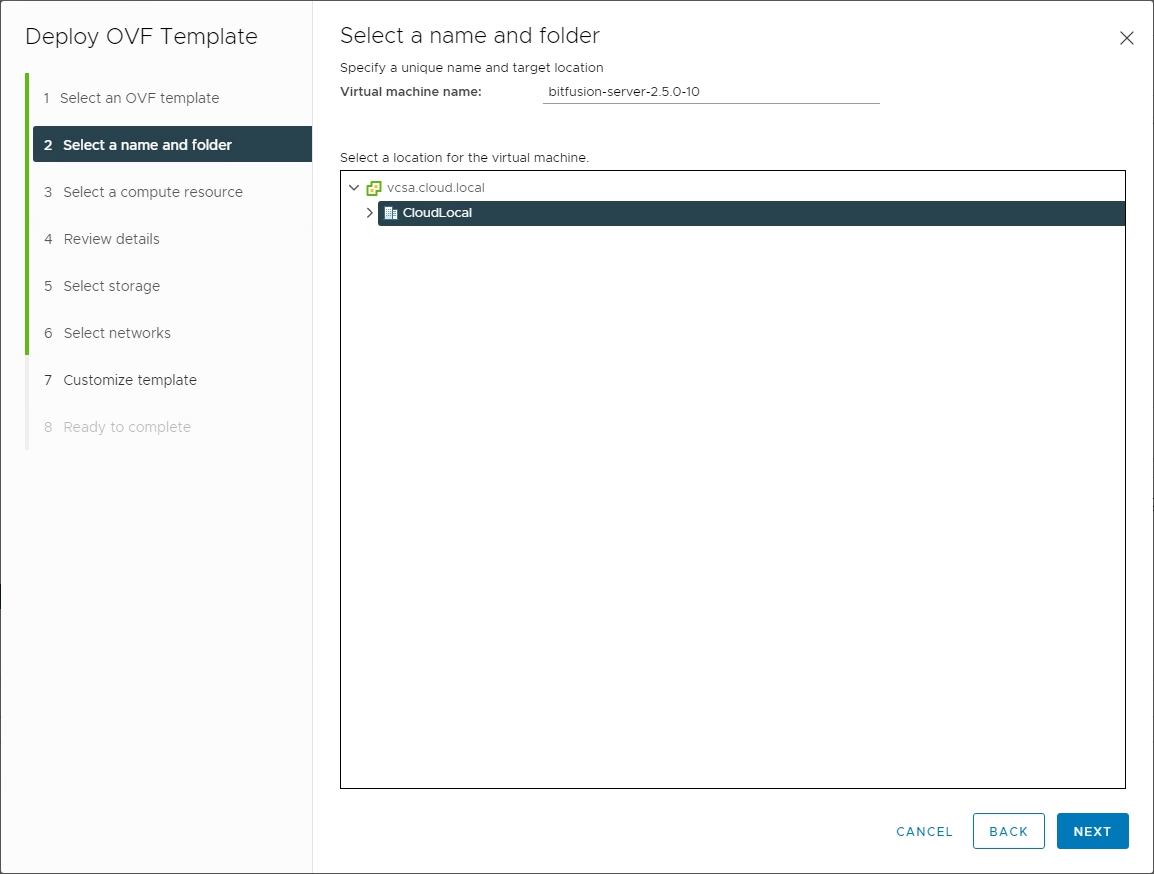

Select the name and folder for the Bitfusion Server virtual machine that the OVA deploys.

Select the name and folder for the Bitfusion Server deployed with the OVA

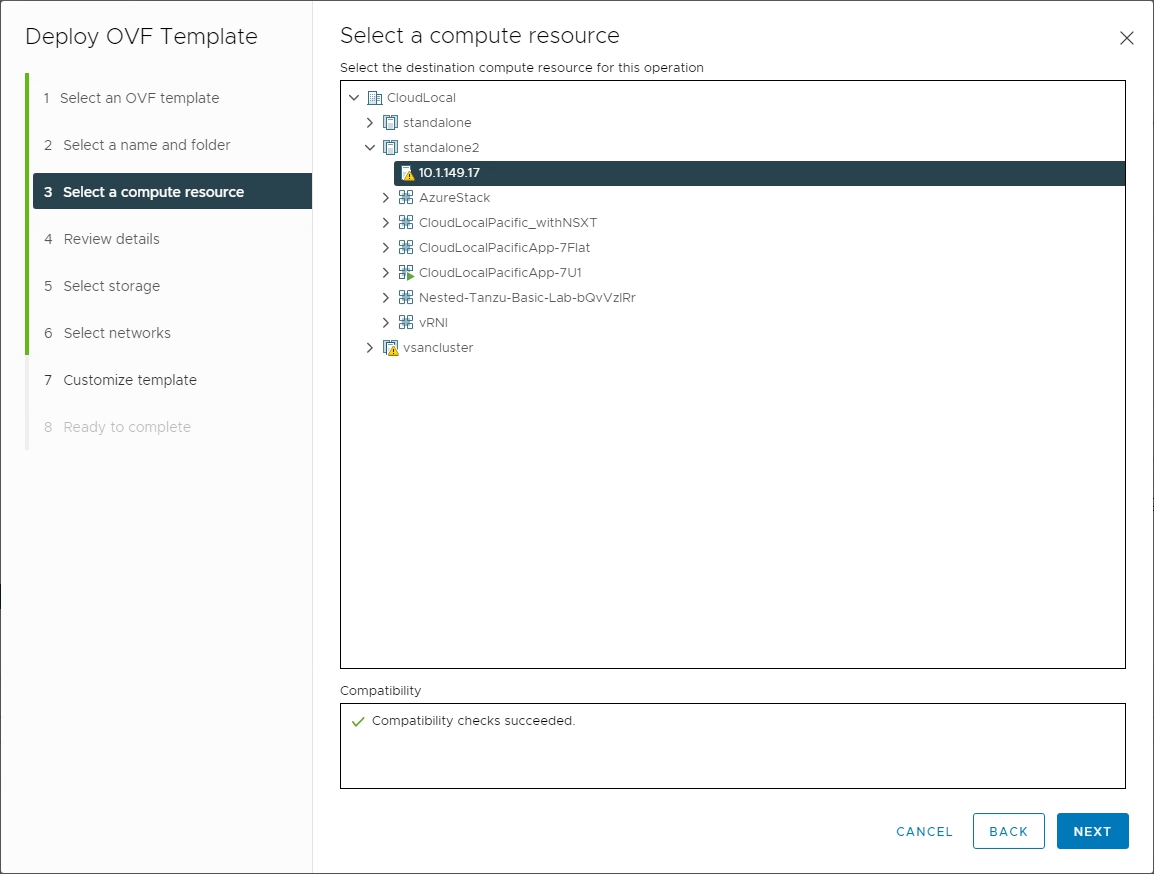

Next, select the compute resource for the resulting Bitfusion Server virtual machine.

Select the compute resource

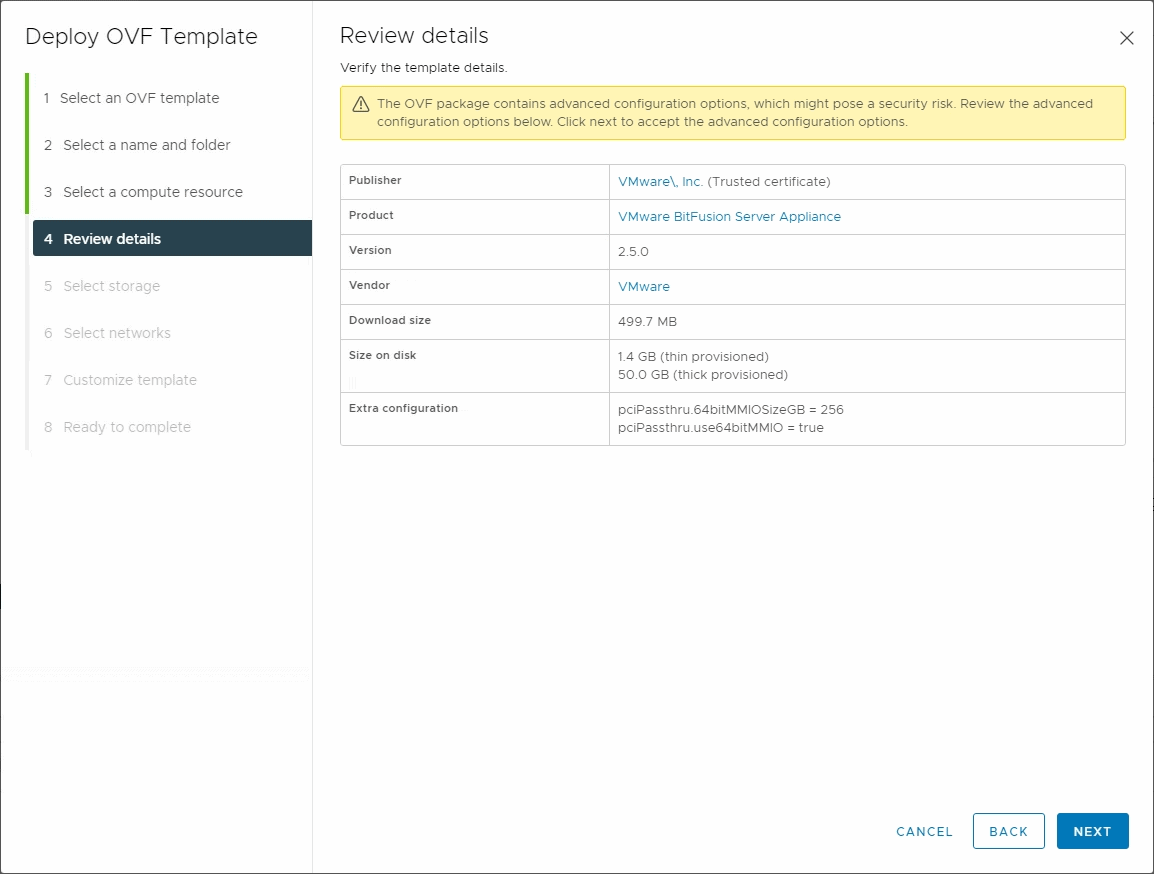

Review the initial deployment details of the Bitfusion Server OVA.

Reviewing the initial deployment details

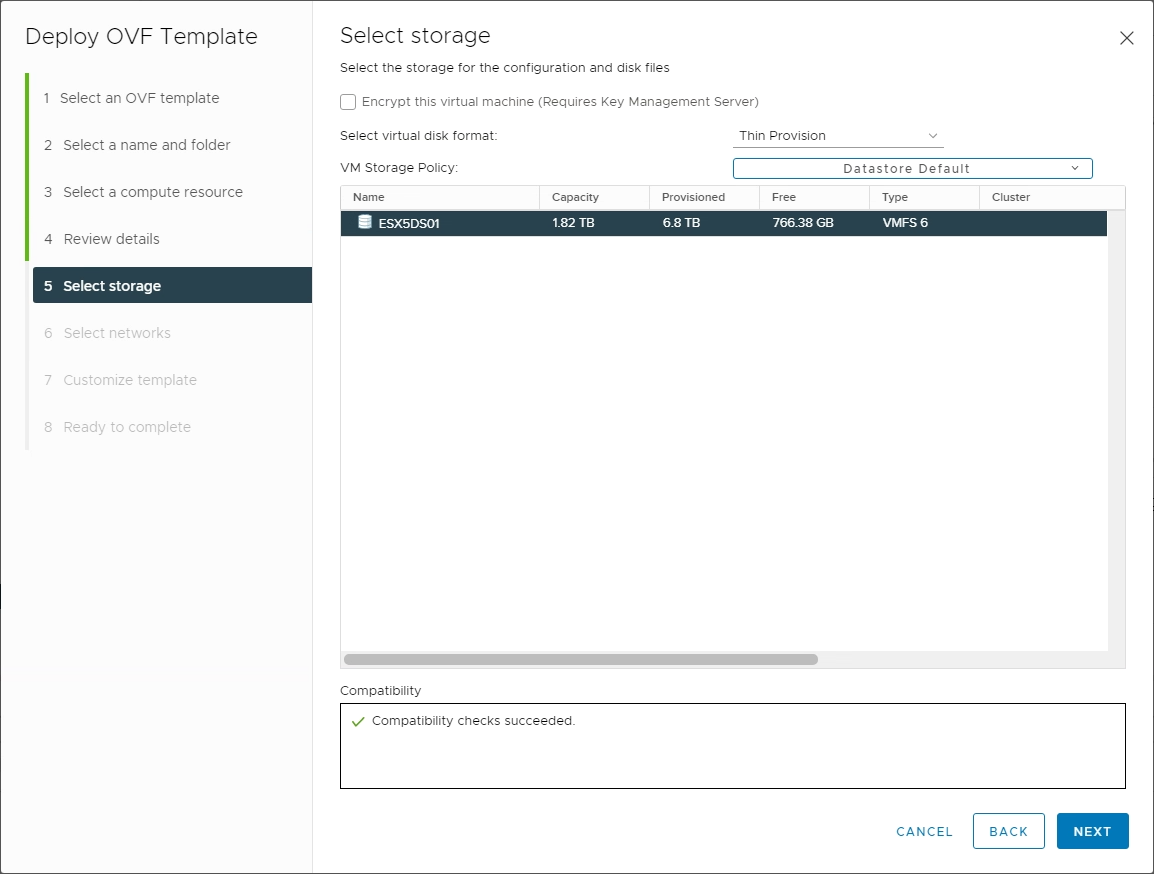

Select the datastore for the appliance. You can also select the virtual disk format for the deployment.

Select the datastore and the storage format for the virtual disks

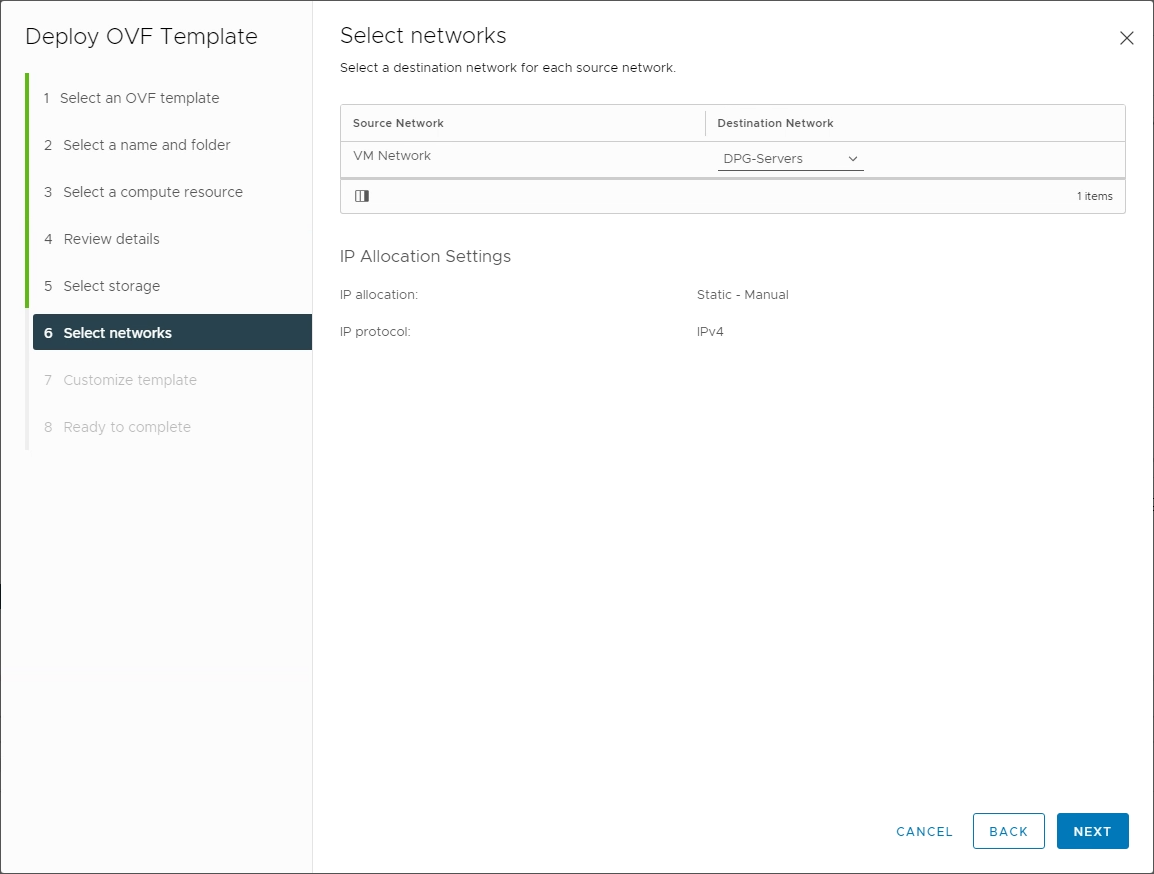

Select the virtual network for the appliance.

Configuring the virtual networks to connect the Bitfusion Server appliance

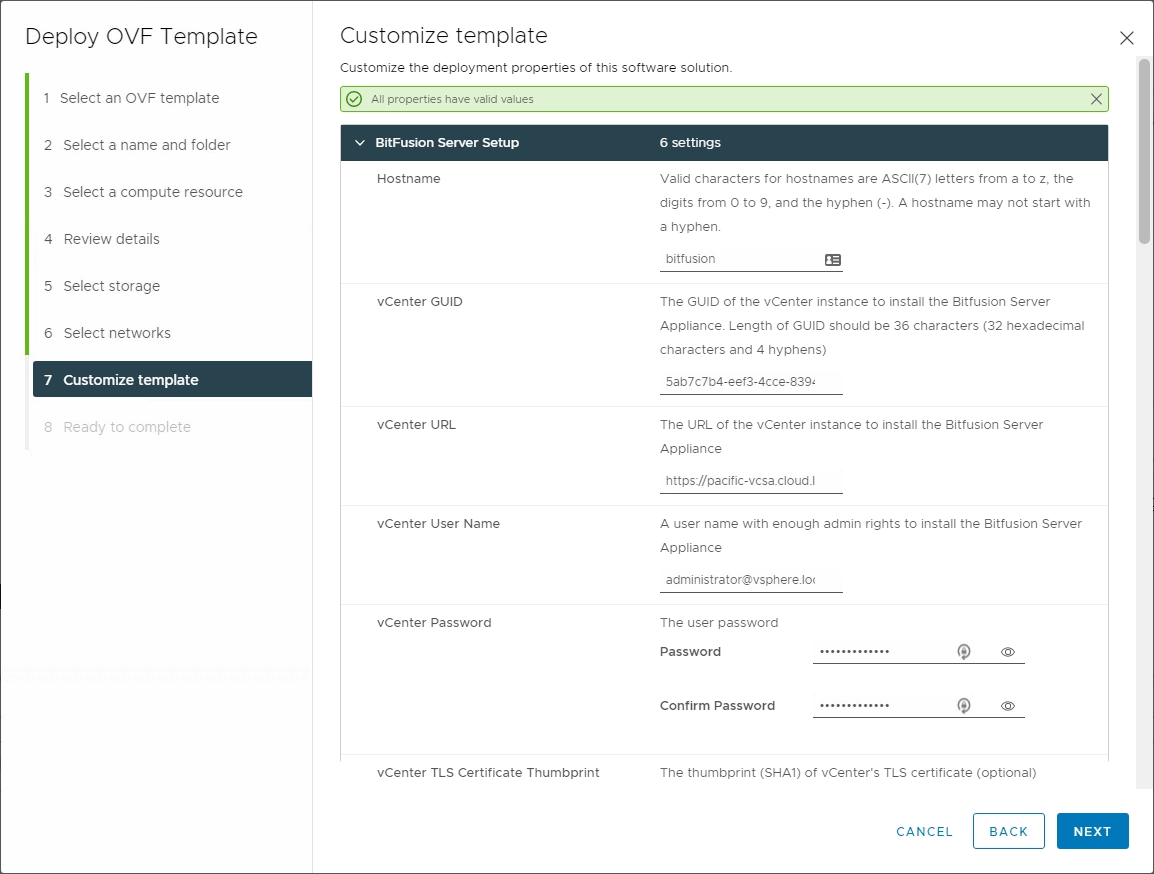

Pay attention to the details on the Customize template screen. Here you will configure the hostname, vCenter GUID, vCenter URL, passwords, and other pertinent network information.

Configuring the Bitfusion Server template before deployment

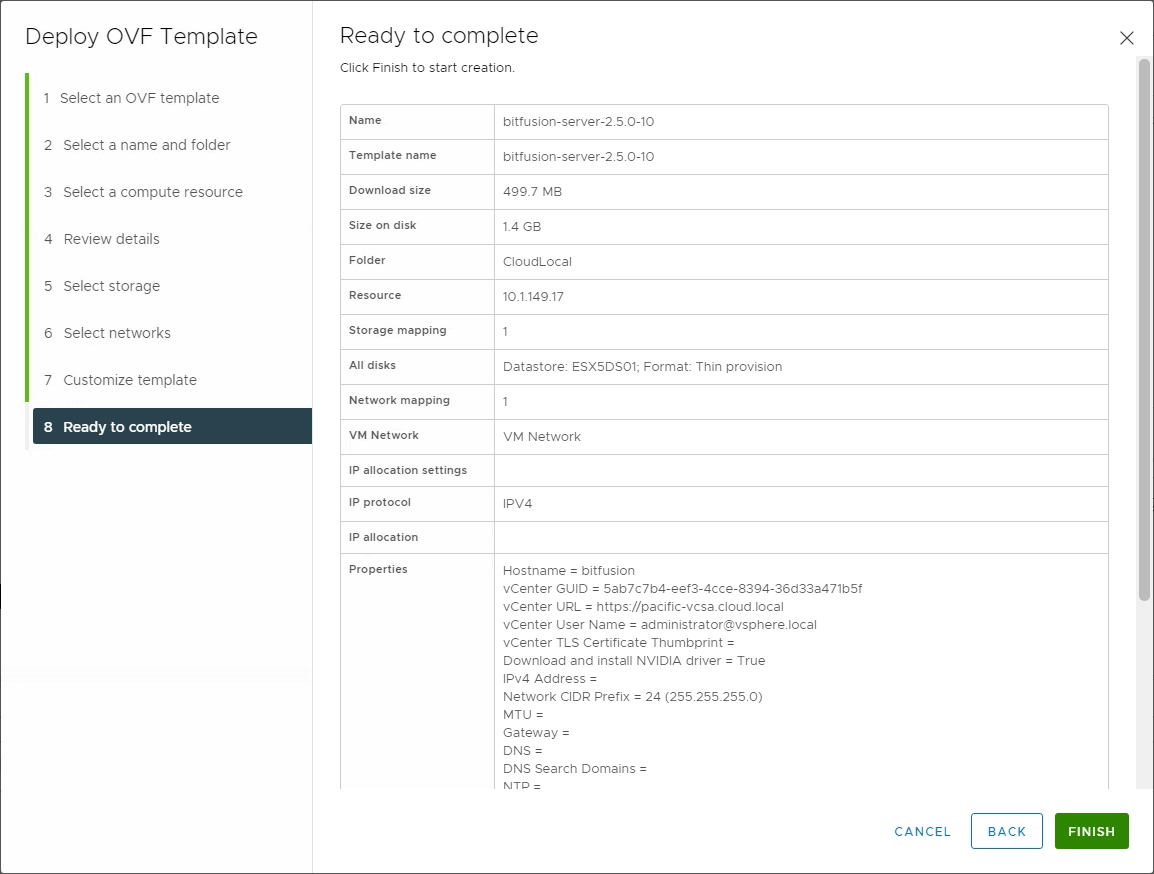

Finally, review the settings on the Ready to complete screen and click Finish.

Ready to complete the Bitfusion Server OVA appliance deployment

Installing the vSphere Bitfusion Client and Requirements for VMs

The actual artificial intelligence (AI) and machine learning (ML) applications run on a vSphere Bitfusion client machine. The vSphere Bitfusion client can be enabled on a machine that meets the following requirements:

Operating system supported:

- CentOS 7.0

- CentOS 8.0

- Red Hat Enterprise Linux 7.4 or later

- Ubuntu 16.04

- Ubuntu 18.04

- Ubuntu 20.04
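A quick way to confirm that a machine matches the supported operating system list is to read the standard `/etc/os-release` file. The sketch below mirrors the list, under the assumption that the `ID` values are `ubuntu`, `centos`, and `rhel` (the usual values on those distributions) and that “CentOS 7.0/8.0” covers any 7.x or 8.x release. It parses a sample string; on a real client you would pass the file’s contents instead.

```python
from typing import Dict, Tuple

def parse_os_release(text: str) -> Dict[str, str]:
    """Parse KEY=value lines from /etc/os-release, dropping surrounding quotes."""
    fields: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        fields[key] = value.strip().strip('"').strip("'")
    return fields

def _version(v: str) -> Tuple[int, ...]:
    return tuple(int(part) for part in v.split("."))

def is_supported_client_os(fields: Dict[str, str]) -> bool:
    """Mirror the article's supported-OS list (assumed ID values)."""
    os_id = fields.get("ID", "")
    version = fields.get("VERSION_ID", "")
    if not version:
        return False
    if os_id == "ubuntu":
        return version in {"16.04", "18.04", "20.04"}
    if os_id == "centos":
        return version.split(".")[0] in {"7", "8"}
    if os_id == "rhel":
        return _version(version) >= (7, 4)  # "7.4 or later"
    return False

sample = 'NAME="Ubuntu"\nID=ubuntu\nVERSION_ID="20.04"\n'
print(is_supported_client_os(parse_os_release(sample)))  # True
```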

Additional requirements:

- vCenter Server 7.0 managed environment

- ESXi host version 6.7 or higher

- Network requirements include communication between the client and Bitfusion Server on ports 56001, 55001-55100, 45201-46225.
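Before installing the client, you may want to confirm that the firewall allows these ports. The following Python sketch expands the port list above and provides a simple TCP reachability check. It has an important limitation: a TCP connection succeeds only when a service is listening, so a failed check may mean nothing is listening on that port rather than a firewall block; test ports where the Bitfusion server is actually listening. The hostname in the commented usage line is hypothetical.

```python
import socket
from typing import List, Tuple

# Ports from the article as inclusive (first, last) ranges.
CLIENT_SERVER_PORTS: List[Tuple[int, int]] = [
    (56001, 56001),
    (55001, 55100),
    (45201, 46225),
]

def expand_ports(ranges: List[Tuple[int, int]]) -> List[int]:
    """Flatten inclusive port ranges into a list of individual ports."""
    return [port for first, last in ranges for port in range(first, last + 1)]

def tcp_reachable(host: str, port: int, timeout: float = 1.0) -> bool:
    """True if a TCP handshake to host:port completes within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or name resolution failed
        return False

print(len(expand_ports(CLIENT_SERVER_PORTS)))  # 1126

# Hypothetical server name; run from the client VM:
# print(tcp_reachable("bitfusion-server.example.local", 56001))
```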

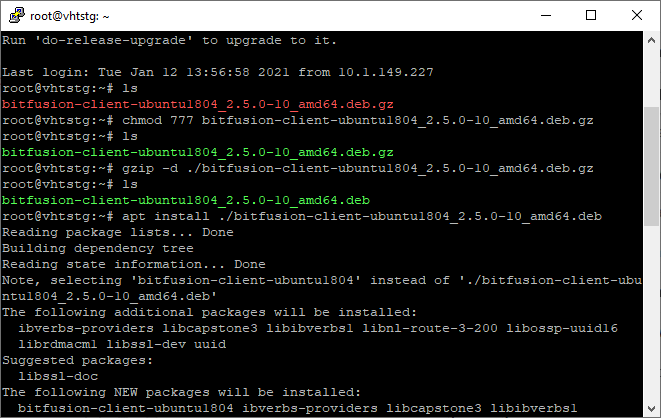

The Bitfusion client software downloads from VMware as a .gz file. Copy the file to your supported Linux machine, decompress it, and install the package. On Ubuntu, for example:

- gzip -d <bitfusion client software file>

- sudo apt install ./<bitfusionclient.deb file>

Installing Bitfusion client in Ubuntu

Does Bitfusion work with Containers?

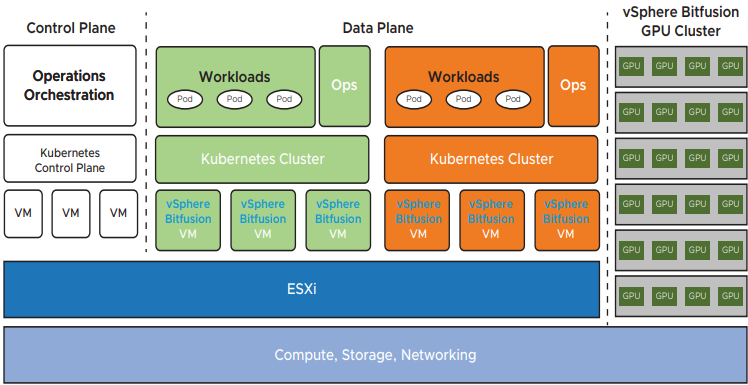

So far, we have covered Bitfusion in the context of the traditional vSphere virtual machine. What about containers, specifically containers managed with Kubernetes? The answer is yes: Bitfusion can provide network-attached full or partial GPUs to any Kubernetes cluster. Containers are a natural use case for Bitfusion.

Containers, by their nature, are non-persistent workloads. As we have learned, Bitfusion allocates GPU resources to applications as they need them. With containers, Bitfusion assigns GPU resources when a container spins up along with its application and de-provisions them once the container spins down. Is there anything special you need to do on the Kubernetes cluster side to take advantage of Bitfusion?

We have already learned that vSphere Bitfusion is a transparent virtualization layer that combines the available GPUs into a pool of GPU resources, allocated either in full or by “slicing” GPUs into smaller segments for AI and ML applications. Kubernetes clusters can use the network-attached Bitfusion resources without any architectural changes to your Kubernetes environment.

You set up the Kubernetes cluster just as you would for any other use case, such as a cluster of CPU-only nodes. As with virtual machines, the pool of GPU resources from multiple clusters is available to the Kubernetes cluster, so the Kubernetes cluster does not need to be aware of Bitfusion. No unique or new configuration is required to integrate Bitfusion with Kubernetes. You can use Bitfusion to attach full or partial GPU resources elastically over the network to applications running inside Kubernetes clusters.

High-level architecture of Bitfusion with Kubernetes
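The container lifecycle described in this section, where GPU resources are assigned when a workload starts and returned when it stops, maps naturally onto a Python context manager. This sketch is purely illustrative: `SharedPool` and `gpu_lease` are hypothetical names, the pool tracks only one GPU, and in a real deployment Bitfusion handles this bookkeeping itself without code in your application. The `finally` clause shows the key property: the share is returned even if the workload fails.

```python
from contextlib import contextmanager
from fractions import Fraction
from typing import Dict, Iterator

class SharedPool:
    """Hypothetical bookkeeping for the free share of a single GPU."""

    def __init__(self) -> None:
        self.free = Fraction(1)
        self.holders: Dict[str, Fraction] = {}

@contextmanager
def gpu_lease(pool: SharedPool, workload: str, share: Fraction) -> Iterator[Fraction]:
    """Hold `share` of the GPU for exactly as long as the `with` block runs."""
    if share > pool.free:
        raise RuntimeError(f"{workload}: only {pool.free} of the GPU is free")
    pool.free -= share
    pool.holders[workload] = share
    try:
        yield share
    finally:
        # Runs on normal exit and on failure, like a container spinning down.
        pool.free += pool.holders.pop(workload)

pool = SharedPool()
with gpu_lease(pool, "inference-pod-1", Fraction(1, 4)):
    print(pool.free)  # 3/4
print(pool.free)      # 1
```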

Wrapping Up

The age of artificial intelligence (AI) and machine learning (ML) has finally arrived in full force. With tremendous advancements in computing power and in the amount of raw data organizations can access, computer intelligence by way of AI and ML is now possible. Modern Graphics Processing Units (GPUs) have paved the way for further advancement. Today’s GPUs are extremely powerful, and applications can offload the computationally intensive AI and ML algorithms to the GPU instead of the CPU. This offloading extracts far more processing power from the same computing hardware.

Traditional GPU implementations dedicate physical GPUs to specific applications. This approach does not scale well and lacks the flexibility businesses need. Allocating entire physical GPUs to AI and ML applications this way is also not cost-effective: there is no option to assign only part of a GPU’s processing power to an application, so even a small application may receive an entire GPU.

VMware vSphere Bitfusion solves these challenges by virtualizing the physical GPU hardware from any number of ESXi hosts and aggregating it into a single pool of GPU resources. As shown in this post, this approach provides tremendous benefits. By virtualizing GPU hardware, Bitfusion can dynamically assign full or partial GPU resources to specific applications and reclaim them as needed, a much more granular approach to allocating resources. Bitfusion is not only a viable solution for traditional virtual machine workloads; it also provides GPU resources to modern applications running in containerized environments such as Kubernetes. This makes Bitfusion extremely flexible, both in how it is deployed and in supporting traditional and contemporary applications alike.

Bitfusion ultimately does for physical GPUs what ESX did for physical servers in the first wave of virtualization. This layer of abstraction helps businesses move more quickly toward using AI and ML applications effectively in their environment.
