
Since the rise of Hyperconverged Infrastructure (HCI) and storage virtualization several years ago, VMware has been at the forefront of the movement with vSAN, alongside major players such as Nutanix, SimpliVity (now part of HPE), VxRail and others.

The main point of hyperconvergence is to use software virtualization to consolidate multiple stacks, traditionally run on dedicated hardware, into the same server chassis. NSX-T virtualizes the network, and vSAN takes care of the storage over high-speed networking. In the case of vSAN, this means you no longer need third-party appliances such as storage arrays.

Each vSAN node includes local storage that makes up a virtualized shared datastore

If you aren’t familiar with vSAN, check out our blog post How it Works: Understanding vSAN Architecture Components. It will give you a better understanding of what vSAN is and how it can help your organization.

Context

VMware vSAN

VMware vSAN is enabled at the cluster level. Each node contributes a cache tier and a capacity tier, which together make up the shared datastore. VMware offers vSAN Ready Nodes, which are hardware configurations certified by both VMware and the server vendor.

However, you may already see how tricky it can become to scale efficiently while keeping the cluster homogeneous. For instance:

- If you run low on compute capacity, you need to add nodes, which also adds storage capacity that will go unused.

- Conversely, if you run low on storage and can’t add disks, you have to add nodes, which brings unneeded compute resources.
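The sketch below puts numbers on this scaling problem. It sizes a homogeneous cluster in Python. The node size (64 cores, 20 TB) and the demand figures are hypothetical. It uses raw capacity with no storage policy overhead, so it is an illustration rather than a sizing tool.

```python
import math


def nodes_needed(cores_needed, tb_needed, cores_per_node, tb_per_node):
    """Smallest homogeneous node count covering both compute and storage demand.

    Returns (node_count, stranded_cores, stranded_tb). Raw capacity only:
    storage policy overhead (FTT, RAID) is deliberately ignored here.
    """
    n = max(math.ceil(cores_needed / cores_per_node),
            math.ceil(tb_needed / tb_per_node))
    stranded_cores = n * cores_per_node - cores_needed
    stranded_tb = n * tb_per_node - tb_needed
    return n, stranded_cores, stranded_tb


# Hypothetical node: 64 cores and 20 TB of capacity.
print(nodes_needed(400, 60, 64, 20))    # compute-bound: (7, 48, 80)
print(nodes_needed(100, 150, 64, 20))   # storage-bound: (8, 412, 10)
```

In the compute-bound case, the cluster ends up with 80 TB of storage nobody asked for. In the storage-bound case, it carries hundreds of idle cores.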

vSAN HCI Mesh clusters

Back in vSAN 7 Update 1, VMware introduced a new feature called “HCI Mesh clusters” to mitigate this issue by allowing organizations to make better use of their capacity.

In a nutshell, HCI Mesh allows a vSAN cluster to mount the datastore of another (remote) vSAN cluster. This shares the remote cluster’s storage capacity and spreads its usage across a wider pool of compute resources.

HCI Mesh allows multiple vSAN clusters to share their datastores remotely

Why run multiple vSphere clusters

Now, you may wonder, “Why not run one single cluster in which I throw everything in?”.

Most virtual environments start off with a single cluster and grow it as demand increases. However, past a certain point, it is worth revisiting the design and splitting the capacity into multiple clusters.

- Management plane: More and more resources get consumed as you add core solutions such as NSX-T, vRealize Automation and Tanzu to your environment. These components are critical for smooth operations, so it is good practice to isolate them in a dedicated cluster that does not share resources with the workloads.

- Workloads: These mixed workloads provide services to your internal users or to other services. North-south security is tightened, and ideally they should not expose services to the outside world.

- DMZ: As the number of services exposed to the internet grows, so does the number of workloads running them. At that point, it makes sense to expose the corresponding networks (VLAN, VXLAN, Overlay…) only to the vSphere hosts of a dedicated cluster.

- Tenants: If a large client rents resources in your environment, chances are they will not be happy with a resource pool in a cluster shared with other tenants. In that case, a dedicated cluster may even become a clause in the contract.

- Other use cases: There are plenty of other cases for dedicated clusters, such as VDI, large Big Data VMs, PKIs…

Adopting such a segregation of your workloads at the cluster level not only improves the organization of resources but also enhances security overall.

vSAN HCI Mesh Compute Clusters

In vSphere 7 Update 2, VMware listened to its customers and built on the HCI Mesh feature by extending it to regular (compute) clusters. The great thing is that vSAN HCI Mesh compute clusters can be enabled on any cluster, and no vSAN license is required!

Once the remote vSAN datastore is mounted, virtual machines can be migrated between clusters using regular vMotion.

Non-vSAN clusters can now mount remote vSAN datastores

Just like HCI Mesh clusters, HCI Mesh compute clusters use RDT rather than protocols such as iSCSI or NFS. RDT (Reliable Datagram Transport) works over TCP/IP and is optimized to send very large files. The goal is the best performance and rock-solid reliability.

Considerations

While you could already export a vSAN datastore over iSCSI or NFS, using the vSAN protocol adds value at several levels. You keep SPBM (Storage Policy-Based Management), lower the overhead, gain end-to-end monitoring and get a simpler implementation, among other benefits.

vSAN HCI Mesh cluster

Before starting with vSAN HCI mesh clusters, consider the following requirements and recommendations:

- vSAN Enterprise license on the cluster hosting the remote datastore.

- HA configured to use VMCP with “Datastore with APD”.

- 10Gbps minimum for the vSAN vmkernel.

- Both clusters managed by the same vCenter Server.

- Maximum of 5 clusters per datastore and 5 datastores per cluster.

- No support for stretched and 2-node clusters.

- Find the full list on Duncan Epping’s Yellow Bricks blog.
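The requirements above can be expressed as a small preflight check. The Python sketch below is a hypothetical, simplified model. The Cluster fields are illustrative and do not map to any vSphere or vSAN API object. Two choices are our own assumptions: checking HA on the client cluster, where the VMs run, and also accepting an Enterprise Plus license.

```python
from dataclasses import dataclass


# Hypothetical, simplified cluster description (not a VMware API object).
@dataclass
class Cluster:
    name: str
    vcenter: str
    vsan_license: str = ""        # e.g. "Enterprise"; empty if unlicensed
    ha_vmcp_apd: bool = False     # HA uses VMCP with "Datastore with APD"
    vsan_vmk_gbps: float = 10.0   # speed backing the vSAN vmkernel
    topology: str = "standard"    # "standard", "stretched" or "2-node"
    remote_mounts: int = 0        # remote datastores this cluster already mounts
    clients: int = 0              # clusters already mounting this cluster's datastore


# Assumption: Enterprise Plus also satisfies the Enterprise requirement.
QUALIFYING_LICENSES = {"Enterprise", "Enterprise Plus"}
MAX_DATASTORES_PER_CLUSTER = 5
MAX_CLUSTERS_PER_DATASTORE = 5


def preflight(client: Cluster, server: Cluster) -> list[str]:
    """Return the unmet requirements; an empty list means all checks passed."""
    issues = []
    if server.vsan_license not in QUALIFYING_LICENSES:
        issues.append(f"{server.name}: vSAN Enterprise license required")
    if not client.ha_vmcp_apd:
        issues.append(f"{client.name}: configure HA VMCP with 'Datastore with APD'")
    for c in (client, server):
        if c.vsan_vmk_gbps < 10:
            issues.append(f"{c.name}: vSAN vmkernel below 10Gbps")
        if c.topology != "standard":
            issues.append(f"{c.name}: {c.topology} clusters are not supported")
    if client.vcenter != server.vcenter:
        issues.append("both clusters must be managed by the same vCenter Server")
    if client.remote_mounts >= MAX_DATASTORES_PER_CLUSTER:
        issues.append(f"{client.name}: remote datastore limit reached")
    if server.clients >= MAX_CLUSTERS_PER_DATASTORE:
        issues.append(f"{server.name}: client cluster limit reached")
    return issues


lab01 = Cluster("LAB01-Cluster", "vc01", vsan_license="Enterprise", ha_vmcp_apd=True)
lab02 = Cluster("LAB02-Cluster", "vc01", ha_vmcp_apd=True)
print(preflight(lab02, lab01))   # []
```

The official checks run by the mount wizard cover more ground. The full list referenced above remains the authority.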

Cross-cluster networking recommendations

- High-speed, reliable connectivity (25Gbps recommended).

- Sub-millisecond latency recommended. An alert is issued during setup if latency exceeds 5ms.

- vSphere Distributed Switches to leverage Network I/O Control (NIOC).

- Support for both L2 and L3 connectivity (with Layer 3, a gateway override is needed on the vSAN vmkernel for routing).

Note that stretched clusters are not supported as of vSAN 7 Update 2. This means that, for now, mounting a remote vSAN datastore over a WAN, even a high-speed one, is not recommended.
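The networking figures above can be turned into a quick sanity check. The Python sketch below takes round-trip time samples in milliseconds and the link speed in Gbps. The samples could, for instance, be gathered with the ESXi vmkping command from the vSAN vmkernel adapter. The function name and messages are hypothetical. How the samples are summarized is our own assumption, not how vCenter evaluates the link: the worst sample is compared with the 5 ms alert threshold and the median with the sub-millisecond recommendation.

```python
import statistics


def assess_link(rtt_ms: list[float], link_gbps: float) -> list[str]:
    """Compare a cross-cluster vSAN link with the figures quoted in this article."""
    if not rtt_ms:
        raise ValueError("at least one RTT sample is required")
    findings = []
    if link_gbps < 10:
        findings.append("below the 10Gbps minimum for the vSAN vmkernel")
    elif link_gbps < 25:
        findings.append("below the recommended 25Gbps")
    # Assumption: worst sample vs. the 5 ms alert threshold,
    # median vs. the sub-millisecond recommendation.
    if max(rtt_ms) > 5:
        findings.append("latency above 5 ms: expect an alert during setup")
    elif statistics.median(rtt_ms) >= 1:
        findings.append("latency above the sub-millisecond recommendation")
    return findings


print(assess_link([0.3, 0.4, 0.5], 25))   # []
print(assess_link([1.2, 1.5, 1.1], 10))
```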

How to enable vSAN HCI Mesh Compute Cluster

I will show you here how to remotely mount a vSAN datastore using HCI Mesh Compute Cluster.

In this example, “LAB01-Cluster” has vSAN enabled, with a vSAN datastore creatively renamed “LAB01-VSAN”. The vSphere host in “LAB02-Cluster”, on the other hand, has no local storage. For the sake of simplicity, it only has a vSAN-enabled vmkernel adapter in the vSAN subnet.

Again, you do not need a vSAN license in the client cluster.

Let’s dig in!

- First, navigate to the Configure pane of the cluster on which you want to mount a remote vSAN datastore. Then scroll down to vSAN services and click Configure vSAN.

Enable vSAN services in the configuration pane

- This brings up the vSAN configuration wizard. Select the new option vSAN HCI Mesh Compute Cluster, then click Next.

vSAN HCI Mesh Compute Clusters is available as of vSphere 7.0 Update 2

-

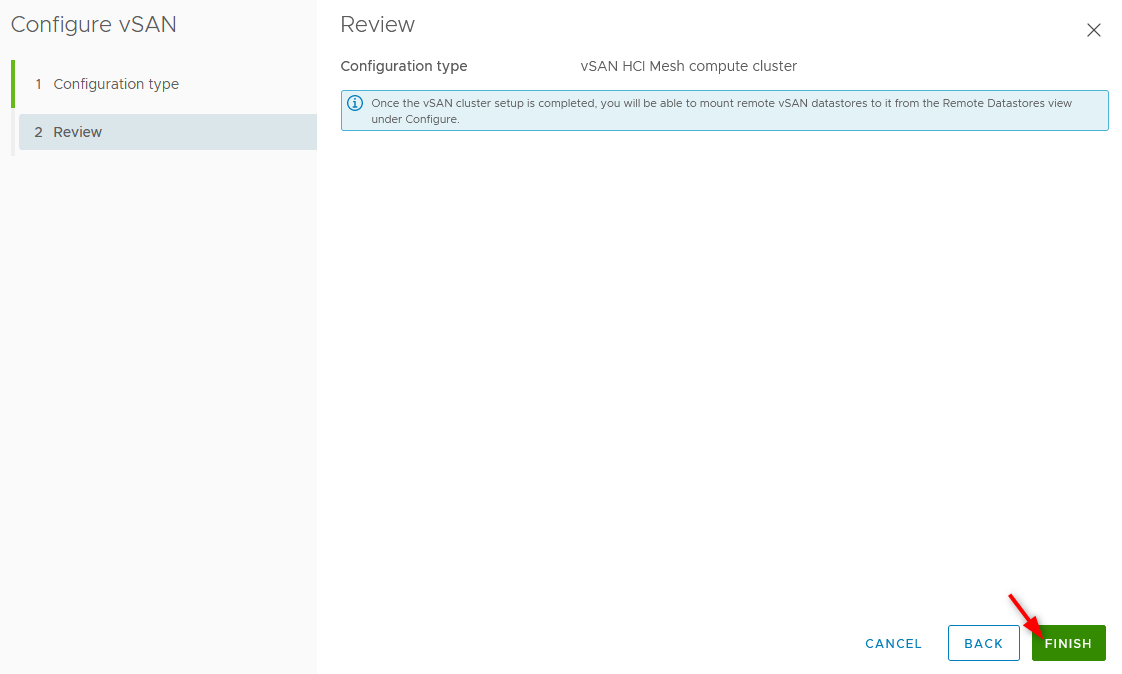

- The next window will just finish the wizard. As you can see enabling it is as easy as it gets. It essentially only enables the vSAN services on the host.

Enabling vSAN HCI Mesh Compute Cluster is a simple 2-step process

- It is now time to mount the remote datastore. No configuration is required on the target cluster. Staying in the vSAN services pane of the compute cluster, click Mount Remote Datastores.

Once enabled, remote datastores can be mounted

- Then click Mount Remote Datastore again, this time in the Remote Datastores pane.

The remote datastore pane will get you started

All compatible vSAN datastores should appear in the list. If they don’t, make sure the remote cluster is running a supported version of vSAN. As you can see below, the list contains “LAB01-Cluster”. Select it and click Next.

All compatible vSAN clusters are listed for selection

- The next page runs a series of checks to ensure the environment is suitable.

A number of requirements must be met to validate a remote mount

-

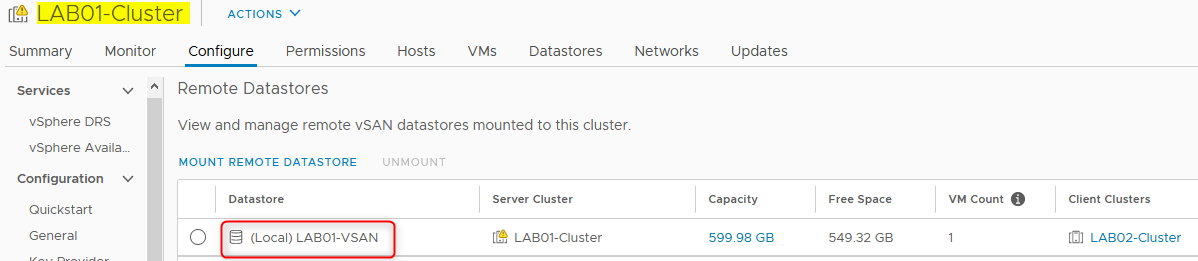

- After the process finishes, the datastore should appear in the Remote Datastores pane. As you can see, the client and server cluster appear in the list which will be useful to understand what’s going on at a glance.

The client (remote) cluster displays the list of mounted datastores

Note that this is also displayed in the vSAN configuration pane of the server cluster (LAB01), except the datastore appears as “Local”.

The server (local) cluster offers the same information

-

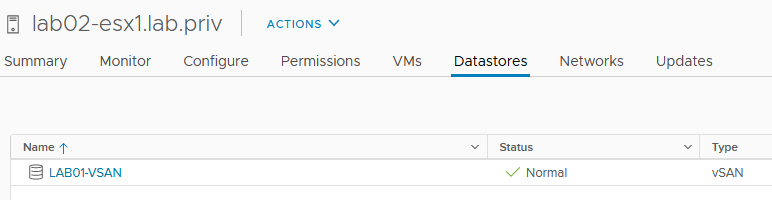

- The datastore should also appear in the datastore list of the hosts in the client cluster (“LAB02-Cluster” in our case).

Mounted datastores should appear on the client hosts

- You can then try migrating a virtual machine from the server cluster to the client cluster (remember that, in our case, the client cluster has no storage).

If the VM was already stored on the vSAN datastore, you can execute a simple vMotion to the client cluster. Note that the mounted vSAN datastore appears as “Remote” in the datastore list when performing a storage vMotion.

Mounted datastores appear as remote when moving a VM

- Once the relocation completes, the virtual machine runs on the client cluster while its vSAN objects are stored on the remote datastore.

vSAN network cluster
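The mount workflow and its limits can be summarized in a toy Python model. MeshInventory and its methods are hypothetical and unrelated to any VMware SDK. The model only captures rules described in this article:

- vSAN HCI Mesh Compute Cluster must be enabled before mounting.
- A cluster mounts at most 5 remote datastores.
- A datastore is mounted by at most 5 clusters.
- The datastore shows as Remote on the client and Local on the server.

```python
from collections import defaultdict

MAX_DATASTORES_PER_CLIENT = 5   # remote datastores one cluster can mount
MAX_CLIENTS_PER_DATASTORE = 5   # clusters that can mount one datastore


class MeshInventory:
    """Toy model of the HCI Mesh mount workflow (hypothetical, not a VMware API)."""

    def __init__(self):
        self.local = {}                  # server cluster -> its vSAN datastore
        self.compute = set()             # clusters with HCI Mesh Compute enabled
        self.mounts = defaultdict(set)   # client cluster -> mounted remote datastores

    def add_vsan_cluster(self, cluster, datastore):
        self.local[cluster] = datastore

    def enable_compute(self, cluster):
        # The wizard only enables vSAN services on the hosts; no disks are claimed.
        self.compute.add(cluster)

    def mount(self, client, datastore):
        if client not in self.compute:
            raise RuntimeError(f"{client}: enable vSAN HCI Mesh Compute Cluster first")
        if datastore not in self.local.values():
            raise LookupError(f"{datastore}: no such vSAN datastore")
        if datastore in self.mounts[client]:
            return  # already mounted, nothing to do
        if len(self.mounts[client]) >= MAX_DATASTORES_PER_CLIENT:
            raise RuntimeError(f"{client}: remote datastore limit reached")
        users = sum(datastore in dss for dss in self.mounts.values())
        if users >= MAX_CLIENTS_PER_DATASTORE:
            raise RuntimeError(f"{datastore}: client cluster limit reached")
        self.mounts[client].add(datastore)

    def view(self, cluster):
        """How the Remote Datastores pane would label each datastore."""
        labels = {ds: "Remote" for ds in self.mounts.get(cluster, ())}
        if cluster in self.local:
            labels[self.local[cluster]] = "Local"
        return labels


inv = MeshInventory()
inv.add_vsan_cluster("LAB01-Cluster", "LAB01-VSAN")
inv.enable_compute("LAB02-Cluster")
inv.mount("LAB02-Cluster", "LAB01-VSAN")
print(inv.view("LAB02-Cluster"))   # {'LAB01-VSAN': 'Remote'}
print(inv.view("LAB01-Cluster"))   # {'LAB01-VSAN': 'Local'}
```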

Policy-Based Management

Another nifty feature added alongside HCI Mesh Compute Clusters is storage rules. Like RAID levels, they are specified in the VM storage policy. They add the storage services offered by a datastore as an extra restriction on which datastores are considered compatible when the policy is applied to a VM.

Storage rules extend datastore compatibility checks to storage services
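Conceptually, this extra restriction is a subset test. A datastore stays compatible only if it offers every service the policy’s storage rules require. The Python sketch below illustrates that idea. The service labels and the second datastore “LAB03-VSAN” are hypothetical, and real policies express these rules through the storage policy wizard, not through strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Datastore:
    name: str
    services: frozenset[str]   # storage services offered (illustrative labels)


def compatible(required: set[str], datastores: list[Datastore]) -> list[str]:
    """Names of datastores offering every service the storage rules require."""
    return [ds.name for ds in datastores if required <= ds.services]


stores = [
    Datastore("LAB01-VSAN", frozenset({"encryption", "all-flash"})),
    Datastore("LAB03-VSAN", frozenset({"all-flash"})),   # hypothetical datastore
]
print(compatible({"encryption"}, stores))   # ['LAB01-VSAN']
print(compatible({"all-flash"}, stores))    # ['LAB01-VSAN', 'LAB03-VSAN']
```

An empty requirement set matches every datastore. This mirrors a policy without storage rules, where only the usual capability checks apply.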

Unmount a remote vSAN datastore

You can only unmount a remote datastore once no VM on the client cluster uses it; otherwise, the operation is not possible. This also applies to the vSphere Cluster Services (vCLS) VMs.

The client cluster must have no resource on the mounted datastore to unmount it
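Before unmounting, it helps to list what still blocks the operation. The Python sketch below works on a hypothetical, hand-built VM inventory, not on a vSphere API. It reports vCLS VMs separately because they are easy to overlook. The VM names are illustrative.

```python
from typing import NamedTuple


class VM(NamedTuple):
    name: str
    cluster: str
    datastores: tuple[str, ...]
    is_vcls: bool = False   # vSphere Cluster Services VM


def unmount_blockers(client: str, datastore: str, vms: list[VM]) -> dict[str, list[str]]:
    """VMs on the client cluster that still use the remote datastore."""
    blocking = [vm for vm in vms
                if vm.cluster == client and datastore in vm.datastores]
    return {
        "workloads": [vm.name for vm in blocking if not vm.is_vcls],
        "vcls": [vm.name for vm in blocking if vm.is_vcls],
    }


inventory = [
    VM("app01", "LAB02-Cluster", ("LAB01-VSAN",)),
    VM("vCLS (1)", "LAB02-Cluster", ("LAB01-VSAN",), is_vcls=True),
    VM("db01", "LAB01-Cluster", ("LAB01-VSAN",)),   # server-side VM: not a blocker
]
print(unmount_blockers("LAB02-Cluster", "LAB01-VSAN", inventory))
# {'workloads': ['app01'], 'vcls': ['vCLS (1)']}
```

Only VMs in the client cluster are considered. VMs running in the server cluster use the datastore locally and do not prevent the client from unmounting it.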

Failure scenarios

While very handy and flexible, such a setup raises a number of questions about availability and failure tolerance. All vSAN-related failures, such as disk and node failures, have the same consequences and call for the same actions as in a regular vSAN cluster. In this section, we cover two cases that are specific to HCI Mesh compute clusters.

Failure of the link between client and server clusters

Now, in the case of an HCI Mesh architecture, what would happen if the link that connects the hosts in the client and server clusters were to fail?

Inter-cluster link failure will result in loss of access to storage

It is recommended to configure vSphere HA with “Datastore with APD” set to either conservative or aggressive. This results in the following sequence of events:

- Failure of the link between client and server cluster.
- 60 seconds later: All Paths Down (APD) event declared, “lost access to volume …”.
- 180 seconds later: APD response triggered – Power off.

Once the APD timers expire, the response is triggered and the VM is powered off. It then appears as “inaccessible” until the link is restored.

APD event at the client cluster level: VM powered off

Failure of the vSAN link on a host in the client cluster

If a host in the client cluster loses its vSAN links, the behavior is also similar to a traditional APD, except that the delay here is 180 seconds instead of 140.

Loss of access to vSAN by a host will result in loss of storage

- Failure of the vSAN link on the host.
- 60 seconds later: All Paths Down (APD) event declared, “lost access to volume …”.
- 180 seconds later: APD response triggered – Restart VM on another host.

Once the APD response timer is reached, the virtual machine is restarted on a host that still has access to the datastore.

APD event at the client host level: VM restarted
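The two scenarios differ only in whether some host in the client cluster can still reach the datastore. The Python sketch below models that decision. It assumes HA is configured with VMCP “Datastore with APD” as recommended. The host names are hypothetical. Picking the first surviving host alphabetically is a simplification, since vSphere HA’s real placement logic is more involved. The timers are not modeled.

```python
def apd_response(vm_host: str, client_hosts: set[str], hosts_with_access: set[str]) -> str:
    """Outcome once the APD response timer expires (simplified model)."""
    if vm_host in hosts_with_access:
        return "no action"
    # HA only restarts the VM inside its own (client) cluster.
    candidates = sorted(client_hosts & hosts_with_access)
    if candidates:
        return f"restart on {candidates[0]}"
    return "power off (inaccessible until access is restored)"


client = {"esx21", "esx22"}
# Inter-cluster link down: no client host reaches the datastore.
print(apd_response("esx21", client, hosts_with_access=set()))
# Only esx21 lost its vSAN link.
print(apd_response("esx21", client, hosts_with_access={"esx22"}))
```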

Wrap-up

Since its original launch back in 2014, VMware vSAN has improved dramatically with each release and has become a major player in the hyperconvergence game. It offers a wide variety of certified architectures spanning multiple sites, ROBOs (remote office/branch office) and 2-node implementations (direct-connect or not). These make vSAN a highly versatile product that fits most environments.

vSAN HCI Mesh Compute Clusters is yet another way to leverage your existing vSAN environment and make better use of its datastores.

Deploying a vSAN cluster may not be a huge investment compared to a traditional SAN infrastructure. However, scaling it is not always financially straightforward, as additional vSAN nodes cost more than regular compute nodes. HCI Mesh Compute Clusters give SMBs and smaller environments the flexibility to present an existing vSAN datastore to up to 5 clusters!
