
Virtualization has been one of the defining turning points in modern computing. Virtualization and the technologies built on virtualized resources have changed the way businesses think about hardware, software, applications, and infrastructure. It has transformed organizations’ pace, innovation, and agility, and it is an underlying technology that makes the cloud as we know it possible. What is virtualization, and how does a hypervisor make it possible? How have modern virtualization technologies evolved from the early days of server virtualization?

A look back at the birth of virtualization

If you have been in IT for some years now, you undoubtedly remember the days when we ran everything on physical servers in a 1-to-1 fashion. We had one operating system running on one set of hardware. Application servers ran large monolithic applications on servers outfitted with “large” (for those times) quantities of RAM and extra CPU capacity.

However, even with large amounts of RAM and CPU cycles for the time, most server hardware was only powerful enough to run a single server operating system. By the early 2000s, though, server hardware was starting to outpace the requirements of operating systems and software. As a result, many began to see the inefficiency of running a single operating system on a single set of physical hardware.

In 2001, a little company called VMware released its first server hypervisor, ESX Server, and followed it with ESX Server 1.5 in 2002. With ESX Server, a new era of enterprise data center technology was about to begin. You can read about VMware’s pioneering of virtualization technology here: VMware History and Interactive Timeline.

Virtualization and IBM

Virtualization has been around since the late 1960s, when IBM built mainframe systems around a virtual machine monitor, or hypervisor. However, the world was not ready to capitalize on virtualization technology until decades later, when VMware introduced its solution. The technology debuted by VMware helped organizations realize the benefits of running multiple “virtual” servers on top of the physical hardware. It also made hardware and software vendors rethink their technologies and strategies for the upcoming years and helped put the growing power of server hardware to use.

What is virtualization?

What is this technology we refer to as virtualization? In its simplest form, virtualization allows a virtual computing system to run in a layer abstracted from the physical hardware underneath it. Virtualization technologies enable the virtual computer to run unaware of this abstraction layer. The applications and software running on top of the virtual computer are likewise unaware that they are running in a virtualized environment.

Virtualization in computing has opened up many opportunities to create IT services and software inside virtualized instances traditionally meant to be bound to physical hardware systems. This unique capability provided by virtualization in computing has been made possible by a particular type of software known as a hypervisor.

What is a hypervisor?

The software that makes virtualization in computing possible is called a hypervisor. The hypervisor creates the layer of abstraction between the virtual instance of a computer and the underlying physical hardware. It takes the physical resources of the host and divides them up so that virtual environments can use them.

The hypervisor typically divides the resources of the physical host and assigns them to virtualized resources. End-users who interact with applications running on virtual resources see no difference in behavior compared with applications running on physical resources.

An essential job of the hypervisor is dividing physical CPU resources between different virtual resources, known as CPU scheduling. For example, what happens when multiple virtual resources ask the same physical CPU to perform computational tasks simultaneously? The hypervisor is the brain of virtualization in computing: it schedules the computational work of the various virtual resources so that the physical CPUs installed in the hypervisor host can complete it effectively and efficiently.
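
To make the scheduling idea concrete, here is a minimal Python sketch of round-robin time slicing, one simple way a scheduler could share a single physical CPU among several virtual CPUs. It is an illustration only and not how any particular hypervisor is implemented; the function name `round_robin`, the `demands` mapping, and the `time_slice_ms` parameter are all hypothetical.

```python
from collections import deque


def round_robin(demands, time_slice_ms=10):
    """Simulate sharing one physical CPU among several virtual CPUs.

    demands maps a (hypothetical) vCPU name to the CPU time in ms it needs.
    Returns the ordered list of (vcpu, ms_run) slices that were granted.
    """
    queue = deque(demands.items())
    timeline = []
    while queue:
        vcpu, remaining = queue.popleft()
        ran = min(time_slice_ms, remaining)
        timeline.append((vcpu, ran))
        if remaining > ran:
            # Not finished yet: rejoin the back of the queue.
            queue.append((vcpu, remaining - ran))
    return timeline


if __name__ == "__main__":
    for vcpu, ran in round_robin({"vm-a": 25, "vm-b": 10, "vm-c": 15}):
        print(f"{vcpu} ran for {ran} ms")
```

Each virtual CPU gets a short turn on the physical CPU and then yields to the next one, so all three make progress even though only one runs at any instant. Production schedulers add priorities, multiple cores, and many other refinements on top of this basic idea.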

Running virtual machines on top of a hypervisor was the first wave of virtualization in computing technology used in the enterprise data center in the early 2000s with VMware and other hypervisors. Virtual machines and their hard disks exist as simple files accessed by the hypervisor host. This architecture helped to abstract the physical hard disk from the concept of having virtual hard disks. In addition, it makes moving virtual machines between one hypervisor host and another much easier. The hypervisor abstraction and representation of a virtual machine by simple file constructs allowed virtualization to wow us with capabilities like moving and running virtual machines between one host and another without downtime.

Hypervisor types

There are two types of hypervisors used today – type 1 and type 2 hypervisors. What is the difference between type 1 and type 2 hypervisors? A type 1 hypervisor is used in the enterprise data center running today’s business-critical workloads. Examples include VMware vSphere and Microsoft Hyper-V. A type 1 hypervisor runs directly on the physical hardware as the operating system and offers the most efficient abstraction layer for virtual resources.

Type 2 hypervisors are generally used as client virtualization solutions that run on top of client operating systems. A type 2 hypervisor runs as an application inside an operating system rather than as the operating system itself. Examples of type 2 hypervisors include VMware Workstation and VirtualBox. End-users typically use type 2 hypervisors to run other operating systems, development environments, or sandboxed workstations for browsing the Internet.

Benefits of virtualization

We have already mentioned a few of the benefits of virtualization in computing. However, let’s key in on these a bit further. Virtualization in computing has provided a way for organizations to efficiently use the hardware resources available in modern server hardware. Modern servers are extremely powerful, with multi-core CPUs now packing over 100 cores. Thinking about running a single physical workload on top of a physical host with such hardware resources now seems like an odd concept.

Virtualization now allows organizations to realize the potential of such massive hardware resources fully and to use these resources as efficiently as possible. It is not uncommon to see a single physical server running as many as 40-50 virtual machines. Note the following additional areas where virtualization in computing has led to tremendous benefits:

- Availability

- Performance

- Disaster recovery

Availability

Running workloads in a modern virtualized environment helps ensure the workloads’ availability. As mentioned earlier, virtual machines are represented as files on the hypervisor host. With this architecture and shared storage between the hypervisor hosts, the virtual machines can be restarted on a healthy host when a hypervisor host goes down.
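
The restart behavior described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the logic of any real hypervisor: the function `restart_failed_vms`, the host and VM names, and the "most free memory first" placement rule are all hypothetical choices made for illustration.

```python
def restart_failed_vms(placement, failed_host, free_memory_gb, vm_memory_gb):
    """Return a new VM-to-host placement after failed_host goes down.

    placement:      dict of vm name -> host name
    free_memory_gb: dict of host name -> free memory in GB
    vm_memory_gb:   dict of vm name -> memory the VM needs in GB
    VMs that fit nowhere are mapped to None (they stay down).
    """
    # Only healthy hosts are candidates for restarts.
    free = {h: m for h, m in free_memory_gb.items() if h != failed_host}
    new_placement = dict(placement)
    for vm, host in sorted(placement.items()):
        if host != failed_host:
            continue
        candidates = [h for h in free if free[h] >= vm_memory_gb[vm]]
        if not candidates:
            new_placement[vm] = None
            continue
        # Assumed rule: pick the healthy host with the most free memory.
        target = max(candidates, key=lambda h: (free[h], h))
        free[target] -= vm_memory_gb[vm]
        new_placement[vm] = target
    return new_placement
```

Because the virtual machine files live on shared storage, the surviving hosts in this model only need enough free capacity to power the affected virtual machines back on; no data has to be copied first.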

Virtualization in computing has also led to massive benefits from an operational and management perspective. For example, IT admins can gracefully migrate virtual machines from one host to another without downtime to perform maintenance on the physical hypervisor host. This capability would have never been possible before virtualization in computing made its way into the enterprise data center.

Performance

Modern hypervisors running on current server hardware can also provide tremendous performance benefits. The schedulers found in modern hypervisors are so efficient that the overhead of virtualization is often negligible, and some workloads can run as well as, or occasionally better than, they would directly on the physical hardware.

Virtualization in computing using hypervisor technologies has also allowed organizations to define their performance SLAs in software. Businesses can use resource scheduling and prioritization technologies to determine which workloads receive the highest priority or the largest share of physical resources on the hypervisor host.
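
As a rough illustration of share-based prioritization, the Python sketch below splits contended CPU capacity in proportion to a per-workload share value. The function `allocate_by_shares`, the workload names, and the numbers are hypothetical; real hypervisors combine shares with reservations, limits, and actual demand.

```python
def allocate_by_shares(capacity_mhz, shares):
    """Split contended CPU capacity in proportion to each workload's shares.

    capacity_mhz: total CPU capacity being contended for
    shares:       dict of workload name -> relative share value
    """
    total = sum(shares.values())
    if total == 0:
        return {name: 0.0 for name in shares}
    return {name: capacity_mhz * value / total for name, value in shares.items()}


if __name__ == "__main__":
    print(allocate_by_shares(8000, {"prod-db": 2000, "web": 1000, "test": 1000}))
```

In this example the production database holds half of all shares, so it is entitled to half of the capacity whenever the host is fully contended.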

Disaster recovery

The capabilities now afforded by virtualized resources have taken disaster recovery technologies to the next level, allowing businesses to perform backups more often and efficiently and replicate entire workloads to completely different locations and data centers.

Modern backup solutions interact with today’s hypervisors to protect virtual machines running in virtualized environments and allow the creation of backups that capture only the changed blocks of the virtual workload. This capability enables data protection to happen more frequently, in some cases continuously, while taking as little disk space as possible.
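
The idea of backing up only changed blocks can be sketched in Python as follows. Note the swapped-in technique: hypervisors typically track writes as they happen, whereas this sketch detects changes by comparing block hashes against the previous backup, which is simpler to show. The names `block_hashes` and `changed_blocks` and the tiny `BLOCK_SIZE` are assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4  # deliberately tiny so the example is easy to follow


def block_hashes(data, block_size=BLOCK_SIZE):
    """Return one SHA-256 digest per fixed-size block of data."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


def changed_blocks(previous_hashes, data, block_size=BLOCK_SIZE):
    """Return {block_index: block_bytes} for blocks that are new or modified."""
    changed = {}
    for index, digest in enumerate(block_hashes(data, block_size)):
        if index >= len(previous_hashes) or previous_hashes[index] != digest:
            start = index * block_size
            changed[index] = data[start:start + block_size]
    return changed


if __name__ == "__main__":
    disk_v1 = b"AAAABBBBCCCC"
    disk_v2 = b"AAAAXXXXCCCCDDDD"
    print(changed_blocks(block_hashes(disk_v1), disk_v2))
```

Only the modified second block and the newly added fourth block would need to be written to the incremental backup; the unchanged blocks are skipped.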

Businesses can also replicate virtual machines from their primary data center to a secondary data center or site, allowing site-level protection and quick failover when a significant disaster takes down the primary production data center. Aligning disaster recovery strategies with the 3-2-1 backup best practice has been made much easier by virtualization in computing, with its abstracted use of physical resources.

Types of virtualization

Server virtualization, which runs virtual instances of operating systems (virtual machines), is typically the type of virtualization that first comes to mind, but there are other types. These include the following:

- Application virtualization – Application virtualization, as provided by Microsoft Terminal Services and others, allows running applications that behave as if they are installed locally but are actually running on a backend server.

- Network virtualization – Network virtualization creates an overlay network on top of the physical underlay and allows running or stretching subnets between locations. It enables organizations to quickly provision new networks in the software layer, which was not possible before.

- Network function virtualization (NFV) – Network function virtualization (NFV) refers to the granular virtualization of network devices, such as routers, firewalls, and load balancers, as virtual machines. NFV allows running virtual network functions without the need for proprietary hardware.

- Storage virtualization – Storage virtualization is a technology that has fueled the movement to hyperconverged infrastructure (HCI). Storage virtualization allows aggregating locally attached storage devices on each virtual host and presenting these as a logical storage volume to run workloads. It provides many benefits, such as software-defined performance and availability capabilities. In addition, businesses can define storage policies to control the various storage characteristics.

CPU virtualization and GPU virtualization

CPU virtualization is another type of virtualization technology that brings many benefits to modern virtualized workloads. CPU virtualization enhances the performance of virtualized workloads. Physical resources are used whenever possible. Virtualized resources and instructions are only used when needed for virtual workloads to operate as if they are running on physical hardware. Today’s modern hypervisors are designed to work in harmony with the CPU virtualization feature found in current AMD and Intel CPUs.

Intel’s technology is Intel Virtualization Technology (Intel VT), and AMD CPUs implement the virtualization technology called AMD-V. Despite the different terminology, the technologies help achieve the same types of efficiencies and benefits. CPU virtualization is a feature that is generally enabled or disabled in the BIOS settings.
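
On an x86 Linux system, one way to see whether the CPU advertises these features is to look at the `flags` line in `/proc/cpuinfo`, where `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The Python sketch below parses text in that format; the function name `hardware_virtualization` is hypothetical, and a reported flag shows CPU support without proving that the feature is enabled in the firmware settings.

```python
def hardware_virtualization(cpuinfo_text):
    """Return 'Intel VT-x', 'AMD-V', or None for /proc/cpuinfo-style text.

    Assumes the x86 Linux format, where each processor entry has a
    'flags' line listing space-separated CPU feature flags.
    """
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())
            if "vmx" in flags:
                return "Intel VT-x"
            if "svm" in flags:
                return "AMD-V"
    return None
```

To try it on a Linux host, read the file and pass its contents in, for example `hardware_virtualization(open("/proc/cpuinfo").read())`.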

When CPUs offer hardware assistance for CPU virtualization, the guest virtual machine can use a separate mode of execution called guest mode. The guest code, including application-specific or privileged code, is executed in guest mode. When you use hardware-assisted virtualization, system calls and other workloads can run very close to the native speed, as if they were running on physical hardware. As a result, hardware-assisted CPU virtualization can drastically speed up performance.

GPU virtualization

Many organizations increasingly use virtual desktop infrastructure (VDI) to power mission-critical desktop workloads. Modern GPUs and graphics cards are now installed in hypervisor hosts to accelerate graphics-intensive applications running in virtual machines, for desktop virtualization and other use cases.

For example, NVIDIA virtual GPU technology provides robust GPU resources and performance enhancements to use virtual machines as graphics workstations. This virtual GPU technology is also being used as a central component of today’s data science and artificial intelligence (AI) applications.

NVIDIA exposes the virtual GPU technology with specialized software that allows the creation of multiple virtual GPUs shared across multiple virtual machines that multiple users and devices can access from any location. Furthermore, with the performance enhancements of virtual GPU resources exposed to virtual machines, the graphics performance of virtual machines with virtual GPUs is virtually indistinguishable from a bare-metal workload.

Virtualization vs. containerization

With the onset of the cloud era in recent years, we have seen a shift in the virtualization technology businesses use to modernize their applications. Modern applications are making use of something called microservices. Microservices take larger monolithic applications and divide these into much smaller and modularized components.

This approach provides many benefits and advantages over traditional monolithic applications, including more aggressive release cycles, enhancements, software updates, and other advantages. So what infrastructure allows this shift to modern microservices? Containers. Containerization has been the hottest topic on the minds of organizations looking to transition their applications to much more cloud-native architectures with the ability to scale.

What are containers? They are self-contained software packages that can run on top of container runtime environments like Docker. They provide many advantages over virtual machines when it comes to running applications. They are very lightweight and small in size, and they contain all the software dependencies as part of the container itself. This means you can run a container on one container host and then run it on another container host without worrying about the underlying software requirements, as these are included.

You may see various debates or topics considering virtualization vs. containerization. The term virtualization is more closely aligned with the traditional virtual machine found in on-premises data centers for the past few decades. Virtual machines have typically been the platform that organizations have used to run the more traditional monolithic applications located in the enterprise data center.

However, as organizations look to transition to modern applications and cloud-native environments, virtual machines do not play nicely with the idea of microservices due to their size, complexity, and overall footprint. Instead, microservices need to run on infrastructure that is nimble, agile, small in size, easy to provision, and able to scale elastically, up or down.

In addition, containers are behind the movement to a more modern style of development processes known as continuous integration and continuous delivery (CI/CD). With current development pipelines powered by containerized processes, developers can continually develop code with many releases that automatically get integrated and built into the overall application.

Gone are the days when code was only released every 3-6 months in large, major updates to an application. With the new microservices concept and development processes, applications can get updated frequently and allow a much more aggressive release cycle.

Will containers replace virtual machines altogether?

One might think containers are the death knell for virtual machines. However, this is not necessarily the case, and arguably, there will always be a place for virtual machines. After all, containers have to have a container host to run on, and a virtual machine often services this on the backend.

Also, microservices do not solve all problems. There will always be the need for more monolithic applications for various use cases. Virtual machines running entire operating systems are well suited for general workloads and heterogeneous environments. Containers share the OS kernel, so while they can be moved easily from one container host to another, the container hosts need to run the same operating system. For example, Linux containers can’t run natively on a Windows host, and Windows containers can’t run on a Linux host. Running multiple operating systems in an environment will require running virtual machines installed with those various operating systems.

As with most technologies, no one technology is the end-all-be-all. Instead, containers are a tool that serves a specific purpose exceptionally well. Virtual machines also have a well-defined use case that will likely never disappear completely.

Kubernetes

We can’t discuss containers without mentioning the 800-pound gorilla in the room – Kubernetes. Containers are fantastic in what they allow developers and IT Ops to accomplish in the software development lifecycle. However, they have a shortcoming of their own: they are not managed or orchestrated by default. So what happens when you spin up a container on a container host and that container host fails? What if you have a sudden burst of traffic that saturates the single container you have serving your web frontend?

All of these questions can plague an organization moving to containerized workloads. This is where Kubernetes comes into play. Kubernetes is a container orchestration platform that allows organizations to solve many of the problems of running containerized workloads. Note what Kubernetes can do:

- Service discovery and load balancing

- Storage orchestration

- Deployment automation

- Self-healing

- Secrets management and configuration management

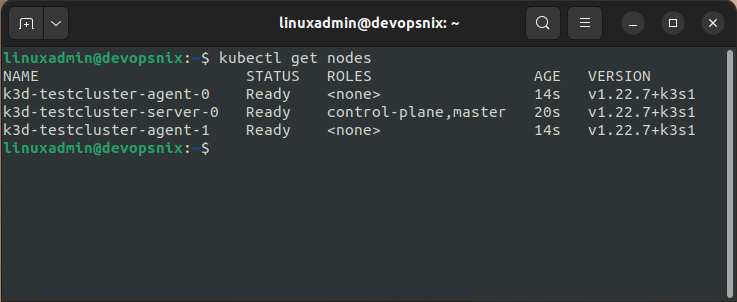

Viewing Kubernetes nodes running in a Kubernetes cluster

While Kubernetes is not a hypervisor, it brings about many of the management benefits we see in an enterprise hypervisor solution that provides high availability, scheduling, and other benefits. Kubernetes helps to solve many of these challenges at the container level, managing the availability and scaling of the solution to handle traffic, the loss of a container host, and many other scenarios.
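
The self-healing and scaling behavior described above comes down to repeatedly comparing the desired state with the actual state and correcting any difference. The Python sketch below models one pass of such a loop. It does not use the Kubernetes API, and the function `reconcile`, the `web-N` replica names, and the least-loaded placement rule are hypothetical simplifications.

```python
def reconcile(desired_replicas, running, healthy_nodes):
    """One pass of a simplified control loop for a single workload.

    running:       dict of replica name -> node it currently runs on
    healthy_nodes: set of node names that are still available
    Returns the corrected replica-to-node mapping.
    """
    # Drop replicas that were running on nodes that are no longer healthy.
    state = {name: node for name, node in running.items() if node in healthy_nodes}
    if not healthy_nodes:
        return state
    nodes = sorted(healthy_nodes)
    counter = 0
    # Scale up: create replacements until the desired count is reached.
    while len(state) < desired_replicas:
        name = f"web-{counter}"
        counter += 1
        if name in state:
            continue
        load = {node: 0 for node in nodes}
        for node in state.values():
            load[node] += 1
        # Assumed rule: place the new replica on the least-loaded node.
        state[name] = min(nodes, key=lambda n: (load[n], n))
    # Scale down: remove surplus replicas if there are too many.
    surplus = max(0, len(state) - desired_replicas)
    for name in sorted(state, reverse=True)[:surplus]:
        del state[name]
    return state
```

If a node fails, the next pass notices that fewer replicas are running than desired and schedules replacements on the remaining nodes, with no administrator involved. Raising or lowering `desired_replicas` handles a traffic burst in the same way.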

VMware and Hyper-V

If you have a conversation about virtualization in computing, you cannot do so without mentioning or involving names like VMware and Hyper-V. This is because VMware and Hyper-V are two of the primary hypervisors used in today’s enterprise virtualization environments.

VMware vSphere has been a wildly popular solution to run enterprise virtual machines with the ESXi hypervisor and vCenter Server management. VMware’s own figures show that more than 70 million workloads run in VMware vSphere in the enterprise data center. In addition, many enterprise customers are running traditional virtual machines on top of the vSphere platform. VMware vSphere is known for its ease of management using the centralized vCenter Server management console. VMware has also created a rich ecosystem of additional solutions integrated with vSphere. These include but are not limited to the following:

- VMware NSX-T for Data Center – VMware’s current software-defined networking solution allows customers to provision and manage virtual networking in software

- VMware vSAN – VMware vSAN provides robust software-defined storage

- VMware Horizon – VMware’s virtual desktop infrastructure solution able to deliver dedicated and dynamic virtual desktop resources to end-users

- VMware vRealize Operations – A management and analytics suite of tools offering robust monitoring capabilities for VMware vSphere and public cloud environments

- VMware vRealize Automation – VMware’s automation suite that provides automated blueprints for provisioning and building out virtual resources

- VMware Cloud Foundation (VCF) – VMware Cloud Foundation is VMware’s SDDC cloud suite of products that delivers a cloud-like solution to customers running infrastructure both on-premises and in the cloud

- VMware Tanzu Kubernetes – VMware Tanzu embodies VMware’s portfolio of products supporting the building, configuration, deployment, security, and lifecycle management of modern containerized environments

VMware vSphere provides rich features and capabilities for running virtual machines in the enterprise data center, such as:

- VMware High Availability (HA) – With VMware HA, customers can ensure virtual machines running on a failed host are restarted on a healthy host.

- VMware Distributed Resource Scheduler (DRS) – VMware DRS ensures workloads are running efficiently in the vSphere cluster. DRS handles the placement of new workloads in the cluster to help ensure resources are used efficiently.

- vMotion – VMware vMotion was a capability that wowed us when we first saw virtualization. It allows moving workloads between physical hypervisor hosts while they are running.

- Storage vMotion – Storage vMotion allows moving virtual machine storage between different datastores in the vSphere environment.

With the release of VMware vSphere 7.0, vSphere has evolved to include native Kubernetes in the ESXi hypervisor itself. The vSphere with Tanzu solution allows ESXi to run a supervisor cluster using the ESXi hosts themselves. The Kubernetes guest clusters are spun up inside special-purpose virtual machines running in the environment. With this evolution of vSphere, running Kubernetes-powered containerized environments is no longer a bolt-on solution but instead is native to vSphere.

VMware vSphere client

vSphere with Tanzu allows customers to run both traditional workloads and modern containerized workloads side-by-side without running containers in a different environment. It also allows IT admins to use the same tools and operational procedures to manage both types of workloads without additional training, skillsets, or other added complexities.

Microsoft Hyper-V is still a major player in the enterprise data center, with many customers running virtual machines on Hyper-V. While Microsoft had a lot of ground to make up compared to VMware in the enterprise data center running production virtual machines, they have closed a lot of the gap between the two solutions. Hyper-V has many of the same capabilities as VMware vSphere in running production virtual machines, including availability and resource scheduling.

Microsoft Hyper-V Manager

The writing is on the wall for on-premises technologies, including Hyper-V: Microsoft wants you to manage, configure, and license them from the cloud. The future of Hyper-V is with Microsoft’s new Azure Stack HCI solution. Azure Stack HCI allows running HCI clusters on-premises while managing them from the Azure cloud. Azure Stack HCI is a new hyperconverged infrastructure (HCI) operating system that is specially designed to run on-premises virtualized workloads.

Azure Stack HCI and Azure Arc show Microsoft still sees the use case for on-premises technologies running customer workloads but having the benefits of a single cloud management plane. For example, using Azure Arc-enabled Kubernetes clusters, Microsoft is allowing customers to realize the advantages of running modern workloads on Kubernetes while receiving many of the benefits of Azure Kubernetes, such as Azure functions that can run on-premises.

The Hyper-V role is still found in Windows Server 2022 as a role service that IT admins can add to Windows Server. For now, Microsoft is still including Hyper-V functionality in Windows Server itself. It will be interesting to see what future versions of Windows Server offer in terms of virtualization.

Final Notes

Virtualization in computing has arguably been one of the most significant advancements in the enterprise data center in the past few decades. It has allowed businesses to move faster, make better use of resources, and have more resiliency, high availability, and disaster recovery capabilities. However, while virtualization has been around since the late 60s, it wasn’t until the early 2000s that the first wave of virtualization in computing took off in the enterprise data center.

When the first version of VMware ESX came onto the scene, it opened a whole new world to businesses that had been running business-critical workloads on physical servers, with all the complexities that entailed. The first years of virtualization 1.0 included the phase where companies migrated from physical to virtual workloads for business-critical applications.

Today, organizations are modernizing their applications with microservice architectures built on top of containers. Containers are the next virtualization wave powering this application modernization movement. Containers are self-contained bundles of code that have all the requirements contained as part of the container. Organizations are now using containers to architect modern applications using microservices. This is leading to a transformation of how businesses are building out their infrastructure. Today, Kubernetes is the de facto standard container orchestration platform, allowing companies to run highly available, resilient, and scalable containers.

Even on-premises technologies are becoming more cloud-integrated and cloud-native. Traditionally on-premises hypervisor technologies like Hyper-V are becoming more cloud-aware and cloud-exposed. Microsoft is showing where its mindset is in terms of on-premises virtualization technologies, as Azure Stack HCI is an on-premises HCI solution managed from the Microsoft Azure cloud. Microsoft’s change in management and architecture follows an industry-wide trend among vendors developing hybrid cloud solutions. These will increasingly be cloud-managed.
