

Hyper-V’s virtual switch is a stumbling block for newcomers on a good day, so it’s certainly no picnic when something goes wrong. This series will arm you with tips, tricks, and tools to solve your dilemma. Part one will discuss a functional but ailing switch. Part two will help you with a completely broken switch.

This post series assumes you have a solid fundamental understanding of the Hyper-V virtual switch. If you do not, please start with our introductory material on the subject.

Troubleshooting a Poorly Performing Switch

So, you made your switch, but performance isn’t right? Before you do anything, make sure you are testing the problem appropriately. Most of the problem reports I receive are based on a file copy. That’s like citing Wikipedia: it’s a good starting point, but you can’t quote it as a reliable source if you want to be taken seriously. File copy is not a professional network testing tool. If you can’t afford professional network testing software, use iPerf. If you don’t want to go through all the drama of compiling iPerf, then get JPerf. JPerf has a Java-based GUI, but it also comes bundled with a Windows-compiled version of iPerf that you can use from the command line.
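A minimal iPerf run might look like the sketch below. It assumes the iperf.exe bundled with JPerf (an iPerf 2-era build) sits in the current directory on both machines, and 192.168.1.50 is a placeholder for the address of whichever machine plays the server role.

```powershell
# On the receiving machine (for example, a VM attached to the virtual switch),
# start iPerf in server mode. iPerf 2 listens on TCP port 5001 by default,
# so allow that port through the firewall if necessary.
.\iperf.exe -s

# On the sending machine, run a 30-second test (-t 30) with four parallel
# TCP streams (-P 4), printing interim results every 5 seconds (-i 5).
# 192.168.1.50 is a placeholder; use your own server's address.
.\iperf.exe -c 192.168.1.50 -t 30 -P 4 -i 5
```

Repeating the same test host-to-VM, VM-to-VM, and VM-to-physical machine helps narrow down which path is actually slow; swapping the client and server roles tests the other direction.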

Culprit #1: Bad or Misconfigured Cables and Teams

I have lost track of the number of times that problems with a virtual switch (or any network connection, really) were traced back to something as elementary as a bad Ethernet cable. A former coworker of mine had a sticky note on his monitor with three words: “Check Cables First”. Fewer than 2% of all reported computer issues are hardware-related, but easily half of those have something to do with a wire. Cabling is especially an issue if you’re using teamed adapters, because failure detection in a team is surprisingly poor. Use LACP when you can, but even LACP sometimes gets fooled by a broken pair. What I’ve noticed with a bad cable in a team is that connections alternate between perfect and pathetic. That makes sense: some traffic is assigned to the good lines while the rest gets lost on the bad one. If you’re using the Hyper-V Ports load-balancing algorithm and there’s a bad wire, some virtual adapters won’t be able to send at all, and which adapters are affected will change at random as their VMs are restarted. For the other load-balancing types, connection drops will be intermittent.
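If you suspect a bad cable, the adapters’ error counters are a cheap first check. This sketch assumes Windows Server 2012 or later (for Get-NetAdapterStatistics). A physical-layer fault often, though not always, shows up as climbing error counts on the affected port. The counters are cumulative, so run it twice a few minutes apart and look for numbers that are still growing.

```powershell
# List adapters that have recorded any receive or send errors.
# These counters accumulate from the time the adapter last started.
Get-NetAdapterStatistics |
    Where-Object { $_.ReceivedPacketErrors -gt 0 -or $_.OutboundPacketErrors -gt 0 } |
    Format-Table Name, ReceivedPacketErrors, OutboundPacketErrors -AutoSize
```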

Misconfigured teams will also lead to issues. An LACP team will automatically stop using a misconfigured port pair, but the other team types will not. For instance, if you create a static team by connecting four ports on your host to four ports on your switch but you configure the wrong four switch ports as members of that team, both sides will tell you that the team is functional. The only criterion either side uses to judge a team member is whether there’s a live device on the other end.

Switches vary greatly in configuration and troubleshooting, so I have to refer you to your manufacturer for help there. If you’re using manufacturer teaming software, same story. If you’re using Windows Server 2012 native teaming, PowerShell will help you out. The two cmdlets you want are Get-NetLbfoTeam and Get-NetLbfoTeamMember. Here’s output from one of my systems for reference:

PS C:\> Get-NetLbfoTeam

Name : vTeam

Members : {PTR, PTL, PBL, PBR}

TeamNics : vTeam

TeamingMode : Lacp

LoadBalancingAlgorithm : TransportPorts

Status : Up

PS C:\> Get-NetLbfoTeamMember -Team vTeam

Name : PTR

InterfaceDescription : Realtek PCIe GBE Family Controller #3

Team : vTeam

AdministrativeMode : Active

OperationalStatus : Active

TransmitLinkSpeed(Gbps) : 1

ReceiveLinkSpeed(Gbps) : 1

FailureReason : NoFailure

Name : PTL

InterfaceDescription : Realtek PCIe GBE Family Controller #4

Team : vTeam

AdministrativeMode : Active

OperationalStatus : Active

TransmitLinkSpeed(Gbps) : 1

ReceiveLinkSpeed(Gbps) : 1

FailureReason : NoFailure

Name : PBL

InterfaceDescription : Realtek PCIe GBE Family Controller #2

Team : vTeam

AdministrativeMode : Active

OperationalStatus : Active

TransmitLinkSpeed(Gbps) : 1

ReceiveLinkSpeed(Gbps) : 1

FailureReason : NoFailure

Name : PBR

InterfaceDescription : Realtek PCIe GBE Family Controller

Team : vTeam

AdministrativeMode : Active

OperationalStatus : Active

TransmitLinkSpeed(Gbps) : 1

ReceiveLinkSpeed(Gbps) : 1

FailureReason : NoFailure

If there is a problem in the team, Get-NetLbfoTeam will show a Status of Degraded, and Get-NetLbfoTeamMember can show you which adapters are encountering the problem. Remember that for the static and switch-independent teaming modes, the only thing Windows checks is that the other side of the line has a responding device. Because I’m using LACP, if I were to misconfigure the LACP team on the switch side, the affected ports would be flagged with an LACP Negotiation Issue. Beyond ensuring that all the ports are configured in the same team and that all the cables are OK, there’s not much else to troubleshoot for that issue. Some switches are very particular about their LACP teams, so start with their documentation and support. You may not be able to get Windows Server to successfully create an LACP team on every switch marked as LACP-capable.
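On a host with several teams, a small filter saves reading through every member. The function below is a hypothetical helper, not a built-in cmdlet. It assumes the OperationalStatus and FailureReason properties render as names like those in the output above (Active, NoFailure, and so on). A member deliberately configured for standby may report an OperationalStatus of Standby, so the sketch only flags members that have failed or that report a failure reason.

```powershell
# Hypothetical helper (not part of the NetLbfo module): passes through any
# team member whose OperationalStatus is Failed or whose FailureReason is
# anything other than NoFailure.
function Get-UnhealthyTeamMember {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        $Member
    )
    process {
        # Compare as strings so this works whether the properties arrive
        # as enum values or as plain text.
        $status = "$($Member.OperationalStatus)"
        $reason = "$($Member.FailureReason)"
        if ($status -eq 'Failed' -or $reason -ne 'NoFailure') {
            $Member
        }
    }
}

# Example use on a Windows Server 2012 host:
# Get-NetLbfoTeamMember | Get-UnhealthyTeamMember |
#     Format-Table Name, Team, OperationalStatus, FailureReason
# Get-NetLbfoTeam | Where-Object { "$($_.Status)" -ne 'Up' }
```

Because the helper only reads two properties, it works on any objects shaped like team members, which makes it easy to try out before pointing it at a production host.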

Culprit #2: Almost-Implemented Features

So, the feature set on your network adapter says it’s VMQ-capable. So you enable VMQ. And your performance is terrible. Ah, how fondly I recall setting VMQ on an Intel something-or-other only to put my host into a blue-screen loop. I think the really big showstoppers like that have all been resolved, but many lingering “It almost works” problems continue. The number two way to deal with poor virtual switch performance is to disable absolutely every single advanced feature, see how it works, and then turn them on one at a time until you find your culprit (if you want to know what number one is, that’s a sign that you haven’t been reading very closely). In case the subtle hints haven’t been working, the feature that’s causing your problem is probably VMQ.

Disabling VMQ is probably the easiest thing you’ll do this week:

Disable-NetAdapterVmq -Name "Ethernet 45"

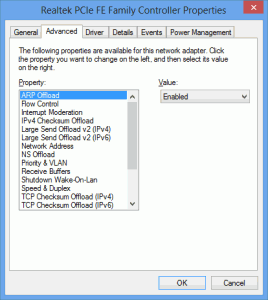

Of course, you’ll need to substitute the actual name of your adapter for the value of the “Name” parameter, since your system isn’t cool enough to have 45 adapters like the fictitious system I wrote that line with. You can also use nifty PowerShell tricks like the pipeline and wildcards to change multiple adapters at once, if you like. Anyway, that’s really all there is to it. If your host runs a version of Windows Server earlier than 2012, it’s a little tougher, but not much. If it has a GUI, go into the adapter’s property screen (where you change TCP/IP settings), click the “Configure” button at the top, and then switch to the “Advanced” tab. There you shall find the settings you seek.
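For Windows Server 2012 hosts, the pipeline-and-wildcard approach might look like the sketch below. The “Ethernet*” pattern and the “Ethernet 45” name are only examples, so check the names that Get-NetAdapterVmq reports on your host before running the Disable line.

```powershell
# Review which adapters expose VMQ and whether it is currently enabled.
Get-NetAdapterVmq | Format-Table Name, InterfaceDescription, Enabled -AutoSize

# Disable VMQ on every adapter whose name matches the wildcard pattern.
Disable-NetAdapterVmq -Name "Ethernet*"

# Re-enable it on a single adapter later, once you've finished testing.
Enable-NetAdapterVmq -Name "Ethernet 45"
```

Running Get-NetAdapterVmq again after the change should show Enabled as False for the matched adapters.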

If you don’t have a GUI, or don’t like the GUI, type the following at a command prompt:

netsh interface ipv4 show interface

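From the output, get the index number for the adapter(s) you want to modify. Open regedit.exe and navigate to HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\{4d36e972-e325-11ce-bfc1-08002be10318}. When you expand that, you’ll find a bunch of numbered subkeys (0000, 0001, and so on). These are your network adapters. Click on the subkey that corresponds to the index number you got from the netsh command and look in its value list for something like “*VMQ” or “*Virtual Machine Queue”. Before you change anything, confirm that the subkey’s DriverDesc value matches the adapter you intend to modify, since the subkey numbers are not guaranteed to match the interface index. Set the VMQ value to zero to disable it.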
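If PowerShell 2.0 or later is available on the older host, the registry provider can do the hunting for you. This sketch lists every numbered adapter subkey that has a *VMQ value, along with its DriverDesc, so you can match subkey to adapter. The Set-ItemProperty line is commented out on purpose so that running the block only reports, and “0007” is a placeholder subkey.

```powershell
# Network adapter class key (the GUID is the standard "Net" device class).
$classKey = 'HKLM:\SYSTEM\CurrentControlSet\Control\Class\{4d36e972-e325-11ce-bfc1-08002be10318}'

# Report each numbered subkey that has a *VMQ value, with its description.
# Some subkeys (such as "Properties") deny access, so errors are suppressed.
Get-ChildItem -Path $classKey -ErrorAction SilentlyContinue |
    Where-Object { $_.PSChildName -match '^\d{4}$' } |
    ForEach-Object {
        $props = Get-ItemProperty -Path $_.PSPath
        if ($null -ne $props.'*VMQ') {
            New-Object PSObject -Property @{
                Subkey     = $_.PSChildName
                DriverDesc = $props.DriverDesc
                VMQ        = $props.'*VMQ'
            }
        }
    } |
    Format-Table Subkey, DriverDesc, VMQ -AutoSize

# After confirming the right subkey, disable VMQ on it ("0007" is a placeholder):
# Set-ItemProperty -Path "$classKey\0007" -Name '*VMQ' -Value '0'
```

Drivers typically read this value when the adapter starts, so expect to disable and re-enable the adapter (or reboot) before the change takes effect.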

Now, I have the pleasure of reporting a nasty little oops! that I discovered. If you’ve got budget adapters (like the ones in our sub-$2000 server design) and an adapter team, you definitely want to keep reading. When you create a load-balancing/failover team of network adapters in Windows Server 2012, it makes a virtual adapter for the team. This is pretty standard stuff. That virtual adapter supports VMQ. Cool! It’s enabled even when the underlying hardware doesn’t support VMQ. Not cool! When I first built the system, I didn’t notice the issue. I had originally set the team to use an Address Hash load-balancing algorithm. I flipped it over to Hyper-V Ports to test something, and the performance went straight into the toilet. TCP streams were heavily delayed and UDP traffic dropped about 75% of all packets. Simple tasks like NSLOOKUP against a virtualized DNS server were all but impossible to complete. All I had to do was disable VMQ on the team adapter, and all was well again.
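A sketch for checking a host for this situation, assuming Windows Server 2012 native teaming: it lists the VMQ state on each team NIC and on that team’s physical members. Adapters without VMQ support generally don’t appear in Get-NetAdapterVmq output (the resulting errors are suppressed here), so a team NIC showing Enabled as True above members that are missing from the second table matches what I ran into. The Disable line is commented out so the block only reports, and “vTeam” is the team name from my own output earlier, so yours will differ.

```powershell
foreach ($team in Get-NetLbfoTeam) {
    "Team: $($team.Name)"

    # VMQ state on the team's virtual adapter(s).
    Get-NetAdapterVmq -Name $team.TeamNics -ErrorAction SilentlyContinue |
        Format-Table Name, Enabled -AutoSize

    # VMQ state on the physical members; members without VMQ support
    # are expected to be absent from this listing.
    $memberNames = (Get-NetLbfoTeamMember -Team $team.Name).Name
    Get-NetAdapterVmq -Name $memberNames -ErrorAction SilentlyContinue |
        Format-Table Name, Enabled -AutoSize
}

# If the members lack VMQ but the team NIC has it enabled, turn it off there:
# Disable-NetAdapterVmq -Name (Get-NetLbfoTeam -Name "vTeam").TeamNics
```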

Culprit #3: Drivers and BIOS Settings

This isn’t all that far from culprit #2, really. Poor drivers are one of those things that keep us IT folk employed. But there’s a new option in the 2012 series: don’t install any manufacturer drivers at all. Just let the drivers built into Windows handle it. It may not work in every situation, but then again, it might. If the most recent manufacturer drivers don’t address your issue, see what uninstalling them altogether does for you.
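Before swapping or removing drivers, it helps to record what is installed now so you can compare before and after. A minimal sketch for Windows Server 2012 or later; the CSV path is only an example.

```powershell
# Capture the driver provider, version, and date for each network adapter.
$drivers = Get-NetAdapter |
    Sort-Object Name |
    Select-Object Name, InterfaceDescription, DriverProvider, DriverVersionString, DriverDate

$drivers | Format-Table -AutoSize

# Keep a copy for comparison after the driver change (example path).
$drivers | Export-Csv -Path "$env:TEMP\nic-drivers-before.csv" -NoTypeInformation
```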

The BIOS doesn’t always have a lot to do with networking performance, but disabling C1E does seem to have some positive effects, especially with Live Migration. Personally, I turn off all advanced power states on hypervisor systems. Also, other features, such as SR-IOV, may require specific BIOS settings. Your manufacturer will be the best source of answers on that.

Part 2

The next part of this series will provide guidance for troubleshooting a non-functional switch.


11 thoughts on "Troubleshooting the Hyper-V Virtual Switch – Part 1"

Hey Eric,

Looking around the web, I ran into your post. We are having performance issues running QuickBooks ENT 14 on a VM. It is not a supported setup, but I am willing to give this VMQ fix a try before migrating it to a standalone setup. One thing that set your post apart from other VMQ posts was the paragraph that began with “Now, I have the pleasure of reporting a nasty little oops!” We are also running low-end hardware: Realtek GBE NICs, and I do not see VMQ options anywhere on these NICs. What is the process for disabling VMQ on the “team” as you explained?

Thanks!

If you’re running Hyper-V in Windows with a GUI, it’s on the team adapter’s properties.

Either way, you can also set it in PowerShell (assumes you only have one team NIC):

Get-NetAdapter -Name (Get-NetLbfoTeam).TeamNics | Get-NetAdapterAdvancedProperty -DisplayName "Virtual Machine Queues" | Set-NetAdapterAdvancedProperty -DisplayValue "Disabled"

The previous PowerShell command does not work for translated versions of Windows Server.

Here is a command that should work on any language version of Windows Server:

Get-NetAdapter -Name (Get-NetLbfoTeam).TeamNics | Get-NetAdapterAdvancedProperty -RegistryKeyword '*VMQ' | Set-NetAdapterAdvancedProperty -RegistryValue "0"

Realtek really performs very poorly in Hyper-V. I spent weeks searching for the problem. I got rid of the Realtek adapters and installed Intel-based NICs. Voilà!

Some help needed with a virtual switch – would be much appreciated. (I’m a writer, not really a ‘tech’ guy, and I’m trying to get an old Win XP to work under Hyper-V.)

I’ve got the Win XP VM up and running, and can even import files via the CD, but have no way of getting any files out of the VM back to the host. (I want an *internal* virtual switch, since I have no need for an internet connection.)

I’ve got this far: my host machine (Win 10 Pro) shows, under ‘Network Connections’, a ‘vEthernet (fvg_VirtualSwitch)’, which I’d set up under Hyper-V, but it’s not working. The error message I get is:

“Windows sockets registry entries required for network connectivity are missing”

I’d be really grateful for a bit of help. (And quite happy to back it up with a bit of paypal support.)

Best,

Fred v.G.

Before you burn a lot of hours on networking, you can:

It will be faster to do it that way. If you want to make the virtual network work, I’ll need more information about what you did to set it up. I’m not entirely clear on where you’re getting the error. Did you install the integration services in the WinXP guest?