
As you saw in our earlier article that explained how Live Migration works and how you can get it going in 2012 and 2012 R2, it is a process that requires a great deal of cooperation between the source and target computers. It’s also a balancing act involving many security concerns. Failures within a cluster are uncommon, especially if the cluster has passed the validation wizard. Failures in Shared Nothing Live Migrations are more likely. Most issues are simple to isolate and troubleshoot.

General Troubleshooting

There are several known problem-causers that I can give you direct advice on. Some are less common. If you can’t find exactly what you’re looking for in this post, I can at least give you a starting point.

Migration-Related Event Log Entries

If you’re moving clustered virtual machines, the Cluster Events node in Failover Cluster Manager usually collects all the relevant events. If they’ve been reset or expired from that display, you can still use Event Viewer at these paths:

- Applications and Services Logs\Microsoft\Windows\Hyper-V-High-Availability\Admin

- Applications and Services Logs\Microsoft\Windows\Hyper-V-VMMS\Admin

The “Hyper-V-High-Availability” tree usually has the better messages, although it has a few nearly useless ones, such as event ID 21111, “Live migration of ‘Virtual Machine VMName’ failed.” Most Live Migration errors come with one of three statements:

- Migration operation for VMName failed

- Live Migration did not succeed at the source

- Live Migration did not succeed at the destination

These will usually, but not always, be accompanied by supporting text that further describes the problem. “Source” messages often mean that the problem is so bad and obvious that Hyper-V can’t even attempt to move the virtual machine. These usually have the most helpful accompanying text. “Destination” messages usually mean that either there is a configuration mismatch that prevents migration or the problem did not surface until the migration was either underway or nearly completed. You might find that these have no additional information or that what is given is not very helpful. In that case, specifically check for permissions issues and that the destination host isn’t having problems accessing the virtual machine’s storage.
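As a rough illustration of that triage, here is a minimal Python sketch. The function name and the hint wording are my own inventions for this example; it only matches the three common statements listed above and does not read a real event log.

```python
# Hypothetical triage helper. The hints paraphrase the guidance in this
# section; nothing here talks to Event Viewer or Hyper-V.
def triage_migration_message(message):
    """Map a Live Migration failure statement to a first troubleshooting step."""
    text = message.lower()
    if "did not succeed at the source" in text:
        return "source: read the accompanying text first; it usually names the blocker"
    if "did not succeed at the destination" in text:
        return ("destination: check for configuration mismatches, permissions, "
                "and storage access on the target host")
    if "migration operation for" in text and "failed" in text:
        return "generic: look at neighboring events for supporting detail"
    return "unrecognized: not one of the three common statements"


if __name__ == "__main__":
    print(triage_migration_message("Live Migration did not succeed at the destination"))
```

Paste the text of the event into the function and treat the answer as a starting point only. The supporting text in the event, when there is any, always outranks a canned hint.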

Inability to Create Symbolic Links

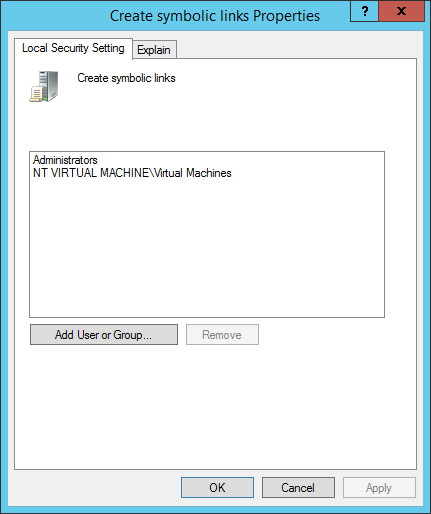

As we discussed for each virtual machine migration method in our explanatory article, part of the process is for the target host to create a symbolic link in C:\ProgramData\Microsoft\Windows\Hyper-V\Virtual Machines. This occurs under a special built-in credential named NT Virtual Machine\Virtual Machines, which has a “well-known” (read as: always the same) security identifier (SID) of S-1-5-83-0.

Some attempts to harden Hyper-V result in a domain policy that grants the Computer Configuration\Windows Settings\Security Settings\Local Policies\User Rights Assignment\Create symbolic links right only to the built-in Administrators group. Doing so will certainly cause Live Migrations to fail and can sometimes cause other virtual machine creation events to fail.

Your best option is to just not tinker with this branch of group policy. I haven’t ever even heard of an attack mitigated by trying to improve on the contents of this area. If you simply must override it from the domain, then add in an entry in your group policy for it. You can just type in the full name as shown in the first paragraph of this section.

Create Symbolic Link

Note: The “Log on as a service” right must also be assigned to the same account. Not having that right usually causes more severe problems than Live Migration issues, but it’s mentioned here for completeness.
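If you want to verify what a host actually ended up with, one approach is to export its security policy to text and look for the SID. The sketch below assumes text in the layout that a `secedit /export` produces under `[Privilege Rights]`, with principals written as `*SID` values; the sample text, the function names, and the choice to match on SID only are assumptions for this example. `SeCreateSymbolicLinkPrivilege` and `SeServiceLogonRight` are the internal names of the symbolic link right and the service logon right.

```python
VM_GROUP_SID = "S-1-5-83-0"  # NT Virtual Machine\Virtual Machines, per this section


def principals_for_right(inf_text, right):
    """Return the principals listed for one user right in exported policy text."""
    for line in inf_text.splitlines():
        name, sep, value = line.partition("=")
        if sep and name.strip() == right:
            # Entries look like "*S-1-5-32-544"; drop the leading asterisk.
            return [p.strip().lstrip("*") for p in value.split(",") if p.strip()]
    return []


def missing_rights(inf_text,
                   rights=("SeCreateSymbolicLinkPrivilege", "SeServiceLogonRight")):
    """List the rights that do NOT include the virtual machines SID."""
    return [r for r in rights
            if VM_GROUP_SID not in principals_for_right(inf_text, r)]


if __name__ == "__main__":
    # Made-up sample: a "hardened" policy that left the VM group off one right.
    sample = (
        "[Privilege Rights]\n"
        "SeCreateSymbolicLinkPrivilege = *S-1-5-32-544\n"
        "SeServiceLogonRight = *S-1-5-80-0,*S-1-5-83-0\n"
    )
    print(missing_rights(sample))
```

A right that is absent from the export is reported as missing too, because an unlisted right has no principals in this model. If your export lists the group by name instead of by SID, this sketch will not recognize it.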

Inability to Perform Actions on Remote Computers

Live Migration and Shared Nothing Live Migration invariably involve two computers at minimum. If you’re sitting at your personal computer with Failover Cluster Manager or PowerShell open, telling Host A to migrate a virtual machine to Host B, that’s three computers involved. Most Access Denied errors during Live Migrations involve this multi-computer problem.

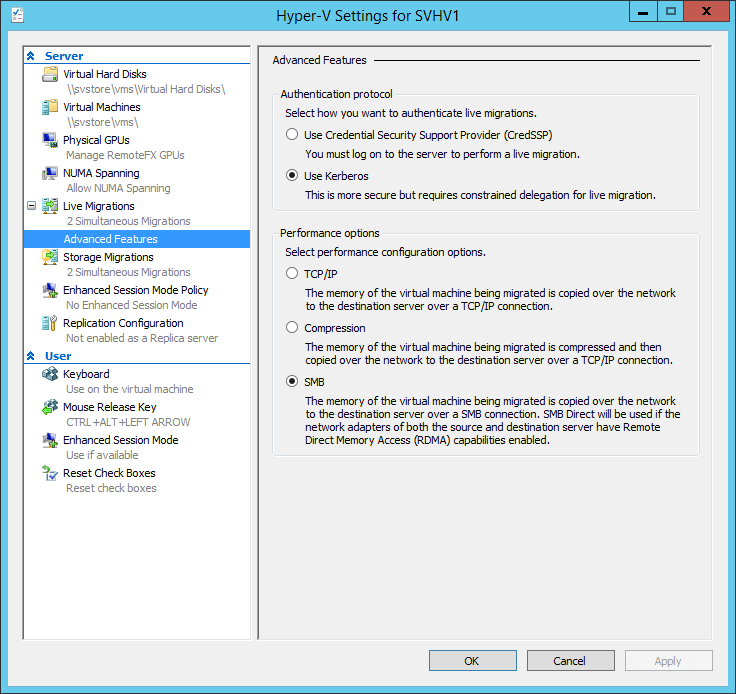

Solution 1: CredSSP

CredSSP is kind of a terrible thing. It allows one computer to store the credentials for a user or computer and then use them on a second computer. It’s sort of like cached credentials, only transmitted over the network. It’s not overly insecure, but it’s also not something that security officers are overly fond of. You can set this option in the Authentication protocol section, under Advanced Features, in the Live Migrations area of the Hyper-V Settings dialog.

Live Migration Advanced Settings

CredSSP has the following cons:

- Not as secure as Kerberos

- Only works when logged in directly to the source host

CredSSP has only one pro: You don’t have to configure delegation. My preference? Configure delegation.

Solution 2: Delegation

Delegation can be a bit of a pain to configure, but in the long-term it is worth it. Delegation allows one computer to pass on Kerberos tickets to other computers. It doesn’t have CredSSP’s hop limit; computers can continue passing credentials on to any computer that they’re allowed to delegate to as far as is necessary.

Delegation has the following cons:

- It can be tedious to configure.

- If not done thoughtfully, it can needlessly expose your environment to security risks.

Delegation’s major pro is that as long as you can successfully authenticate to one host that can delegate, you can use it to Live Migrate to or from any host it has delegation authority for.

As for the “thoughtfulness”, the first step is to use Constrained Delegation. It is possible to allow a computer to pass on credentials for any purpose, but it’s unnecessary.

Delegation is done using Active Directory Users and Computers or PowerShell. I have written an article that explains both ways and includes a full PowerShell script to make this much easier for multiple machines.

Be aware that delegation is not necessary for Quick or Live Migrations between nodes of the same cluster.
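To get a feel for how much there is to configure, the following Python sketch only builds the list of entries each host would need; it does not touch Active Directory. It assumes the two service types normally used for Live Migration delegation, `Microsoft Virtual System Migration Service` for the virtual machine itself and `cifs` for storage reached over SMB, and it assumes each entry is wanted in both short-name and fully qualified form. The host names, the domain, and the function name are hypothetical.

```python
from itertools import permutations

# Service types assumed for this sketch.
SERVICES = ("cifs", "Microsoft Virtual System Migration Service")


def delegation_plan(hosts, domain):
    """Map each host to the 'service/target' entries it may delegate to."""
    plan = {}
    for source, target in permutations(hosts, 2):
        entries = plan.setdefault(source, [])
        for service in SERVICES:
            entries.append(f"{service}/{target}")           # short name
            entries.append(f"{service}/{target}.{domain}")  # fully qualified
    return plan


if __name__ == "__main__":
    for host, entries in delegation_plan(["HV01", "HV02", "HV03"], "example.local").items():
        print(host, len(entries), "entries")
```

Every host needs entries for every other host, so the count grows quickly as you add hosts. That growth is the tedium mentioned above, and it is why a script beats clicking through dialogs.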

Mismatched Physical CPUs

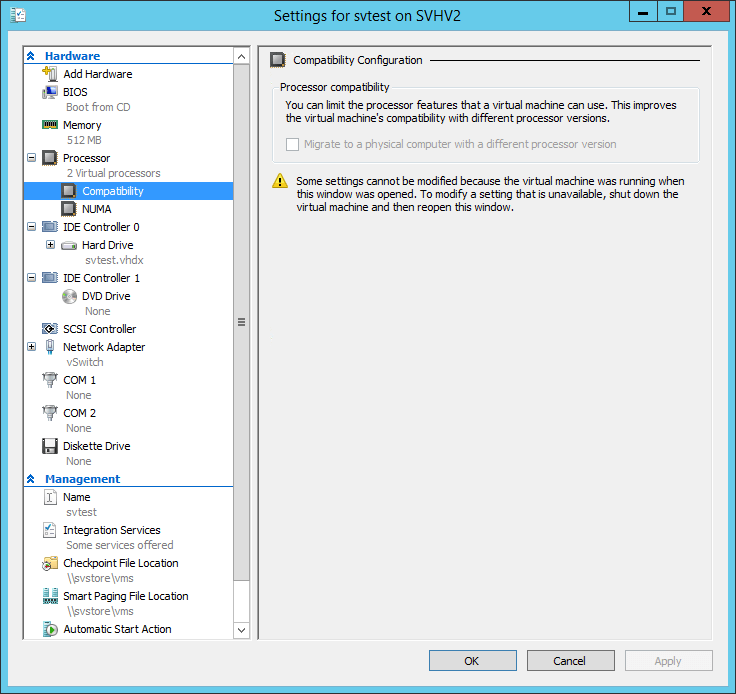

Since you’re taking active threads and relocating them to a CPU in another computer, it seems only reasonable that the target CPU must have the same instruction set as the source. If it doesn’t, the migration will fail. There are hard and soft versions of this story. If the CPUs are from different manufacturers, that’s a hard stop. Live Migration is not possible. If the CPUs are from the same manufacturer, that could be a soft problem. Use CPU compatibility mode:

CPU Compatibility

As shown in the screenshot, the virtual machine needs to be turned off to change this setting.

A very common question is: What are the effects of CPU compatibility mode? For almost every standard server usage, the answer is: none. Every CPU from a manufacturer has a common core set of available instructions and usually a few common extensions. Then, they have extra function sets. Applications can query the CPU for its CPUID information, which contains information about its available function sets. When the compatibility mode box is checked, all of those extra sets are hidden; the virtual machine and its applications can only see the common instruction sets. These extensions are usually related to graphics processing and are almost never used by any server software. So, VDI installations might have trouble when enabling this setting, but virtualized server environments usually will not.

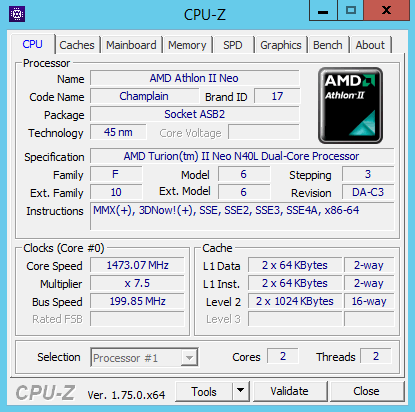

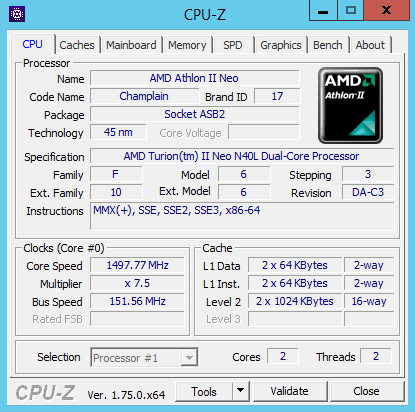

This CPU-Z screenshot was taken inside a virtual machine with compatibility mode disabled:

CPU Compatibility Off

The following screenshot shows the same virtual machine with no change made except the enabling of compatibility mode:

CPU Compatibility On

Notice how many things are the same and what is missing from the Instructions section.
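The hard and soft versions of the CPU story can be modeled with plain set arithmetic. This Python sketch is a toy model under stated assumptions: the vendor and feature names are placeholders rather than real CPUID flags, and `baseline` stands in for whatever common set compatibility mode actually exposes, which this example does not attempt to reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostCpu:
    vendor: str
    features: frozenset  # placeholder feature names, not real CPUID flags


def migration_verdict(source, target, compatibility_mode, baseline):
    """Decide whether a VM on 'source' could live migrate to 'target'."""
    if source.vendor != target.vendor:
        return "blocked: different CPU manufacturers"  # the hard stop
    # With compatibility mode on, the guest only sees the common baseline.
    visible = source.features & baseline if compatibility_mode else source.features
    missing = visible - target.features
    if missing:
        return "blocked: target lacks " + ", ".join(sorted(missing))
    return "ok"


if __name__ == "__main__":
    baseline = frozenset({"base"})
    older = HostCpu("VendorA", frozenset({"base", "ext-a"}))
    newer = HostCpu("VendorA", frozenset({"base", "ext-a", "ext-b"}))
    print(migration_verdict(newer, older, False, baseline))
    print(migration_verdict(newer, older, True, baseline))
```

The point of the model is that compatibility mode shrinks what the guest can see until both hosts can provide it. It can never help with a manufacturer mismatch.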

Insufficient Resources

If the target system cannot satisfy the memory or disk space requirements of the virtual machine, any migration type will fail. These errors are usually very specific about what isn’t available.

Virtual Switch Name Mismatch

The virtual machine must be able to connect each of its virtual adapters to a switch on the target host that has the same name as the switch it uses on the source. Furthermore, a clustered virtual machine cannot move if it is using an internal or private virtual switch, even if the target host has a switch with the same name.

If it’s a simple problem with a name mismatch, you can use Compare-VM to overcome the problem while still performing a Live Migration. The basic process is to use Compare-VM to generate a report, then pass that report to Move-VM. Luke Orellan has written an article explaining the basics of using Compare-VM. If you need to make other changes, such as where the files are stored, notice that Compare-VM has all of those parameters. If you use a report from Compare-VM with Move-VM, you cannot supply any other parameters to Move-VM.
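Before reaching for Compare-VM, it can help to see the two switch rules written out. The Python sketch below works on names you have already collected by hand or by script; it does not query Hyper-V. The function name, the data shapes, and the exact-match comparison are assumptions for this example.

```python
def switch_problems(vm_switches, target_switches, clustered=False):
    """Check a VM's switches against the switch names on a target host.

    vm_switches: dict of {switch name: switch type} used by the VM's adapters.
    target_switches: set of switch names that exist on the target host.
    """
    problems = []
    for name, kind in vm_switches.items():
        if name not in target_switches:
            problems.append(f"no switch named '{name}' on the target")
        # For clustered VMs, a matching name does not rescue these types.
        if clustered and kind.lower() in ("internal", "private"):
            problems.append(f"clustered VM uses {kind.lower()} switch '{name}'")
    return problems


if __name__ == "__main__":
    vm = {"vSwitch-Prod": "External", "vSwitch-Lab": "Private"}
    print(switch_problems(vm, {"vSwitch-Prod", "vSwitch-Lab"}, clustered=True))
```

An empty list means neither rule was tripped. It says nothing about whether the matching switches are connected to the same networks, which is a separate thing to verify.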

Live Migration Error 0x8007274C

This is a very common error in Live Migration that always traces to network problems. If the source and destination hosts are in the same cluster, start by running the Cluster Validation Wizard, only specifying the network tests. That might tell you right away what the problem is. Other possibilities:

- Broken or not completely attached cables

- Physical adapter failures

- Physical switch failures

- Switches with different configurations

- Teams with different configurations

- VMQ errors

- Jumbo frame misconfiguration

If the problem is intermittent, check teaming configurations first; one pathway might be clear while another has a problem.
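The code itself hints at why this is a network problem. An HRESULT packs a failure bit, a facility number, and a 16-bit code into one value; facility 7 means the code is an ordinary Win32 error, and 0x274C is 10060, the Winsock connection timeout. The Python sketch below does that unpacking. The function name and the tiny lookup table are my own and cover only two codes.

```python
FACILITY_WIN32 = 7

# Deliberately tiny lookup table; extend it as you meet other codes.
KNOWN_WIN32_CODES = {
    32: "ERROR_SHARING_VIOLATION: the file is being used by another process",
    10060: "WSAETIMEDOUT: the connection attempt timed out",
}


def decode_hresult(value):
    """Split an HRESULT into its failure bit, facility, and error code."""
    failed = bool((value >> 31) & 1)   # top bit set means failure
    facility = (value >> 16) & 0x7FF   # 11-bit facility field
    code = value & 0xFFFF              # low 16 bits
    meaning = None
    if facility == FACILITY_WIN32:
        meaning = KNOWN_WIN32_CODES.get(code)
    return {"failed": failed, "facility": facility, "code": code, "meaning": meaning}


if __name__ == "__main__":
    print(decode_hresult(0x8007274C))
```

A timeout means the destination never answered, which is why every item in the list above is something that sits between the two hosts.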

Storage Connectivity Problems

A maddening cause for some “Live Migration did not succeed at the destination” messages is a problem with storage connectivity. These aren’t always obvious, because everything might appear to be in order. Do any independent testing that you can. Specifically:

- If the virtual machine is placed on SMB 3 storage, double-check that the target host has Full Control on the SMB 3 share and the backing NTFS locations. If possible, remove and re-add the host.

- If the virtual machine is placed on iSCSI or remote Fibre Channel storage, double-check that it can work with files in that location. iSCSI connections sometimes fail silently. Try disconnecting and reconnecting to the target LUN. A reboot might be required.
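For the “work with files” test, a small probe that creates, writes, reads back, and deletes a scratch file is often enough to expose a silently failed connection. This is a minimal Python sketch; the function name and the `lm-probe-` prefix are arbitrary. One important caveat: it runs under your own account, so success does not prove that the host’s computer account has the permissions Hyper-V needs.

```python
import os
import tempfile


def can_work_with_files(directory):
    """Create, write, read back, and delete a scratch file in 'directory'.

    Returns a (success, detail) tuple.
    """
    try:
        fd, path = tempfile.mkstemp(prefix="lm-probe-", dir=directory)
    except OSError as err:
        return False, f"create failed: {err}"
    try:
        with os.fdopen(fd, "w+b") as handle:
            handle.write(b"probe")
            handle.flush()
            handle.seek(0)
            if handle.read() != b"probe":
                return False, "read-back mismatch"
    except OSError as err:
        return False, f"write/read failed: {err}"
    finally:
        # Clean up the scratch file whether or not the probe succeeded.
        try:
            os.remove(path)
        except OSError:
            pass
    return True, "ok"
```

Point it at the folder that holds the virtual machine’s files as seen from the target host, for example a path on the SMB share or on the volume backed by the LUN. A failure here gives you a concrete operating system error to chase instead of a vague migration message.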


56 thoughts on "Your definitive guide to Troubleshoot Hyper-V Live Migration"

Hey Eric,

We have a multi node Hyper V Cluster that has recently developed an issue with intermittent failure of live migrations.

We noticed this when one of our CAU runs failed because it could not place the Hosts into maintenance mode or successfully drain all the roles from them.

Scenario:

Place any node into Maintenance mode/drain roles.

Most VM’s will drain and live migrate across onto other nodes. Randomly one or a few will refuse to move (it always varies in regards to the VM and which node it is moving to or from). The live migration ends with a failure generating event ID’s 21502, 22038, 21111, 21024. If you run the process again (drain roles) it will migrate the VM’s or if you manually live migrate them they will move just fine. Manually live migrating a VM can result in the same intermittent error but rerunning the process will succeed after one or two times or just waiting for a couple minutes.

This occurs on all Nodes in the cluster and can occur with seemingly any VM in the private cloud.

Pertinent content of the event ID’s is:

Event 21502

Live migration of ‘VM’ failed.

Virtual machine migration operation for ‘VM’ failed at migration source ‘NodeName’. (Virtual machine ID xxx)

Failed to send data for a Virtual Machine migration: The process cannot access the file because it is being used by another process. (0x80070020).

Event 22038

Failed to send data for a Virtual Machine migration: The process cannot access the file because it is being used by another process. (0x80070020).

According to this it would appear that something is locking the files or they are not transferring permissions properly, however all access to the back end SOFS is uniform across all the Nodes and the failure is intermittent rather than consistently happening on one Node.

Hmm, I think if that were happening to me that I’d probably just skip straight to getting Microsoft PSS on it. Let them gather some trace logs and see where the files are getting locked.

If you want to try it yourself, you might use Process Monitor to watch the specific files, but when you’re talking about a Hyper-V cluster over a SOFS, that is a lot of moving parts.

Hi Eric,

Firstly, thank you so much for all the great stuff about Hyper-V.

My customer too had a similar situation these days; the VM migrations from one node to the other fails intermittently.

When I had a remote session, I could see that he could migrate some 6 odd VMs to another node, but eventually rest of the VMs fail to migrate.

I have very less time to find out what could be the culprit. I need to provide the root-cause to my customer by EOD today at any cost. I’ve checked the “Hyper-V HA Admin” & “Hyper-V VMMS Admin” logs but to no avail.

Please suggest where else should I look into? Perhaps you’ve seen many such instances till date.

Sorry I didn’t get to this quickly. When things are that serious, I recommend calling product support services. It will cost you, but their tools can pull all sorts of detailed information that might help.

I would check the “Cluster Events” node in Failover Cluster Manager first.

in my case, we realized that some VMs didn’t have High availability configured.

Great guide! Helped me learn so much. Also – very well written.

Hi Eric,

I have this issue: in my environment, I can’t migrate just one VM to one server. The machines are Windows 10 and the servers are 2019. What needs to be checked?

Well, I kind of wrote an entire article on what needs to be checked. It’s right up there. Maybe start with that?

I had a recurring error in the live migration process, but after a few hours in PowerShell and on the Internet, I finally realized it was as simple as a mounted ISO posing as a DVD in two of my VMs.

It made my migrations fail with error 21024. At first I thought I had solved it, since most forum posts about error 21024 told me that it was a network connectivity issue. In fact, I hadn’t renamed the virtual switch on my second host, so I thought I had solved it with that.

Unfortunately the same error came up again and again. Telling me that the virtual machine was in the wrong state.

As I said I finally found it to be a mounted ISO.

NOTE: For live migration to work with a mounted ISO that same ISO file needs to exist on the target host.

Thank you for a great article.