
Symptom: You attempt to Live Migrate a Hyper-V virtual machine to a host that has the same CPU as the source, but Hyper-V complains about incompatibilities between the two CPUs. Additionally, Live Migration between these two hosts likely worked in the past.

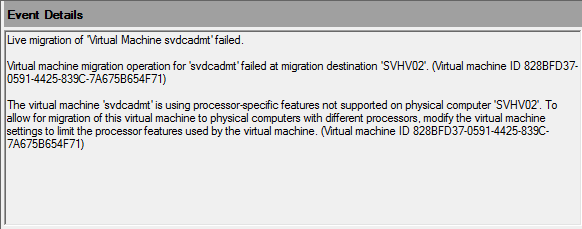

The event ID is 21502. The full text of the error message reads:

“Live migration of ‘Virtual Machine VMName‘ failed.

Virtual machine migration operation for ‘VMNAME‘ failed at migration destination ‘DESTINATION_HOST‘. (Virtual machine ID VMID)

The virtual machine ‘VMNAME‘ is using processor-specific features not supported on physical computer ‘DESTINATION_HOST‘. To allow for the migration of this virtual machine to physical computers with different processors, modify the virtual machine settings to limit the processor features used by the virtual machine. (Virtual machine ID VMID)”
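If you would rather pull this event from PowerShell than from Event Viewer, the following is a minimal sketch. It assumes that the event lands in the Microsoft-Windows-Hyper-V-VMMS-Admin log on the host where you run it; on clustered hosts it may also appear in other Hyper-V logs, so treat the log name as something to verify in your environment.

```powershell
# Assumption: event 21502 is written to the Hyper-V VMMS admin log on this host.
$filter = @{
    LogName = 'Microsoft-Windows-Hyper-V-VMMS-Admin'
    Id      = 21502
}

# Show the five most recent matching events with their full message text.
# SilentlyContinue keeps the command quiet when no matching events exist.
Get-WinEvent -FilterHashtable $filter -MaxEvents 5 -ErrorAction SilentlyContinue |
    Select-Object -Property TimeCreated, MachineName, Message |
    Format-List
```

Running it on both the source and the destination host is the safest way to make sure you see every part of the message.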

Why Live Migration Might Fail Across Hosts with the Same CPU

Ordinarily, this problem surfaces when hosts use CPUs that expose different feature sets — just like the error message states. You can use a tool such as CPU-Z to identify those. We have an article that talks about the effect of CPU feature differences on Hyper-V.

This discussion covers only the cases where the CPUs expose the same feature set: their identifiers reveal the same family, model, stepping, and revision numbers. And yet, Hyper-V says that they need compatibility mode.

Cause 1: Spectre Mitigations

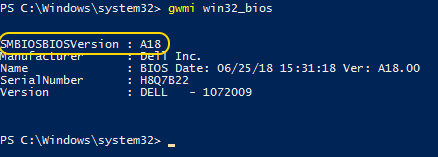

The Spectre mitigations make enough of a change to prevent Live Migrations, but that might not be obvious to anyone who doesn’t follow BIOS update notes. To see if that might be affecting you, check the BIOS update level on the hosts. You can do that quickly by asking PowerShell to check WMI with Get-WmiObject -ClassName Win32_BIOS, Get-CimInstance -ClassName Win32_BIOS, or, at its simplest, gwmi win32_bios. Compare the reported BIOS version on each host.

A difference in BIOS versions might tell the entire story if you look at their release notes. When updates were released to address the first wave of Spectre-class CPU vulnerabilities, they included microcode that altered the way that CPUs process instructions. So, the CPUs’ feature sets didn’t change per se, but their functionality did.
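To compare several hosts in one pass, a small helper can group the Win32_BIOS records by version string. This is only a sketch: Get-BiosVersionGroup is a hypothetical function name, and HV01 and HV02 in the commented usage are placeholder host names.

```powershell
function Get-BiosVersionGroup {
    # Hypothetical helper: groups BIOS records by their version string.
    # More than one group in the output means the hosts do not match.
    param(
        [Parameter(Mandatory)]
        [object[]]$BiosRecord
    )
    $BiosRecord | Group-Object -Property SMBIOSBIOSVersion
}

# Example usage (HV01 and HV02 are placeholder host names):
# $bios = Get-CimInstance -ClassName Win32_BIOS -ComputerName 'HV01', 'HV02'
# Get-BiosVersionGroup -BiosRecord $bios |
#     Select-Object Name, Count, @{ n = 'Hosts'; e = { $_.Group.PSComputerName -join ', ' } }
```

A single group suggests that the BIOS levels match; it does not prove that the update was fully applied, for the reasons covered next.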

Spectre Updates to BIOS Don’t Always Cause Live Migration Failures

You may have had a few systems that received the hardware updates without losing the ability to Live Migrate. These updates have a lot of moving parts:

- These updates require a cold boot of the physical system to fully apply. Most modern systems from larger manufacturers have the ability to self-cold boot after a BIOS update, but not all do. It is possible that you have a partially-applied update waiting for a cold boot.

- These updates require the host operating system to be fully patched. Your host(s) might be awaiting installation or post-patch reboot.

- These updates require the guests to be cold-booted from a patched host. Some clusters have been running so well for so long that we have no idea when any given guest was last cold booted. If it wasn’t from a patched host, then it won’t have the mitigations and won’t care if it moves to an unpatched host. Such guests will also happily move back to an unpatched host.

- You may have registry settings that block the mitigations, which would have a side effect of preventing them from interfering with Live Migration.
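To see whether any such registry settings exist on a host, a quick check like the one below can help. It is a sketch that reads the value names Microsoft documented for the speculative-execution mitigations; it only reports what is set and makes no attempt to interpret the numbers, so check them against Microsoft's current guidance.

```powershell
$memMgmt = 'HKLM:\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management'
$virt    = 'HKLM:\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Virtualization'

# Registry values that influence whether the mitigations are applied.
$checks = @(
    @{ Path = $memMgmt; Name = 'FeatureSettingsOverride' },
    @{ Path = $memMgmt; Name = 'FeatureSettingsOverrideMask' },
    @{ Path = $virt;    Name = 'MinVmVersionForCpuBasedMitigations' }
)

foreach ($check in $checks) {
    # SilentlyContinue: a missing value is an expected outcome, not an error.
    $item = Get-ItemProperty -Path $check.Path -Name $check.Name -ErrorAction SilentlyContinue
    [pscustomobject]@{
        Name  = $check.Name
        Value = if ($item) { $item.($check.Name) } else { '(not set)' }
    }
}
```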

I have found only one “foolproof” combination that always prevents Live Migration:

- Source host fully patched — BIOS and Windows

- Virtual machine operating system fully patched

- Registry settings allow mitigation for the host, guest, and virtual machine

- The guest was cold booted from the source host

- Destination host is missing at least the proper BIOS update

Because Live Migration will work more often than not, it’s not easy to predict when a Live Migration will succeed across mismatched hosts.


Correcting a Live Migration Block Caused by Spectre

Your first, best choice is to bring all hosts, host operating systems, and guest operating systems up to date and ensure that no registry settings impede their application. Performance and downtime concerns are real, of course, but not as great as the threat of a compromise. Furthermore, if you’re in the position where this article applies to you, then you already have at least one host up to date. Might as well put it to use.

You have a number of paths to solve this problem. I chose the route that would result in the fewest disruptions. To that end:

- I patched all of the guests but did not allow them to reboot

- I brought one host up to current BIOS and patch levels

- I filled it up with all the VMs that it could take; in two node clusters, that should mean all of the guests

- I performed a full shut down and startup of those VMs; that allowed them to apply the patch and utilize the host’s update status in one boot cycle. It also locked them to that node, so watch out for that.

- I moved through the remaining hosts in the cluster. In larger clusters, that also meant opportunistically cold booting additional VMs

That way, each virtual machine was cold booted only one time and I did not run into any Live Migration failures. Make certain that you check your work at every point before moving on — there is much work to be done here and a missed step will likely result in additional reboots.

Note: Enabling the CPU compatibility feature will probably not help you overcome the Live Migration problem, but it might. It does not appear to affect everyone identically, likely due to fundamental differences between processor generations.

Automating the Spectre Mitigation Rollout

I opted not to script this process. The normal patching automation processes cover reboots, not cold boots, and working up a foolproof script to properly address everything that might occur did not seem worth the effort to me. These are disruptive patches anyway, so I wanted to be hands-on where possible. If patch processes like this become a regular event (and it seems that they might), I may rethink that. If I had dozens or more systems to cope with, I would have scripted it. I was lucky that a human-driven response worked well enough for my environment. However, I did leverage bulk tools that I had available.

- I used GPOs to change my patching behavior to prevent reboots

- I used GPOs to selectively filter mitigation application until I was ready

- To easily cold boot all VMs on a host, try Get-VM | Stop-VM -Passthru | Start-VM. Watch for any VMs that don’t want to stop — I deliberately chose not to force them.

- I could have used Cluster Aware Updating to roll out my BIOS patches. I chose to manually stage the BIOS updates in this case and then allowed the planned patch reboot to handle the final application.
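If you want a record of which VMs refused to stop, the cold boot one-liner from the list above can be expanded into a loop. This is a sketch rather than the process I actually ran. It works against the local host, never uses -Force or -TurnOff, and may still prompt for confirmation in an interactive session if a guest needs attention before it can shut down.

```powershell
# Cold boot every running VM on the local host, one at a time, without forcing.
foreach ($vm in (Get-VM | Where-Object State -eq 'Running')) {
    try {
        # Clean guest shutdown; a failure here jumps to the catch block.
        Stop-VM -VM $vm -ErrorAction Stop
        Start-VM -VM $vm -ErrorAction Stop
        Write-Output "Cold booted $($vm.Name)"
    }
    catch {
        # Leave the VM alone and report it so that it can be handled manually.
        Write-Warning "Skipped $($vm.Name): $($_.Exception.Message)"
    }
}
```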

Overall, I did little myself other than manually time the guest power cycles.

Cause 2: Hypervisor Version Mismatch

I like to play with Windows Server Insider builds on one of my lab hosts. I keep its cluster partner at 2016 so that I can study and write articles. Somewhere along the way, I started getting the CPU feature set errors trying to move between them. Enabling the CPU compatibility feature does overcome the block in this case. Hopefully, no one is using Windows Server Insider builds in production, much less mixing them with 2016 hosts in a cluster.

It would stand to reason that this mismatch block will be corrected before 2019 goes RTM. If not, Cluster Rolling Upgrade won’t function with 2016 and 2019 hosts.

Correcting a Live Migration Block Caused by Mixed Host Versions

I hope that if you got yourself into this situation, you know how to get out of it. In my lab, I usually shut the VMs down and move them manually. They are lab systems just like the cluster, so that’s harmless. For the ones that I prefer to keep online, I have CPU compatibility mode enabled.
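Processor compatibility mode can be checked and enabled from PowerShell with the Hyper-V module. A minimal sketch follows; VM01 is a placeholder name, and on Hyper-V 2016 the VM must be off before the setting can be changed.

```powershell
# 'VM01' is a hypothetical VM name; substitute your own.
$vmName = 'VM01'

# Report the current setting for every VM on the local host.
Get-VM | Get-VMProcessor |
    Select-Object -Property VMName, CompatibilityForMigrationEnabled

# Only attempt the change while the VM is off.
if ((Get-VM -Name $vmName).State -eq 'Off') {
    Set-VMProcessor -VMName $vmName -CompatibilityForMigrationEnabled $true
}
else {
    Write-Warning "$vmName must be shut down before changing processor compatibility."
}
```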

Do you have a Hyper-V Problem To Tackle?

These common Hyper-V troubleshooting posts have proved quite popular with you guys, but if you think there is something I’ve missed so far and should be covering let me know in the comments below and it could be the topic for my next blog post! Thanks for reading!


8 thoughts on "What To Do When Live Migration Fails On Hosts With The Same CPU"

Thanks a lot. It really helped me.

We’re seeing this error with E5-2340 and E5-2340 v2 on Dell servers. Firmware and software is completely identical. One server is using BIOS boot and one is using UEFI. Would that cause issues? This seems nuts.

It’s not that nuts. I’ve seen it block on different versions of the same chip before.

Love the blog thanks