
It’s no secret that I’m a vocal advocate for a solid, regular, well-tested backup strategy. It’s why I work so well with my friends at Altaro. So, it might surprise you that I do not back up any of my Hyper-V management operating systems. I don’t recommend that you back up yours, either. If you are backing it up, and the proposition to cease that activity worries you, then let’s take some time to examine why that is and see if there’s a better approach. I’ll admit that my position is not without controversy. It’s worth investigating the situation.

Before we even open this discussion, I want to make it very clear that I am only referring to the management operating system environment itself, which would include Hyper-V settings. In no way am I advocating that you avoid backing up your virtual machines.

Challenge Your Own Assumptions

Any time we’re dealing with a controversy, I think the best thing to do is decide just how certain we are that we’re on the correct side. So, I’m going to start this discussion by examining the reasons that people back up their management operating systems and testing whether those reasons hold up.

Must We Back Up Everything?

Through my years of service in this field, I have developed a “back up everything” attitude. Things that seemed pointless when they were available can become invaluable when they’re missing (as the sage Joni Mitchell tells us, “you don’t know what you’ve got ’til it’s gone”). When I first started working with hypervisors, I intended to bring that attitude and practice with me. However, the hypervisor and the backup products that I was using at that time (not Hyper-V and not Altaro) could not capture backups of the hypervisor. So, I worked with what I had and came up with a mitigation strategy that did not require a hypervisor backup for recovery. It’s coming up on a decade since I switched to that technique, and I have not yet regretted it.

Should Type 1 and Type 2 Hypervisors be Treated the Same Way?

I had been working with type 1 hypervisors in the datacenter for years before I even saw a type 2 hypervisor in action. The first time that I ever heard of one, it was from other Microsoft Certified Trainers raving about all the time that VMware Workstation was saving them. Back then, VMware offered a free copy of the product to MCTs. So, I filled out all the required paperwork and sent it in to see what all of the fuss was about… and never heard back from VMware. I shrugged and went on with my life. Over the years, I learned of other products, such as Virtual PC, but the proposition held very little interest for me. So, just by that quirk of fate, I never really used any virtualization products on the desktop until I had developed hypervisor habits from the server level.

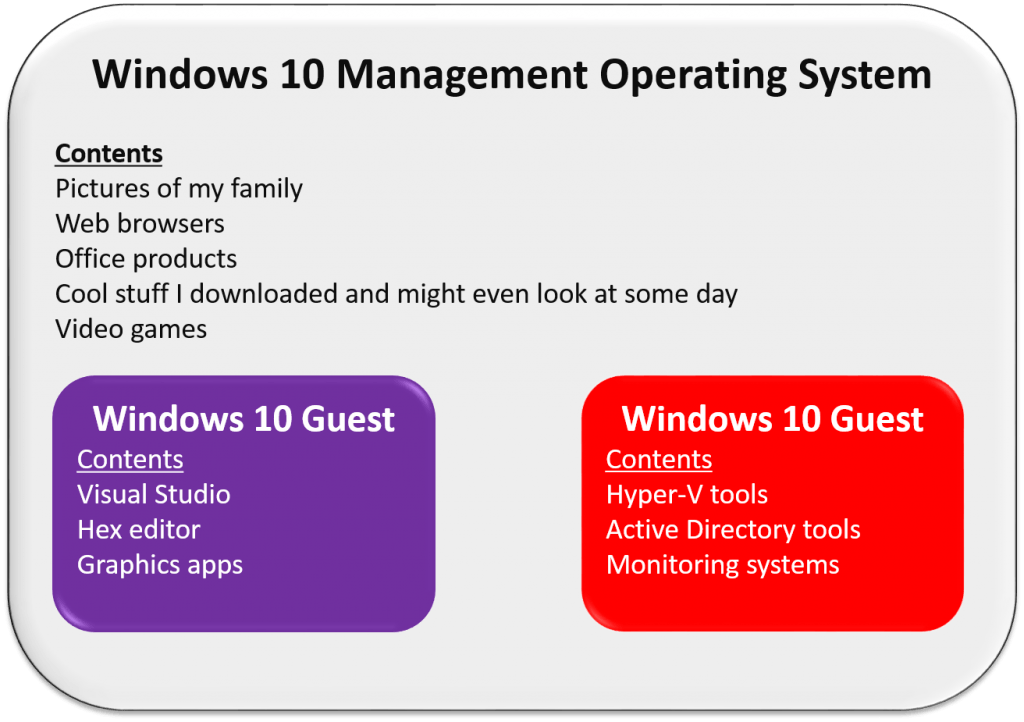

It seems to me that many people have probably gained their experience with hypervisors in the opposite direction. Desktop type 2s first, then server type 1s. It also occurs to me that someone who started with type 2 hypervisors probably has a completely different mindset than I do. In order to examine that, I took a look at my current home system, which does use a desktop hypervisor. It looks like this:

Desktop Virtualization Environment

Whether my desktop hypervisor is type 1 or type 2 is really irrelevant. I’d also like to set aside the fact that current security best practice is not to concentrate all of the management functions in one privileged virtual machine. The big point here is that my management operating system contains some really important stuff that I would be very sad to lose. At least one of my virtual machines is important as well. I’ve got a lot of scripts and code in one of them that I’d like to hold on to. So, I back up the whole box.

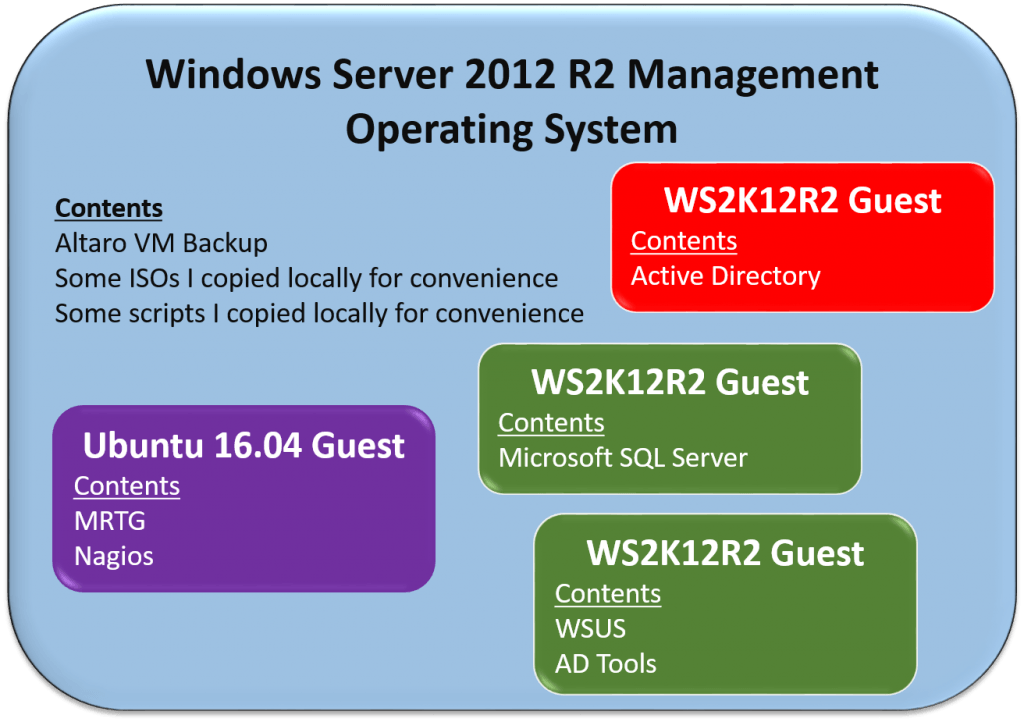

Now, let’s look at one of my Hyper-V hosts:

Host Server Environment

Do you see the difference? My server’s management operating system doesn’t have any data in it that doesn’t exist elsewhere.

So, let’s draw an important distinction: a Hyper-V server machine should not have any important data in the management operating system. I used a little “s” on server for a reason: follow the same practice whether it’s Hyper-V Server or Windows Server + Hyper-V. This statement is a truism and holds its own even when we’re not talking about backup and recovery. It is simply a best practice that happens to impact our options for backup and recovery.

Is a Bare Metal Restore Faster than Rebuilding the Management Operating System from Scratch?

The common wisdom for your average Windows system is that a bare metal restore returns you to full operating mode much more quickly than rebuilding that system from installation media and restoring all of its applications and settings from backup. Is that wisdom applicable to Hyper-V, though? Not really. You have the Hyper-V role and your backup application, maybe an antivirus agent, and that’s it (or should be). The bulk of the data is in the virtual machines, which don’t care if you retrieve them from bare metal restore or using a VM-aware method.

The truth of that will depend on how you’re performing your backup. If you have 100% local virtual machines and you are using a single method to back up the entire host (management operating system, guests, and all), then the full backup/bare metal restore method is faster. If there is any division in your process, such as one backup and restore method for the management operating system and another for the guests, or if the virtual machines are not stored locally, then bare metal restore is not faster.
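The rule of thumb above boils down to two conditions that must both hold. The article itself contains no code, so this is only a minimal Python sketch; the function name and parameters are hypothetical, and it encodes the decision, not actual restore timings.

```python
def bare_metal_restore_is_faster(all_vms_local: bool, single_backup_method: bool) -> bool:
    """True only when a full-host bare metal restore should beat a rebuild.

    Both conditions from the text must hold: every virtual machine lives on
    local storage, AND one backup method captures the whole host (management
    operating system and guests together). Any split means rebuilding the
    management OS and restoring the VMs separately is at least as fast.
    """
    return all_vms_local and single_backup_method


# Hypothetical scenarios for illustration.
scenarios = {
    "local VMs, one whole-host backup": (True, True),
    "local VMs, separate host and guest backups": (True, False),
    "VMs on SMB/iSCSI storage, one backup tool": (False, True),
}
for name, (local, single) in scenarios.items():
    faster = bare_metal_restore_is_faster(local, single)
    print(f"{name}: {'bare metal restore' if faster else 'rebuild + VM restore'}")
```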

Challenge Common Practice

Don’t do things just because it’s what other people do. We all start off doing that because anyone that’s done it even once has more experience than someone that’s just started. I switched from my habit of backing up everything to leaving the hypervisor out of backup because I was forced to and it worked out well for me. You can use my experience (and the experiences of others) to make an informed decision.

Challenge Your Own Practice

If you’ve been backing up your management operating system for a while, I wouldn’t be surprised if you’re reluctant to stop. No one likes to alter their routines, especially the ones that work. So, what I want you to do is think less about changing your routine and more about what brought you to that routine and whether it results in the best administrative quality of life. There might be something that you should be changing anyway.

Are you doing any of these things?

- Running another server role in the management operating system, such as Active Directory Domain Services or Routing and Remote Access

- Using the management operating system as a file server

- Storing guest backups in the management operating system’s file structure

- Relying on backup to protect your management server’s configuration

You should not be doing any of these things. Every single item on that list is bad practice.

Running any role besides Hyper-V in the management operating system causes you to forfeit one of your guest virtualization rights. That role will also suffer in performance because the management operating system gets last rights to resources. Some roles, such as Active Directory Domain Services, are known to cause problems when installed alongside Hyper-V. No role is officially supported in the management operating system. If Hyper-V is installed, then you have immediate access to a hypervisor environment where you can place any such other roles inside a guest. So, there is no valid reason to run a non-Hyper-V role alongside Hyper-V.

If you’re using the management operating system as a file server, then everything I said in the previous paragraph applies to you. I only separated it out because some people are convinced it’s somehow different. It’s not. “File server” is a server role, as indicated by the word “server”. Even if the shared files are only used by the guests of that host, it’s still a file server, it is still competing with guests, it is still best suited inside a virtual machine, and it is still a violation of your license agreement. You’re doing it wrong. Create a file server guest, move all the files there, and back up the guest.

I truly hope that no one is actually storing their guest backups within the management operating system’s file structure. I hesitate to even legitimize that in any way by calling it a “backup”. It’s like copying the front side of a sheet of paper to the back side of that same sheet of paper and feeling like you’ve somehow protected the information on it.

The fourth item, using backup to protect your host configuration, has some validity. Let’s cover what that entails:

- IP addresses

- iSCSI/SMB storage connections, if any

- Network card configuration

- Teaming configuration

- Windows Update configuration

- Hyper-V host settings, such as VM file and storage locations and Live Migration settings

If a basic Hyper-V Server/Windows Server installation is around 20GB, how much of it is consumed by the above? Hardly any. A completely negligible amount, in fact. There’s really no justification for backing up the entire management operating system just to capture what you see above.

A Better Strategy for Protecting Your Hyper-V Management Operating System

If we’re not going to back up the management operating system, then what’s our backup and recovery strategy? In the event of total system loss, what is our path back to a fully functional operating environment?

In a typical disaster recovery document, each system, or system type, is broken into two parts: the backup portion and the recovery portion. We’ll investigate our situation from that perspective and create a Hyper-V “Backup” strategy.

“Backing Up” the Management Operating System

There are three parts to the “back up” phase.

- A clean environment

- A copy of all settings and drivers

- Regularly updated installation media

Make Sure the Environment is Clean

Before you can even think about not running a standard backup tool, you need to make sure that your management operating system is “clean”. That means there can’t be anything there, besides the virtual machines, that you would miss in the event of a complete system failure. Look in your documents and downloads folders, check the root of the C: drive for non-standard folders, and use my orphaned VM file locator to see if you’ve got a detached VHDX with important things in it. Find a more suitable home for all of those items and institute a practice to never leave anything of value on a hypervisor’s management operating system.
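Parts of that inspection are easy to script. The following is a minimal Python sketch, not the orphaned VM file locator mentioned above. It lists anything at the root of a drive that isn’t in an allowlist, plus every file in each profile’s Desktop, Documents, and Downloads folders. The allowlist is an assumption and deliberately incomplete, so treat the output as a list to review by hand rather than a verdict.

```python
import os
from pathlib import Path

# ASSUMPTION: a typical (not exhaustive) allowlist of top-level names on a
# Windows Server system drive, compared case-insensitively. Anything else is
# reported so a person can decide whether it needs a better home.
STANDARD_ROOT_NAMES = {
    "$recycle.bin", "$winreagent", "boot", "bootmgr", "bootnxt",
    "documents and settings", "hiberfil.sys", "pagefile.sys", "perflogs",
    "program files", "program files (x86)", "programdata", "recovery",
    "swapfile.sys", "system volume information", "users", "windows",
}

# Per-profile folders where stray files tend to accumulate.
USER_FOLDERS = ("Desktop", "Documents", "Downloads")


def nonstandard_root_entries(drive_root: Path) -> list[str]:
    """Names at the root of the drive that are not in the allowlist."""
    return sorted(entry.name for entry in drive_root.iterdir()
                  if entry.name.lower() not in STANDARD_ROOT_NAMES)


def stray_user_files(users_root: Path) -> list[Path]:
    """Every file under each profile's Desktop/Documents/Downloads folders."""
    found = []
    for profile in users_root.iterdir():
        if not profile.is_dir():
            continue
        for folder in USER_FOLDERS:
            top = profile / folder
            if not top.is_dir():
                continue
            # os.walk silently skips directories it is not allowed to read.
            for dirpath, _dirnames, filenames in os.walk(top):
                found.extend(Path(dirpath) / name for name in filenames)
    return sorted(found)
```

On a host, you might call `nonstandard_root_entries(Path("C:/"))` and `stray_user_files(Path("C:/Users"))` and review each result. Detached VHDX files still need the separate check described above.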

Protect Settings and Drivers

Next, we need to deal with the host settings. I don’t want you to need to memorize these or anything crazy like that. What I recommend is that you save these settings in a script file. I have designed one for you to customize. You can modify it and save it anywhere. You can keep it on one or more USB keys and leave one wherever your offsite backups are kept. You can copy it up to your cloud provider. It’s small enough to fit in any free online storage account, even. I also counsel you to keep those settings in a plain text file. Doing so is a must if you’re not going to script your settings. Even if you are going to script them, you might have other bits of data that you can’t add to that script, such as the IP address for an out-of-band management device. For small environments, I like to make paper copies of these and store them with other disaster recovery paper documents. Just for the record, this is not a Hyper-V-specific practice. Good backups or not, server configurations should always be documented.
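If you keep a plain-text copy alongside (or instead of) a settings script, a structured format makes it both printable and machine-readable. This is a minimal Python sketch, not the customizable script mentioned above. The `HostSettings` field names are hypothetical and loosely mirror the configuration list earlier in the article (IP addresses, teaming, storage connections, Windows Update, Hyper-V locations, Live Migration), plus the out-of-band management address that a script can’t set for you. Add fields for whatever else your hosts need, such as NIC settings.

```python
import json
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class HostSettings:
    """One host's rebuild-critical settings (field names are hypothetical)."""
    hostname: str
    ip_addresses: dict[str, str]      # adapter or team name -> "address/prefix"
    teams: dict[str, list[str]]       # team name -> member NIC names
    windows_update: str               # e.g. which WSUS server to point at
    vm_path: str                      # Hyper-V default virtual machine location
    vhd_path: str                     # Hyper-V default virtual hard disk location
    live_migration_networks: list[str]
    storage_connections: list[str] = field(default_factory=list)  # iSCSI/SMB targets
    oob_management_ip: str = ""       # out-of-band device; documented, not scripted


def save_settings(settings: HostSettings, path: Path) -> None:
    """Write the record as indented JSON: plain text a person can read or print."""
    text = json.dumps(asdict(settings), indent=2, sort_keys=True)
    path.write_text(text + "\n", encoding="utf-8")


def load_settings(path: Path) -> HostSettings:
    """Read a record written by save_settings back into a HostSettings."""
    return HostSettings(**json.loads(path.read_text(encoding="utf-8")))
```

JSON is used here only because it prints legibly for the paper copy and can be parsed again if you later automate the rebuild. A record like this stays well under a few kilobytes per host, which fits the point about how little of the management operating system actually matters.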

With your settings properly stored away, think next about what you need to run the environment. I’m specifically thinking about drivers and computer system manufacturer’s tools. If your operating environment is large enough, just keeping them on a file server might do. If your file server is dependent upon your Hyper-V host, that might not work so well. You could place them on the USB key with your settings, you could copy them to an external source that holds your virtual machine backups, or you could burn a DVD with them. You could also take the view that if you had to rebuild a host you’d probably skip using anything that’s been sitting in storage since your last disaster recovery update and just get the latest stuff from the manufacturer. If that’s the case, then record the must-have items in your documentation so that you don’t spend a lot of time looking through download lists. As an example:

- Chipset drivers

- Network adapter drivers

- RAID drivers

Protect the Installation

I anticipate that the number one complaint I’ll get about the title and content of this article is that recovering a Windows Server or Hyper-V Server from installation media is a time-consuming process. That’s not because installation itself needs much time, but because Microsoft has ceased making any attempts to keep operating system ISOs up-to-date. As far as I know, the most recent 2012 R2 ISO that is generally available only includes Update 1, which is over two years old. Patching from that point to current could easily take over an hour, and that’s if you’ve got an in-house WSUS system and can skip the Internet pull. Therefore, that complaint is almost valid. However, it’s so easily mitigated that once you know how, it would be embarrassing not to.

The basic approach is simple. Stop using DVDs to deploy physical hosts. It’s slow and unnecessary. You have three superior options:

- Deploy from USB media

- Deploy from Windows Deployment Services (WDS)

- Bare metal deployment from System Center Virtual Machine Manager

Out of all of these methods, the second is my favorite. WDS is relatively lightweight, with the heaviest component being the large WIM files that you must save. If you’re a smaller business, it would work well as a role on your file server. It’s still not necessarily feasible for the very small shops that only do a handful of deployments in the course of a year (if that). For those, the first option is probably the best. For the cost of two or three USB keys, you can fairly easily maintain ready-to-go reinstallation images. Bare metal deployment from VMM is my least favorite. I’ve used it myself for a while because I have a fair number of hosts to deal with and it has some Hyper-V-centric features that WDS does not. However, I’m starting to lean back toward the WDS method. VMM’s bare metal deployment has always been a rickety contraption, and each new update rollup adds new bugs and untested features that are relentlessly driving it toward being yet another vestigial organ in the morass of uselessness that is VMM.

The first two deployment methods use the Windows Imaging Format (WIM). VMM uses VHDX. Native tools in Windows Server can update a Windows installation in either file type without bringing it online. That’s an extremely important time saver. You can schedule a patch cycle against these files while you sleep and have an always-ready, always-current deployment source if you should need to replace a failed host or stand up a new one. The only things that you need are an installation of Windows Server Update Services (WSUS) and a copy of a script that I wrote specifically for updating VHDX and WIM files, which is available in a separate article. If you haven’t been using WSUS, you should start as quickly as possible. It’s another lightweight deployment that mostly relies on disk space. If you only select Windows Server 2012 R2, it can use as little as 8 GB of drive space (the end-user products can require dozens of GB of space, so be careful). The script’s article also provides links to setting up a WDS system, if you’re ready to break free of media-based deployments once and for all.
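The offline servicing described above can be illustrated with DISM, the imaging tool built into Windows. This is not the author’s update script; it’s a minimal Python sketch that assumes the applicable .msu/.cab update files are already gathered in one folder (for example, pulled from WSUS) and that it runs from an elevated prompt on Windows. It only uses DISM’s /Mount-Image, /Add-Package, and /Unmount-Image options; all paths are placeholders.

```python
import subprocess
from pathlib import Path


def offline_patch_commands(image_file: Path, index: int, mount_dir: Path,
                           update_dir: Path) -> list[list[str]]:
    """Build DISM command lines that patch one WIM/VHDX image offline.

    Order: mount the image, add each .msu/.cab found in update_dir,
    then unmount and commit the changes back into the image file.
    """
    packages = sorted(p for p in update_dir.iterdir()
                      if p.suffix.lower() in (".msu", ".cab"))
    commands = [["dism", "/Mount-Image", f"/ImageFile:{image_file}",
                 f"/Index:{index}", f"/MountDir:{mount_dir}"]]
    for pkg in packages:
        commands.append(["dism", f"/Image:{mount_dir}", "/Add-Package",
                         f"/PackagePath:{pkg}"])
    commands.append(["dism", "/Unmount-Image", f"/MountDir:{mount_dir}", "/Commit"])
    return commands


def run_patch_cycle(commands: list[list[str]]) -> list[str]:
    """Run the commands in order and return the packages DISM refused.

    Updates that do not apply to the image may also produce a non-zero
    exit code, so a refused package is recorded rather than treated as fatal.
    """
    mount, *adds, unmount = commands
    subprocess.run(mount, check=True)
    refused = []
    try:
        for cmd in adds:
            if subprocess.run(cmd).returncode != 0:
                refused.append(cmd[-1].removeprefix("/PackagePath:"))
    finally:
        # Always try to unmount so the image is not left mounted.
        subprocess.run(unmount, check=True)
    return refused
```

Scheduling something like this overnight against each install.wim index (or a VHDX, for which DISM expects index 1) is what keeps the deployment source current. Committing after a partial failure is a simplification; you may prefer /Discard if the mount step succeeded but something unexpected went wrong.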

I typically don’t add drivers right into the image’s Windows installation because so many require full .EXEs, but you can add .inf-based drivers using the Add-WindowsDriver cmdlet. You can dig into my script a bit to see how I get to that point. What I am more inclined to do is mount the image file and copy the driver installers into a non-system folder so that I can run them after deployment.
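The staging approach can be sketched the same way. This is a minimal Python sketch that assumes the image is already mounted (for example, with DISM’s /Mount-Image) and that your downloaded installers sit in one folder; the `DriverInstallers` folder name is hypothetical. For .inf-based drivers, the helper builds DISM’s /Add-Driver command, the command-line counterpart of Add-WindowsDriver.

```python
import shutil
from pathlib import Path

# HYPOTHETICAL folder name inside the mounted image; any non-system path works.
STAGING_FOLDER = "DriverInstallers"


def stage_driver_installers(source_dir: Path, mount_dir: Path) -> list[Path]:
    """Copy full installers (.exe/.msi) into a non-system folder of a mounted
    image so they can be run by hand after deployment."""
    target = mount_dir / STAGING_FOLDER
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for installer in sorted(source_dir.iterdir()):
        if installer.is_file() and installer.suffix.lower() in (".exe", ".msi"):
            copied.append(Path(shutil.copy2(installer, target)))
    return copied


def inf_driver_command(mount_dir: Path, inf_dir: Path) -> list[str]:
    """DISM command that injects every .inf driver found under inf_dir."""
    return ["dism", f"/Image:{mount_dir}", "/Add-Driver",
            f"/Driver:{inf_dir}", "/Recurse"]
```

Copying installers is deliberately the conservative path: nothing changes in the Windows installation itself, and the files are simply waiting on the new host.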

“Restoring” the Management Operating System

With the above completed, you’re ready in case a Hyper-V host should need to be rebuilt. All you need to do is:

- Install from your prepared media

- Reload the drivers that you have ready (or just download new ones)

- Re-establish your settings

- Restore your virtual machines

I haven’t timed the process on current hardware, but I’d say that you should reasonably expect to get through the first three steps in somewhere between 20 minutes and an hour. I think the hour is a bit long, and it depends on how quickly your host can boot. I know that the more recent Dell rackmount hosts take longer to warm boot than my old 286 needed to cold boot, but that time investment would be a constant whether you were performing a traditional bare metal restore or using one of these more streamlined methods. Once that’s done, the time to restore virtual machines depends entirely on the virtual machines’ sizes, your software, and your hardware.
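Because VM restore time is mostly a matter of data volume and throughput, a back-of-the-envelope estimate is easy. This is a minimal Python sketch with hypothetical inputs; the throughput you plug in should come from your own test restores, not from a vendor datasheet.

```python
def restore_hours(vm_sizes_gb: list[float], throughput_mb_s: float) -> float:
    """Rough restore time in hours: total data divided by sustained throughput.

    Uses decimal units (1 GB = 1000 MB). Ignores per-VM overhead,
    deduplication/compression, and throughput that varies during the job.
    """
    if throughput_mb_s <= 0:
        raise ValueError("throughput must be positive")
    total_mb = sum(vm_sizes_gb) * 1000
    return total_mb / throughput_mb_s / 3600
```

For example, `restore_hours([200, 400, 120], 200)` returns `1.0`: 720 GB at a sustained 200 MB/s takes about an hour, on top of the host rebuild time estimated above.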


11 thoughts on "Hyper-V Backup Strategies: Don’t Worry about the Management OS"

Nice article. Worth considering. I am kind of a “back up everything” guy 🙂 What about a Hyper-V cluster node? Should we back it up? Or just join a new server to the cluster? What’s your opinion?

I am even less inclined to back up a cluster node than a standalone Hyper-V host.

Our Hyper-V cluster is 2008 R2. It’s now 4 years old and I am inclined to not touch anything. Some of the VMs are a bit shirty, and while I wish I could back it up, some idiot has designed a system that keeps 4TB of (3 copies of) files on one VM and 2 copies of the same on 2 other VMs.

I have never tried to restore a backup from the backup system that only has 6TB ^^;

I’m very new to Hyper-V and the do’s and don’ts frighten the hell out of me. As a small business of 25 employees, I have always run single physical servers for DC/AD and file shares. We recently migrated to Sage 200C, which requires AD to authenticate. We lost our old DC/AD server a few weeks back due to catastrophic hardware failure. The machine was 7 years old, running Server 2012 R2. We decided to install a new server with Server 2019, recreated all the accounts, restored all the files, and we were good to go, except we weren’t, because of Sage. Sage/SQL Server needed a lot of work to reintegrate the Sage authentication with AD for users to log in. Gone are the days of defining user access within Sage itself. We run Sage in a 2016 Hyper-V guest running on a Server 2016 host. We also needed additional storage for the business, so we invested in a server with lots of storage space and fast discs for the VM. But given the comments above, the host server space should not be used, because we are not supposed to run anything on the host OS. The cost to small businesses is prohibitive when you look at server OSes and CALs and the apparent restrictions imposed on what you can and cannot run on the host OS. Once upon a time there was a product called SBS, an all-in-one product that handled everything from DC, AD, and Exchange to file shares. My feeling now is that we have very, very expensive solutions to run the simplest of tasks, and they also bring a lot of technical issues that small businesses just cannot handle, both in cost and expertise.

There is a great deal of lament for the loss of SBS. You’re absolutely right that there is no longer a cost-effective way for small businesses to run a full Microsoft stack on-premises. I am 100% on your side on that. No one that can do anything about it is listening anymore, though.

I don’t know what your entire VM outlay looks like, but you can still carve up a big chunk of that storage space into a VHDX and expose it in a virtual machine with SMB.