Table of contents

- 1. Keep Your Hosts Current

- 2. Install Integration Services and Keep Them Current

- 3. Do Not Try to Use Dynamic Memory Everywhere

- 4. Always Monitor Guest Memory Usage

- 5. Do Not Neglect the Startup Value

- 6. Bet High on Minimum Values

- 7. Bet Low on Maximum Values

- 8. Use Smaller Buffers for Larger and Static Virtual Machines

- 9. Use Memory Weight to Guide Memory Allocation

- 10. Do Not Rely on the Guest’s Memory Report

- 11. Watch for Natural Ebb and Flow Cycles that Can be Exploited with Dynamic Memory

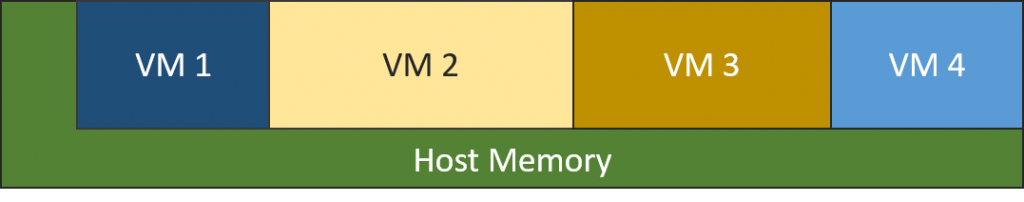

One of the promises made by virtualization is that hardware will be more efficiently utilized. We see that most strongly with CPU, where even the processors on densely packed hosts tend to have more free cycles than not. Memory is at the opposite end of that spectrum. It exhausts so quickly that it almost always defines the limit for the number of virtual machines on any given host. Your best tool for keeping memory consumption tamed is careful management, which, as we all know, is mostly a pipe dream. Precision management requires time that few administrators have and leaves no room for error or growth. Therefore, we must instead turn to a readily available tool: Dynamic Memory. It does not absolve us of the responsibility of due diligence and proper management, but it can turn this:

Underutilized Host Memory

Into this:

Better Computing Through Dynamic Memory

Dynamic Memory allows you to get more usage out of the hardware that you own. It can reduce the number of hosts that you require, which saves on both hardware and licensing costs. It can also help alleviate any burden upon you to attempt to micro-manage the memory allocation of your guests. It is not a magic bullet, unfortunately, nor is it entirely a “set-it-and-forget-it” technology. There are a number of best practices to get the most out of Dynamic Memory.

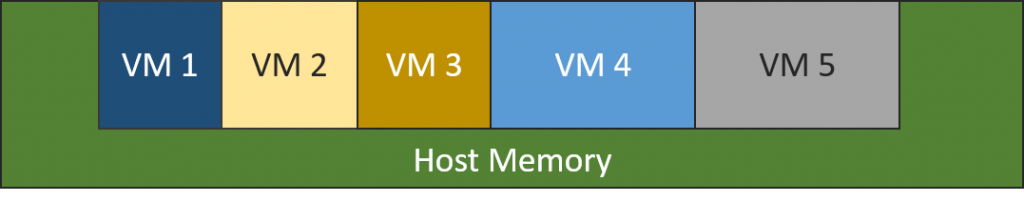

For reference, the following image shows the Dynamic Memory configuration page for a Hyper-V virtual machine:

Dynamic Memory Configuration

1. Keep Your Hosts Current

A bug was discovered in Hyper-V that prevented guests from receiving additional memory, even when demand was up and more was available. The KB article (KB3095308) and patch acquisition instructions are available from Microsoft’s support site. Even when there aren’t any patches available specifically for Dynamic Memory (which is the norm), you still benefit from the patching process itself. Patching a clustered host usually means Live Migrating its guests to another node first, and Live Migrating a guest is a signal to Dynamic Memory to see if there is anything to clean up. Rebooting an unclustered host resets memory allocation for guests that shut down and triggers a reassessment for others in a similar fashion to Live Migration.

2. Install Integration Services and Keep Them Current

All Windows and Windows Server operating systems currently in support are shipped with Integration Services as part of the operating system, although the lower editions of Windows do not support Dynamic Memory (specifics up through Windows 8.1 are available on TechNet). If these fall out of date, you may not get optimal Dynamic Memory behavior. Remember that until the WS2016/W10 code base, Integration Services can only be updated by using the vmguest.iso on the host.

Many Linux guests support Dynamic Memory if the Integration Components are installed. Start with TechNet’s root page for Linux on Hyper-V. On the left, locate your distribution, or the one nearest to it. You’ll find details on support for Integration Components; the Integration Components are now part of almost every distribution. You may find some particular instructions for ensuring that Dynamic Memory works for your particular distribution, such as memory sizing requirements.

3. Do Not Try to Use Dynamic Memory Everywhere

If you’re using a popular server application, sometimes you can just perform an Internet search for that product and “Dynamic Memory”. For example, “Can I use Dynamic Memory with Exchange?” Always give greater weight to original manufacturer documentation than independent blogs or technical forums… not because the manufacturer is necessarily the best source, but because the manufacturer controls support. Some software vendors will go to the effort of finding out how their application operates with the Windows memory manager and publish solid guidelines. Others will just leave you to your own devices. Unfortunately, many of the vendors I’ve had to work with publish very arbitrary numbers and insist that you reserve 100% of it. You can increase densities and save money by ignoring their requirements, but you risk being placed in support limbo as a result.

4. Always Monitor Guest Memory Usage

Use some automated tool to keep an eye on the memory demand and assignment values for your virtual machines. At worst, schedule something like this:

Get-VM | where DynamicMemoryEnabled -eq $true | select Name, MemoryAssigned, MemoryMinimum, MemoryMaximum, MemoryDemand | Export-Csv -Path C:\Logs\DynamicMemoryReport.csv -NoTypeInformation -Append

5. Do Not Neglect the Startup Value

Dynamic Memory for a single virtual machine has three allocation values: Minimum, Startup, and Maximum. The Startup memory is what will be allocated to the guest at the moment that it is turned on. That amount will be immutable until the Dynamic Memory driver in the guest successfully communicates with Hyper-V. The Startup value is the most critical place to control host densities. When a host is booted and the Virtual Machine Management Service (VMMS) starts, it looks for all of the virtual machines set to automatically start. If it detects that it is recovering from a crash, it also looks for VMs that were running when that happened. It tries to start all of them simultaneously. Except in the few cases where Smart Paging kicks in (look in the “Minimum memory configuration with reliable restart operation” section near the top of that page), the amount of available physical host memory determines which virtual machines will be turned on. If you have set priorities, that is a guide that VMMS will follow in low memory conditions, but it is not priorities that prevent guests from starting; it is memory starvation. Making reductions to the Startup values can alleviate this situation.
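To get a rough sense of whether a host can satisfy every Startup value after a reboot, you can total the Startup memory of the virtual machines that are set to start automatically and compare it to the host's physical memory. The following is a minimal sketch rather than a sizing tool. The function name Measure-StartupDemand and the 4GB allowance for the management operating system are assumptions made for illustration, and the sketch ignores per-VM overhead and Smart Paging.

```powershell
# Hypothetical helper: totals Startup memory for a set of VM objects.
# It accepts anything with MemoryStartup and AutomaticStartAction properties,
# such as the output of Get-VM.
function Measure-StartupDemand {
    param(
        [Parameter(Mandatory)] [object[]] $VM,
        [Parameter(Mandatory)] [long] $HostMemoryBytes,
        [long] $HostReserveBytes = 4GB   # assumed allowance for the management OS
    )
    # Only VMs that may start on their own at boot count toward the total.
    $autoStart = @($VM | Where-Object { "$($_.AutomaticStartAction)" -ne 'Nothing' })
    $total = [long](($autoStart | Measure-Object -Property MemoryStartup -Sum).Sum)
    $available = $HostMemoryBytes - $HostReserveBytes
    [pscustomobject]@{
        AutoStartCount = $autoStart.Count
        StartupTotalGB = [math]::Round($total / 1GB, 2)
        AvailableGB    = [math]::Round($available / 1GB, 2)
        Fits           = $total -le $available
    }
}

# On a Hyper-V host (requires the Hyper-V module):
# Measure-StartupDemand -VM (Get-VM) -HostMemoryBytes (Get-VMHost).MemoryCapacity
```

If Fits comes back false, lowering Startup values on the least critical guests is the first place to look.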

On the flip side of assigning too much Startup memory is not assigning enough. If you’re in the position of installing Windows Server 2012 through 2012 R2, the installer will lock you at the product key screen if you assigned less than 1 gigabyte. For normal operations, I have not personally encountered any issues with typical Windows Server installations using 512 MB as the Startup value. I have received and read a few reports from people who have encountered problems that appear to be related to operating system cache sizes being insufficient when Dynamic Memory was used. Unfortunately, I have never seen any of these chased down to a root cause or a meaningful fix; it seems that everyone who has encountered the issue has simply stopped using Dynamic Memory. While that is certainly expedient, it is absolutely not the solution that I would recommend.

6. Bet High on Minimum Values

Many people have not yet noticed that you can lower Dynamic Memory’s minimum value at any time. The virtual machine only needs to be turned off if you raise the minimum value. If you routinely see in your monitoring system that a virtual machine’s demand is lower than its assigned value and that the assigned value is near the Startup value, that is potentially a candidate for a lower minimum Dynamic Memory value. Reduce it a little bit. Listen to your monitoring system and your users. Memory usage can be a fickle thing and there are applications whose memory requirements can spike quickly. If you lowered your minimum too far, there may not be enough to keep the memory assigned during that spike within acceptable ranges.
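The candidate test described above can be sketched as a small filter. This is an illustration under stated assumptions: the function name Get-MinimumCandidate is hypothetical, and treating "near" as within 10% of the Startup value is an arbitrary choice. A single sample proves little, so check your monitoring history before acting on the result.

```powershell
# Hypothetical filter: returns VMs whose demand is below their assignment
# while the assignment sits within $TolerancePercent of the Startup value.
function Get-MinimumCandidate {
    param(
        [Parameter(Mandatory)] [object[]] $VM,
        [double] $TolerancePercent = 10   # assumed meaning of "near"
    )
    foreach ($v in $VM) {
        $gap = [math]::Abs($v.MemoryAssigned - $v.MemoryStartup)
        $nearStartup = $gap -le ($v.MemoryStartup * $TolerancePercent / 100)
        if ($v.MemoryDemand -lt $v.MemoryAssigned -and $nearStartup) { $v }
    }
}

# On a Hyper-V host (requires the Hyper-V module):
# Get-MinimumCandidate -VM (Get-VM | Where-Object DynamicMemoryEnabled)
# Set-VMMemory -VMName 'app01' -MinimumBytes 384MB   # placeholder name and value
```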

7. Bet Low on Maximum Values

Just as the minimum can be lowered at any time, the maximum can be raised at any time. This is much more critical than the minimum. By default, the maximum Dynamic Memory value for every virtual machine is 1 terabyte. In theory, this is perfectly safe. Hyper-V will never allocate any more memory to a guest than the host can spare. In practice, this is very dangerous.

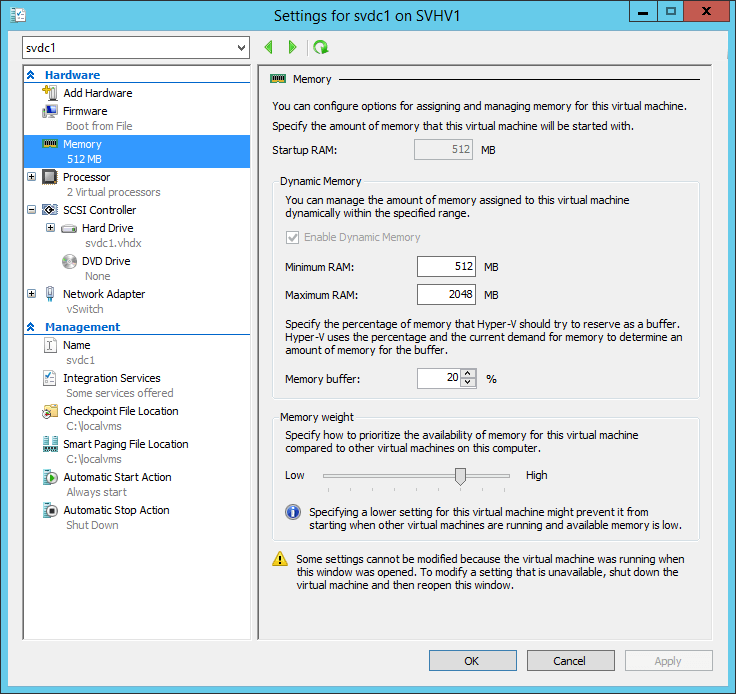

While most things will work fine, there is a very nasty little issue called the “memory leak”. I’m sure you’ve heard of it. If you didn’t know what it was and were afraid to ask, I’ll tell you. A memory leak is when an application requests memory from the operating system, that request is granted, and then the application loses track of it. As far as the operating system is concerned, that memory still belongs to the application. The application forgot about it. Piecemeal, memory leaks aren’t a big deal. An application can leak a few bytes here and there without ever causing a problem. Sometimes, the problem is much more pronounced. An application might request memory and use it to represent a particular construct, such as a user setting. It might then have been intended to release the memory for that user setting while the user doesn’t need it, only to request it again when the need returns. If the programmer made an error and that memory is never released, then each subsequent request results in more memory being allocated but never returned. The quantity of the memory being requested combined with the frequency of the memory being requested is the direct measurement of the severity of the leak. The following chart illustrates the difference between an application that’s working and the same application if it has a bug and doesn’t properly release memory:

Memory Leak Example

In the “Leaking Application” line, at every point in the chart that an object is freed, the related memory is not being released. This application’s memory allocation continues to climb over time. In contrast, the properly operating version moves up and down during its normal course of operation. If left unchecked, the leaking application will continue to consume more and more memory until it is closed or its operating system can no longer service its requests.

Obviously, this condition is the fault of the application developer, but that fact doesn’t help you much. Your job is to ensure that all virtual machines are providing their services. The only way that you can control this is by using a sensible maximum for the virtual machine’s memory. Remember that an application that performed perfectly yesterday may have an as-yet undiscovered memory leak and that patches can always break more than they fix.

Use your monitoring tool as a guide to set reasonable maximums and watch for unusual changes in demand.
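A quick way to find guests that are still exposed is to list the ones whose maximum was never lowered from the default. The sketch below assumes the 1 terabyte default described earlier; the function name Get-UncappedVM is hypothetical.

```powershell
# Hypothetical filter: lists Dynamic Memory VMs whose maximum is still at or
# above a ceiling (1TB by default, matching the default maximum).
function Get-UncappedVM {
    param(
        [Parameter(Mandatory)] [object[]] $VM,
        [long] $CeilingBytes = 1TB
    )
    $VM | Where-Object { $_.DynamicMemoryEnabled -and $_.MemoryMaximum -ge $CeilingBytes }
}

# On a Hyper-V host (requires the Hyper-V module):
# Get-UncappedVM -VM (Get-VM) | Select-Object Name, MemoryDemand, MemoryMaximum
# Set-VMMemory -VMName 'app01' -MaximumBytes 2GB   # placeholder name and value
```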

8. Use Smaller Buffers for Larger and Static Virtual Machines

The buffer is simply shown as a percentage in the configuration window without any context. The basis against which this percentage is calculated is the currently assigned memory value. So, if Dynamic Memory has given 4.2GB to a virtual machine and the buffer is set to 20%, 0.84GB of memory is set aside for memory expansion. On its own, the buffer is not necessarily dangerous. While Hyper-V will be resistant to giving away one virtual machine’s buffered memory to another VM, it will do so if demand requires it. Still, add up all the buffers for all the virtual machines in your host, and you can see that it’s almost certain that at least a few systems’ desires are not being met.

The buffer is simply there to make expansion operations occur more quickly in response to a virtual machine’s increased demand. For virtual machines that already have a lot of memory assigned, it’s not very likely that an expansion will require what that buffer has to offer. Reduce it.

For virtual machines that don’t change much, the buffer is somewhat pointless. Once you find a virtual machine’s equilibrium, reduce or even eliminate the buffer.
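The buffer arithmetic from this section is easy to reproduce. The helper below is a hypothetical illustration named Get-BufferBytes; it applies the percentage to the currently assigned memory, using the 4.2GB and 20% figures from the example above.

```powershell
# Buffer size as described in this section: a percentage of assigned memory.
function Get-BufferBytes {
    param([double] $AssignedBytes, [double] $BufferPercent)
    $AssignedBytes * $BufferPercent / 100
}

# The example from the text: 4.2GB assigned with a 20% buffer.
$buffer = Get-BufferBytes -AssignedBytes 4.2GB -BufferPercent 20
'{0:N2} GB' -f ($buffer / 1GB)

# On a Hyper-V host, the percentage itself is changed with Set-VMMemory:
# Set-VMMemory -VMName 'sql01' -Buffer 5   # placeholder name and value
```

Running the same calculation across every guest on a host gives a feel for how much memory the buffers are asking for in total.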

9. Use Memory Weight to Guide Memory Allocation

We already talked a bit about priority in #5. The Memory Weight field is where you control that priority. Items with a higher weight get first call on all memory assignments, both at startup and when Dynamic Memory needs to figure out where to push or pull memory.
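In PowerShell, the Memory Weight setting corresponds to the Priority parameter of Set-VMMemory, which accepts values from 0 to 100. The following is a configuration sketch only; the VM names and the chosen weights are placeholders, not recommendations.

```powershell
# Placeholder VM names and weights; run on the Hyper-V host.
Set-VMMemory -VMName 'dc01'   -Priority 80   # higher weight
Set-VMMemory -VMName 'test01' -Priority 20   # lower weight

# Review the current weights and buffers across the host.
Get-VM | Get-VMMemory | Select-Object VMName, Priority, Buffer
```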

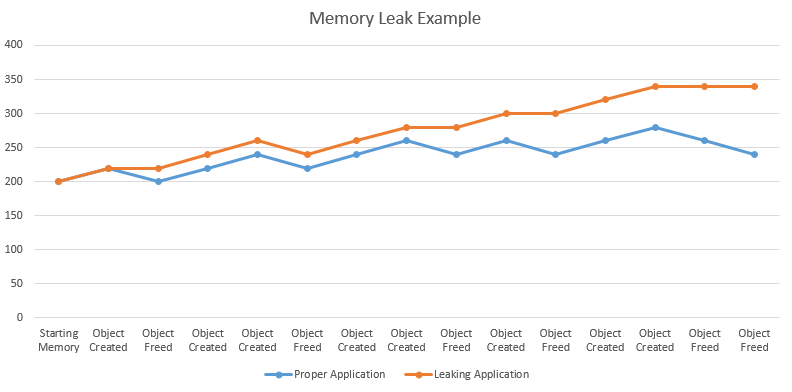

10. Do Not Rely on the Guest’s Memory Report

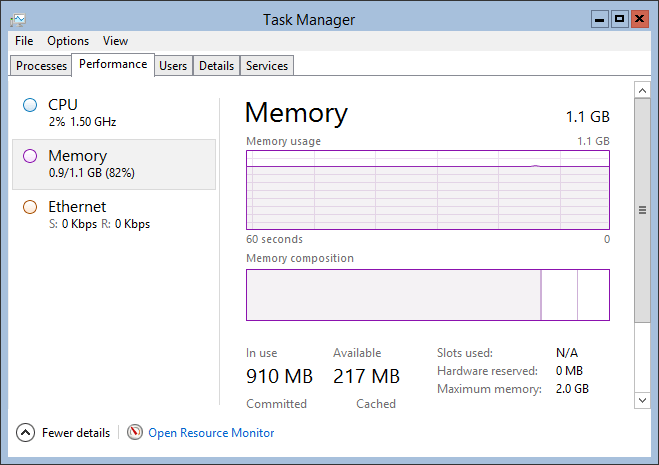

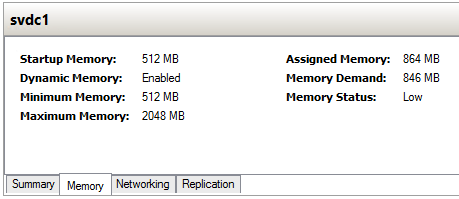

The guest’s memory manager doesn’t have a clear idea of what’s going on, so you can’t trust it. The following screen shot was taken from within a guest with Dynamic Memory enabled:

Guest View of Dynamic Memory

At the same time, the host reports this:

Host View of Dynamic Memory

The host is the reliable source of information. The guest shows its total memory as 1.1 GB. This reflects the maximum amount of memory that has ever been assigned to this guest since it was last powered on. Hyper-V shows that the maximum that can be assigned is 2 GB. The difference between the two reflects the possibility that I may have set the maximum higher than necessary. In the bottom right of the guest’s screen, you can see that it is aware that its maximum memory is 2 GB. That field is specific to newer Hyper-V guests; older operating systems did not have this ability and the field does not appear on physical systems. Next, we come to the important part. Notice that Hyper-V says that 864 MB are assigned whereas the guest says that it is using 910 MB. The Dynamic Memory driver inside the guest has locked enough memory to account for the difference. The guest believes that memory is in use by that driver. In truth, it has been released back to Hyper-V to give to one of my greedier virtual machines. The guest is wrong about its total current memory value. Be aware that while the virtual machine is turned on, that number will never decrease. The driver will claim what it needs. The number can increase from the Startup value up to the Maximum value, depending on guest needs.

11. Watch for Natural Ebb and Flow Cycles that Can be Exploited with Dynamic Memory

Some virtual machines will have natural cycles in which they have high or low memory usage in comparison to other parts of the day. For instance, if you have an application that processes employee clock punch-in/punch-out operations, they are probably busier at shift rotation times than at any other time of the day. Domain controllers tend to have the heaviest load at the start of the business day. Internet proxies often spike around lunchtime. Your monitoring tool can expose these peaks and valleys. You can use Dynamic Memory to increase densities by matching the waxing of one system to the waning of another.

Sensible Dynamic Memory Strategies

Using Dynamic Memory correctly has a bit of an art component. You can certainly use your monitoring system to plot very scientific values, but you’re going to invest a lot of time doing so, and it is highly likely that something will change before your task is complete. You can develop shortcuts to make your life easier. Here are some ideas:

- Make it a policy that Dynamic Memory is the default configuration for virtual machines. Instead of taking the attitude that someone must make the case to use Dynamic Memory, make it so that someone must provide a good reason to not use Dynamic Memory. Require that any such arguments be supported with empirical, repeatable evidence.

- When evaluating new software products, put questions about Dynamic Memory on your discovery checklist. Most importantly, will the vendor support the application in a Dynamic Memory environment?

- Establish a standard Startup and Minimum for specific operating system versions and potentially editions. For instance, I normally use 512MB for both on my 2012 R2 guests. I then monitor all systems and wait for any reasons to make changes to appear. Default maximums can be a little tougher, but feel free to pick a reasonable, but low number. I typically use 2 GB for server operating systems. Once the standards have been established, make it a requirement that anyone that builds a virtual machine off-standard must provide justification.

- If you are clustering, explore automated rebalancing. Microsoft System Center Virtual Machine Manager can provide this functionality, but you could also sit down and write a PowerShell script that auto-balances VMs based on memory demand.

For more information on Hyper-V Dynamic Memory, check out our illustrated guide here. If you’re looking for help with Hyper-V Dynamic Memory configuration, check out our guide.

12 thoughts on "10 Best Practices and Strategies for Hyper-V Dynamic Memory"

Does Smart Paging figure into the equation?

Can you be more specific in what you’re looking for?

Hi Eric,

I have a Hyper-V host with 64GB ram with 2VMs (VM1 with 40GB RAM and VM2 with 16GB RAM), so the Host has 8GB ram to run on. The host and all the VMs are running on Windows Server 2016, but the performance of the host is very poor with memory utilization always over 80%.

Currently there are no services running on any of the VMs, as I need to sort out the host memory issue before deploying any services.

Could you please advise?

You’ll need to use memory tools to see what the host is doing with its memory. Task Manager should generally suffice. RAMMap is good. If it’s all in Standby then I wouldn’t think it would cause a problem.

High memory utilization does not necessarily translate to poor performance. You’ll need to run performance traces across a greater breadth of KPIs to determine the bottleneck.

Like last week, a great subject for “people like me”. I knew I was running dynamic memory, so that’s a tick, but I thought I’d check my settings too. I have a Host with 36GB RAM Installed, (weird because I thought it was two pairs)

Anyhow I have 4GB and 8GB for startup on the two VM’s and minimums of 512MB and maximums of 1TB and 20% buffer.

Are those figures default? I couldn’t imagine setting a maximum way above available physical RAM, but there you go. I guess the system does what you say, it manages it all very well.

Thanks Eric, for another top post.

Sorry, I see you said a Terabyte IS the default max. Pays to read twice, comment once. 🙂