Should you use fixed or dynamic virtual hard disks (VHDs) for your virtual machines? The basic dilemma is the balance of performance against space utilization. Sometimes, the proper choice is obvious. However, as with most decisions of this nature, there are almost always other factors to consider.

Pass-Through Disks

Although the focus of this article is on VHDs, it would be incomplete without mentioning pass-through disks. These are not virtualized at all; instead, they hand I/O from a virtual machine directly to a disk or disk array on the host machine. This could be a disk that is internal to the host machine, or a LUN on an external system connected by Fibre Channel or iSCSI. This mechanism provides the fastest possible disk performance but has some very restrictive drawbacks.

Pass-Through Benefits

- Fastest disk system for Hyper-V guests

- If the underlying disk storage system grows (such as by adding a drive to the array) and the virtual machine’s operating system allows for dynamic disk growth (such as Windows 7 and Server 2008 R2), the drive can be expanded within the guest without downtime.

Pass-Through Drawbacks

- Live Migration of VMs that use pass-through disks is noticeably slower and often includes an interruption of service. Because pass-through disks are not cluster resources, they must be temporarily taken offline during the transfer of ownership.

- Hyper-V’s VSS writer cannot process a pass-through disk. That means that any VM-level backup software will have to take the virtual machine offline while backing it up.

- Volumes on pass-through disks are not portable. This is easiest to understand by contrast with a VHD: you can copy a VHD from one location to another and it will work exactly the same way. Data on pass-through volumes is not encapsulated in any fashion.

The severity of the drawbacks almost always causes them to outweigh the benefits of using pass-through disks. Furthermore, Microsoft has engineered the emulated IDE and synthetic SCSI drivers in such a fashion that, when used in conjunction with a fixed VHD, performance is almost identical to that of a pass-through disk. There are almost no situations in which the marginally higher performance of the pass-through system warrants acceptance of the limitations. If superior disk performance is desired, the greatest impact will be felt by upgrading the underlying physical disk system.

Dynamic VHDs

All VHDs are just files. They have a specialized format that Hyper-V and other operating systems can mount in order to store or retrieve data, but they are nothing more than files. A dynamic VHD has a maximum size but is initially very small. The guest operating system believes that it is a regular hard disk of the maximum size. As the guest adds data to that disk, the underlying VHD file grows. This technique of allocating only the space that is actually in use, rather than everything that might eventually be needed, is commonly referred to as "thin provisioning".
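To make the thin-provisioning idea concrete, here is a minimal Python sketch, not Hyper-V's implementation: a hypothetical `ThinDisk` class that reports its full maximum size to the "guest" but only allocates backing storage for a block the first time something is written into it. The 2 MB block size is an assumption chosen to match the default block size of the classic VHD format.

```python
class ThinDisk:
    """Toy model of a dynamically expanding (thin-provisioned) virtual disk.

    Hypothetical illustration only: it tracks which fixed-size blocks have
    been written, not the real VHD on-disk format.
    """

    def __init__(self, max_size, block_size=2 * 1024 * 1024):
        self.max_size = max_size        # size the guest believes it has
        self.block_size = block_size    # allocation granularity (assumed 2 MB)
        self.allocated = set()          # indexes of blocks backed by real storage

    def write(self, offset, length):
        """Record a guest write; allocate any blocks it touches for the first time."""
        if offset < 0 or length <= 0 or offset + length > self.max_size:
            raise ValueError("write outside the virtual disk")
        first = offset // self.block_size
        last = (offset + length - 1) // self.block_size
        self.allocated.update(range(first, last + 1))

    @property
    def physical_size(self):
        """Bytes of backing storage consumed so far (data blocks only)."""
        return len(self.allocated) * self.block_size


GB = 1024 ** 3
disk = ThinDisk(max_size=40 * GB)
disk.write(0, 4096)        # first write allocates one 2 MB block
disk.write(1024, 4096)     # same block again: no further growth
print(disk.max_size // GB, disk.physical_size // (1024 * 1024))   # 40 2
```

Writing to every block, as a full-surface disk test would, drives `physical_size` all the way up to `max_size`, at which point the thin-provisioning savings are gone.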

Dynamic VHD Benefits

- Quick allocation of space. Since a new dynamic VHD contains only header and footer information, it is extremely small and can be created almost immediately.

- Minimal space usage for VM-level backups. Backup utilities that capture a VM operate at the VHD level and back up each VHD in its entirety, no matter what it contains. The smaller the VHD, the smaller (and faster) the backup.

- Over-commit of hard drive resources. You can create 20 virtual machines with 40GB boot VHDs on a hard drive array that only has 500GB of free space.
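The arithmetic behind the over-commit example is simple but worth spelling out: the ratio of provisioned to available space, and the average amount each guest can actually consume before the array fills. A minimal Python sketch (the function name is illustrative, and all sizes are in GB):

```python
def overcommit_summary(vm_count, vhd_max_gb, free_gb):
    """Summarize a thin-provisioning plan; all sizes in GB."""
    provisioned_gb = vm_count * vhd_max_gb     # what the guests believe they have
    ratio = provisioned_gb / free_gb           # > 1.0 means overcommitted
    avg_budget_gb = free_gb / vm_count         # average real usage each VM can afford
    return provisioned_gb, ratio, avg_budget_gb


print(overcommit_summary(20, 40, 500))   # (800, 1.6, 25.0)
```

In the example, 800 GB is promised against 500 GB of real space, so the plan only holds while the guests average less than 25 GB of actual data each.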

Dynamic VHD Drawbacks

- Slower than fixed disks.

- Substantially higher potential for VHD fragmentation.

- The block allocation table always exists in a dynamic VHD, so a fully expanded dynamic VHD will be somewhat larger than a fixed VHD of the same usable size.

- Thin-provisioned volumes in an overcommitted environment can fill the underlying storage completely, at which point Hyper-V pauses the affected virtual machines.
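To put a rough number on the block allocation table overhead, the Python sketch below follows the layout in Microsoft's published VHD (not VHDX) format specification: a fixed VHD is the raw data plus a 512-byte footer, while a dynamic VHD also carries a footer copy, a 1,024-byte dynamic header, a 4-byte BAT entry per block, and a sector bitmap in front of every data block. It assumes the default 2 MB block size and ignores any alignment padding a particular implementation may add, so treat the result as an estimate.

```python
SECTOR = 512          # VHD sector size in bytes
FOOTER = 512          # hard disk footer, present in fixed and dynamic VHDs
DYN_HEADER = 1024     # dynamic disk header, dynamic VHDs only


def round_up(n, multiple):
    """Round n up to the next multiple (VHD structures are sector-aligned)."""
    return -(-n // multiple) * multiple


def fixed_vhd_bytes(disk_bytes):
    """File size of a fixed VHD: raw data followed by the footer."""
    return disk_bytes + FOOTER


def full_dynamic_vhd_bytes(disk_bytes, block_size=2 * 1024 * 1024):
    """Approximate file size of a dynamic VHD once every block is allocated."""
    blocks = -(-disk_bytes // block_size)                         # ceiling division
    bat = round_up(blocks * 4, SECTOR)                            # 4-byte entry per block
    bitmap = round_up((block_size // SECTOR + 7) // 8, SECTOR)    # 1 bit per sector
    return (FOOTER                       # copy of the footer at the start of the file
            + DYN_HEADER
            + bat
            + blocks * (bitmap + block_size)
            + FOOTER)                    # footer at the end of the file


GiB = 1024 ** 3
extra = full_dynamic_vhd_bytes(40 * GiB) - fixed_vhd_bytes(40 * GiB)
print(extra, round(extra / 1024 ** 2, 2))   # 10569216 10.08
```

Under these assumptions, a fully expanded 40 GB dynamic VHD comes out about 10 MB larger than its fixed counterpart, and almost all of that is the per-block sector bitmaps.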

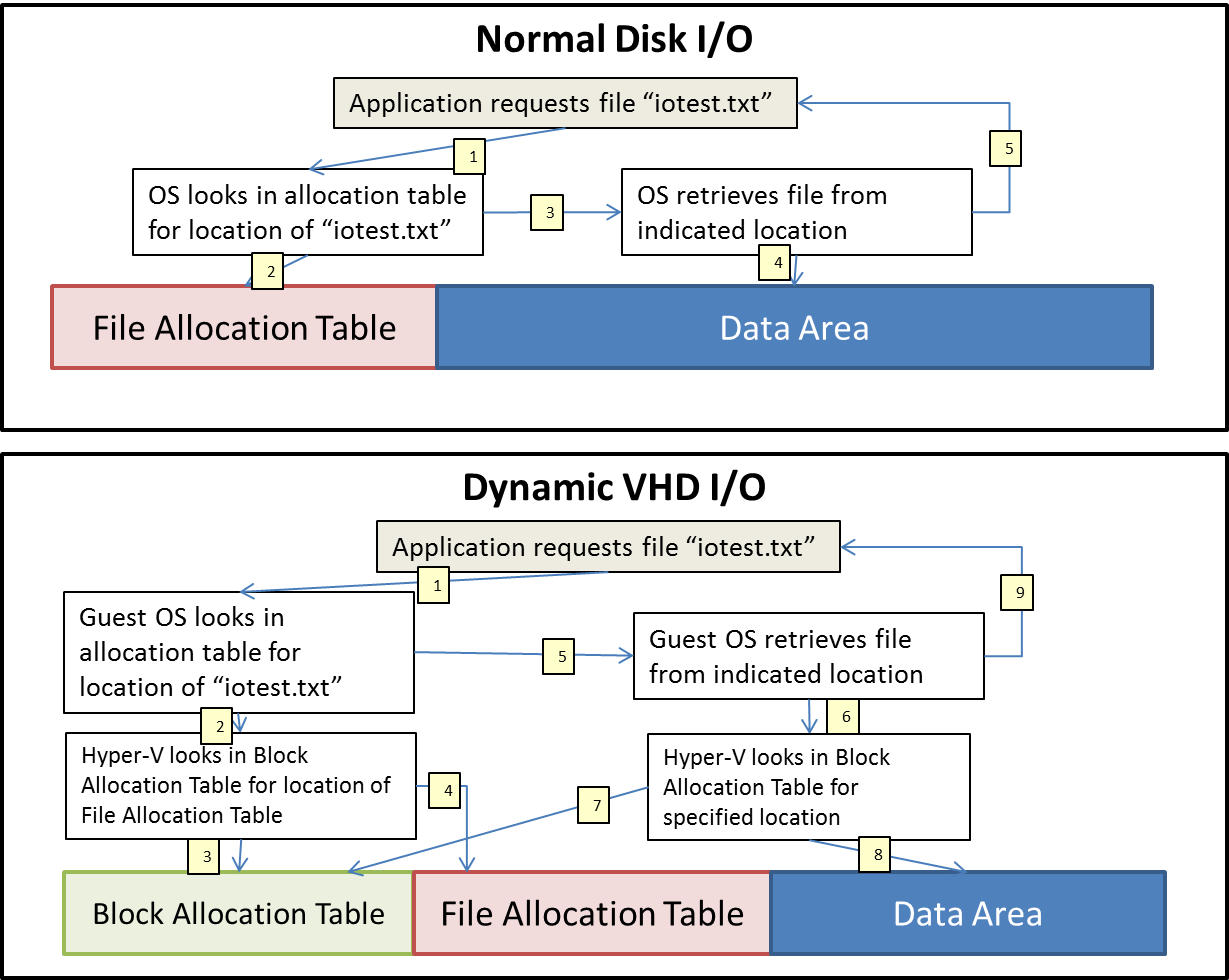

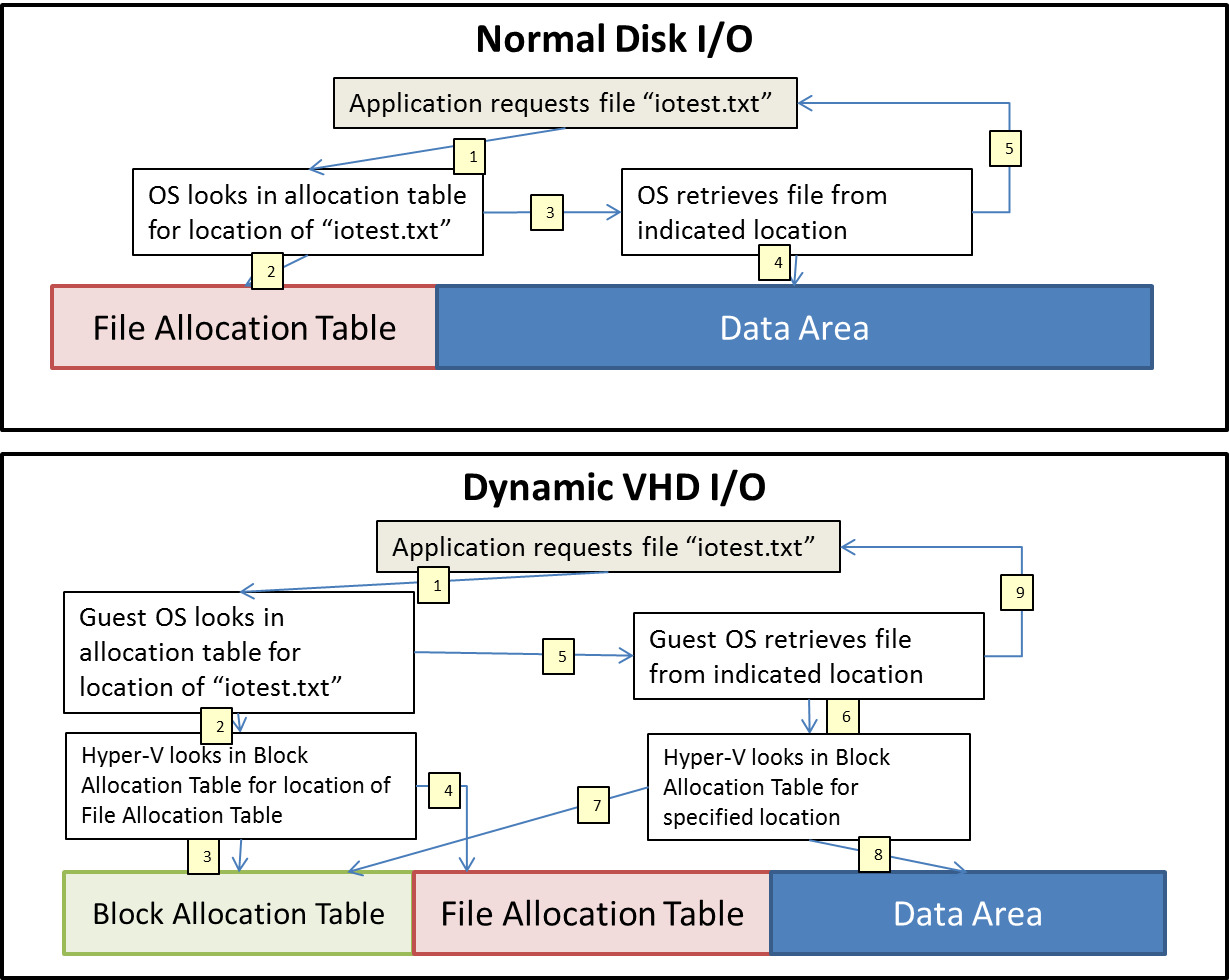

Generally, the biggest reason people avoid dynamic VHDs is the performance difference. The illustrations above show where that difference comes from.

Because hard drives are extremely slow compared to other computer components, file systems use an indexing mechanism (generically, a "file allocation table") to track where files reside on a drive. That way, the OS doesn't have to search for each file on access; it can quickly look up the file's location in the allocation table. For a regular disk or a fixed VHD, each location the guest file system refers to maps to the same place on the underlying media for the disk's entire life. It might hold a different file or different data over time, but the location itself never changes. A dynamic VHD, by contrast, only adds blocks as the guest operating system stores data, so there is no guarantee where any given block will end up within the VHD file. Therefore, every access requires an extra layer of lookup through the VHD's own block allocation table, as indicated by the graphic above. Even with that extra work, the operation is remarkably fast, but the difference can have a significant impact under high I/O loads.
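The extra step described above is a table lookup on every I/O. The Python sketch below shows the translation for the classic VHD format, assuming a 2 MB block size and a BAT already read into a list: each entry holds the starting sector of a block inside the VHD file, with 0xFFFFFFFF marking a block that has never been written. The function name and the example BAT values are hypothetical; a fixed VHD needs no such step because the guest offset is simply the file offset.

```python
BLOCK_SIZE = 2 * 1024 * 1024    # assumed dynamic VHD block size
SECTOR = 512
BITMAP_BYTES = 512              # sector bitmap stored in front of each 2 MB block
UNUSED = 0xFFFFFFFF             # BAT marker for an unallocated block


def guest_to_file_offset(guest_offset, bat):
    """Map a byte offset on the virtual disk to a byte offset in the VHD file.

    Returns None when the block is unallocated (reads of it return zeros).
    """
    index, within = divmod(guest_offset, BLOCK_SIZE)
    if index >= len(bat):
        raise ValueError("offset beyond the end of the virtual disk")
    entry = bat[index]
    if entry == UNUSED:
        return None
    # The BAT stores the block's starting sector; its data follows the bitmap.
    return entry * SECTOR + BITMAP_BYTES + within


# Illustrative BAT: block 0 never written, block 1 stored starting at file sector 4.
bat = [UNUSED, 4]
print(guest_to_file_offset(100, bat))                 # None
print(guest_to_file_offset(BLOCK_SIZE + 100, bat))    # 2660
```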

In general, guest operating systems are not aware that they are using a dynamic VHD, and individual virtualized applications are almost never aware of it. They fully believe they have 100% of the drive's indicated space at their disposal. If they ask for all that space, Hyper-V will provide it (as long as there is actually enough physical media to back it). They may choose to use that space as a scratch area and cause the VHD to grow faster than you had anticipated. One application that is known to do this is Microsoft's SQL Server. It will use the empty storage area to hold its temporary database. Hard drive utilities that perform tests by reading and writing to every sector will always cause the entirety of the dynamic VHD to be allocated (utilities of this type should never be used in a virtualized OS anyway). Applications such as these will generally make the benefits of dynamic VHDs moot, even if the reduced I/O performance isn't a problem.

The VHD file grows as the guest adds data. Since the VHD probably shares physical storage with other data, it is almost guaranteed to become fragmented. It is very easy to overstate the negatives of fragmentation, though. If your VHD lives on a multi-drive array, the performance impact is negligible. If the drives in that array are 10,000 RPM or faster, fragmentation poses no practical concern. However, if you have only one physical drive, or two in a mirror, and the spindle speed is 7,200 RPM or lower, fragmentation might become an issue. As always, expanding and upgrading the drive system will produce the best results and is preferable to all other approaches.
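Why growth on shared storage tends to fragment can be shown with a toy allocator. The Python sketch below is a deliberate simplification (a single append-only allocator handing out clusters, not how NTFS actually places data): it grows a dynamic VHD one cluster at a time while another file grows alongside it, and compares the result with a fixed VHD that claims all of its clusters at once.

```python
def count_extents(clusters):
    """Number of contiguous runs in a file's ascending cluster list."""
    runs = 0
    previous = None
    for c in clusters:
        if previous is None or c != previous + 1:
            runs += 1          # a gap starts a new extent (fragment)
        previous = c
    return runs


def simulate(vhd_clusters):
    """Return (fixed_extents, dynamic_extents) under the toy allocator."""
    next_free = 0
    fixed = list(range(next_free, next_free + vhd_clusters))   # allocated at creation
    next_free += vhd_clusters

    dynamic, other = [], []
    for _ in range(vhd_clusters):
        dynamic.append(next_free)   # the dynamic VHD grows by one cluster...
        next_free += 1
        other.append(next_free)     # ...then another file on the volume grows too
        next_free += 1
    return count_extents(fixed), count_extents(dynamic)


print(simulate(8))   # (1, 8)
```

Real file systems try hard to keep files contiguous, so actual fragmentation is usually far milder; the point is only that interleaved growth can fragment a file, while up-front allocation fixes the layout at creation time.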

Fixed VHDs

After pass-through and dynamic disks, fixed disks are essentially what you have left. When created, they allocate 100% of the indicated space on the underlying physical media. There is no block allocation table to be concerned with, so the extra I/O load above a pass-through disk is only what occurs within the virtualization stack.

Fixed VHD Benefits

- Fastest VHD mechanism

- No potential for causing over-commitment collisions

- VHD file fragmentation stays at its creation-time level

Fixed VHD Drawbacks

- VM-level backups capture all space, even unused

- Larger size inhibits portability

Realistically, the biggest problems with this system appear when you need to copy or move the VHD. The VHD itself doesn't know which of its blocks are empty, so 100% of them have to be copied. Compression in your backup or copy software may not help much either, because empty blocks aren't necessarily devoid of data. In the guest's file system, "deleting" a file just removes its reference in the file allocation table. The blocks themselves, and the data within them, remain unchanged until another file's data is saved over them.
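The effect on compression is easy to demonstrate. This Python sketch uses the standard `zlib` module to compress 1 MB of zeros (what a never-used, zero-filled region looks like) and 1 MB of random bytes standing in for stale data left behind by deleted files. Random data is a worst-case assumption; real leftover data may compress somewhat better, but not nearly as well as zeros.

```python
import os
import zlib

SIZE = 1024 * 1024                    # 1 MB sample region

never_used = bytes(SIZE)              # zero-filled, as in a newly created fixed VHD
deleted_files = os.urandom(SIZE)      # stand-in for stale data from deleted files

zeros_out = len(zlib.compress(never_used))
stale_out = len(zlib.compress(deleted_files))

print(f"zeros: {zeros_out} bytes, stale data: {stale_out} bytes")
```

The zero-filled region shrinks to roughly a kilobyte, while the "deleted" data barely shrinks at all, even though the guest considers both regions empty.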

Recommendations and Guidelines

It's very easy to say that if you have the space, you should always use fixed VHDs. In fact, that advice is given regularly. Unfortunately, it does not consider the backup and portability concerns. Accept that there isn't necessarily a simple one-size-fits-all answer, and think through your situation. Here are some pointers you can follow.

- Always use a fixed VHD for high I/O applications like Exchange and SQL Server. Even if you won't be placing as much burden on these applications as they were designed to handle, they always behave as though they need a lot of I/O and are liberal in their usage of drive space. If space is a concern, start with a small VHD; you can expand it if necessary.

- If you aren’t certain, try to come up with a specific reason to use fixed. If you can’t, then use dynamic. Even if you lose that gamble, you can always convert the drive later. It will take some time to do so (dependent upon your hardware and the size of the VHD), but the odds are in your favor that you won’t need to make the conversion.

- A single virtual machine can have more than one VHD and it is acceptable to mix and match VHD types. Your virtualized SQL server can have a dynamic VHD for its C: drive to hold Windows and a fixed VHD for its D: drive to hold SQL data.

- If you use dynamic drives in an overcommitted environment, set up a monitoring system or a schedule to keep an eye on physical space usage. If the drive dips below 20% free space, the VSS writer may not activate, which can cause problems for VM-level backups. If the space is ever completely consumed, Hyper-V will pause all virtual machines with active VHDs on that drive.
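A monitoring check for the 20% guideline can be very small. The Python sketch below uses the standard library's `shutil.disk_usage` to read total and free space for the volume that holds the VHDs; the path, the threshold default, and the function names are assumptions to adapt, and scheduling and alerting are left to whatever tool you already use.

```python
import shutil

FREE_SPACE_THRESHOLD = 0.20   # guideline from the text: stay above 20% free


def free_fraction(total_bytes, free_bytes):
    """Fraction of the volume that is still free."""
    return free_bytes / total_bytes


def check_volume(path, threshold=FREE_SPACE_THRESHOLD):
    """Return (ok, fraction_free) for the volume containing `path`."""
    usage = shutil.disk_usage(path)            # named tuple: total, used, free
    fraction = free_fraction(usage.total, usage.free)
    return fraction >= threshold, fraction


if __name__ == "__main__":
    # "." is a placeholder; point this at the volume that stores your VHDs.
    ok, fraction = check_volume(".")
    status = "OK" if ok else "LOW: below 20% free, VSS-based backups at risk"
    print(f"{fraction:.1%} free - {status}")
```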


531 thoughts on "Hyper-V Guest Design: Fixed vs. Dynamic VHD"

[…] people who are confused by the disk options in Hyper-V. Altaro have updated their blog with a post, discussing the merits of passthrough (raw) disk, fixed VHD, and dynamic VHD and it’s worth a […]

I have a question for you: I have a dynamically allocated 200 GB VMDK (thin provisioned) in VMware ESXi. The guest OS uses 50 GB, but the system shows 200 GB because I had to perform an operation that used most of the disk space, and then I deleted the results of that processing.

Anyway, even though the guest OS is now back down to 50 GB, I cannot reclaim the remaining 150 GB by shrinking. The only solution they gave me is to clone (at OS level) the guest to a new dynamic VM, or to move to a thick-provisioned (static) disk and then shrink.

Does Hyper-V have a better way to reclaim that disk space without losing the dynamically expanding ability?


@Marco:

The tools included in ESXi should do what you want, so there may be more going on than just a hypervisor issue. Make sure you run a CHKDSK /R within the guest before attempting to shrink.

To directly answer your question, as long as everything within the guest is OK, Hyper-V’s shrink utility should release it and allow you to reclaim that space.

[…] Altaro guys are writing another important post about Hyper-v Guest design Check there […]

When backing up a fixed disk, all sectors may have data in them.

What if you do a full format of the drive in Windows before putting the VHD on it? In that case, does Windows zero out the disk as part of the full format, which would allow a backup program to compress it effectively?

Thanks,

Kirk B.

Unless something has changed since the DOS days and I wasn’t notified, a full format does not zero the sectors. However, Hyper-V does write out an explicitly all-zero file when it’s instructed to create a fixed VHD/X.

Hi, great in-depth article! I might have missed this, but what about using a fixed-size VHDX when the back-end storage is thin-provisioned? Is this supported, or would it cause performance or other issues? It seems to trick the OS and VMs into thinking it's fixed size when it really isn't at the hardware level. Any insights on this are greatly appreciated.

If you create the VHDX as fixed, Hyper-V will actively write a zero to every allocated block, which means that the back end will be expanded to that size.