
Teaming and MPIO for connecting to iSCSI storage has been a confusing issue for a very long time. With native operating system support for teaming and the introduction of SMB 3.0 shares in Windows/Hyper-V Server 2012, it all got murkier. In this article, I’ll do what I can to clear it up.

Teaming

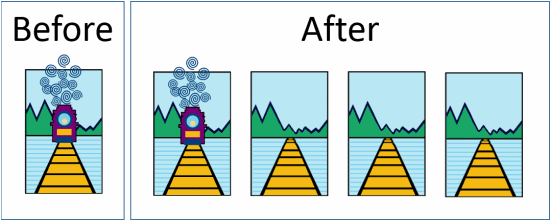

The first thing I need to do is explain what teaming really does. We’re going to look at a completely different profession: train management. Today, we’re going to meet John. John’s job is to manage train traffic from Springfield to Centerville. John notices that the 4 o’clock regular takes 55 minutes to make the trip. John thinks it should take less time. So, John orders the building of three new tracks. On the day after completion, the 4 o’clock regular takes… can you guess it? 55 minutes to make the trip. Let’s see a picture:

Well, gee, I guess you can’t make a train go faster by adding tracks. By the same token, you can’t make a TCP stream go faster by adding paths. What John can now do is have up to four 4 o’clock regulars all travel simultaneously.
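To make John's problem concrete, here is a minimal sketch of how a hash-based team might choose a path. The hash below is a toy of my own invention (an XOR of the addresses and ports), not the algorithm Windows actually uses, and the track names and IPs are made up for illustration.

```python
import ipaddress

def pick_team_member(src_ip, src_port, dst_ip, dst_port, members):
    """Toy address hash: the same 4-tuple always maps to the same team member."""
    key = (
        int(ipaddress.ip_address(src_ip))
        ^ int(ipaddress.ip_address(dst_ip))
        ^ src_port
        ^ dst_port
    )
    return members[key % len(members)]

tracks = ["track-1", "track-2", "track-3", "track-4"]

# One stream: every packet carries the same addresses and ports,
# so all 1000 packets ride the same track.
one_stream = {
    pick_team_member("10.0.10.1", 50000, "10.0.10.100", 3260, tracks)
    for _ in range(1000)
}

# Four streams that differ only in source port can use all four tracks.
four_streams = {
    pick_team_member("10.0.10.1", port, "10.0.10.100", 3260, tracks)
    for port in range(50000, 50004)
}

print(len(one_stream), len(four_streams))  # prints: 1 4
```

The single stream never leaves its track, no matter how many tracks exist. Only additional streams can take advantage of the extra ones.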

iSCSI and Teaming

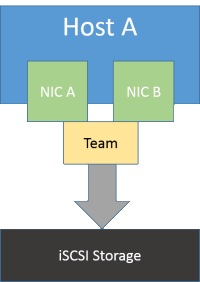

In its default configuration, iSCSI only uses one stream to connect to storage. If you put it on a teamed interface, it uses one path. Also, putting iSCSI on a team has been known to cause problems, which is why it’s not supported. So, you cannot do this:

Well, OK, you can do it. But then you’d be John. And you’d have periodic wrecks. What you want to do instead is configure multi-path (MPIO) in the host. That will get you load-balancing, link-aggregation, and fault-tolerance for your connection to storage, all in one checkbox. It’s a built-in component for Windows/Hyper-V Server at least as early as 2008, and probably earlier. I wrote up how to do this on my own blog back in 2011. The process is relatively unchanged for 2012.

Your iSCSI device or software target may have its own rules for how iSCSI initiators connect to it, so make sure that it can support MPIO and that you connect to it the way the manufacturer intends. For the Microsoft iSCSI target, all you have to do is provide a host with multiple IPs and have your MPIO client connect to each of them. For many other devices, you team the device’s adapters and make one connection per initiator IP to a single target IP. Teaming at the target usually works out, although it is possible that it won’t load-balance well.

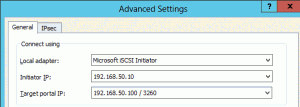

To establish the connection from my Hyper-V/Windows Server unit, I go into iscsicpl.exe and go through the discovery process. Then I highlight an inactive target and use “Properties” and “Add Session” instead of “Connect”. I check “Enable multi-path” and “Advanced”. Then I manually enter all IP information:

I then repeat it for other initiator IPs and target IPs, one-to-one if the target has multiple IPs or many-to-one if it doesn’t. In some cases, the initiator can work with the portal to automatically figure out connections, so you might be able to get away with just using the default initiator and the IP of the target, but this doesn’t seem to behave consistently and I like to be certain.
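As a planning aid, the pairing rule above can be sketched in a few lines. This is only an illustration: `plan_sessions` is a hypothetical helper, the IPs are examples, and refusing mismatched counts is my own simplification. It does not talk to the iSCSI initiator; it just lists the sessions you would add by hand.

```python
def plan_sessions(initiator_ips, target_ips):
    """Return the (initiator IP, target IP) pairs to add as iSCSI sessions."""
    if len(target_ips) == 1:
        # Many-to-one: every initiator IP connects to the single target IP.
        return [(ip, target_ips[0]) for ip in initiator_ips]
    if len(initiator_ips) != len(target_ips):
        # Simplification for this sketch: one-to-one needs matching counts.
        raise ValueError("one-to-one pairing needs equal numbers of IPs")
    # One-to-one: first initiator IP to first target IP, and so on.
    return list(zip(initiator_ips, target_ips))

# Target with a single (possibly teamed) IP.
print(plan_sessions(["10.0.10.1", "10.0.10.2"], ["10.0.10.100"]))

# Target with one IP per adapter.
print(plan_sessions(["10.0.10.1", "10.0.10.2"], ["10.0.10.100", "10.0.10.101"]))
```

Each pair in the result corresponds to one pass through “Add Session” with “Enable multi-path” checked.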

Teaming and SMB 3.0

SMB 3.0 has the same issue as iSCSI. The cool thing is that the solution is much simpler. I read somewhere that for SMB 3.0 multi-channel to work, all the adapters have to be in unique subnets. In my testing, this isn’t true. You open up a share on a multi-homed server running SMB 3.0 from a multi-homed client running SMB 3.0 and SMB 3.0 just magically figures out what to do. You can’t use teaming for it on either end, though. Well, again, you can, but you’ll be John. Don’t be John. Unless your name is really John, in which case I apologize for not picking a different random name.

But What About the Virtual Switch?

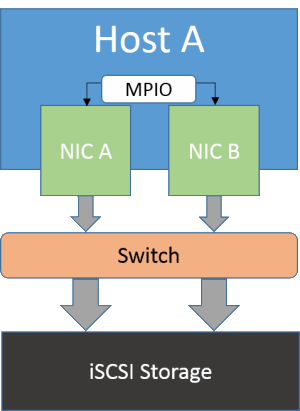

Here’s where I tend to lose people. Fasten your seat belts and hang on to something: you can use SMB 3.0 and MPIO over converged fabric. “But, wait!” you say, “That’s teaming! And you said teaming was not supported for any of this!” All true. Except that there’s a switch in the middle here and that makes it a supported configuration. Consider this:

This is a completely working configuration. In fact, it’s what most of us have been doing for years. So, what if the switch happens to be virtual? Does that change anything? Well, you probably have to add a physical switch in front of the final storage destination, but that doesn’t make it unsupported.

Making MPIO and SMB 3.0 Multichannel Work Over Converged Fabric

The trick here is that you must use multiple virtual adapters the same way that you would use multiple physical adapters. They need their own IPs. If it’s iSCSI, you have to go through all the same steps of setting up MPIO. When you’re done, your virtual setup on your converged fabric must look pretty much exactly like a physical setup would. And, of course, your converged fabric must have enough physical adapters to support the number of virtual adapters you placed on it.

Of course, this does depend on a few factors all working. Your teaming method has to make it work and your physical switch has to cooperate. The team under my virtual switch is currently in Hyper-V Port mode and both MPIO and SMB 3.0 multi-channel appear to be working fine. If you aren’t so lucky, then you’ll have to break your team and use regular MPIO and SMB 3.0 multichannel the old-fashioned way.

There is one other consideration for SMB 3.0 multichannel. The virtual adapters do not support RSS, which can reportedly accelerate SMB communication by as much as 10%. But…

Have Reasonable Performance Expectations

In my experience, people’s storage doesn’t go nearly as fast as they think it does. Furthermore, most people aren’t demanding as much of it as they think they are. Finally, it seems like a lot of people dramatically overestimate what sort of performance is needed in order to be acceptable. I’ve noticed that when I challenge people who are arguing with me to show me real-world performance metrics, they are very reluctant to show anything except file copies, stress-test results, and edge-case anecdotes. It’s almost like people don’t want to find out what their systems are actually doing.

So, if I had only four NICs in a clustered Hyper-V Server system and my storage target was on SMB 3.0 and it had two or more NICs, I would be more inclined to converge all four NICs in my Hyper-V host and lose the power of RSS than to squeeze management, Live Migration, cluster communications, and VM traffic onto only two NICs. I gain superior overall load-balancing and redundancy at the expense of a 10% or less performance boost that I would have been unlikely to notice in general production anyway. It’s a good trade. Now, if that same host had six NICs, I would dedicate two of them to SMB 3.0.


40 thoughts on "Teaming and MPIO for Storage in Hyper-V 2012"

Hi, can we set the storage policy like we can in VMware vCenter? Round robin, MRU, that kind of thing. Thanks.

Absolutely, although it’s not as easy to get to. If you’re using a GUI version of Windows with Hyper-V as a role, access the properties of the disk in Disk Management. Use MPCLAIM otherwise. This post is a little old, but I think everything still applies: http://etechgoodness.wordpress.com/2011/12/16/iscsi-disk-appears-two-or-more-times-in-disk-management/. Look in the “Verifying the Change and Setting MPIO Policies” section, about two-thirds of the way down.

Thanks for the information.

I stumbled upon this article after having some issues because of doing it the wrong way. The setup was fast and stable, but our management VLAN wasn’t usable anymore on the virtual switch because it was a team interface. After changing it to the right way (with the virtual switch), it worked. Maybe you could write an article about the proper setup and why (a lot of articles don’t explain the why part).

We have used Altaro Hyper-V Backup since our 2008 Hyper-V cluster and continue to do so for the 2012 version, but is there any benefit for the Altaro software with SMB 3.0 shares? Will this automatically/magically work for Altaro backup?

Hi Tonnie,

That sounds like a good idea for an article. I’ll get that queued up.

I’m going to defer the question about the Altaro Hyper-V Backup product to an expert on that team. Someone is already looking into your question.

Hi,

Great article.

I have a question:

I have QNAP TS-469 Pro which supports MPIO with 2 Ethernet ports.

Can I connect both ports to the same physical switch and enable MPIO?

Thanks in advance.

I think so. I’m going to try it next week

Thanks for the great article. Sorry I’m late to the party. I’m still a little confused. Is there any way to use 2 x 10G ports for a clustered iscsi implementation? In my mind I want a team with tagged vlans for guest access and share a management vlan to the host. But I want/need 2 NICs/IPs for the host os for iscsi. I can’t find a way to get that with less than 4 NICs. 2 teamed and two unteamed.

Well, if you want dedicated NICs, then four minimum is correct. But 2x10Gb is a monster set of pipes. I’d make the team and a couple of vNICs in the management OS for iSCSI, configure MPIO, and call it a day.

I’m confused as well… so how do you go about setting up iSCSI MPIO if you’ve teamed 2x 10G NICs? Once they’re teamed, you can create virtual interfaces for the individual vLANs, but I’m not seeing how I can create multiple vNICs to handle the MPIO, unless you’re talking about re-enabling the protocols on the physical interfaces and running MPIO over that.

In this article, I’m talking about creating a Hyper-V virtual switch on top of the team. The vNICs are then created on that switch. That solution only applies if you’re using Hyper-V, of course.

Do not re-enable the protocols on the individual pNICs. That will almost certainly end in disaster.

If you aren’t using Hyper-V, you could get a similar outcome by creating additional logical NICs on the team. That would be a problem for some iSCSI systems because each tNIC must be in its own VLAN.

Another option would be to simply add another IP address to the single logical team NIC. I’ve never tried doing that for MPIO purposes, but the theory is sound.

That’s what was throwing me off. So you’re talking about just the VMs being able to access the shared storage with MPIO or am I missing something and the hosts are still going to communicate to the SAN via shared management or something? Sorry but I’m just trying to wrap my head around this one.

No, this is not for VM connectivity. You could do that, if you were so inclined, but that’s not what I’m talking about.

The management OS gets its own set of vNICs just for iSCSI. So, before, you would have had a couple of pNICs in a team for the VMs and a couple of unteamed pNICs for iSCSI. Now you have all virtual NICs. It’s a virtual version of the common physical design.

Ok… things are slowly coming out of the fog… so on a bare config:

– create team of pNICs

– create vSwitch using team as attachment to network

– create 2x vNICs on vSwitch on SAN vLAN

– create 1x vNIC on vSwitch on Heartbeat vLAN

– create 1x vNIC on vSwitch for Management/VMs on LAN vLAN

– configure MPIO over 2x vNICs attached to the SAN vLAN

Sound right?

Yep! Although the “Management/VMs” line will just be “Management”. VMs have their own vNICs and don’t need representation within the management OS 🙂

Do the 2 vNICs on the vSwitch for iSCSI, when using MPIO, need to be in separate VLANs? Same VLAN but separate IP subnets? Same VLAN and same IP subnet? I can’t seem to find a definitive answer on this for which is the better way to go, even if they all “work”.

On one hand, I could configure the Hyper-V cluster member with two iSCSI vNICs, in either the same iSCSI VLAN or separate iSCSI VLANs (is one better over the other?):

Initiator vNIC1: 10.0.10.1/24 (VLAN 10)

Initiator vNIC2: 10.0.11.1/24 (VLAN 10, or 11 if different VLANs)

Target NIC1: 10.0.10.100/24 (VLAN 10)

Target NIC2: 10.0.11.100/24 (VLAN 10, or 11 if different VLANs)

…[additional target interfaces]…

OR, I could keep everything in the same VLAN and same IP subnet:

Initiator vNIC1: 10.0.10.1/24 (VLAN 10)

Initiator vNIC2: 10.0.10.2/24 (VLAN 10)

Target NIC1: 10.0.10.100/24 (VLAN 10)

Target NIC2: 10.0.10.101/24 (VLAN 10)

…[additional target interfaces]…

Thoughts?

I know a lot of people that split their iSCSI across multiple subnets and VLANs. Personally, I don’t. Really all you get for your effort is some protection against broadcast attacks. All of my iSCSI networks are isolated anyway. Any attacker will almost certainly have gone after easier fruit.
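One practical difference between the two layouts in the question is how many initiator/target pairs can reach each other without routing. Here is a small sketch using Python's standard `ipaddress` module and the addresses from the question. The helper name `same_subnet_pairs` is mine, and it only compares subnets; it says nothing about VLAN membership or what your target supports.

```python
import ipaddress
from itertools import product

def same_subnet_pairs(initiators, targets):
    """List (initiator IP, target IP) pairs that share an IP subnet."""
    pairs = []
    for ini, tgt in product(initiators, targets):
        ini_if = ipaddress.ip_interface(ini)
        tgt_if = ipaddress.ip_interface(tgt)
        if ini_if.network == tgt_if.network:
            pairs.append((str(ini_if.ip), str(tgt_if.ip)))
    return pairs

# Layout 1: two subnets, one per initiator/target pair.
split = same_subnet_pairs(
    ["10.0.10.1/24", "10.0.11.1/24"],
    ["10.0.10.100/24", "10.0.11.100/24"],
)

# Layout 2: everything in one subnet.
flat = same_subnet_pairs(
    ["10.0.10.1/24", "10.0.10.2/24"],
    ["10.0.10.100/24", "10.0.10.101/24"],
)

print(len(split), len(flat))  # prints: 2 4
```

With two subnets, each initiator IP can only pair with the target IP in its own subnet. With one subnet, every initiator IP can pair with every target IP.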

Got it! So if I understand correctly, 2 vNICs and multiple iSCSI target interfaces on:

•1 storage VLAN, 1 IP subnet

•1 storage VLAN, 2 IP subnets

•2 storage VLANs, 2 IP subnets (one per VLAN)

will all provide equal benefit and “true” multipathing capability? One’s not better than the other? They’ll all be far better than just one vNIC communicating with multiple target interfaces on the same SAN with MPIO enabled?

Right, at least in my experience. I have read some material insisting on option 3 but I have not seen anything concrete to support it.

Great article. I also read the Microsoft TechNet blog and was a little surprised that iSCSI can be handled via vNICs. Am I right that in your newer post you favor giving iSCSI its own physical NICs, or is that obsolete if you have one fat converged team? We actually use HPE FlexFabric NICs, not Mellanox, and I’m not sure whether HPE is absolutely stable with drivers, etc.

There are two branches.

I need an iSCSI LUN directly in one VM. I know it’s better to do everything at the host level, but would this scenario be supported:

a vNIC in the VM (an additional network card)

then bind the iSCSI initiator session to this vNIC

Regards

It’s supported. I strongly encourage you to look into re-architecting to use VHDX, though.

What is the point of creating vNICs for MPIO if the vNICs are connected to a vSwitch with teamed interfaces? We could use MPIO or teaming to access iSCSI; there is no point in using both. MPIO should use different physical interfaces to be truly multipath, but when you create a team, you don’t really control which vNIC corresponds to which hardware NIC at a given time. Or am I wrong?

MPIO uses layer 3 for pathing, where teaming is layer 2. If you just use a team and do not enable MPIO, then you don’t have multi-pathing. If you have a single VNIC for iSCSI on a teamed switch, it still won’t use multi-pathing. You need the additional VNIC to create an additional IP endpoint to give MPIO choices. There is never a guarantee the team WILL separate the traffic, but having different IP endpoints (for dynamic or hash-based load-balancing) on different VNICs (for dynamic or Hyper-V port load-balancing) gives the team an opportunity to try.

Thank you so much for this great article, I know it has been ages since you published it, but this is pure gold!

How many sessions would you recommend if using many-to-one towards a single initiator IP and single target IP? Currently we have 1 active and 1 passive for failover and are wondering if adding more (either active or passive) sessions makes any difference. Our storage is a single target with teamed NICs.

Thank you!

If the target has only one IP, then any connections beyond the first are only for redundancy.

Thank you Eric!

Since, in this scenario, the second session is a failover policy we still have a single point of failure on the host’s single NIC and we are limited to speed of that NIC towards storage, correct?

So if we add vNICs to hosts and repeat the MPIO setup…what are we really gaining here? Redundancy of NICs only or can these subsequent MPIO sessions be used as Round Robin/load balancing? Are we increasing a total pipe towards storage?

I guess I am getting stuck on understanding the performance benefit of MPIOs per single vNIC (essentially per physical NIC) vs. NIC teaming with LACP.

In our case we have hosts with 4 physical NICs:

-1 NIC for iSCSI to storage

-3 teamed up NICs for VMs.

What setup would you recommend for hosts in this case if goal is to have the best performance/pipe to Storage with teamed NICs and single IP? (I guess we would break the team on the storage if needed)

Thanks again!

Clear everything that you know about all of this. MPIO is a layer 3 technology. It understands IP addresses and nothing else. It doesn’t know anything about NICs or backing paths or teaming or anything at all. Just IPs. So, if you have two IPs on your initiator and one IP on your target, then it will create two paths. If you have a round robin load balancing policy, then it will alternate between the two paths. But, because MPIO is IP only, you have absolutely no way to know if it will use both sides of the target team or not. The intermediate hardware will decide. I would expect that sometimes it will load balance and sometimes it won’t.

But, if you have two initiator IPs and two target IPs, then you can create four paths. If you have no teaming in the way, then you can effectively guarantee that load balancing will happen as evenly as your MPIO policy allows.
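The path counting and the round robin behavior can be sketched like this. It is a simplified model, not MPIO's actual implementation: `build_paths` and `round_robin` are illustrative names, the IPs are examples, and real round robin policies may weigh paths differently.

```python
from collections import Counter
from itertools import cycle, islice, product

def build_paths(initiator_ips, target_ips):
    """MPIO only sees IPs, so a path is just an (initiator IP, target IP) pair."""
    return list(product(initiator_ips, target_ips))

def round_robin(paths, io_count):
    """Assign io_count I/Os to paths in strict rotation."""
    return list(islice(cycle(paths), io_count))

# Two initiator IPs, one target IP: two paths.
two_paths = build_paths(["10.0.10.1", "10.0.10.2"], ["10.0.10.100"])

# Two initiator IPs, two target IPs: four paths.
four_paths = build_paths(["10.0.10.1", "10.0.10.2"], ["10.0.10.100", "10.0.10.101"])

usage = Counter(round_robin(four_paths, 8))
print(len(two_paths), len(four_paths), sorted(usage.values()))  # prints: 2 4 [2, 2, 2, 2]
```

Notice that nothing in this model knows about NICs or teams. Whether two different paths end up on two different physical links is decided by whatever sits underneath.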

As described, you have only one physical pathway on the initiator. So, no matter how many paths you create, you can never exceed 1 gigabit on any host. If anything, MPIO will make that slower because it has to do some calculations to choose a path and to keep the bits in order.
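As rough arithmetic, the ceiling is whichever side has less total physical bandwidth. The function below is a deliberately crude sketch with a made-up name; it ignores protocol overhead, switch limits, and how well the load actually balances.

```python
def storage_ceiling_gbps(initiator_links_gbps, target_links_gbps):
    """Upper bound on host-to-target throughput, ignoring all overhead."""
    return min(sum(initiator_links_gbps), sum(target_links_gbps))

# One 1 Gb pNIC on the host, two teamed 1 Gb NICs on the target.
print(storage_ceiling_gbps([1.0], [1.0, 1.0]))  # prints: 1.0

# Two 1 Gb pNICs on the host, same target.
print(storage_ceiling_gbps([1.0, 1.0], [1.0, 1.0]))  # prints: 2.0
```

The number of MPIO paths is not an input at all, which is the point: adding sessions over the same single pNIC cannot raise the ceiling.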

What I would do for now is leave everything as-is and put some network monitoring in place. If most traffic moves over the storage network, consider changing that configuration. If most traffic moves over the VM network, then just leave it alone. Also, on your hosts, configure a performance monitor to watch for queue depth on your disks. Sustained queue depths deeper than 2 signify a problem.
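Once you have queue depth samples exported from your monitoring tool, a check for the sustained condition could look like the sketch below. The threshold of 2 comes from the rule of thumb above; treating five consecutive samples as “sustained” is purely my assumption for illustration, and the sample values are invented.

```python
def sustained_over(samples, threshold=2.0, min_run=5):
    """True if at least min_run consecutive samples exceed threshold."""
    run = 0
    for depth in samples:
        # Extend the current run on a deep sample, reset it otherwise.
        run = run + 1 if depth > threshold else 0
        if run >= min_run:
            return True
    return False

# Short spikes are normal and should not trigger.
spiky = [0.5, 6.0, 0.3, 4.0, 0.2, 5.0, 0.4]

# A long stretch above the threshold should.
busy = [0.5, 3.0, 3.5, 4.0, 3.2, 2.8, 0.6]

print(sustained_over(spiky), sustained_over(busy))  # prints: False True
```

Tune `min_run` to your sampling interval; five samples at one per second is a very different thing from five samples at one per minute.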