This entry in our series on storage for Hyper-V is devoted to the ways that a Hyper-V host can connect to storage. This will not be a how-to guide, but an inspection of the technologies.

Part 1 – Hyper-V storage fundamentals

Part 2 – Drive Combinations

Part 3 – Connectivity

Part 4 – Formatting and file systems

Part 5 – Practical Storage Designs

Part 6 – How To Connect Storage

Part 7 – Actual Storage Performance

Don’t Overdo It

The biggest piece of advice I hope all readers take away from this article is: do not over-architect storage connectivity. As you saw in part one, hard drives are slow. Even when we get a bunch of them working in parallel, they’re still slow. As you saw in my anecdote in part two, even people who should know better will expend a lot of effort trying to create ultra-fast connections to ultra-slow hard drives. Unless you have racks and cabinets brimming with hard drive enclosures and SANs, installing 16Gb/s Fibre Channel switches and 10 GbE switches to connect to storage is a waste of money. Your primary architectural goals for storage connectivity are stability and redundancy. As for speed, figure out how much data your host systems need to move and how much your drive systems are capable of moving before you even start to worry about how fast your connectivity is. I guarantee that most people will be surprised to see just how little they actually need.

As a point of comparison, I managed a Hyper-V Server system with about 35 active virtual machines that included Exchange, multiple SQL Servers, a corporate file server, and numerous application servers for about 300 users. There was a single backing SAN with 15 disks and a pair of 1GbE connections. The usage monitor indicated that the iSCSI link was generally near flat and almost never exceeded 1Gb/s in production hours. The only time it got any real utilization was during backup windows. Even then, it was only as full as the backup target could handle. My storage network almost never reached its bandwidth capacity.
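To make the "figure out how much your drive systems are capable of moving" step concrete, here is a back-of-the-envelope sketch. The per-disk IOPS and I/O size are assumed round figures for spinning disks, not measurements from the system described above, and the function names are purely illustrative. It also ignores RAID penalties and caching, so treat it as a rough ceiling rather than a prediction.

```python
def array_throughput_mbps(disks, iops_per_disk, io_size_kb):
    """Rough ceiling for random I/O throughput of a disk array, in megabits/s."""
    bytes_per_second = disks * iops_per_disk * io_size_kb * 1024
    return bytes_per_second * 8 / 1_000_000


def link_utilization(array_mbps, links, link_speed_mbps):
    """Fraction of the combined link capacity the array could fill."""
    return array_mbps / (links * link_speed_mbps)


# Assumed figures: 15 spinning disks, ~150 random IOPS each, 8 KB I/Os.
array_mbps = array_throughput_mbps(disks=15, iops_per_disk=150, io_size_kb=8)
utilization = link_utilization(array_mbps, links=2, link_speed_mbps=1000)

print(f"Array ceiling: {array_mbps:.0f} Mb/s")
print(f"Share of 2 x 1GbE: {utilization:.0%}")
```

With these assumed inputs, the array tops out at roughly 147 Mb/s of random I/O, which is a small fraction of what a pair of 1GbE links can carry. Large sequential transfers will push the number higher, so plug in your own measurements before drawing conclusions.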

Fibre Channel

Fibre Channel (FC) is a fast and dependable way to connect to external storage. Such external storage will almost exclusively be mid- to high-end SAN equipment. The FC protocol is designed to be completely lossless, which means there is very little overhead. This allows FC communications to run at very nearly the maximum rated speed of the equipment (4, 8, and 16 Gbps are the most common speeds).

Historically, FC was the premier method to connect to storage. External storage was considered a top-shelf technology under any circumstances, and nothing else had anything near FC’s speed and reliability. That is still true today, but Ethernet is closing the gap rapidly. The issue is that FC has always been very expensive. It gets even more expensive when FC switches are required. Many FC SANs allow multiple systems to connect directly without going through a switch, but this limits the number of connected hosts and removes a few options. For instance, you might want your hosts to connect to multiple SANs or you might want to employ some form of SAN-level replication. These are going to need switching hardware.

Another issue with FC is that it’s really its own world. It requires understanding and implementation of World Wide Names and masking and other terms and technologies. They aren’t terribly difficult, but you can’t really bring in other knowledge and apply it to FC’s idiosyncrasies. A serious side effect of this is that you can’t really find a lot of generic information on connecting a host to an FC device. You pretty much need to work from the manufacturer’s documentation. For a new system, that’s not such a big deal; it probably (hopefully!) comes with the equipment. If you inherit a device, especially an older one that the manufacturer has no interest or incentive in helping you with, you might be facing an uphill battle. As the data center becomes flatter and administrators are expected to know more about a wider range of technologies, having portability of knowledge is more important.

When configuring FC, the best advice I can give you is to follow the manufacturer’s directions. You do want to avoid using any equipment that converts the FC signal. You can find FCoE (Fibre Channel over Ethernet) devices, but please don’t use them for storage. The FC equipment believes that its signal will be received and doesn’t deal with loss very well, so converting FC into Ethernet and back can cause serious headaches (read that as “data loss”).

iSCSI

iSCSI is, in some ways, a successor to FC (although it’s a bit of a stretch to think that it will replace FC). It’s not as fast or as reliable, but it’s substantially cheaper. Instead of requiring expensive FC switches and arcane configurations, it works on plain, standard Ethernet switches. If you understand IP at all, you already know enough to begin deploying and administering iSCSI.

What hurts iSCSI is that it relies on the TCP/IP protocol. This protocol was designed specifically to operate across unreliable networks, and as such has a great deal of overhead to enable detection and retransmission of lost packets and resequencing of packets that arrive out of order. Even if all packets show up and are in the correct sequence, that overhead is still present. This overhead exists in the form of control information added on to each packet and extra processing time in packaging and unpackaging transmitted data. It’s very easy to overstate the true impact of this overhead, but it’s foolish to pretend that it’s not there.

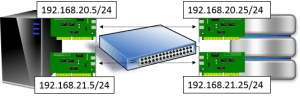

A pitfall that some administrators fall into is treating iSCSI traffic like all other TCP/IP traffic. Their configurations usually work, just not as well as they could. The most common mistake is allowing iSCSI traffic to cross a router. A very common piece of advice given is to segregate iSCSI devices and hosts into separate subnets. This makes sense. This is often extended into a recommendation that if the hosts and hardware have multiple NICs, to also place those into unique subnets. This quality of this advice is questionable, but if properly implemented, not harmful. The following image represents what this might look like.

Here, all switching is layer-2. What that means is that source and destination systems are separated solely by MAC address, which is in the header portion of the Ethernet frame where it’s easy for the switch to access. As long as the switch isn’t overloaded, layer-2 connectivity happens very rapidly. It’s so fast that it’s difficult to measure the impact of a single switch. The only real dangers here are overloading the switch, having too much broadcast traffic on the same network as the iSCSI traffic, or forcing iSCSI traffic to go through numerous switches. It’s a lot harder to overload switches than most people think, even cheap switches, but an easy fix is to just use switching hardware that is dedicated to iSCSI traffic. Broadcast traffic can be reduced by putting iSCSI traffic into its own subnet. If you like, you can further isolate it by placing it into its own VLAN. Finally, try to use no more than one switch between your hosts and your storage devices.

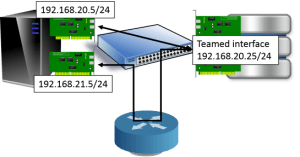

A problem arises when iSCSI initiators (clients) connect to iSCSI targets (hosts) that are on different subnets. This connection can only be made through a router. This often happens when the SAN’s adapters are teamed, won’t allow their adapters to participate on separate subnets, or the configuring administrator inadvertently connects the initiator to the wrong target. One possible mis-configuration is illustrated below:

The problem here is a matter of both latency and loss. The second adapter in the host cannot connect directly to the SAN’s team IP address. People often look at this and think, well, if the source adapter knows the MAC address of the destination adapter, why doesn’t it just send it there using layer-2? The answer is: that’s not how TCP/IP works. The source system looks at the destination IP address, looks at its own address and subnet mask, and realizes that it needs a router to communicate. The destination MAC address it’s going to use when it creates the Ethernet frame is that of its gateway, not the SAN’s teamed adapter. The layer-2 switch at the top will then send those frames to the gateway because, being only layer-2, it has no way to know that the ultimate destination is the SAN. When the gateway receives the data, it will have to dig below the Ethernet frame into the header of the IP packet to determine the destination IP address. It then has to repackage the packet into a new frame with the source and destination MAC addresses replaced and send it back to the layer-2 switch.
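The decision the source system makes can be sketched in a few lines using Python’s standard `ipaddress` module. This is only an illustration of the subnet comparison described above, not how any particular network stack is implemented. The `next_hop` function name and all of the addresses are hypothetical.

```python
import ipaddress


def next_hop(source_interface, destination, gateway):
    """Return the IP whose MAC address the sender puts on its Ethernet frame.

    source_interface: the sender's address with prefix, e.g. "192.168.51.10/24"
    destination: the target's IP address
    gateway: the sender's default gateway
    """
    local_network = ipaddress.ip_interface(source_interface).network
    if ipaddress.ip_address(destination) in local_network:
        return destination  # same subnet: frame goes straight to the target
    return gateway  # different subnet: frame goes to the router first


# Hypothetical addresses for illustration only.
print(next_hop("192.168.50.10/24", "192.168.50.100", "192.168.50.1"))
print(next_hop("192.168.51.10/24", "192.168.50.100", "192.168.51.1"))
```

The first call returns the target itself, so the traffic stays on layer-2. The second returns the gateway, which is the routed path you want to keep iSCSI traffic away from.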

Hopefully, it’s obvious that layer-3 communications (routing) are a more involved operation than layer-2 switching. Layer-3 delays are much easier to detect than layer-2, especially if the router is performing any form of packet inspection such as firewalling. Also, routers are much more inclined to drop packets than simple layer-2 switches are. Remember that in TCP/IP design, data loss is accepted as a given. As far as the router is concerned, dropping the occasional packet is not a big deal. For your storage connections, the extra delays and misplaced packets can accumulate to be a very big deal indeed.

1GbE vs. 10GbE iSCSI

10GbE is all the rage… for people who make money on equipment sales. 10GbE cards are not terribly expensive, but 10GbE switches are. Unless you’re absolutely certain that your storage can make decent use of 10GbE, you should favor multi-path 1GbE in your storage connectivity purchase decisions. Again: don’t over-architect your storage connectivity.

Jumbo Frames

A standard Ethernet frame occupies 1538 bytes on the wire, or 1542 with an 802.1Q VLAN tag, with 1500 bytes as the payload and the rest as Ethernet framing (preamble, header, frame check sequence, and inter-frame gap). That works out to between 2.5% and 3% overhead on each frame. That’s not a great deal, but superior ratios are available by employing jumbo frames. These allow an Ethernet frame to be as large as 9216 bytes. The number of overhead bytes remains the same, however, so it shrinks to a trivial fraction of the frame’s size. In aggregate, this means that far fewer frames are required to transmit the same amount of data. Less total data crosses the wire, increasing throughput. Another benefit is that packet processing is decreased, since fewer headers need to be packaged, processed, and unpacked. With modern adapters and switching hardware, the effects of the reduced processing aren’t as significant as in the past, but they are still present.
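The arithmetic is easy to check for yourself. The sketch below counts only untagged Ethernet framing (no VLAN tag, no TCP/IP or iSCSI headers) and assumes a 9000-byte jumbo payload, which is a common setting but not the only one. The function names are illustrative.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# preamble + start delimiter (8), header (14), frame check sequence (4),
# inter-frame gap (12).
ETHERNET_OVERHEAD = 8 + 14 + 4 + 12  # 38 bytes


def overhead_fraction(payload_bytes, overhead_bytes=ETHERNET_OVERHEAD):
    """Fraction of on-wire bytes that is not payload."""
    return overhead_bytes / (payload_bytes + overhead_bytes)


def frames_needed(total_bytes, payload_bytes):
    """Frames required to carry total_bytes, rounding up."""
    return -(-total_bytes // payload_bytes)


one_gib = 1024 ** 3
for label, payload in (("standard", 1500), ("jumbo", 9000)):
    print(
        f"{label}: {overhead_fraction(payload):.2%} overhead, "
        f"{frames_needed(one_gib, payload):,} frames per GiB"
    )
```

Under these assumptions, the framing overhead drops from about 2.5% to under 0.5%, and the number of frames needed per gibibyte falls from roughly 716,000 to roughly 119,000. The second figure is where the reduced packet processing comes from.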

Configuring jumbo frames for a standard network adapter is just a matter of changing its properties. Configuring in Server Core or for Hyper-V can be somewhat more complicated. I provided directions for 2012 in an earlier post. 2012 R2 is the same, except that, as I noted in my first impressions article, you no longer need to configure the switch. If you dig through this old document I wrote, you can find information on configuring jumbo frames for 2008 R2.

Internal and Direct-Attached Storage

External storage certainly isn’t the only way to connect to disk, although it usually gets most of the press just because of how large and complicated the subject can be. Internal and direct-attached storage are certainly viable for non-clustered Hyper-V hosts. If you employ virtual SAN technology, you can even use internal storage on one Hyper-V host that is a member of a cluster.

Internal storage doesn’t need much discussion. You can use a RAID system, Storage Spaces, or even a single disk, if performance and redundancy aren’t important. Direct-attached storage (DAS) can be a bit more complicated. You can use eSATA or SCSI, usually SAS in modern equipment. USB 3.0 drives might also provide sufficient performance for small Hyper-V systems. For the most part, DAS drives behave like internal storage. Some may have their own RAID controllers.

MPIO

Multi-path I/O (MPIO) is a generic technology that works with all of the previously mentioned storage access types. If multiple paths to storage exist, I/O can be aggregated across them. Since connectivity to storage is often faster than the storage itself, MPIO is usually deployed mainly for redundancy. For larger arrays, however, the aggregation can result in improved throughput. Configuring MPIO hasn’t changed in quite some time. I provided some crude, but effective instructions on my own blog some time ago. There are also some cmdlets for 2012 and later.

Without MPIO, multiple links to storage are useless. The targets will show up multiple times on the same Windows host system, which will mark all but one of them as redundant and take all except that one offline. If that particular path goes down, the system might be able to flip to one of the other paths, but not quickly enough to prevent at least a delay. More likely, there will be an outage.

MPIO works on FC, iSCSI, and internal storage. You’ll notice that some SAS drives have two connectors. You can connect both of them and configure MPIO.
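As a mental model of what aggregation and failover across paths mean, here is a toy round-robin sketch. It is not how the Windows MPIO stack is implemented and it ignores real-world details such as load-balancing policies and path recovery. The `MultipathDevice` class and the path names are hypothetical.

```python
from itertools import cycle


class MultipathDevice:
    """Toy model: one storage target reachable over several named paths."""

    def __init__(self, paths):
        self.healthy = {path: True for path in paths}
        self._rotation = cycle(paths)

    def fail(self, path):
        """Mark a path as down, as if a cable or adapter had failed."""
        self.healthy[path] = False

    def send_io(self):
        """Pick the next healthy path in round-robin order."""
        for _ in range(len(self.healthy)):
            path = next(self._rotation)
            if self.healthy[path]:
                return path
        raise ConnectionError("no healthy path to storage")


device = MultipathDevice(["nic1", "nic2"])
print([device.send_io() for _ in range(4)])  # alternates between both paths
device.fail("nic1")
print([device.send_io() for _ in range(4)])  # all I/O continues on nic2
```

While both paths are healthy, I/O alternates between them, which is the aggregation benefit. Once one path fails, every request lands on the survivor without the caller noticing, which is the redundancy benefit.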

Connectivity Controllers

Each of the above has some form of controller that interfaces between the host connection and the hard drives. For internal storage, it’s an onboard chipset or an add-in card. For iSCSI and FC devices, it’s the equipment that bridges the network connection to the storage hardware. There’s really not a whole lot to talk about in a generic discussion article like this, but I do want to point out one thing. Many SANs come with a dual-controller option. This allows the system to lose a controller and continue running. If it’s an active/active system, this can occur without an interruption. As a bonus, active/active systems can typically aggregate the bandwidth of the two controllers. Some of them even allow for firmware updates on the SAN without downtime. These are very nice features and I have nothing bad to say about them. What I have is a warning.

I’ve been working with server-class computer systems since 1998. I have literally been responsible for thousands of them. In all that time, I have seen three definite controller failures and one possible controller failure. If you’re pricing out a SAN and the dual-controller option is just really hard to get into your budget, then take some comfort in the knowledge that the odds heavily lean toward a single controller being satisfactory. I’m not brushing aside that it’s a single point of failure and that you would be giving up some of the nice features I mentioned in the previous paragraph. I’m merely pointing out that when money is tight and you’re weighing risks, a single controller may be acceptable.

SMB 3.0/3.02

SMB 3.0 is a new introduction in Windows Server 2012. 2012 R2 brings some improvements in the new 3.02 version. Like iSCSI and FC, SMB is a protocol, although it has critical differences. All previously discussed connectivity methods are considered “block-level”. That is, they allow the connecting operating system to have raw access to the presented storage. The operating system is responsible for formatting that storage and maintaining its allocation tables, indexes, file locks, etc. The storage device is only responsible for presenting a chunk of green field drive space.

In contrast, SMB 3.0 is a file-level protocol. The hosting system or device is responsible for managing the file system, allocation tables, and so on. Prior to SMB 3.0, the SMB protocol was just too inefficient for something like a virtualization system. That was all right, since it was just intended for basic file sharing, which usually doesn’t require a high degree of performance. SMB 3.0 really changed that equation. It’s able to provide performance that is on par with the traditional block-level approaches. This is something that’s important to understand, as many detractors attack SMB 3.0 simply on the basis that it’s not block-level. That’s just not enough to make the case anymore. SMB 3.0 can certainly handle the workload.

Rather than retrace a lot of ground well-paved by others, I’ll give you a couple of links to start your research. The first is a quick introduction to Hyper-V with SMB 3 written by Thomas Maurer. TechNet has a great article that can show you how to get Hyper-V on SMB 3.0 going. If you want to really dive in and get to the nitty-gritty of SMB 3, there is no substitute for Jose Barreto’s blog.

Make sure to watch out for FUD around this topic. The “block-level” versus “file-level” issue isn’t the only baseless argument. I’ve seen a lot of false claims, such as Storage Spaces or Clustered Storage Spaces being a requirement. Storage Spaces and SMB 3.0 can certainly work together, but these are distinct technologies. Many other false claims rely on conflating Storage Spaces with SMB 3.0, such as claims that SMB 3.0 can only work on SAS JBOD. You could connect one or more Windows Server 2012 hosts to an FC SAN and expose it as SMB 3.0 without ever looking at Storage Spaces or JBOD.

What’s Next

We have now climbed up from the most basic concepts of drive systems to a fairly thorough look at the ways to connect to storage. In the next part of our series, we’ll look at the layouts and formatting issues used to prepare storage.

17 thoughts on "Storage and Hyper-V Part 3: Connectivity"

Thank you for your great article.

Sebastien OLLIER

Thank you Eric, well done once again. I have a question regarding FC Adapters. Its also on technet on the website field. Is there a way to list with a powershell command all of the virtual servers on a host, or even a cluster that have Fibre Channel Adapters connected?

I don’t have any way to test this because I don’t use vSAN, but Get-VMFibreChannelHba -VMName * should return a list of all HBAs, with nothing returned for any VM not using an HBA. If it follows the conventions of all the other Hyper-V cmdlets, it should have a field that contains the name of the virtual machine for you to filter against. Perhaps: | select VMName.

Thank you again Eric. This did the trick:

Get-VMFibreChannelHba -VMName * | select VMName

nice article, very clear and not boring. Thanks