In our article on common Hyper-V deployment mistakes, one item we discussed was the creation of too many Hyper-V virtual switches. This article will expand on that thought and cover various Hyper-V virtual switch deployment scenarios.

One Switch will Usually Suffice

The first and most important point to make is the same as mentioned in the earlier article: one virtual switch is enough for most Hyper-V hosts. We’ll start by showing how advancements in Windows and Hyper-V technologies address problems that necessitated multiple virtual switches in earlier versions.

Native OS Teaming is Now Available

In Windows Server 2008 R2 and earlier, you could only team network adapters by using software provided by the network card manufacturer. This software was often buggy and unstable. Furthermore, Microsoft would not officially support any system that used such teaming. In those situations, Hyper-V administrators would often leave the physical adapters unteamed and create multiple virtual switches. This was a headache that required a lot of manual balancing and tuning, but it kept the host in a fully supported configuration while still allowing administrators to spread out their virtual machine networking load.

With the introduction of native teaming in Windows Server 2012, this problem was solved. You no longer need manufacturer software, Microsoft fully supports a host that uses the built-in teaming mechanisms, and you can go to any general Hyper-V forum and get help with teaming issues.

The real reason to prefer this over the multiple-switch approach is ease of administration. Setting up a network team is not complicated. If a physical network adapter fails, virtual adapters are automatically reassigned to the remaining adapters. You can configure quality of service to shape traffic. Network teaming uses networking standards, so you should be able to reliably predict its behavior (assuming you understand the teaming and load-balancing options).
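The PowerShell below is a rough sketch of how little is involved. It builds a native team from two physical adapters and binds one converged virtual switch to it, with weight-based QoS for host traffic. The adapter, team, and switch names and the weight values are hypothetical placeholders. The `Dynamic` load-balancing algorithm assumes Windows Server 2012 R2 or later; on Windows Server 2012, choose another supported value such as `HyperVPort`. Run it from a console session, because rebinding the adapters interrupts any remote connection that uses them.

```powershell
# Hypothetical names throughout; substitute your own adapters.
# Create a switch independent team from two physical adapters.
New-NetLbfoTeam -Name "ConvergedTeam" -TeamMembers "NIC1", "NIC2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic

# Bind a single virtual switch to the team interface (named after the team by default).
# Weight mode enables relative minimum-bandwidth QoS; it can only be set at creation.
New-VMSwitch -Name "vSwitch" -NetAdapterName "ConvergedTeam" `
    -MinimumBandwidthMode Weight -AllowManagementOS $false

# Add management OS virtual adapters for host traffic on the same switch.
Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "vSwitch"
Add-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -SwitchName "vSwitch"

# Give each host adapter a relative share of bandwidth under contention.
Set-VMNetworkAdapter -ManagementOS -Name "Management" -MinimumBandwidthWeight 10
Set-VMNetworkAdapter -ManagementOS -Name "LiveMigration" -MinimumBandwidthWeight 30
```

Newer Windows Server releases steer Hyper-V hosts toward Switch Embedded Teaming (`New-VMSwitch` with `-EnableEmbeddedTeaming $true`) instead of a native team under the switch, so check the guidance for your version.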

For more information, you can read our article on adapter teaming and our article on load-balancing algorithms.

There are Better Options for Isolation

Another reason administrators create multiple switches is to isolate some virtual machine traffic from the rest. This has some very legitimate uses, which we will cover in a later section, but it’s often not really justified.

The first reason it’s often unjustified is that Hyper-V has a great deal of built-in isolation technology. It was designed with the notion that virtual machines are inherently untrustworthy and must be walled gardens at all times. Unless you intentionally enable port mirroring, no Hyper-V virtual machine can see traffic meant for another. There’s really nothing magic here; this is how switches are supposed to operate. To compromise this traffic, an attacker would need to break the Hyper-V switch in such a way that s/he gained visibility into all of the packets it handles. At the time of writing, no such attacks were known, nor had any ever been. While an attack of this nature is theoretically possible, it’s not likely enough that you need to exert a great deal of planning around it.
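To confirm that nobody has deliberately opened that door, an audit along these lines lists any virtual machine network adapter on the local host whose port mirroring mode is something other than `None`. It is a minimal sketch that only inspects the host it runs on; empty output means no adapter is mirroring.

```powershell
# List VM network adapters on this host that have port mirroring enabled.
# PortMirroringMode is None, Source, or Destination.
Get-VMNetworkAdapter -VMName * |
    Where-Object { $_.PortMirroringMode -ne 'None' } |
    Select-Object VMName, Name, SwitchName, PortMirroringMode
```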

That’s a basic level of isolation, and it works for small environments. However, computers on a network are reachable by all other members of that network, and they’ll periodically send out broadcasts that advertise their presence. You could use tools like firewalls, but another option is to use VLANs. These create distinct networks whose members are not directly reachable from other networks. The Hyper-V virtual switch natively supports VLANs. For more information, read our post on how VLANs work in Hyper-V.
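As a sketch of VLAN isolation on a single switch, the commands below put two virtual machines on different VLANs in access mode. The VM names and VLAN IDs are hypothetical, and the sketch assumes the physical switch ports behind the Hyper-V host are trunked for those VLANs.

```powershell
# Hypothetical VM names and VLAN IDs.
# Access mode: the virtual switch tags this VM's traffic with VLAN 10.
Set-VMNetworkAdapterVlan -VMName "Web01" -Access -VlanId 10

# A second VM on the same virtual switch, isolated on VLAN 20.
Set-VMNetworkAdapterVlan -VMName "Sql01" -Access -VlanId 20

# Review the resulting assignments.
Get-VMNetworkAdapterVlan -VMName "Web01", "Sql01"
```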

A third option for isolation is virtual networking (Hyper-V Network Virtualization). It’s conceptually similar to VLANs but adds a great deal of scalability. It’s not terribly useful in small or even many medium-sized environments, and it requires additional software to be used effectively. For more information, start with Microsoft’s article.

iSCSI and SMB Should Be Kept Off the Virtual Switch

I’ve seen a few documents and posts floating around that suggest creating a secondary switch just to carry storage traffic. This is rarely a good idea. It’s best to leave the physical adapters that carry storage traffic completely unteamed. If you’ll be using iSCSI, set up MPIO. If you’ll be using SMB for high-performance needs (as opposed to basic file serving), make sure SMB multichannel is working. No matter how you configure it, teaming is nowhere near as efficient for these two traffic types as their own multipathing mechanisms. Forcing that traffic to make the extra hop through the Hyper-V switch doesn’t help either.
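The host-side setup for both paths might look roughly like the following. The IQN and IP addresses are hypothetical placeholders, and the sketch assumes one unteamed adapter per storage subnet with one iSCSI session per path. The round-robin default is a common choice rather than a requirement.

```powershell
# Install Multipath I/O (a restart may be required before MPIO claims disks).
Install-WindowsFeature -Name Multipath-IO

# Let the Microsoft DSM automatically claim iSCSI-attached disks.
Enable-MSDSMAutomaticClaim -BusType iSCSI

# Optional: make round robin the default policy for newly claimed MPIO disks.
Set-MSDSMGlobalDefaultLoadBalancePolicy -Policy RR

# One multipath-enabled session per path (hypothetical IQN and addresses).
$iqn = "iqn.1991-05.com.example:target01"
Connect-IscsiTarget -NodeAddress $iqn -IsMultipathEnabled $true -IsPersistent $true `
    -InitiatorPortalAddress "10.0.1.10" -TargetPortalAddress "10.0.1.100"
Connect-IscsiTarget -NodeAddress $iqn -IsMultipathEnabled $true -IsPersistent $true `
    -InitiatorPortalAddress "10.0.2.10" -TargetPortalAddress "10.0.2.100"

# For SMB 3: confirm multichannel is enabled and actually in use.
Get-SmbClientConfiguration | Select-Object EnableMultiChannel
Get-SmbMultichannelConnection
```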

If your virtual machines will be connecting to these remote storage types using their virtual adapters, then you’ll have no choice but to go through the Hyper-V switch. In that case, making a separate switch might be a good idea. We’ll talk about that in a bit. However, I’d strongly recommend that you reconsider that plan. It’s much better for Hyper-V to make the connection to storage and present it to the virtual machines.

Redundancy is Best Achieved through More Convergence

I’ve seen a few people configure a secondary switch as some sort of backup for the primary. This really doesn’t work well. Your traffic is divided, and you have to do the same kind of manual balancing that we did before convergence was an option. If you join all available physical NICs into the same team hosting the same switch, your odds of surviving any individual failure increase. You also have the benefit of traffic being spread more evenly across the available hardware.
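Folding a would-be backup switch into the primary team is a small change. This sketch assumes the hypothetical adapters `NIC3` and `NIC4` were backing a second switch, that its virtual machines have already been reconnected elsewhere, and that `ConvergedTeam` is the existing team.

```powershell
# Hypothetical names. Remove the redundant switch first (disconnects anything still attached).
Remove-VMSwitch -Name "vSwitch-Backup" -Force

# Add its physical adapters to the existing team instead.
Add-NetLbfoTeamMember -Name "NIC3" -Team "ConvergedTeam"
Add-NetLbfoTeamMember -Name "NIC4" -Team "ConvergedTeam"

# Verify the membership and state of every team member.
Get-NetLbfoTeamMember -Team "ConvergedTeam"
```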

When Multiple Virtual Switches Make Sense

There are some cases where multiple virtual switches are a good idea. Sometimes, they are the best option.

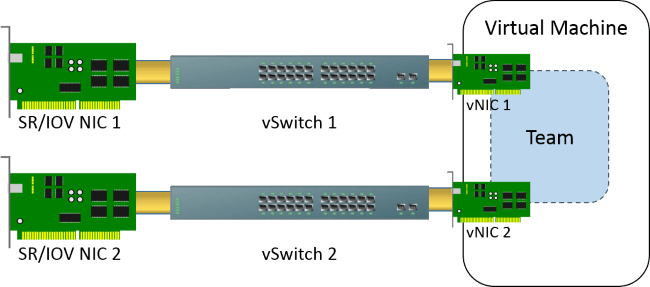

SR-IOV

If you use SR-IOV (a technology that allows virtual adapters to bypass the virtual switch and use the physical adapters directly), you can’t use Windows teaming of the physical NICs. So, if you want to give your virtual adapters the benefit of Windows teaming along with SR-IOV, your only option is to leave the physical NICs unteamed, create virtual switches on each one, and then use teaming inside the virtual machines. It’s complicated and messy, and you won’t be able to create teams for virtual adapters in the management operating system, but it works.
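A minimal sketch of that layout follows. It uses one SR-IOV switch per physical adapter, one guest adapter on each, and a team built inside the guest. All names are hypothetical, the hardware and firmware must support SR-IOV, and adding an adapter may require the VM to be shut down on older versions. Windows only supports switch independent mode for teams inside a virtual machine.

```powershell
# On the host. SR-IOV can only be enabled when the switch is created.
New-VMSwitch -Name "IovSwitch1" -NetAdapterName "NIC1" -EnableIov $true -AllowManagementOS $false
New-VMSwitch -Name "IovSwitch2" -NetAdapterName "NIC2" -EnableIov $true -AllowManagementOS $false

# Put the VM's existing adapter on the first switch and add one for the second.
Connect-VMNetworkAdapter -VMName "App01" -Name "Network Adapter" -SwitchName "IovSwitch1"
Add-VMNetworkAdapter -VMName "App01" -Name "IovNic2" -SwitchName "IovSwitch2"

# Request a virtual function for each adapter and allow teaming in the guest.
Get-VMNetworkAdapter -VMName "App01" |
    Set-VMNetworkAdapter -IovWeight 100 -AllowTeaming On

# Inside the guest (hypothetical adapter names): switch independent, address hash.
New-NetLbfoTeam -Name "GuestTeam" -TeamMembers "Ethernet", "Ethernet 2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm TransportPorts
```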

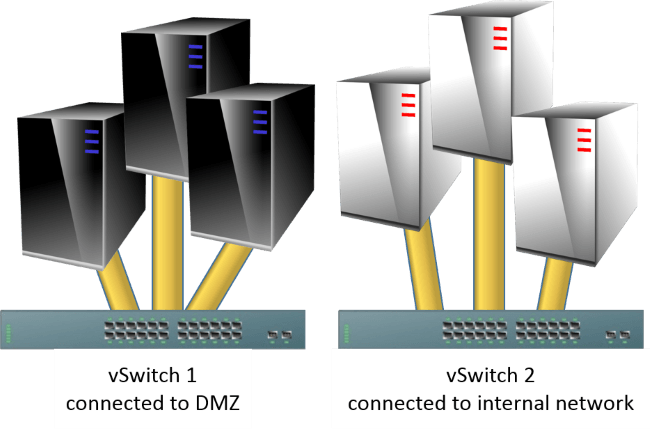

Physically Isolated Networks

The primary purpose of physically isolated networks is to separate insecure, externally facing virtual machines (such as those in a DMZ) from more secure internal virtual machines. My preference in those situations is to use separate physical hosts: hosts with DMZ guests can be placed in the DMZ, while hosts with internal guests can be placed on the internal network.

If that’s not an option, then the next best thing is to bring all the networks into the same virtual switch, using VLANs or virtualized networking to segregate traffic.

If that’s not possible or desirable, then you can use multiple virtual switches.
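If you do go the multiple-switch route, this sketch shows the key setting. The host's management OS is kept off the DMZ switch so the host itself has no presence on that network. `NIC-DMZ` is a hypothetical adapter cabled only to the DMZ, and `Web01` is a hypothetical externally facing VM.

```powershell
# Hypothetical names. A dedicated switch on the DMZ-facing adapter;
# -AllowManagementOS $false keeps the host's own stack off this network.
New-VMSwitch -Name "vSwitch-DMZ" -NetAdapterName "NIC-DMZ" -AllowManagementOS $false

# Attach the externally facing VM's adapter to the DMZ switch.
Connect-VMNetworkAdapter -VMName "Web01" -Name "Network Adapter" -SwitchName "vSwitch-DMZ"
```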

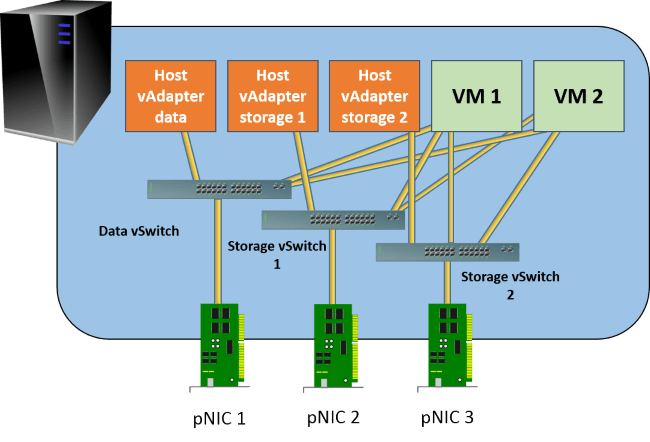

Direct Guest-to-Storage Connections

If you intend to give your virtual machines direct access to iSCSI targets or to SMB 3 storage, perhaps for guest clustering, then it might be a good idea to create multiple virtual switches. This lets you create multiple virtual adapters inside each guest for the back-end storage connections and attach each one to a different virtual switch. This guarantees that no two of a guest’s storage adapters will ever wind up using the same physical network adapter.

The graphic is already pretty messy, so I didn’t want to add any more to it, but what you want to do is ensure that each virtual adapter connected to the storage vSwitches is configured for MPIO and/or SMB 3 multichannel, depending on the back-end target you want to connect to. You don’t need to configure anything special for the physical adapters, except perhaps jumbo frames. If you can make out all the lines, you’ll see that each VM has a connection to each switch. They’ll need three virtual adapters apiece to make that work.
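The host side of that design could be sketched as follows. It creates one switch per unteamed storage adapter, enables optional jumbo frames, and gives each guest one adapter per storage switch. All names are hypothetical, and the `*JumboPacket` keyword and its accepted values vary by driver, so check `Get-NetAdapterAdvancedProperty` first. MPIO or SMB multichannel is then configured inside each guest.

```powershell
# Hypothetical names: NIC3 and NIC4 are unteamed adapters on the storage network(s).
New-VMSwitch -Name "vSwitch-Storage1" -NetAdapterName "NIC3" -AllowManagementOS $false
New-VMSwitch -Name "vSwitch-Storage2" -NetAdapterName "NIC4" -AllowManagementOS $false

# Optional jumbo frames on the physical adapters (driver-dependent keyword/value).
Set-NetAdapterAdvancedProperty -Name "NIC3", "NIC4" -RegistryKeyword "*JumboPacket" -RegistryValue 9014

# Give each guest one adapter per storage switch (older versions need the VM off).
foreach ($vm in "SqlNode1", "SqlNode2") {
    Add-VMNetworkAdapter -VMName $vm -Name "Storage1" -SwitchName "vSwitch-Storage1"
    Add-VMNetworkAdapter -VMName $vm -Name "Storage2" -SwitchName "vSwitch-Storage2"
}
```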

Straddling Physical Switches

If you read my earlier article on teaming, then you know that switch independent mode has some drawbacks. However, if you don’t have smart switches, you can’t use any switch dependent mode at all. And if you have smart switches that don’t stack, connecting your Windows/Hyper-V team to only one of them in order to use a switch dependent mode leaves that physical switch as a single point of failure.

To avoid that, you could build a separate switch dependent team to each physical switch, create a virtual switch on each team, and multi-home the host across them. Then, you could create teams inside the guests, with one virtual adapter on each virtual switch (Windows only supports switch independent mode for teams inside a virtual machine). It’s quite a bit of overhead that probably won’t yield a meaningful return, but it’s an option.
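As a rough sketch of that layout, the host gets an LACP team and a virtual switch per physical switch, and the guest gets one adapter on each. The names are hypothetical. It assumes `NIC1`/`NIC2` are cabled to physical switch A and `NIC3`/`NIC4` to switch B, with matching LACP port channels configured on each physical switch. The guest then teams its two adapters in switch independent mode.

```powershell
# Host: one switch dependent (LACP) team per physical switch.
New-NetLbfoTeam -Name "TeamA" -TeamMembers "NIC1", "NIC2" -TeamingMode Lacp
New-NetLbfoTeam -Name "TeamB" -TeamMembers "NIC3", "NIC4" -TeamingMode Lacp

# A virtual switch on each team; the host is multi-homed across both.
New-VMSwitch -Name "vSwitch-A" -NetAdapterName "TeamA" -AllowManagementOS $true
New-VMSwitch -Name "vSwitch-B" -NetAdapterName "TeamB" -AllowManagementOS $true

# Guest: one adapter per virtual switch, with teaming permitted inside the VM.
Add-VMNetworkAdapter -VMName "App01" -Name "NicA" -SwitchName "vSwitch-A"
Add-VMNetworkAdapter -VMName "App01" -Name "NicB" -SwitchName "vSwitch-B"
Get-VMNetworkAdapter -VMName "App01" |
    Where-Object Name -in "NicA", "NicB" |
    Set-VMNetworkAdapter -AllowTeaming On
```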

Other Uses

I never pretend to have all the answers or to have the ability to divine every possible use case. Systems architecture is mostly science, but there is a heavy artistic component. As systems architects, we also need to be able to adapt our knowledge to fit the needs at hand. We don’t design systems to fit some arbitrary “best practices” list. We build systems to best fit the needs of their applications and clients. If that means you need to design a multi-switch configuration that doesn’t fit neatly into one of the above categories, then you should design a multi-switch configuration.

As a general rule (or “best practice”, if you prefer), you should start with one virtual switch by default. Every switch you add after that needs to be justified. Each additional switch adds overhead. It reduces the redundancy available to any given virtual adapter, because physical adapters used by the second and later switches cannot add redundancy to the first virtual switch’s team. It depletes VMQs more quickly. And if you’re routing storage over a virtual switch, it adds latency and reduces the maximum bandwidth. These are considerations, not automatic show-stoppers. You have the knowledge to make an informed choice; it’s up to you to apply it to your situation.
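To see how much VMQ headroom a host actually has before adding another switch, the two read-only commands below give a quick view. This is a sketch: the number of queues and how they are shared depend on the adapter and driver.

```powershell
# VMQ state and receive-queue capacity for each physical adapter.
Get-NetAdapterVmq | Select-Object Name, Enabled, NumberOfReceiveQueues

# Queues currently allocated on each adapter.
Get-NetAdapterVmqQueue
```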

9 thoughts on "Explored: Using Multiple Hyper-V Virtual Switches"

How do I create two virtual switches in Hyper-V?

New-VMSwitch

Great post! I’m considering adding an internal virtual switch so that virtual machines can interconnect at 10 Gb speeds, apart from their external communication through separate, individual external Hyper-V switches (via 1 Gb NICs). Does this make sense?

No. VMs will already communicate via VMBus wherever possible and forcing multihomed systems to use a specific pathway requires a substantial amount of administrative effort.