There are a lot of emerging technologies in the marketplace right now, and few are more popular than nested virtualization. This capability has been available in the vSphere ESXi stack for some time, but until recently, Microsoft had shown little interest in providing the same functionality. That may have been because the only real use cases were lab environments and training. This has changed with the advent of cloud and container technologies as we continue to abstract more and more layers of our IT infrastructure.

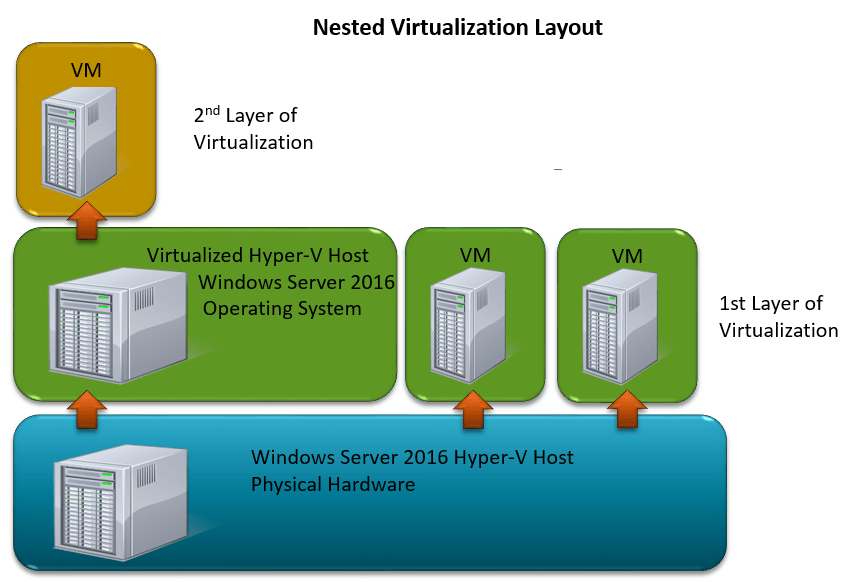

Before we delve deeper into that topic, what is nested virtualization? Nested virtualization is simply the practice of running a hypervisor within a virtual machine as shown in the figure below. You essentially have two layers of virtualization happening and this can be useful for a number of reasons.

Figure 1-1: Logical Layout of Nested Virtualization

Test Environments: Hardware isn’t cheap, and many organizations struggle with a way for their IT staff to effectively train up on the newest virtualization technologies. Being able to nest Hyper-V now provides the ability for IT Pros to simulate entire virtualized environments, without the hefty price tag associated with dedicated equipment.

Containers: This is the real reason why Microsoft took the time to create nested virtualization. If you haven’t heard of containers, I’m wondering what rock you’ve been living under for this past year. In the event you are still in the dark, think of a container as a type of mini virtual machine built with applications in mind. Instead of virtualizing the entire OS, a container focuses on providing an isolated environment for an application to reside in, without the overhead of a full virtual machine. At any rate, Microsoft is providing us with two different types of containers.

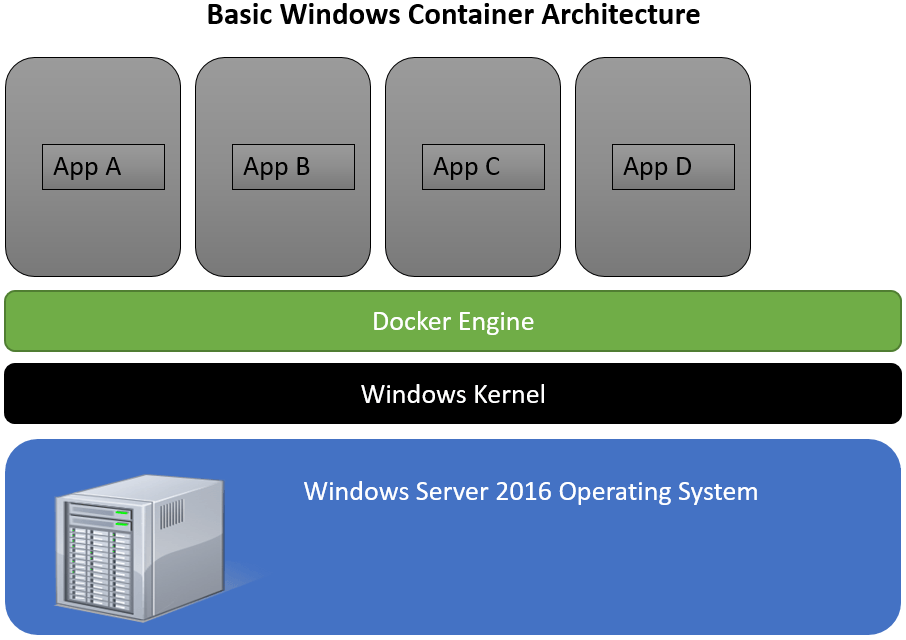

Windows Server Containers

Windows Server containers aren’t really relevant to our conversation other than to see how they differ from Hyper-V containers, which DO apply to our discussion on nested virtualization. As you can see in figure 1-2 below, containers run on top of the Docker engine. This allows the Windows OS to host applications in an isolated, almost VM-like manner. The big benefit here is the ability to host an app without having to bring the operating system along with it.

Figure 1-2: Basic Windows Container architecture

Windows containers, while amazing, do have one issue when it comes to security and system use. In order to remove the OS requirement, they all share the underlying Windows kernel of the host operating system, which makes them unsuitable for environments requiring higher levels of isolation. That is where Hyper-V Containers come in.

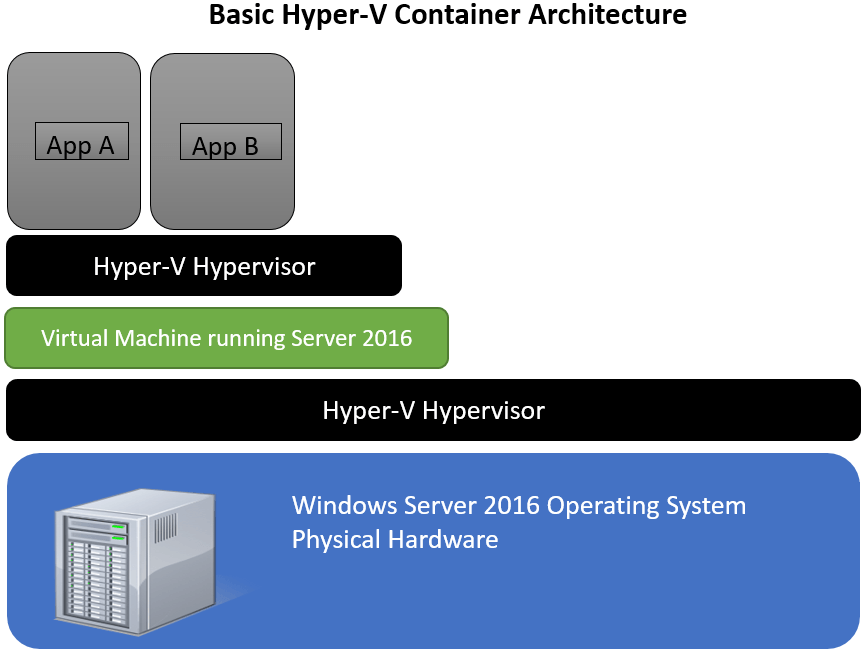

Hyper-V Containers

Hyper-V containers take pretty much the same approach, but add in a Hyper-V virtual machine element. As shown below in figure 1-3, things look very similar to figure 1-1 in that this technology uses the model of nested virtualization, with multiple layers of virtualization. The big difference between Windows Containers and Hyper-V containers is this added layer. The application is placed into a container within a virtual machine running on Hyper-V, OS and all. This provides that further level of isolation, and prevents shared use of the host kernel in those situations where the application can’t share the kernel, or you don’t want it to.

Figure 1-3: Basic Hyper-V Container Architecture
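To make the difference between the two container types a little more concrete, here is a rough sketch of how the isolation mode can be chosen per container with the Docker CLI on a Windows container host. Treat it as an illustration only: it assumes a current Docker installation on Windows with the Hyper-V role available, not the TP4 bits discussed in this post, and the image tag is just an example I picked.

```powershell
# Assumption: Docker on a Windows container host with Hyper-V available.
# The image tag below is an example, not something from the original post.
$image = 'mcr.microsoft.com/windows/nanoserver:ltsc2022'

# Windows Server container: shares the host kernel (process isolation).
# This generally requires the host build to be compatible with the image.
docker run --rm --isolation=process $image cmd /c ver

# Hyper-V container: same image, but wrapped in a lightweight utility VM
# so the container gets its own kernel.
docker run --rm --isolation=hyperv $image cmd /c ver
```

The only thing that changes between the two runs is the `--isolation` flag, which is really the whole point of the architecture shown in figures 1-2 and 1-3.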

The next question you might ask is, “Why wouldn’t I run the Hyper-V Container directly from the host level? Why do I need to add in the extra virtual machine and run it from there?”

The answer is, you don’t. You could certainly run a Hyper-V container directly from the physical host, but Microsoft doesn’t want to lock you in at that layer. Think about Azure. Think about service providers and large hosters. Think about large enterprises. These entities are not going to want to give tenants access to the hypervisor running on the physical box in all cases. That could create problems depending on the hosting model.

Customers and organizations hosted within these datacenters are going to want access to this type of technology. Hyper-V containers and nested virtualization provide that ability, by allowing us to stand up Hyper-V containers on Hyper-V in a nested state.

What about App-V?

A lot of folks are going to look at this and say, “gee… that looks an awful lot like what App-V does”. Does this replace App-V? The answer is no. From my perspective, containers are intended to run in the datacenter, and primarily for stateless workloads at this point in time. They are designed to run on servers. App-V virtualizes an application and packages it up for distribution to client end-points. The two essentially have different use cases, and I don’t see that changing in the near future, so there shouldn’t be any concerns for the App-V folks out there. Though, don’t let that stop you from learning about containers!

How to Enable and Use Nested Virtualization on Hyper-V in Windows Server 2016 TP4

With all of this in mind, you can see “Why” Microsoft finally decided to provide this functionality. Their primary concern was for containers. Now, how do we go about using it?

As of this writing, there are a couple of requirements needed to enable this functionality.

- Dynamic Memory MUST be disabled on the virtual machine containing the nested instance of Hyper-V

- VM must have more than 1 vCPU

- MAC address Spoofing must be enabled on the NIC attached to the virtual machine. This setting can be found in the advanced settings under the NIC in the virtual machine’s properties.

- Virtualization Extensions need to be exposed to the VM as seen below.

The current setting of the virtualization extensions can be seen by running:

Get-VMProcessor -VMName <Target VM’s name> | FL *

The setting you’re looking for in the output is: ExposeVirtualizationExtensions = True. By default this setting is disabled. To enable it simply run:

Set-VMProcessor -VMName <Target VM’s Name> -ExposeVirtualizationExtensions $true
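If you would rather script all of the requirements listed above in one go, something along these lines should do it. This is a minimal sketch run from the physical Hyper-V host; the VM name is a placeholder I made up, and the vCPU count of 2 is simply the smallest value that satisfies the "more than 1 vCPU" requirement.

```powershell
# Hypothetical name of the VM that will host the nested Hyper-V instance
$vmName = 'NestedHost01'

# Processor and memory settings are changed with the VM powered off
if ((Get-VM -Name $vmName).State -ne 'Off') {
    Stop-VM -Name $vmName
}

# Requirement: Dynamic Memory must be disabled
Set-VMMemory -VMName $vmName -DynamicMemoryEnabled $false

# Requirements: more than 1 vCPU, virtualization extensions exposed
Set-VMProcessor -VMName $vmName -Count 2 -ExposeVirtualizationExtensions $true

# Requirement: MAC address spoofing enabled on the VM's NIC(s)
Get-VMNetworkAdapter -VMName $vmName | Set-VMNetworkAdapter -MacAddressSpoofing On

# Quick check of the resulting settings
Get-VMProcessor -VMName $vmName | Format-List Count, ExposeVirtualizationExtensions
Get-VMMemory -VMName $vmName | Format-List DynamicMemoryEnabled
Get-VMNetworkAdapter -VMName $vmName | Format-List Name, MacAddressSpoofing
```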

That’s all there really is to it. Once these steps are followed for the target VM, you can simply install Server 2016 TP4 within the VM, install the Hyper-V Role, and away you go! Nest away!
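For the role installation itself, a one-liner inside the guest is usually enough. This is a sketch that assumes you are in an elevated PowerShell session inside the nested VM and that you want the management tools installed as well.

```powershell
# Run inside the guest VM (elevated). Installs the Hyper-V role plus the
# management tools, then reboots the guest to finish the installation.
Install-WindowsFeature -Name Hyper-V -IncludeManagementTools -Restart
```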

All of these items are displayed in the video below:

https://youtu.be/o0U2DvOYFQA

What Doesn’t Work? (as of this writing)

Needless to say, THIS IS NOT FOR PRODUCTION USE! This functionality is still in early testing phases, so there are some things that don’t currently work.

- Running OS MUST be Server 2016 TP4 Build 10565 or newer

- Live Memory Resize will not work

- Checkpoints will not work

- Live Migration does not work

- Save/Restore will not work

- Does not work on AMD chips currently.

Wrap-Up

So today we’ve shown you how to enable and nest Hyper-V inside of a virtual machine using Windows Server 2016 Technical Preview 4. In future posts we’ll talk more about containers and how they can be deployed and used alongside this technology, but for now, enjoy building your new nested Hyper-V labs!

Thanks for reading!

20 thoughts on "How to enable Nested Virtualization on Hyper-V & Windows Server 2016"

Great blog and demo Andy!

Thanks for this guide. Though may I ask, how can I make my nested VM communicate with other VMs on my (physical) host?

Hi Jes!

First you’ll want to treat your virtualized Hyper-V host as if it was a real physical Hyper-V host. You’ll want to create an external vSwitch for the nested VM to be attached to so it can talk outbound.

On top of that, I’ve seen situations where MAC address spoofing needs to be enabled in the advanced features for the NIC on the virtualized Hyper-V host. You may not see traffic flow until you’ve done that.
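In PowerShell terms, the two steps above might look roughly like this. It's only a sketch: the switch name, adapter name, and VM name are placeholders I made up, so check `Get-NetAdapter` for the real adapter name before running anything.

```powershell
# --- Inside the virtualized Hyper-V host ---
# List the adapters to find the one to bind to ('Ethernet' is an assumption).
Get-NetAdapter

# Create an external vSwitch bound to that adapter and keep host connectivity.
New-VMSwitch -Name 'External' -NetAdapterName 'Ethernet' -AllowManagementOS $true

# --- On the physical Hyper-V host ---
# Enable MAC address spoofing on the NIC(s) of the virtualized Hyper-V host.
# 'NestedHost01' is a hypothetical VM name.
Get-VMNetworkAdapter -VMName 'NestedHost01' | Set-VMNetworkAdapter -MacAddressSpoofing On
```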

Hope that helps!

Is there any way to enable nested virtualization on a TP4-2016 VM running on Azure?

Unfortunately no. The compute nodes inside of Azure would need to be running Windows Server 2016 in order to do that, and since 2016 isn’t even at RTM yet, the chances of that functionality being added anytime soon are pretty slim. Once 2016 is officially released, I would imagine this would be added in the following months.

A great write-up! I have been tasked to make an isolated environment for the students to configure their AD and clients running on the server. I know the most reliable setup is to run sth like VMWare Workstation on a physical PC. I have setup an Azure environment but was not able to meet the requirements as such the template is already in AD joined to the student’s client and unable to make the students manipulate the network freely. Is there any successful production cases for nested environment for any hypervisors or successful lab environment such as VMWare workstation but hosted on a server that you know of to get me in the right direction?

Hi Shalihin! Apologies for the late response

Nested virtualization would certainly work well for the scenario you mentioned. While using it in that manner for production would be a no-go, using it for a class/training would be acceptable use for sure. For some of the demos I put on for webinars and speaking engagements I use nested VMs quite frequently. It’s a nice feature to use so I don’t have to carry a ton of hardware with me! =)

is there way to do this on windows 10 pro

Hi Timo!

Yes it is completely possible to do this on Windows 10 Pro as long as your system meets the requirements for Hyper-V located HERE.

If you meet the requirements, simply enable Hyper-V from the Programs and Features view in the legacy Control Panel.

Once that’s done you can do all the nesting you want!
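If you prefer PowerShell over the Control Panel, the same feature can be switched on from an elevated prompt. A quick sketch, assuming a Windows 10 edition that includes Hyper-V:

```powershell
# Run from an elevated PowerShell session on Windows 10.
# -All also enables any parent features that Hyper-V depends on.
Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All
```

You will be prompted to reboot once the feature has been enabled.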

Hi Andy,

Thank you for your post and replies.

I am trying to get my IT department to seen a windows 10 Pro virtual machine with hyper-v enabled however they came back to me with ‘It can’t be done’.

In your response to Timo, do you mean you can enable Hyper-V on Windows 10 Pro in a virtual machine, or on Windows 10 Pro running on bare metal?

Cheers,

Gus.

Hi Gus!

To answer one of your questions: yes, Hyper-V can be enabled in certain versions of Windows 10 on bare metal.

Whenever I’ve done it, I’ve nested Windows Server 2016 Hyper-V in the guest VM with Windows 10 running on my laptop. However, in the nested virtualization documentation below, it sounds like this should work with Windows 10 in the guest VM as well, though I’ve never tried it myself.

https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/user-guide/nested-virtualization

Hey Andy!

I have an azure ARM windows server 2016 vm with hyper-v role installed. It further has 5 nested VMs

i want to RDP to nested vms individually using the public ip of the host(2016 server) itself ? how to achieve this?

The nested Vms should be accessible from mstsc using the public ip of host and port number.

Hi Nitanshu!

First off, I would start by saying, I’d be VERY wary about using this deployment in a production scenario. While Microsoft hasn’t released official use cases for nested virtualization yet outside of test/dev and containers, I would still be very cautious about deploying anything in production using nested virt. outside of those two mentioned options. As they haven’t officially listed RDS as a supported nested workload, they would be well within their rights to refuse support, in the event you needed it, even though it “technically” can be made to work.

As for doing what you mentioned above (DISCLAIMER: I’ve never done this in Azure myself), I can foresee a few issues. You won’t be able to use the network security group functionality because you have to associate it with a NIC that is visible to the Azure fabric. You may have to run a second Azure managed VM that acts as a firewall and handles the port redirection based on that same static IP address. There is likely an Azure-specific service that can do this that I’m not aware of (again, I haven’t done this particular deployment myself).

Hope that somewhat helps!

Port Forwarding Within Azure

You will need to assign each computer an IP address

Within your Azure gateway, assign each computer an external port

Have each external port translate to port 3389

So From the outside it will be (Azurewebcloud:36000) = Main

Azurewebcloud:36001 = VM1

Azurewebcloud:36002 = VM2 ….
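A different way to get the same port-per-VM layout is to do the translation on the Hyper-V host itself with Windows NAT, rather than in an Azure gateway. The sketch below is untested in Azure and rests on several assumptions: a NAT network named 'NestedNat' already exists on the host (created with `New-NetNat` on an internal vSwitch), the nested VMs have the made-up static addresses shown, and the external ports are allowed inbound to the host.

```powershell
# Assumption: a NAT network called 'NestedNat' already exists on the host.
# External port -> nested VM address (hypothetical addresses).
$mappings = @{
    36001 = '192.168.100.11'   # VM1
    36002 = '192.168.100.12'   # VM2
}

foreach ($port in $mappings.Keys) {
    # Forward <host>:<external port> to RDP (3389) on the nested VM
    Add-NetNatStaticMapping -NatName 'NestedNat' -Protocol TCP `
        -ExternalIPAddress '0.0.0.0' -ExternalPort $port `
        -InternalIPAddress $mappings[$port] -InternalPort 3389
}

# Review what was created
Get-NetNatStaticMapping -NatName 'NestedNat'
```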

Hi Andy

Nested Virtualization is something really great for test labs, for learning and so on.

How about in a production environment, is it fully supported? Or can we rely upon something like this to deliver, say, an RDS environment in a nested way, so that the virtual machine host doesn’t have to be a physical server? That would save a lot of resources and make life easy for hosted desktop providers etc.

i do want to use it for production but dont really feel confident . anyone else started using it or think this is way forward ?

Hi Harry!

Nested Virtualization is indeed awesome for test labs and demos, and that has been one of the mentioned use cases for nested virt. right from the get go along with Containers.

As far as other “Supported” use cases go, it gets a bit trickier. While “technically” possible, I wouldn’t recommend running RDS with nested Hyper-V hosts at this time due to a number of potential issues. The first is that there is some compute overhead associated with nested virtualization, and as we know, RDS is very performance driven to ensure a good user experience. Additionally, you miss out on some things too, like Dynamic Memory, Discrete Device Assignment, etc.

Lastly, and possibly the most problematic issue is, Microsoft has no official statement outside of containers and test/dev for officially supported use cases with nested virtualization. So while again, “technically” it may work, if there are ever any issues, and you need support, they may reserve the right to refuse support. So, that’s something to keep in mind as well.

Hope this Helps!

Thank you for this great article. I’m interested in any new information regarding AMD compatibility.