
Domain controllers don’t mix with virtual environments — or do they? A mountain of conflicting information exists on this topic, and few of us have time to make the expedition over all of that territory. You’ll find a guide map here that will take you safely past the traps of myth straight to the pinnacle of best practices for your virtualized domain controllers.

Virtualized Domain Controllers: Myths

You’ll have to get through the fiction before you can get to the facts. Outdated truths, fear, and blind guesses have created an abundance of misinformation on the subject of virtualized domain controllers.

Myth 1: Domain Controllers Should Not be Virtualized

We might as well start with this catch-all myth. Whatever arguments, whatever anecdotes are supplied as support, they are insufficient. We’ll look at many of those in the next sections, but there is simple proof that this is a myth: many, many institutions are virtualizing their domain controllers with success.

Myth 2: You Must Keep at Least One Physical Domain Controller

While this might have had some truth years ago, it’s not a big issue today. The reasons that people maintained physical domain controllers in earlier times had more to do with the comparatively primitive state of virtualization. Failures and issues were more common. Even when failures didn’t occur, virtualization was still a young and somewhat unknown quantity. It only made sense to be distrustful.

Times have changed. If you have a physical domain controller, I wouldn’t get in a hurry to rid yourself of it. If you don’t have one, or have one you don’t want to keep, move ahead. Any fears that you have should be alleviated by the regular backups that you’re going to take.

Myth 3: A Domain Controller on Hyper-V is Vulnerable to the “Chicken and Egg” Problem

Of all the myths around domain controllers and Hyper-V, the most tenacious is the notion of the “chicken and egg”. I’ve even talked to MVPs that believe this one.

Here’s the format of the myth: a Hyper-V system is a member of a domain run by a domain controller that it hosts. When the host starts, it can’t talk to a domain controller because that VM hasn’t started yet. So, the universe implodes and everyone becomes Justin Bieber fans. Or something. I’m not really sure what they think is supposed to happen, but the faithful of this myth are certain to their core that Something Bad(TM) will arise.

Their position has a major problem: this myth is demonstrably false, and ridiculously simply so. Anyone with a Hyper-V-capable physical machine or nested environment and access to a trial copy of Windows Server can disprove this one in under an hour. What irks me is that anyone who has much experience with Active Directory shouldn’t need to have this proven to them. The reasons that there is no “chicken and egg” problem:

- Hyper-V is a Microsoft kernel. No Microsoft kernel requires access to a domain controller in order to start. Anyone that has taken their laptop home from work can demonstrate this. It is possible that you won’t be able to log on as a domain user, but that doesn’t mean you can’t log on as a local user. Here’s the bigger implication that myth-believers seem to ignore: if you see a logon screen, Hyper-V has already started. That’s how type 1 hypervisors work. The hypervisor starts first, then it starts the management operating system.

- The service that you care most about, VMMS.EXE (Hyper-V Virtual Machine Management Service) runs under the Local System account. It doesn’t care one bit about a domain. If you configured it to run as a domain user, you’re going to have other problems anyway.

SMB 3 and the “Chicken and Egg”

There is one condition in which you could encounter a partial chicken and egg scenario with Hyper-V and domain controllers. SMB 3 requires active authentication. A partial chicken and egg scenario could occur if both of the following are true:

- A domain controller guest is stored on SMB 3 storage

- No other domain controller is reachable by the Hyper-V host

This issue has a very simple solution: don’t put your domain controllers on SMB 3 storage. Also, it’s not a true chicken and egg situation because the chicken is alive and clucking. The egg just won’t hatch. You might have some unpleasant work ahead if you find yourself in this situation, but you can fix it.

Clusters and the “Chicken and Egg”

In 2008 R2 and prior, a cluster wouldn’t start at all if it couldn’t contact a domain controller. This is no longer true in 2012 R2 and later. Even if the cluster service won’t start, both Hyper-V and VMMS.EXE will. With basic cluster troubleshooting techniques, you can bring a clustered virtual machine online without the cluster running. These are techniques that you should know anyway if you’re operating a Hyper-V cluster.

Hyper-V Hosting its Own Domain Controller

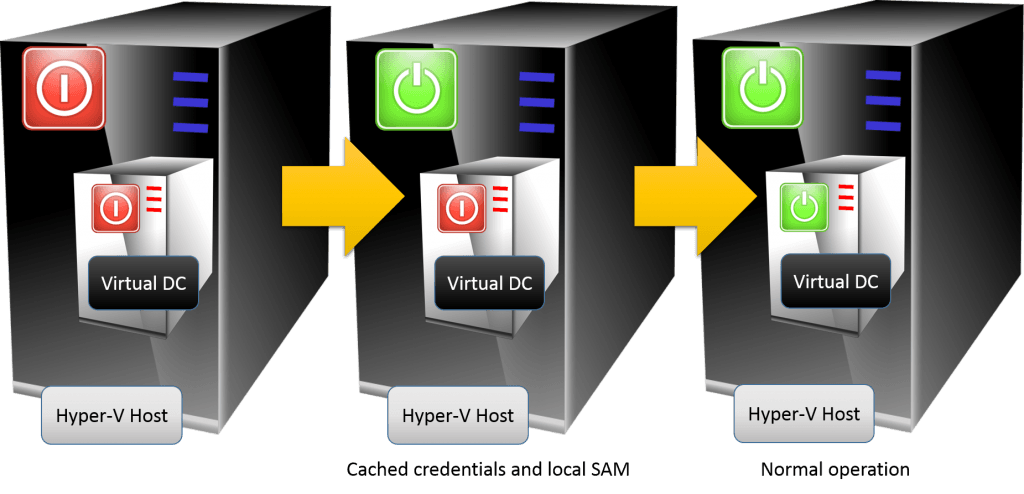

Simply put, there is no chicken and egg problem. This is the process:

Virtual DC Power On Process

Myth 4: Time Drift is Uncontrollable When Domain Controllers are Virtualized

Windows is not a real-time operating system, so time drift is inevitable. If a Hyper-V host’s CPUs are heavily burdened, time will drift more quickly. This is not an uncontrollable issue, though, unless the CPUs are really bogged down. If that’s the case, the problem isn’t that the domain controller is virtualized; the problem is that the host is overburdened. There are simple ways to deal with normal drift. Time drift is not a good argument against virtualizing domain controllers.

Virtualized Domain Controllers: Best Practices

With the myths out of the way, you’re clear to design your domain controller deployment. Let’s look at some of the best practices around domain controllers, with an emphasis on running them in a virtualized environment.

1. Always Start by Assessing Your Situation

Before you begin, determine what you want your final domain controller situation to look like, how small failures will be handled, and how you’ll recover from any catastrophic disasters. How many domain controllers do you need? Where should they be placed? Should domain controllers be highly available? What will the backup schedule look like? The answers to these questions will draw the most definitive picture of what your final deployment should look like. Do not start throwing up domain controllers with the notion that you’ll figure all of this out later, because later never comes until there is a failure to be addressed.

2. Determine How Many Domain Controllers are Necessary

After assessing your situation, answer this question first. If you operate a small shop with a single location, you can shortcut this question. A single domain controller can easily handle thousands of objects. Any extra DCs are for resiliency, not capacity. If you have multiple sites, try to place at least one domain controller in each site and make sure to configure those sites in Active Directory Sites and Services so that the directory properly handles authentication and replication traffic. If you need more in-depth help, refer to TechNet’s thorough article on the subject.

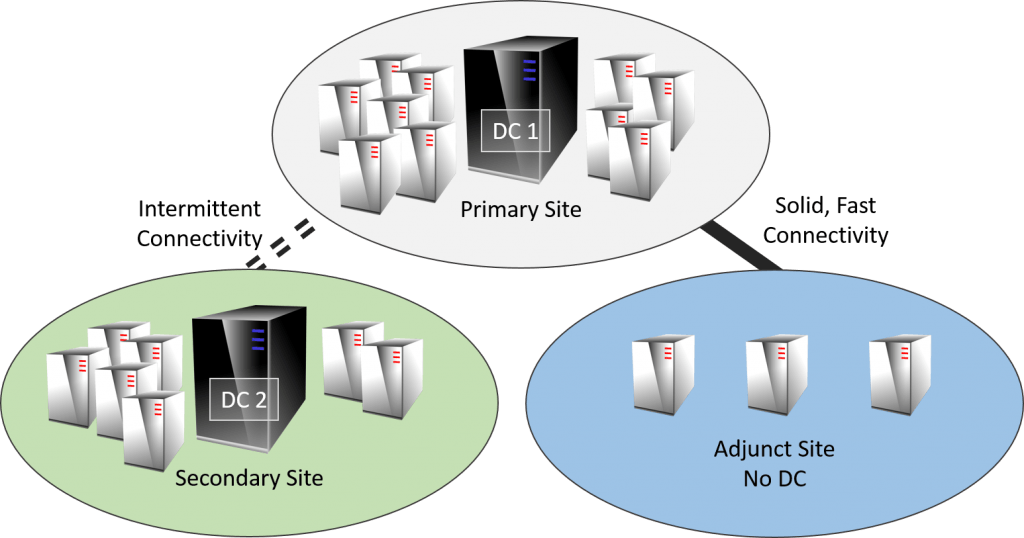

3. Determine Where to Place Domain Controllers

There are some very simple guidelines for domain controller placement.

- If possible, multiple domain controllers should not reside on the same hardware. The primary purpose of multiple domain controllers is to provide 100% availability for domain services. If you have two domain controllers on the same host, that’s not substantially better than just running with a single domain controller. Active Directory is resilient even without redundancy.

- If you have multiple sites and they have any sort of network connection to each other, your preference should be to place one domain controller in each site. The need for domain controllers in any given remote site is tied to the number of users in that site and the quality of the intersite connection. If you have a lot of users, you want a domain controller. If the intersite link quality is poor, you want a domain controller. Make sure that the domain controller or some other server in the remote site is also running DNS and that all systems in that site refer to it.
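The first guideline is easy to check mechanically. Here is a minimal Python sketch, assuming you can export a simple mapping of domain controller names to the hosts they run on. The function name and the sample names are hypothetical, not something any Hyper-V tool produces.

```python
from collections import defaultdict


def hosts_with_multiple_dcs(dc_to_host):
    """Return {host: [dc, ...]} for every host that carries more than one DC."""
    by_host = defaultdict(list)
    for dc, host in dc_to_host.items():
        by_host[host].append(dc)
    # Keep only the hosts that violate the "one DC per piece of hardware" guideline.
    return {host: sorted(dcs) for host, dcs in by_host.items() if len(dcs) > 1}


# Hypothetical inventory: DC name -> Hyper-V host name.
inventory = {
    "DC1": "HV-HOST-A",
    "DC2": "HV-HOST-A",
    "DC3": "HV-HOST-B",
}

violations = hosts_with_multiple_dcs(inventory)
print(violations)
```

An empty result means no host is carrying more than one domain controller.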

Example: Multi Site Domain Controller Architecture

4. Do Not Checkpoint Virtualized Domain Controllers

Prior to 2012, reverting a domain controller to a checkpoint (snapshots in those days) could cause irreversible damage to your domain. That’s because the other domain controllers thought that they’d already performed replication with that domain controller, but it would be oblivious to any changes that were discarded when the checkpoint was reverted. It would then resubmit object changes using Update Sequence Numbers that it had already used, causing inconsistencies in the directory. Sometimes the directory can detect these problems (called a USN rollback); sometimes it can’t. The problem is fairly catastrophic and the fixes are painful, when they’re even possible. Microsoft has a TechNet article that explains this condition and can help you to find solutions if it happens to you.
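If the bookkeeping is hard to picture, here is a toy Python model of it. It is a sketch under heavy assumptions, not how Active Directory is implemented: `ToyDC` and `pull_from` are hypothetical names, and the only idea borrowed from the real system is that a replication partner remembers the highest USN it has already received from a given domain controller.

```python
import copy


class ToyDC:
    """Toy domain controller: a local USN counter plus a change log."""

    def __init__(self, name):
        self.name = name
        self.usn = 0          # highest USN this DC has assigned locally
        self.changes = {}     # usn -> description of the originating change
        self.high_water = {}  # partner name -> highest USN already pulled
        self.received = []    # changes pulled from partners

    def write(self, description):
        """Record an originating change under the next USN."""
        self.usn += 1
        self.changes[self.usn] = description

    def pull_from(self, partner):
        """Pull only the changes above the remembered high-water mark."""
        seen = self.high_water.get(partner.name, 0)
        for usn in sorted(partner.changes):
            if usn > seen:
                self.received.append(partner.changes[usn])
        self.high_water[partner.name] = max(seen, partner.usn)


dc1, dc2 = ToyDC("DC1"), ToyDC("DC2")

dc1.write("create user alice")   # USN 1
checkpoint = copy.deepcopy(dc1)  # checkpoint taken at USN 1

dc1.write("create user bob")     # USN 2
dc2.pull_from(dc1)               # DC2 now remembers USN 2 for DC1

dc1 = checkpoint                 # revert: DC1 is back at USN 1
dc1.write("create user carol")   # reuses USN 2 for a different change
dc2.pull_from(dc1)               # nothing is above the high-water mark

print(dc2.received)
```

In this model, DC2 ends up knowing about bob, which DC1 has forgotten, and never hears about carol, because her change hides behind a USN that DC2 believes it has already processed.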

In 2012, a new feature called VM Generation-ID was added. As long as the virtualized domain controller is running 2012 or later, USN rollbacks due to checkpoint reversion should be prevented. There are no guarantees. Your first, best choice is to never checkpoint a domain controller. A domain controller that runs no other services does not fit the envisioned use cases for checkpoints anyway, so you should be highly skeptical of any reasons that anyone submits to the contrary.

As several readers have correctly noted, modern backup software relies on Hyper-V checkpoints to perform backups. However, you might have also noticed that these checkpoints do not appear in most typical tools. There is a very good reason for that: Microsoft never intended for backup checkpoints to be reverted. When a virtual machine has a checkpoint, all activity goes into the newly-created checkpoint files. Everything prior to that is in a read-only state. It’s a perfect condition for backup software to use. Once the backup software completes, it should notify Hyper-V so that it can merge the checkpoint. These types of checkpoints do not threaten domain controllers because they are never reverted.

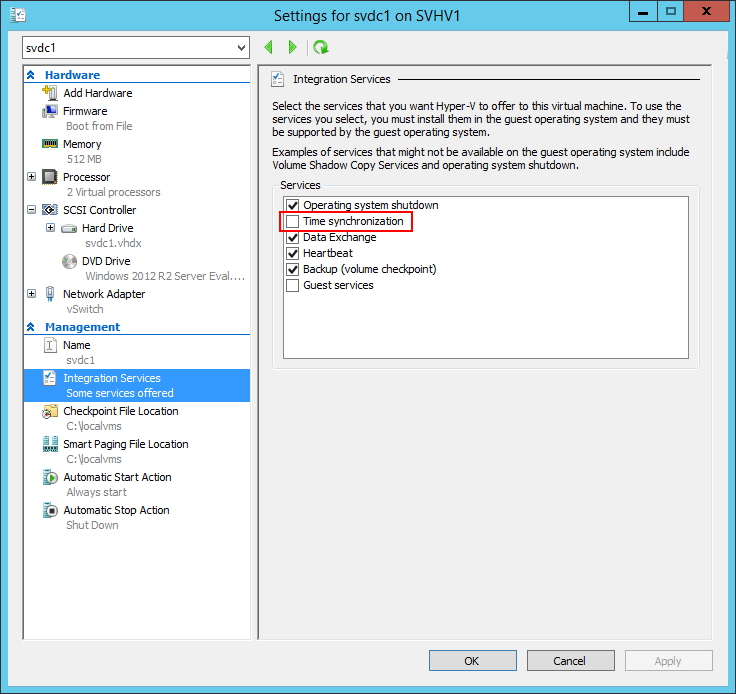

5. Disable Hyper-V Time Synchronization for Virtualized Domain Controllers

By default, Hyper-V is in charge of keeping the clocks updated in its guests. In days past, we recommended sharing responsibility for the clock in virtualized domain controllers. That’s because resuming a virtual machine from a saved state or reverting a checkpoint will ensure that its clock is incorrect and it might be too skewed to allow for updates via channels other than Hyper-V’s time integration service. With a time-sensitive application like domain services, that can be a very bad thing. Unfortunately, the shared responsibility setting is no longer possible; something has changed in the Hyper-V Time Synchronization Service that causes it to completely override any other source set for the Windows Time service. This means that any guest with the Hyper-V Time Synchronization Service enabled at all will always get its time from the host. Because domain controllers expect that they are at the top of the local time hierarchy, this could cause problems.

First, disable the synchronization service for virtual domain controllers:

Disable Time Synchronization

Second, configure your domain and devices for authoritative time synchronization. This is a fairly lengthy procedure, but definitely worth it.

For further reading, we have a recent article on time and Hyper-V.

6. Do Not Place Domain Controllers in Saved State

Saving a domain controller is not as dangerous as checkpointing it, but it’s not a great thing, either. When a virtual machine resumes from a saved state or is reverted to a checkpoint, the only thing that is guaranteed to fix its clock is the Hyper-V Time Synchronization Service. But, as you know from the previous list entry, you can’t enable that on virtualized domain controllers. If its clock skews too far, it might never fix itself automatically. That would be bad.

A worse situation is a long-saved domain controller. Consider the following scenario:

- A virtualized domain controller is placed into saved state.

- A user is terminated and his user account deleted.

- The tombstone lifetime for the user account object expires and the record of the account is deleted.

- The virtualized domain controller is resumed.

Of course, in order for this to work at all, there must be multiple domain controllers. You can’t delete objects if the only DC isn’t responding.

In the described scenario, the resumed domain controller will reanimate the deleted account. It does that because the object was active on that domain controller at the time that it was saved. When it resumed, it had an active record while no other domain controller in the environment even remembered that it ever existed. They will see it as a new object and replicate it as though nothing ever happened. If you haven’t got a password expiration policy or if its cycle is longer than your tombstone lifetime, this situation is a security risk.

If you have multiple domain controllers and you determine that one has been saved for a very long time, you can discard its saved state. Active Directory can handily deal with the data loss. There is some risk if the domain controller is your only global catalog server, but an offline solo GCDC will cause noticeable problems long before the tombstone lifetime expires.

I tried to find a definitive answer on default tombstone lifetimes, but I could not find one that covers all versions. Defaults have been 60 days and 180 days, depending on the Windows Server version. However, knowing the default only goes so far; if a domain began its life in one version, that tombstone lifetime will persist through upgrades unless changed. Instead of guessing, you should ask your domain for its setting. This TechNet article explains how to determine the tombstone lifetime.
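Once you know your domain’s actual tombstone lifetime, the decision about a long-saved domain controller comes down to date arithmetic. Here is a minimal Python sketch. The function name and the dates are hypothetical, and the 60 and 180 day values are only the defaults mentioned above, so substitute whatever your domain reports.

```python
from datetime import date


def saved_longer_than_tombstone(saved_on, today, tombstone_lifetime_days):
    """Return True if the DC has sat in saved state longer than the tombstone lifetime."""
    return (today - saved_on).days > tombstone_lifetime_days


# Hypothetical example: a DC saved on 1 January 2024 and found on 1 April 2024.
saved_on = date(2024, 1, 1)
today = date(2024, 4, 1)

discard_with_60_day_lifetime = saved_longer_than_tombstone(saved_on, today, 60)
discard_with_180_day_lifetime = saved_longer_than_tombstone(saved_on, today, 180)

print(discard_with_60_day_lifetime, discard_with_180_day_lifetime)
```

If the check comes back true, treat the saved state as something to discard rather than resume.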

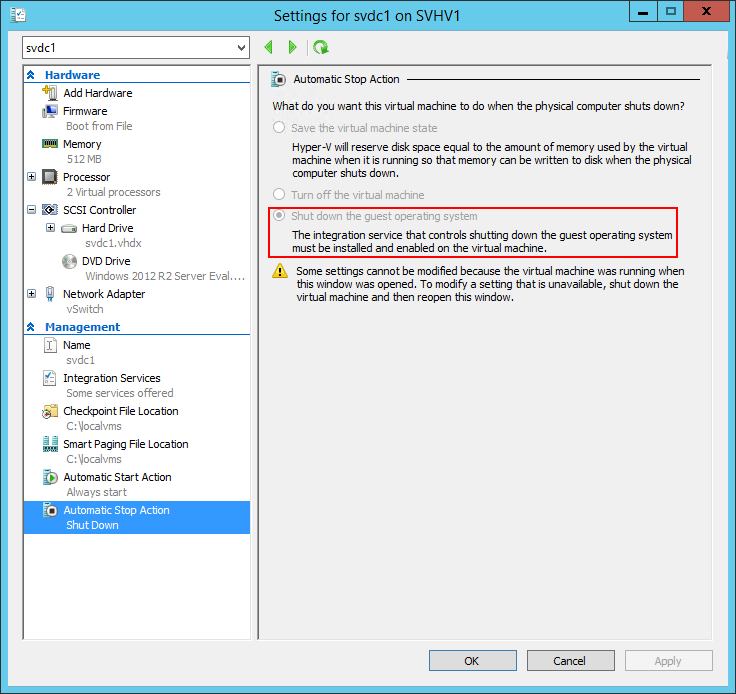

7. Set the Automatic Stop Action of Virtual Domain Controllers to Shut Down

By default, shutting down a post-2012 Hyper-V host will save all the guests. We want to avoid Saved State wherever possible for virtualized domain controllers.

Automatic Stop Action for Domain Controllers

This setting sometimes does not work for guests that need a long time to shut down. Keep your domain controllers lean (no other software or unnecessary roles).

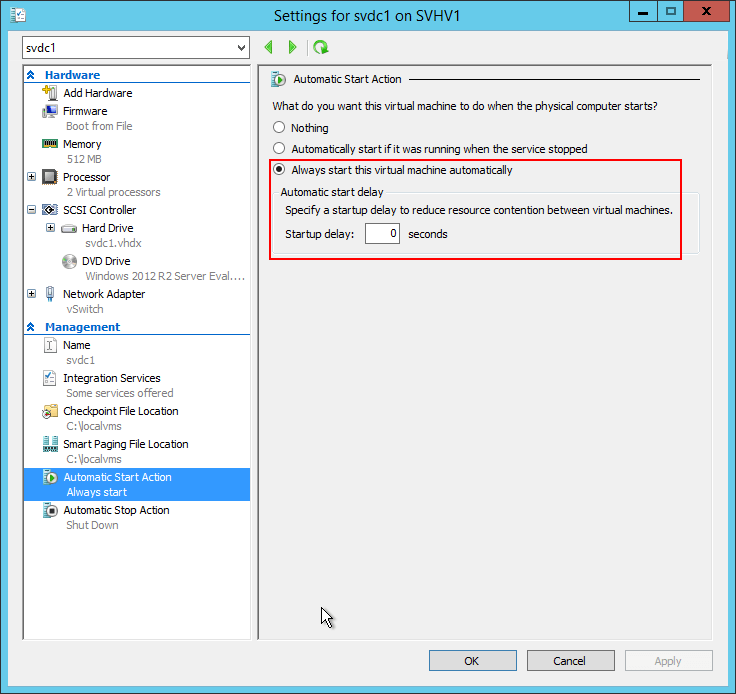

8. Set the Automatic Start Action of Virtual Domain Controllers to Always Start without Delay

Active Directory Domain Services typically provides core functionality to most everything else in a Windows environment. Make sure that it’s available without manual intervention.

Automatically Start Virtual DCs

Hyper-V sometimes ignores this setting. We will talk about that in a bit.

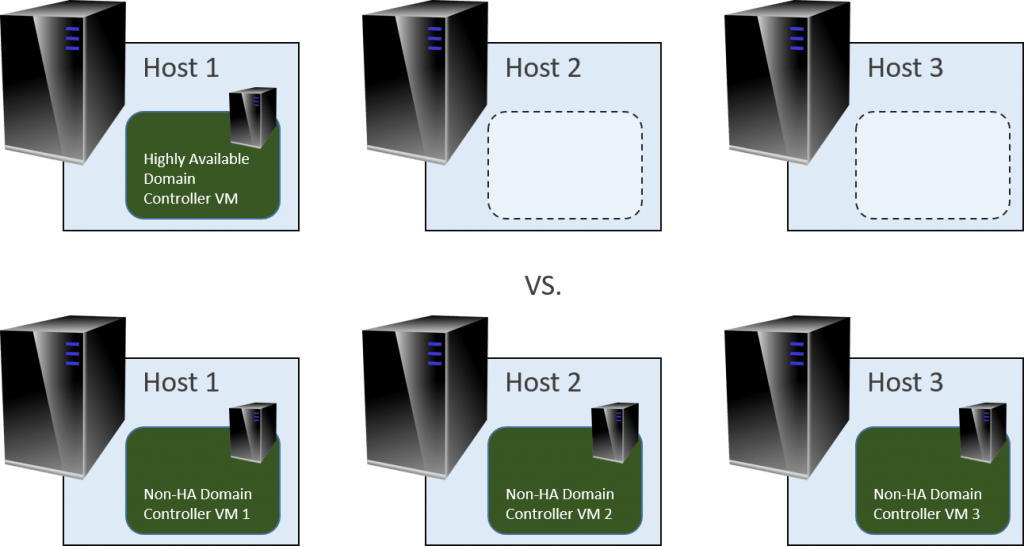

9. Do Not Make Domain Controller Virtual Machines Highly Available

Due to the vastly different natures of the technologies, Active Directory’s high availability features are dramatically superior to anything that Hyper-V and Failover Clustering can provide. There is generally no benefit to clustering the VM that contains a domain controller. I do make one small exception: consider clustering VMs that hold FSMO roles, but only if you have at least one non-HA domain controller and you have enough domain activity to justify it.

If you’ve got some notion that a single HA virtual machine saves on licensing as opposed to multiple non-HA VMs, I’m going to have to dispel it. A highly available virtual machine must have an available virtualization right on every host that it will ever run on:

Both groups require the same licensing, but the second group is more resilient
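The arithmetic behind that comparison can be sketched in a few lines of Python. This only counts virtualization rights the way the paragraph above describes them. The function names and host names are hypothetical, and it is not a substitute for reading your actual license terms.

```python
def rights_for_ha_vm(possible_hosts):
    """An HA VM needs a virtualization right on every host it could ever run on."""
    return len(set(possible_hosts))


def rights_for_non_ha_vms(vm_to_host):
    """Each non-HA VM needs one right, on the single host where it lives."""
    return len(vm_to_host)


# Hypothetical two-node cluster.
cluster_nodes = ["HV-NODE-1", "HV-NODE-2"]

one_ha_dc = rights_for_ha_vm(cluster_nodes)
two_plain_dcs = rights_for_non_ha_vms({"DC1": "HV-NODE-1", "DC2": "HV-NODE-2"})

print(one_ha_dc, two_plain_dcs)
```

Both approaches consume the same number of rights, but only the second one leaves you with two running domain controllers.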

Furthermore, keeping domain controllers non-HA also implies that you will run them locally, which has great benefit. Best practice #10 explains why.

10. Place Virtualized Domain Controllers on Local, Preferably Internal Storage

As mentioned in the myths section, the closest you can get to a “chicken and egg” scenario happens when you place a domain controller on SMB 3 storage and have no other domain controllers. You must avoid that configuration. Additionally, Microsoft does not support non-HA virtual machines running from Cluster Shared Volumes. It does function, but behavior can be strange. Because the fate of non-HA VMs is already inextricably linked to the fate of the host that they live on, the best thing to do is place them on internal storage. Use mirroring or some other technology to protect them against storage failures.

11. Do Not Perform Physical-to-Virtual Conversions on Domain Controllers

Active Directory has one of the smoothest migration paths that I have ever seen for any application. If you want to keep the name and IP address of your physical domain controller, then use a temporary domain controller to make the transition. If you search for “Active Directory Migration”, you’re going to get a lot of articles that talk about migrating objects from one domain to another with the Active Directory Migration Tool (ADMT). If that looks complicated, that’s because it is. But, we’re not talking about a cross-domain migration, just moving directory services from one system to another. That’s not complicated at all.

The simple path:

- Build a new virtual machine, install Windows Server, and ensure it has a valid, activated key.

- Install ADDS in the new virtual machine as a new domain controller in an existing domain. Ensure that it connects with your existing domain.

- If necessary, make the new DC a global catalog and/or transfer FSMO roles. Configure DNS, DHCP, and any other adjunct services performed by your DCs as necessary. If you have a source DHCP server on 2012 or later, you can use DHCP failover to quickly and easily replicate DHCP scopes and then break the relationship if necessary.

- Whenever you’re ready, uninstall ADDS and decommission the existing physical domain controller (as a best practice, also perform the cleanup described in the seventh step of the complicated path below, but it’s less critical when the old DC name isn’t reused).

The complicated path, if you want to keep the name and IP of the existing DC:

1. Build a new virtual machine and install Windows Server. This is a temporary installation, so don’t worry about keying it.

2. Install ADDS in the new virtual machine as a new domain controller in an existing domain. Ensure that it connects with your existing domain.

3. Make the new DC a global catalog and transfer FSMO roles.

4. Configure DNS, DHCP, and any other adjunct services performed by the original DC. If the original DC is running DHCP services and is Windows Server 2012 or later, you can use DHCP failover to quickly and easily replicate DHCP scopes and then break the relationship.

5. Remove DNS, DHCP, etc. from the original domain controller.

6. Remove ADDS from the original domain controller and decommission it.

7. In Active Directory Sites and Services, Active Directory Users and Computers, and ADSIEdit, track down the remnants of the original domain controller and wipe them out. The most important place is ADSS.

8. Follow steps 1-7 again, using a permanent domain controller that has the same name and IP as the source.

12. Always Join Your Hyper-V Host to Your Domain

People that don’t have domain controllers are free to leave their Hyper-V hosts in workgroup mode. I can see some theoretical reasons for not joining a host when all its guests are in a public-facing DMZ, although those reasons are usually flimsy. No one else has any excuse. I’m quite familiar with the counter-arguments, so I’ll just deal with them now:

- There is no chicken and egg problem as demonstrated above.

- If workgroup mode is more secure than your domain, then you need to fix your domain security.

- The headache of managing a non-domain-joined host qualifies as torture outlawed by the Geneva Convention.

A Hyper-V host is just another member server with a very long track record of stability. Stop treating it like it’s radioactive. I’ve hit the point where I feel that all of the myths around virtualized domain controllers that people use to justify workgroup-only hosts have been so thoroughly debunked by myself and others that responding to the same objections is no longer worth my time. If you haven’t got a new objection with concrete proof, don’t expect me to listen.

Frequently Asked Questions about Virtualized Domain Controllers

I’ve been writing about virtualized domain controllers for quite some time and have received and seen many questions on the subject. I’ve collated the most common questions into a FAQ format.

Q: Do I Need a Second Domain Controller or is One Enough?

A: Smaller Organizations Do Not Need a Second Domain Controller

The purists and the textbook admins always say that multiple domain controllers are a minimum requirement. Usually, the purists work on the provider side and have never had the pleasure of balancing a small business operating budget — other people’s money is a foreign, abstract concept. The textbook admins are so paralyzed by the fear of a 2% failure rate that the 98% success rate looks like a zero.

For most of my career, the vast majority of companies that I have worked for/with (we’re talking in the thousands here) used only a single domain controller. Everything was fine. It even has some benefits:

- It saves on Windows licensing and hardware costs

- USN rollback is impossible; this is important in a small environment, as technical resources with the capacity to detect it and the expertise to correct it are in short supply

- If Bad Things should occur, restore of domain services is extremely simple in a single DC environment

In today’s world of ubiquitous virtualization, the single DC environment is quite low-risk. A small business could run Hyper-V on a host with two guests; one running domain services and the other running the company’s other services. This only requires a single Windows Server Standard Edition license and satisfies the best practice of separating domain services from other server applications. This could all then be backed up using any Hyper-V-aware solution (as a shameless plug, Altaro Backup Hyper-V would handle this scenario with its free edition). This is a solution that I wish I had access to many years ago, as it would have fundamentally changed the way I worked with many small business customers.

The single most important thing is backup. Backup provides data redundancy at a very low cost. Providing run-time redundancy more than doubles the cost. If that cost is too great and you understand the risks and you take the time to develop solid contingency strategies, then the single domain controller environment is just fine. I have seen it in common practice since 1998.

Q: If I have one Hyper-V host and it hosts the only domain controller, what happens if the domain controller doesn’t start?

A: Use cached or local credentials.

Joining a domain does not affect the local credentials by default. Some domains rename the local administrator account. No domain policy should disable the local administrator, especially on mission-critical servers. Disabling the local administrator account does not meaningfully improve security but exposes you to needless risks. You should already have a policy of maintaining local administrator credentials in a secure fashion. As long as you have those available, you can log on. As long as you have backup available, you can rebuild from scratch in the worst case scenario anyway.

Also, most domains retain the default cached credential setting, which allows you to log on using any domain account that the host has seen recently. The debate on the trade-offs between security and convenience of cached credentials would take us on a wide tangent so I won’t rehash it here. If you have decided to use cached credentials in your domain, then the condition of a Hyper-V system hosting its own domain controller should not scare you.

Q: Isn’t it better to just make the Hyper-V host a domain controller?

A: Absolutely not. Never make a Hyper-V host into a domain controller.

I wrote an entire article explaining why. I have seen a number of people going against this recommendation and I pity their users because of the problems they have needlessly introduced.

Q: What if I Can’t Trust All of My Hyper-V Admins Around Virtual Domain Controllers?

A: That is a Human Resources Problem.

Avoiding any technology because someone made a poor hiring decision results in an unmaintainable house of cards. Someone needs to have that uncomfortable, “Sorry but it’s not working out,” conversation so you can move on. I understand that there are politics involved that result in situations like this, but there is also a reason that good administrators tend to job hop a lot before they find their forever home (and some never do). In my experience, many of the untrustables are more of a training issue than anything else, so I always try education before ostracization or termination. Sometimes, “s/he goes or I go” is your best course of action.

A reader pointed out that this position sort of waves away the very real concern of having rogue admins. I conditionally plead guilty. The main thrust of this point: malicious or undeservedly confident people with administrative credentials will eventually destroy something and no technical solution will prevent or fix that. Most germane to this article: leaving a Hyper-V host in workgroup mode will not even present them with a speed bump. Yes, you must employ good security measures. Yes, you must stay on top of your security status. Role-based access is a good thing and I eventually plan to see how Shielded VMs work with domain controller guests.

Q: Windows Server Full GUI or Core for Domain Controllers?

A: Yes.

There is no right answer.

Personally, I prefer Core. I set up domain controllers and then never log on to them directly again, so what do I care about a GUI? I have management systems running versions of Windows with their matching version of Remote Server Administration Tools. When I need to manage a server, I either do so via an administrative PowerShell session from my primary instance or I log in to the version-matched instance as a domain administrator and manage the server. Nowadays, I can avoid a lot of that hopping around with Windows Admin Center. The bonus for some of you is that when a questionable administrator connects to one of those Core-mode virtual machines and sees that black box with the flashing cursor, they panic and go into a catatonic state that lasts at least a couple of hours. There is no better preventative against Click Next Admins than Server Core.

But, that’s me. I am one of a nearly infinite set of possibilities. If Core is just not where you are, that’s OK. Use the GUI. I have no patience for people that refuse to use the command-line/PowerShell or think that CLI tools are somehow lesser than the GUI, but I have absolutely no problem with people who are just at a different place in their career development. I can’t claim that I instantly abandoned GUI domain controllers when Core became available, so I won’t criticize anyone else. It’s true that the GUI uses a bit more resources and is a bit more susceptible to malware because of the larger surface area, but these are very manageable issues. I ran a pair of DCs, one GUI and one non-GUI, side-by-side for a while, and the memory difference was never more than about 100MB. They both had to reboot after each patch cycle. The GUI installation might have had 4 “needs restart” patches as opposed to the non-GUI DC’s 1, but a restart is a restart.

Q: Should I Abandon Physical Domain Controllers?

A: Not Necessarily.

If you have functional hardware and no need to free up physical space or transfer the license (or if it’s OEM and the license isn’t transferable), keep your existing physical domain controller. The additional resiliency is nice and a weaning period for the uncertain might be helpful.

But, going forward, I personally would not create any new physical domain controllers. I haven’t kept on top of scalability knowledge for domain controllers so I don’t know where they stand today, but in the past, a single domain controller could only reliably handle a certain number of objects before adding hardware just didn’t help anymore. In those cases, adding domain controllers was the only solution. I spent some time researching for this article, and found that most of the official documentation that I used in those days has never been updated, even into the beginning of the 64-bit era. The best article that I found was this one, and I’m questioning the validity of more than a little of it. Today, I would be unlikely to build a domain controller with more than 4 cores and 8 GB of RAM… maybe up to 16 GB… and a few hundred gigabytes of disk space at most. I would only ever expect a single domain controller to handle about 5,000 users. With the pricing of modern server hardware, building a stand-alone unit of that size is nearly pointless because you can more than double those numbers for only a fraction of the base cost. Use a bigger Hyper-V host and virtualize domain services. If a domain controller starts acting sluggish and has hit those numbers, then you should scale out, not up.

Q: Should I Use Dynamic Memory for Domain Controllers?

A: Yes.

ADDS is very respectful of memory, as server applications go. For small domains with only one or two domain controllers, find the size of your NTDS.DIT file and make that the Startup. You can make the Minimum a little bit smaller. For the maximum, don’t go more than 2 GB over the size of NTDS.DIT. You can watch Hyper-V Manager’s demand metric to see how it’s working, although Performance Monitor tracing is preferred.
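That sizing rule is simple enough to write down as a small Python sketch. Two values here are my own assumptions rather than anything stated above: `minimum_trim_mib` (how much “a little bit smaller” means) and `os_floor_mib` (a floor so that a tiny NTDS.DIT doesn’t produce a Startup value too small for Windows to boot). Adjust both to suit your environment.

```python
def dynamic_memory_plan(ntds_dit_mib, minimum_trim_mib=256, os_floor_mib=1024):
    """Suggest Startup/Minimum/Maximum values (in MiB) from the NTDS.DIT size.

    minimum_trim_mib and os_floor_mib are illustrative assumptions,
    not values taken from the article.
    """
    # Startup: the size of NTDS.DIT, but never below the assumed OS floor.
    startup = max(ntds_dit_mib, os_floor_mib)
    # Minimum: a little smaller than Startup, still respecting the floor.
    minimum = max(startup - minimum_trim_mib, os_floor_mib)
    # Maximum: no more than 2 GB over NTDS.DIT, and never below Startup.
    maximum = max(ntds_dit_mib + 2048, startup)
    return {"startup_mib": startup, "minimum_mib": minimum, "maximum_mib": maximum}


# Hypothetical domain controller with a 3 GiB NTDS.DIT.
plan = dynamic_memory_plan(3072)
print(plan)
```

Treat the result as a starting point, then confirm it against the demand metric or a Performance Monitor trace.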

Q: Should I Place the Active Directory Database on C: or Make a D: VHDX?

A: For Generation 1 VMs, create a D: drive on the virtual SCSI chain to hold the AD database. For Generation 2 VMs, it doesn’t matter.

I believe that all of the issues around caching for the virtual IDE drive have been resolved, but better safe than sorry.

Q: Should I Place the NTDS.DIT on a Pass-through Disk?

A: You Should Not Use Pass-through Disks

Stop using pass-through disks. If you don’t want to do that, just stop virtualizing altogether. You’re doing it wrong. I know some people still believe that pass-through disks are faster than VHDX, but if your domain controller chews disk so hard that you care about performance, you’re doing it very wrong.

Q: Should I Use Virtual Domain Controllers with Fixed or Dynamically Expanding Virtual Hard Disks?

A: Domain Controllers are an Ideal Use for Dynamically Expanding Virtual Hard Disks

Unless you make your VHDX files ridiculously small, your virtualized domain controllers will never consume their allocated space. Domain controllers are relatively low on the IOPS consumption scale. Dynamically expanding is the way to go.

Q: Should I Use Hyper-V Replica with Domain Controllers?

A: No.

If your disaster recovery site has sufficient connectivity for Hyper-V Replica to function, then it has more than enough connectivity for Active Directory replication to function. As with clustered VMs, Replicated VMs require the same licensing as creating two distinct, active VMs (unless Software Assurance is in play). Active Directory replication is superior to anything that Hyper-V Replica can do for it. Also, if a change is successfully replicated to the master image of a domain controller but does not make it to the Replica, then a USN Rollback could occur if that Replica is brought online. Admittedly, Hyper-V Replica cycles more frequently than inter-site Active Directory replication does. Even so, I would always advise adjusting Active Directory replication and backup to address any concerns, and using Hyper-V Replica strictly for VMs whose contained applications do not have their own replication technology.

99 thoughts on "Virtualized Domain Controllers: 4 Myths and 12 Best Practices"

Hmmm. There is actually some weight to myth 3. If there’s an issue where Hyper-V reboots and the AD VM can’t start, logging on using AD credentials can be painful (both AD and DNS will cause headaches, usually long login times).

Of course this can be mitigated easily by logging on locally. Unless you’re security-minded and have changed the local (of course renamed) admin account password to something very complex…

You could set the host up so it’s in a workgroup. Though that setup will give you a set of management issues to deal with.

Having personally experienced this issue several years back (two Hyper-V hosts on 2008 R2), I think that for the sake of a few hundred pounds for a pizza-box-style server (when you’re spending many thousands on a SAN/cluster), it’s probably a good idea to keep a physical AD box just for this situation.

What you’re describing isn’t a chicken and egg problem, though. The chicken and egg myths state that the configuration will not function due to a circular dependency, which is untrue. What you’re describing is poor management of at least one resource. A few thoughts:

I’m not opposed to physical DCs as such, I just would default to a virtual DC mindset and need to be talked into physical. Exactly the opposite of where I was five or so years ago. I would also point out that things are different now than they were when 2008 R2 was the norm — I had a very similar class of problems to the one that you’re speaking about in your last paragraph, but in my case there was a physical DC (three, in fact), and we still had issues during full power outages.

The chicken and the egg is really when your SMB SAN relies on Active Directory authentication. I would much rather spend 3k on a physical DC than remotely put the company at any level of risk.

As mentioned in the article, keeping DCs on local storage would be a perfectly viable solution. Running a DC from any kind of remote storage will always be a risk anyway. But, as long as you’ve got $3,000 of someone else’s money to spend on a <$300 problem, I suppose it's OK.

Thanks for this article – the chicken & the egg problem has always confounded many but rarely has there been a well rounded discussion on this topic.

I would like to see some discussion in a similar vein on virtualizing Exchange and the issue of resource management control in a VM.

Hi Ed,

Thanks!

Exchange, we can do. Can you expand on what you’d like to see regarding resource management?

Great article Eric

Thanks

Ernest

Hi Eric,

Great article, Thanks!

Could you please fix a link related to “DHCP failover” ?

Thanks! The URLs are fixed. I have no idea what happened there.

I have been running Hyper-V virtualization since it became available, and the cost-to-function ratio (compared to VMware) is outstanding, especially for smaller businesses (20-100 users). I’ve seldom read such a good and easy-to-comprehend article regarding Hyper-V myths. Although I do have to confess that I’ve never actually joined a Hyper-V host to a virtual DC.

The reason for this is, as you say, the myth regarding circular dependencies. I first came across this “warning” in early 2010 and never questioned it. To this day I’ve for some reason kept believing it.

Keep up the good work

Agree with about 90% of your thoughts. Great article 🙂

Excellent article, thanks.

It seems a lot of info out there regarding servers & networking is written with only large enterprise in mind & small business scenarios are forgotten.

I have successfully configured a number of small offices with a single virtualised domain controller & a good backup plan. Nice to see a thorough write-up about it and some validation of my method!

Hi,

I agree with a lot of things in your article. But I have the following scenario, which is not chicken-and-egg related but still interesting.

I have 2 Hyper-V hosts, each of them has a DC guest.

The Hyper-V hosts are domain members and the firewall is turned on.

After a power failure, the Microsoft NLA service identifies the hosts as being on a public network, and the clients can’t reach the DCs until the hosts’ NLA service is restarted. This can only be done by me or by someone else with proper credentials.

I think that if I had a 3rd DC, a physical one, which started first after a power failure, for example, the hosts could start with the correct NLA status (domain network), and everything would work fine.

Yes, I know, I can switch off the firewall on the hosts to avoid this issue, but it would be great if you have any other advice for this situation.

Regards,

Géza

The problem I have is that I have never encountered the NLA issue even once. So, I’ve either been extremely lucky or this is the result of a misconfiguration. I would check that DNS does not use Internet secondaries; DNS misconfiguration is the root of most evils. I also don’t understand what any clients would be trying to do that the state of the host’s firewall would matter at all. The host’s firewall could be turned to “Deny All” and traffic inbound to guest OSs would not be impacted. This always feels like someone tried to fix a problem that they didn’t have and accidentally introduced one that they didn’t need.

A hackaround would be to duplicate the firewall settings across all profiles.

To fix it, this needs to be investigated as an NLA issue. Why does it pick public, and why does it not set itself to Domain once the DCs are reachable? Hyper-V is not causing that problem.

A physical DC would probably mask the problem, but whatever is causing it would still be present.

Thank You, Eric,

very interesting article, I’m reading all yours!

Alessandro

A real pain for me was getting the whole Hyper-V with a new DC in a new domain to start in the first place.

Setting up the host was okay, but then connecting to it wasn’t possible because it didn’t belong to any domain (but the workstation used did). Chicken and egg, no?

I had to do some weird commands adding credentials to some internal datastore to finally be able to manage the host.

And now today – DC doesn’t work properly and customer is locked out completely because Hyper-V manager can’t authenticate against the domain. Maybe I missed something…

In any organization small enough to get by with only one DC, I would use Windows Server with Hyper-V, not Hyper-V Server. That would take care of the initial configuration issues. If Hyper-V Server is a must, then I would configure the DC in advance and transfer it over to the new Hyper-V deployment. Basically, avoid any need for remote management during deployment.

Your current problem sounds frustrating, but leaving it out of the domain would not make anything better. Again, Windows Server with Hyper-V would help here because they could RDP to the console any time that standard remote management techniques didn’t work.

When you have a single virtual host, is it better to have all services on a single VM (DNS, AD, WDS, whatever), or to split across multiple VMs? (licence costs are not an issue)

It depends.

Separation is preferred, but not to the point of being ridiculous. If a single VM can handle it, AD/DNS/DHCP go well together. File and print go well together. File and WDS makes some sense. Etc.

Excellent article, thanks for it. One of my constant problems is this, though: how should I scale these practices down? I have many clients that, although small (10-15 PCs), have to use a Windows Server (and AD, and SQL, and so on) basically because their ERP software requires them to do so. They can’t afford two physical servers and licenses, and they can’t afford a powerful server, so how do you nest things for some reliability, some sense, and ease of maintenance? Install Windows Server with Hyper-V and make two VMs, one as PDC/DNS, the other as SQL/application server? Too much overhead on the hardware? Install Windows Server as PDC/DNS and have only one VM as SQL/application server? Too unreliable or risky? In both cases, I always have only one DC, but I try to have a strong backup/image strategy. Is this enough? And is it the best way to go?

10-15 people, I would have one physical system with two or four guests.

1 domain controller

1 application server

Split up the other items across the two guests as makes sense. I wouldn’t put SQL and AD together. Probably would do AD/DNS/DHCP/file/print in guest one and everything else in guest 2. If they can afford the second set of Windows Server licenses, then AD/DNS/DHCP in guest 1, SQL in guest 2, file/print in guest 3, and application(s) in guest 4.

Like you say, backups are the biggest thing.

I would have to say this issue is a chicken and egg related issue I am having.

I had to restore my virtual domain controller on the hyper-v host machine. The permissions are not correct for some reason with the virtual hard disk. So, the virtual domain controller cannot start.

When following posts about adding the virtual machine SID to the virtual hard disk so the machine can start I receive an error that the trust relationship between this workstation and the primary domain failed.

This host “workstation” cannot talk to the domain controller to regain trust because that is the virtual machine that cannot start because the permissions are boinked.

If I had a physical domain controller, this would not be an issue because the domain controller would not rely on permissions from a machine that relies on the domain controller. A nice little cyclical loop of permissions requirements.

If you however know how to solve domain trust problems in a virtual environment where the virtual host cannot start the domain controller please let me know.

To begin, you need to find out why the system is trying to authenticate against a domain controller to start a virtual machine. That is not normative behavior.

First, log in with a local account that is a member of the Administrators group. “The” administrator is preferable, but not necessary.

If that doesn’t work, then there is a configuration problem. You could try just setting the VM to auto-start and rebooting the host. That will place all of the responsibility for starting the VM on the Hyper-V Virtual Machine Management service.

Make sure that the Hyper-V Virtual Machine Management service runs as LocalSystem and not a domain account.

You can quickly correct permissions on any VM’s VHDX by disconnecting it from the VM and immediately reconnecting it to the same VM. But, it could be that someone has configured some overriding NTFS permissions that require access by a domain account. Check into that.

Hi Eric

Thanks once more for your invaluable input, greatly appreciated 😉

I have been successfully using virtualized domain controllers for years now without a single physical machine as a DC. There is, however, a probably minor annoyance: on every reboot of a DC VM, the event log reports that the write cache could not be deactivated. Disabling caching in the policy settings of the VM’s disk is also not possible; it tells me there that disabling write caching is not possible. Can this be safely ignored on a Gen2 VM?

The underlying storage is a flash-backed RAID controller with a cache module.

Thanks for your help 😉

Yes, ignore it. It’s a warning because it will change behavior. Since it cannot detect the state of caching, it will automatically use the I/O commands that instruct for an instant write.

There’s a lot of good context here, and it’s well backed – thanks.

HOWEVER…

I’m a security consultant and penetration tester.

The way you gloss over how the “principle of least privilege” is undermined by hypervisor and/or storage administrators as “a human resources problem” is the reason for the sacking of at least one architect I know of – because that line of thinking exposed his company not just to compromise, but an incapacity for his employer to be able to demonstrate their compliance with statutory, regulatory and client-contractual obligations.

The concept is “trust but verify”.

Our hypervisor and storage admins are well vetted.

They can still go rogue later, or be blackmailed or deceived, or just phished.

And the local account on the hypervisor…?

I’ve used that more than once to gain access to the host, dump the desired guest’s NTDS.dit, extract its “krbtgt” hash, and go on to minting golden tickets. All because local accounts are much harder to audit at scale than domain accounts.

Praise sandwich time: still a LOT of good information in this article, it’s just not complete if security is your concern.

If a thoroughly complete discussion on security were my goal, then I would not use a blog article. I would write a 20 volume set of ten pound books, and even those would need more “for more information, see…” entries than I could count. Some people argue that workgroup mode is more secure than domain mode. That fallacy was my target.

If you have a rogue admin, then “I disjoined the Hyper-V hosts so it’s all better now” is not the answer. Local accounts are a problem in both modes, but domain membership grants access to superior tools for managing and auditing those local accounts. And ultimately, yes, rogue admins are always a human resources problem. You can’t simply apply a patch and continue using them. All the things that you bring up are valid but belong to a superset of this article’s content.

Eric,

Thank you for this article. I am a new admin for a company, and new admin in general. I have been getting ready to retire some old equipment and in the process started digging into Virtualized DC’s, etc. I have 1 hardware DC and one Virtualized DC (Small company, about 30 workstations).

My goal is to go down to a single DC (the virtualized one). But when I started digging into the time synchronization, I discovered this:

So, we have the following setup for how time works:

Server1 is a virtual domain controller; it gets its time from the integration components in Hyper-V

Server2 is the Hyper-V host; it gets its time from Server3

Server3 is a physical domain controller; it gets its time from Server1

The above was all shown using w32tm /query /source on each server

This causes a loop of dependencies, but out of curiosity, how on earth did my time not drift by hours/days/weeks in this setup? It’s been running for at least a year like this, and it’s a closed loop. Neither Server1 nor Server3 is the Physical DC; that’s actually another domain controller not included in the above. Can anyone shed any light on this?

My biggest concern now is, once I turn off the Hyper-V time synchronization services to the Virtual DC, and then setup the Virtual DC to sync to an external source, am I going to run into timing issues, with Kerberos, etc, etc. I also have concerns now about what will happen when I point all my other servers to the Virtual DC.
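A closed loop like the one described here can be spotted mechanically once each server's time source has been written down. The Python sketch below is only an illustration: the `sources` mapping is typed in by hand (for example from the `w32tm /query /source` output of each server), it assumes that "the integration components" means Server1 takes its time from its host, Server2, and the function name `find_time_loop` is hypothetical.

```python
def find_time_loop(sources, start):
    """Follow the chain of time sources from start.

    Returns the list of servers that form a loop, or None if the chain
    ends at a source that is not in the mapping (an external clock).
    """
    path = []
    position = {}
    node = start
    while node is not None and node not in position:
        position[node] = len(path)
        path.append(node)
        node = sources.get(node)
    if node is None:
        return None
    return path[position[node]:]


# The setup from the comment: guest -> host -> physical DC -> guest.
sources = {
    "Server1": "Server2",  # virtual DC, synced by its Hyper-V host
    "Server2": "Server3",  # Hyper-V host
    "Server3": "Server1",  # physical DC
}
print(find_time_loop(sources, "Server1"))

# After pointing one server at an external source, the chain terminates.
# "time.example.org" is a placeholder, not a real recommendation.
fixed = dict(sources, Server1="time.example.org")
print(find_time_loop(fixed, "Server1"))
```

Breaking the chain at any one server, so that it points at an authoritative source, is enough to remove the loop.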

You’re not the first person to report that you’ve had good luck with your time not drifting under less than ideal conditions. At some point in your setup, an authoritative source was used to seed it, and the host’s CPUs never came under enough load to throw it off. In the modern era of hardware, that doesn’t surprise me much.

If “real” time is less than 5 minutes from your current time, then nothing bad will happen at all. If it’s off by less than 15 minutes but more than 5, then maybe things will be a little odd until everything sorts itself out, but it won’t be too bad. If things are over 15 minutes off but less than 2 hours, there might be some issues while things sync up, but still nothing insurmountable.

I really wouldn’t worry.

Also, your non-virtualized systems are already pulling from your DC, assuming you left the defaults. Allow the virtualized servers to continue to use the Hyper-V ICs.
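The rough thresholds in this reply can be written down as a small lookup. This Python sketch only encodes the ranges stated above (under 5 minutes, 5 to 15 minutes, 15 minutes to 2 hours). How the exact boundary values and anything beyond 2 hours are treated is an assumption, since the reply does not cover them, and the function name `classify_skew` is hypothetical.

```python
from datetime import timedelta


def classify_skew(skew):
    """Map a clock offset to the rough expectations given in the reply."""
    off = abs(skew)  # direction of the offset does not matter here
    if off < timedelta(minutes=5):
        return "nothing bad should happen"
    if off < timedelta(minutes=15):
        # Assumption: exactly 5 minutes falls into this bucket.
        return "a little odd until everything sorts itself out"
    if off < timedelta(hours=2):
        # Assumption: exactly 15 minutes falls into this bucket.
        return "some issues while things sync up, nothing insurmountable"
    return "outside the ranges discussed"


for minutes in (2, 10, 45, 180):
    print(minutes, "min:", classify_skew(timedelta(minutes=minutes)))
```

A check like this is only useful before switching time sources, to estimate how large the correction will be.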

We have 2 DCs running as Gen 1 Hyper-V VMs on Server 2016. My PDC was set up with a legacy network adapter. Is there any reason I should change to the synthetic adapter? Our environment is stable. Thanks

If everything is fine then I wouldn’t worry about it. The legacy network adapter tops out around 100Mbps, which is fine for regular authentication traffic in most domains. Patching might take longer, is about all that I can see.

After finally being convinced that running a DC on a VM was OK, I took the leap. The problem is that I found your article after I already did “11. Do Not Perform Physical-to-Virtual Conversions on Domain Controllers.” You say what to do instead, but you don’t even touch on what to do if you didn’t know better and your once-physical DC is now a Hyper-V guest. The most obvious symptom I have right now is that DNS does not work and the DNS manager cannot connect to the other DC, which is still physical. Right now, after a couple of hours of searching, with your article being the only thing I have found that even mentions this, I simply have the VM powered down. So, what do I do now?

If you still have a separate and functional DC, then I would:

I didn’t elaborate in the article because I have very simple rules for domain controllers: no in-place upgrades, no migrations (VM migrations don’t count), not even restores unless I have no other choices. Promote new builds in, demote old builds out.

Hello Eric,

Thank you for this article. It’s very useful.

If I may ask, why exactly can’t we checkpoint virtualized domain controllers? This means that there is no way to make proper backups. VM backups are based on checkpoints.

Or maybe we can make VMs checkpoints/backups, but only when all DCs are powered off?

If so, this would also mean, we can virtualize physical domain controllers, when they are all powered off.

This is one example. The point is, it can be done if you consider all problems.

What do you think?

I haven’t reviewed this article in a while and it seems to have some rendering problem at the moment. But, I should have explained the problem with checkpointing domain controllers in the text.

Taking a checkpoint does not hurt the domain controller; reverting to a checkpoint potentially causes problems.

When a backup takes a checkpoint, it is only for the purpose of freezing the data. Writes continue in the AVHDX while the backup copies information out of the static checkpoint. When the backup completes, it merges the checkpoint. It never reverts. Also, you cannot accidentally revert a backup’s checkpoint because it hides it from you.

So if you took a checkpoint of a domain controller and never reverted it, it would be OK. But what purpose does that serve?

I would not P2V a domain controller. Even the best, smoothest, least painful P2V experience is an order of magnitude tougher and more harrowing than the standard domain migration process.

Eric, I see your point, but I meant something else:

when you create backup, there is no problem. When you have some kind of a disaster and you have to restore DC Virtual Machine from backup, it is almost the same as reverting to snapshot.

You will restore DC to some point in past.

My point is: if you can do this you can virtualize DC.

If not, there is no point in making backups.

Well, yeah, you can use virtual DCs. All of my DCs are virtual. But I don’t understand where you’re going with checkpoints.

Let’s say that you checkpoint a DC and then revert it. You still have to go through the non-authoritative DSRM steps or you risk damaging your domain. What possible positive reason is there for checkpointing a DC with the intention of reverting?

Hilarious and insightful. Great article.

Dear Eric,

thank you for the very interesting article !

I would like to ask whether you come to different conclusions when it comes to a 2-node hyperconverged cluster with Storage Spaces Direct based on Windows Server 2016/2019.

I am currently working on an installation based on and Romain is recommending to make the Hyper-V-nodes also the DCs. Is this correct in this specific scenario or would you still recommend to put the DC in a VM ?

Thanks a lot,

Stefan

Where do you see that he recommends that?

I see: “Because I have no other physical server, the domain controllers will be virtual.”

Oh yes, sorry, my confusion. The nodes are not the DCs; the DCs are VMs outside the cluster.

This was my doubt from the beginning as I currently have a DC running as VM in a KVM (Linux) cluster and this is working quite well – therefore I was intending to do the same with Hyper-V.

However, if I understand correctly (finally *g*), Romain is recommending the same as you do : not to put a DC VM inside a cluster. Right ?

Kind of. He’s saying that you need to have a local DC to ensure that all of the HCI components can start. I don’t see that he’s specifically ruling out HA DCs. You must have local DCs (or DCs reachable over the network) for HCI to work, but you could ALSO have one or more clustered DCs. I don’t see that he makes any particular comment on that. Adding one or more HA DCs would not be a Bad Thing as such but it adds no value.

I have 2 DCs in my environment. DC1 is showing it is connected to a public network. After restarting NLA and checking DNS settings to confirm our domain name, it now says it’s connected to the domain, but as an unauthorized network. Do you have any suggestions from here? What am I missing? A reboot? I’m always willing to learn and appreciate all advice. Thanks in advance!

Hi Hannah! Looks like you posted the question on our Hyper-V forums and Eric is helping you out over there. Just posting a response with the link here for folks that may have a similar question. Thanks!

I want to migrate 2 virtual machines from Hyper-V 2008 R2 to Hyper-V 2012 R2.

I’ve read that you need to stop VMMS on the 2008 R2 server, copy all the virtual machine files to the Windows 2012 R2 server, and then import them.

2 questions.

1: Which import do I need to use: 1) register, 2) restore, or 3) copy?

I’m guessing I need to use 1, but please help me out.

2: One of the virtual machines is a domain controller. Is this a problem, or does it work the same way?

I’m asking this because in the past I had problems with copying domain controllers, and Active Directory replication stopped working (USN rollback).

Thx for helping !

Greetz Rudy Timmer

Probably “Register”, but I don’t know exactly what you’re doing. The 3 types are explained in detail here: https://www.altaro.com/hyper-v/import-a-hyper-v-virtual-machine/

Do not migrate domain controllers. It takes less overall time and effort to make a new one and decommission the old one.

Great article. But I’m still not completely sure how I should implement my DCs on a Hyper-V failover cluster. I’m running 10 VM roles in a Failover Cluster with 2 Hyper-V nodes with central (clustered) storage. I have two Active Directory domain controller VMs. I want to run them on these Hyper-V nodes. It is not recommended to add them to the cluster, so I would store them on local storage and run them in Hyper-V without clustering them. But I see a problem when updating the Hyper-V nodes. The AD VMs need to be shut down when installing updates via Cluster Aware Updating (this runs automatically). When a node restarts, the VM will probably be shut down and restarted. Is this the best practice?

If I remember my testing, CAU will not shut down non-clustered VMs, but the host will when it reboots. Set the DCs’ automatic stop action to stop and their automatic start action to always start. I would also set up a nanny script at the end of the CAU run to make certain that they’re online, as there seems to be a bug in the automatic start code.
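The decision logic of such a nanny script is small. The Python sketch below shows only that part, under stated assumptions: `vm_states` is a mapping of VM name to state that something else has already collected on the host (for instance from the Hyper-V PowerShell module), the VM names are hypothetical, and actually starting a VM is left out. A missing VM is treated the same as one that is not running.

```python
def dcs_needing_start(vm_states, domain_controllers):
    """Return the domain controller VMs that are not in the Running state.

    vm_states maps VM name -> state string such as "Running" or "Off".
    domain_controllers lists the VM names that must be online.
    """
    return [name for name in domain_controllers
            if vm_states.get(name) != "Running"]


# Hypothetical snapshot taken at the end of a CAU run.
states = {"DC1": "Off", "DC2": "Running", "FILE1": "Running"}
print(dcs_needing_start(states, ["DC1", "DC2"]))
```

Whatever wraps this check would then start each returned VM and log the event, so that a silent automatic-start failure does not leave the site without a domain controller.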

Thanks. I tried this, but the VM was turned off instead of shut down (possibly a problem with Win2012r2 AD running on Hyper-V Win2019). I’ll try with a PreUpdateScript. I’m running the second AD VM in the cluster so that I’m sure this one is always up during cluster aware updating.

Hmm, what about making the DCs HA (“cluster-aware”), setting them to start at once and set them to a / one “Preferred Owner” only?

I don’t see a benefit and don’t like to have DCs dependent on a cluster, but it would work.

Hi Eric, thanks for the great article. I already have one DC on the CSV. How do I make it local (non-HA) as you suggested? Do I just have to remove it from the cluster and that’s it. Do I have to change the location of the vhdx file to point to the Hyper- V host?

Delete it from the cluster, then use Storage Migration to move the VM files to the local disk. That’s it.

Thanks for this article! I’m trying to remediate an ADRAP finding for “securing virtual machine files” for our domain controllers, most of which are RODCs, along with some writable domain controllers. If a malicious person were to steal the .vhdx files, what would they actually be able to see from those files, and how easy would it really be to attach or mount that file elsewhere? Thanks!

If someone takes an unencrypted VHDX file, it is safest to assume that they can read everything. Basically, stealing an unencrypted VHDX file is equivalent to stealing an unencrypted hard drive.

Breaking the directory file is relatively simple, although it takes time. You can search for various ways to read or extract data from an NTDS.DIT file.

That said, the only truly secret items in your domain file are the password hashes. The structure of your domain already grants read access to almost everyone. So, if you believe that an NTDS.DIT file has been stolen, you need to change all domain passwords as quickly as you can. If you have an enterprise password management tool, that could be –relatively– simple. Overall steps:

To prevent reading the VHDX in the first place, you need to encrypt it. If your host has a TPM, then you can just enable BitLocker in the guest. If you ever want to move the guest to another host, you’ll need to make certain that you retain its BitLocker key somewhere other than in the domain. Personally, I consider it a good thing to have a domain controller that can only ever run on the host I put it on, but that does mean that you must be absolutely certain that there is no way to ever lose all of your domain controllers. If you want to make an encrypted VM mobile (and REALLY increase its security), then you need to set up Shielded VMs. That’s a non-trivial exercise that I have not yet written anything serious about. I did write up something on non-mobile Shielded VMs. The tech was still kind of new then and I don’t think I mentioned disk encryption.

Yet, when a Hyper-V host is rebooted that points to its own DC-VM, it doesn’t see the domain and joins a public network…

Mine don’t. Most don’t.

There is a comment further down the page that goes over the NLA thing.