Lots of people give lots of excuses for not using dynamically expanding virtual hard disks. Situationally, some of those excuses have merit. However, I have never seen any excuse with sufficiently broad applicability to justify the “never” approach championed by far too many.

The Excuses for not Using VHDX

These are the excuses that most people give for not using dynamically expanding VHDXs:

- “If I can’t do math well enough to predict growth and I’m too lazy to monitor my systems, dynamically expanding VHDXs will cause me to run out of drive space. Never mind that I’ll also run out of drive space even more quickly if I use the same tactics with fixed VHDX.”

- “I don’t understand how hard drives work in this century so I’m terrified of fragmentation.”

- “Dynamically-expanding VHDXs aren’t recommended for databases that need high performance so I’ll never use them for anything else either because somehow that makes sense to somebody.”

- “Dynamically-expanding VHDs were slower than fixed VHDs in Hyper-V Server 2008 and updating knowledge requires effort.”

- “Expansion of a VHDX requires tons and tons of CPU and IOPS. No one has ever demonstrated that to be true, but I feel it in the depths of my soul. Good systems admins should always make decisions based on instinct rather than evidence.”

- “I heard one or more of the above from someone that I consider to be an expert and I don’t yet have the experience to realize that they are all just weak, unsubstantiated excuses.”

The last item in the list is valid. If that’s you, I’m glad that you’re here. I’m not going to debunk all of these items in this article because most of them are self-debunking. One in particular irks me to no end.

The Expansion Excuse is the Worst

The second-to-last item in the list, expansion of a VHDX, is the focus of the article. I’m picking on that one because it is the single most nonsensical thing I have ever heard in my entire career. I don’t even understand why anyone would ever think it was an issue. It’s not.

The claim is made. Now it’s time to prove it.

How Expansion Works

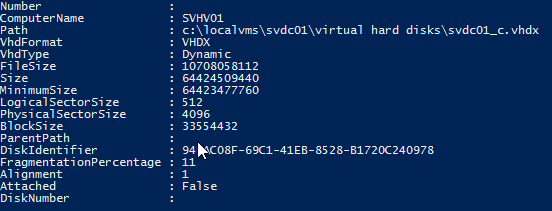

What you see below is the output from Get-VHD for my primary domain controller’s VHDX:

When the disk subsystem expands a VHDX, it does so by “blocks”. Blocks in this context are defined per VHDX. As you can see in the screenshot, this VHDX uses a block size of 33,554,432 bytes (32 megabytes). Therefore, each expansion event will grow the VHDX by a multiple of 33,554,432 bytes.
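If it helps to see the “multiple of the block size” rule in action, here’s a minimal PowerShell sketch that works out which payload blocks a single write lands in. It’s a simplified model under my own assumptions: Get-TouchedBlocks is a hypothetical helper (not part of the Hyper-V module), it uses the 32MB block size from the screenshot, and it ignores the header, BAT, and sector bitmap regions entirely.

```powershell
# Hypothetical helper: which payload blocks does a write of $Length bytes at $Offset touch?
# Simplified model: ignores the header, BAT, and sector bitmap regions of the VHDX.
function Get-TouchedBlocks
{
    param
    (
        [UInt64]$Offset,
        [UInt64]$Length,
        [UInt64]$BlockSize = 33554432  # 32MB, as reported by Get-VHD above
    )
    if ($Length -eq 0) { return @() }
    # Index of the block holding the first byte and the block holding the last byte
    $first = [int][Math]::Floor([double]$Offset / $BlockSize)
    $last  = [int][Math]::Floor([double]($Offset + $Length - 1) / $BlockSize)
    # Any unallocated block in this range would need to be allocated for the write
    $first..$last
}
```

A 1KB write that straddles the 32MB boundary (Get-TouchedBlocks -Offset 33553920 -Length 1024) touches blocks 0 and 1, so in this model it could trigger two block allocations. A write that stays inside an already-allocated block triggers none.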

Next, we turn our attention to the “Size”. For a dynamically-expanding VHDX, “Size” represents the maximum number of bytes that the VHDX can grow to without intervention. With some quick and simple math, we discover that this VHDX has an upper limit of 1,920 blocks. If you don’t see that math, we are dividing 64,424,509,440 (the maximum number of bytes) by 33,554,432 (the number of bytes in each block). In this case, the math ends up with a perfectly round number. However, not all of the space in a VHDX file is taken up by data blocks. There’s also a header and the block allocation table (BAT). I suppose there’s a way to figure out exact numbers, but an approximation is more than sufficient for this article.

Our next number of interest is “FileSize”. That represents the current expansion state of the VHDX, in bytes. With a bit more quick math, we discover that the VHDX is currently expanded to roughly 319 blocks. If you’re not following that math, we divided 10,708,058,112 (the current file size in bytes) by 33,554,432 (the number of bytes per block). Again, we’re fudging a bit because we didn’t determine the exact size of the header and block allocation table.

To be a little more accurate for our final numbers run, I’m going to only use the difference between the maximum file size and the current file size. That completely negates the size of the header, although there will still be some fudgery due to the block allocation table. We wind up with a potential expansion of about 1,600 blocks.
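Here’s the same block arithmetic as a small PowerShell sketch. Get-VhdxBlockEstimate is a hypothetical helper of my own; I’ve typed in the numbers from the screenshot by hand, and, like the prose above, it ignores the header, BAT, and metadata overhead, so treat the results as approximations.

```powershell
# Hypothetical helper: rough block counts from the Get-VHD numbers (all inputs in bytes).
# Header/BAT/metadata overhead is ignored, so these are approximations.
function Get-VhdxBlockEstimate
{
    param
    (
        [UInt64]$Size,      # maximum virtual size ("Size")
        [UInt64]$FileSize,  # current file size on disk ("FileSize")
        [UInt64]$BlockSize  # block size ("BlockSize")
    )
    [PSCustomObject]@{
        MaxBlocks       = [double]$Size / $BlockSize
        CurrentBlocks   = [double]$FileSize / $BlockSize
        RemainingBlocks = ([double]$Size - $FileSize) / $BlockSize
    }
}

# Values from the domain controller's VHDX shown above
Get-VhdxBlockEstimate -Size 64424509440 -FileSize 10708058112 -BlockSize 33554432
```

For these inputs, it reports 1,920 maximum blocks, about 319.1 current blocks, and about 1,600.9 remaining blocks. On a Hyper-V host, you could feed it the Size, FileSize, and BlockSize properties that Get-VHD returns instead of typing the numbers in.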

Let’s assume the absolute worst-case expansion scenario:

- This VHDX will eventually expand to 100% (it won’t)

- Each expansion of each block of this VHDX requires 100% CPU utilization for an entire second (it doesn’t — not even close)

- Expanding this VHDX requires 100 I/O operations per expansion event (I don’t know what it really needs, but that sounds like a lot to me)

Let’s further estimate that this VHDX will live for another three years from this point.

The final results:

- Fully expanding this VHDX will require 160,000 I/O operations. Across three years. A single disk that can perform 100 IOPS can do that much work in about 26.7 minutes, or 0.0017% of the lifetime of the VHDX. Assuming that it would really require 100 I/O operations to expand one block. Which, maybe it does, but probably doesn’t.

- Fully expanding this VHDX will require 1,600 seconds of CPU time (about 27 minutes). Across three years. Or, 0.0017% of the entire lifetime of the VHDX. Assuming that each block is expanded individually and requires an entire second of CPU time. Which, they won’t be, and, it doesn’t.

- All of these totals assume that the VHDX will eventually expand to its maximum capacity. It won’t.
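To make the worst-case arithmetic reproducible, here’s a PowerShell sketch with every pessimistic assumption from the list above exposed as a parameter. Get-WorstCaseExpansionCost is a hypothetical helper; its defaults (1,600 blocks, 100 I/Os per block, a 100 IOPS disk, one CPU-second per block, three 365-day years) are the made-up worst-case figures, not measurements.

```powershell
# Hypothetical helper: worst-case expansion cost under deliberately pessimistic assumptions.
function Get-WorstCaseExpansionCost
{
    param
    (
        [double]$Blocks = 1600,          # remaining blocks to expand
        [double]$IoPerBlock = 100,       # assumed I/O operations per block expansion
        [double]$DiskIops = 100,         # assumed IOPS of a single slow disk
        [double]$CpuSecondsPerBlock = 1, # assumed CPU-seconds per block expansion
        [double]$LifetimeYears = 3       # remaining life of the VHDX (365-day years)
    )
    $lifetimeSeconds = $LifetimeYears * 365 * 24 * 3600
    $totalIo    = $Blocks * $IoPerBlock
    $ioSeconds  = $totalIo / $DiskIops
    $cpuSeconds = $Blocks * $CpuSecondsPerBlock
    [PSCustomObject]@{
        TotalIo          = $totalIo
        IoMinutes        = $ioSeconds / 60
        IoPercentOfLife  = 100 * $ioSeconds / $lifetimeSeconds
        CpuSeconds       = $cpuSeconds
        CpuPercentOfLife = 100 * $cpuSeconds / $lifetimeSeconds
    }
}

Get-WorstCaseExpansionCost
```

With the defaults, the I/O side works out to 160,000 operations, or about 26.7 minutes of disk time. Change any parameter to see how little it moves the needle.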

So, even when we really fudge the numbers to make expansion look a lot scarier than it can ever possibly be, there still isn’t anything scary about it.

A Real Test

There’s a problem with all of that, though. It sounds good, but really, it’s just spit-balling. It’s better spit-balling than all of those people that tell you that you should hide under your bed when the expansion monster goes hunting, but, it’s still just spit-balling.

So, let’s prove that those people have no idea what they’re talking about.

To perform this test, I created two identical virtual machines. Each was running Windows Server 2016 Standard Edition. The hypervisor is Windows Server 2016 Datacenter Edition. To reduce the number of variables that could affect the test, I used internal storage, which is a pair of 7200 RPM SATA drives in a cheap ROMB mirror.

Virtual machine 1 is named “writetestdyn”. To that, I attached a 60GB dynamically-expanding VHDX. I formatted it inside the guest OS as D:. I set up performance monitors inside the management operating system to watch:

- Read operations per second from host’s C:

- Read bytes per second from host’s C:

- Write operations per second to host’s C:

- Write bytes per second to host’s C:

- Read operations per second from guest’s D:

- Read bytes per second from guest’s D:

- Write operations per second to guest’s D:

- Write bytes per second to guest’s D:
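If you want to collect the same kind of numbers yourself, the standard LogicalDisk performance counters cover all four metrics per volume. Here’s a minimal sketch: Get-DiskCounterPaths is a hypothetical helper that only builds the counter paths, and it assumes English counter names. It only sees volumes on the machine where it runs, so for a guest’s D: you’d run it inside the guest. That is an assumption for this sketch, not necessarily how the traces below were captured.

```powershell
# Hypothetical helper: build LogicalDisk counter paths for read/write ops and bytes on one volume.
# Assumes English (non-localized) counter names.
function Get-DiskCounterPaths
{
    param([string]$Volume = 'C:')
    $metrics = 'Disk Reads/sec', 'Disk Read Bytes/sec', 'Disk Writes/sec', 'Disk Write Bytes/sec'
    foreach ($m in $metrics)
    {
        '\LogicalDisk({0})\{1}' -f $Volume, $m
    }
}
```

On Windows, something like Get-Counter -Counter (Get-DiskCounterPaths -Volume 'C:') -SampleInterval 1 -MaxSamples 60 should sample all four counters once a second for a minute.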

Inside the guest, I ran a PowerShell script that wrote block-sized files until the disk ran out of space and measured how long that took. The script is a bit rough, but it did what I needed; it’s listed after the results.

Once that test was complete, I attached a 60GB fixed VHDX to the other virtual machine, named “writetestfixed”. I duplicated the test on that one.

I did watch the host’s CPU counters, but I couldn’t even get a clean reading to distinguish disk I/O CPU from normal CPU (in other words, CPU usage stayed near 0% the whole time). I don’t know why anyone would expect anything different. Conclusion: expansion events require so little CPU that measuring it is prohibitively difficult.

I got much more meat from the disk tests, though.

Fixed vs. Dynamically-Expanding VHDX Expansion Test: Time

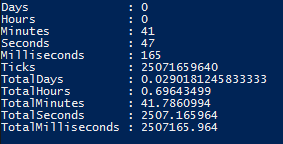

This is the screenshot showing the in-VM test:

Expanding this disk from 0 to its full size of 60GB required 41 minutes and 47.165 seconds. Remember that this ALSO required writing 60GB of data to the disk. If we compare that to the projections that I made above, then that means that this system would need to be able to write 60GB of data into a fixed disk in less than 20 minutes in order for the worst case scenario to be of concern.

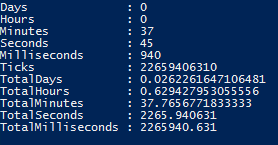

The reality? See for yourself:

The same system needs 37 minutes and 45.94 seconds to write 60GB of data into a fixed VHDX. The time added by expansion was about 4 minutes. Or, if the virtual machine used this disk 24 hours per day for 3 years, 0.00025% of its entire lifetime. To expand from 0 to 60GB. Terrifying, right?
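The overhead figure is plain subtraction, but here it is as a short PowerShell sketch so you can plug in your own timings. The durations are the ones from the two screenshots, and the three-year lifetime ignores leap days.

```powershell
# Durations read from the two screenshots (days, hours, minutes, seconds, milliseconds)
$dynamic  = [TimeSpan]::new(0, 0, 41, 47, 165)  # dynamically-expanding VHDX
$fixed    = [TimeSpan]::new(0, 0, 37, 45, 940)  # fixed VHDX
$overhead = $dynamic - $fixed                   # time attributable to expansion
$lifetime = [TimeSpan]::FromDays(3 * 365)       # three years, ignoring leap days
$overheadPercent = 100 * $overhead.TotalSeconds / $lifetime.TotalSeconds
'Expansion overhead: {0:N3} s ({1:N5}% of a three-year lifetime)' -f $overhead.TotalSeconds, $overheadPercent
```

That comes to a little over 241 seconds, which matches the “about 4 minutes” and 0.00025% figures.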

Conclusion: Expansion of a dynamically-expanding VHDX does not require a meaningful amount of time.

Fixed vs. Dynamically-Expanding VHDX Expansion Test: I/O Operations

As I started compiling the results of this test, I realized something. Hyper-V’s I/O tuner was active. The guest virtual machines were hammering their virtual disks so hard that the VMs became nearly unresponsive. That’s to be expected. However, the host was fine. I intentionally kept my activities to a minimum so that I wouldn’t disrupt the test, but I didn’t notice anything different. I’m telling you this because all of the people telling ghost stories about expansion invariably make the claim that expansion I/O operations are absolutely crippling. I say thee nay. And I can show you.

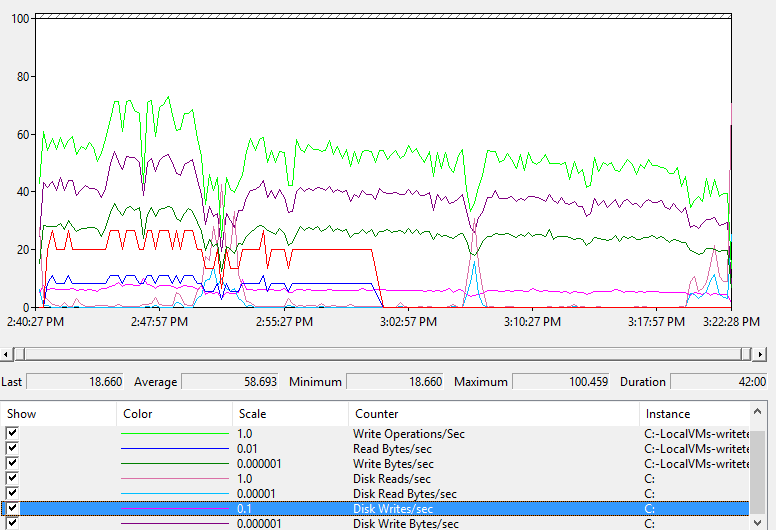

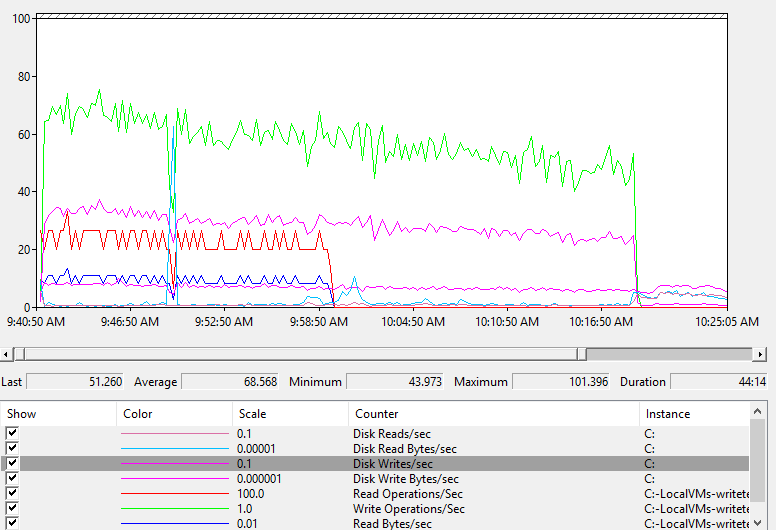

Performance trace for dynamically-expanding VHDX during the operation:

Performance trace for fixed VHDX during the operation:

Performance traces can be a little tough to look at. To make things easier, I ensured that both sets use matching colors for matching counters. I also highlighted the item that I considered most important: write IOPS from the host’s perspective. The fixed disk had higher IOPS than the dynamically-expanding disk. Without any meaningful levels of CPU, I can’t explain exactly what caused that. However, if total IOPS during VHDX expansion terrify you, then it’s clear that write operations to a fixed disk should leave you paralyzed in fright.

Conclusion: Disk IOPS are of no meaningful concern for dynamically-expanding VHDX.

If you want to see the traces yourself, you can download the trace files. Be aware that even though I started the traces close to the start of the operation, I was slower to turn them off. Watch the timestamps.

Overall Conclusion on VHDX Expansion Tests

The outcomes of these tests conclusively prove that:

- Expansion does not require measurable CPU

- Expansion does not require meaningful time

- Expansion does not cause blocking I/O

If anyone disagrees, it’s on them to provide countering proof. Scare rhetoric won’t cut it anymore.

But, Bigger VHDXs

Of course, someone will likely say that this test isn’t valid because of the size of the disk. OK, so you like to make big, fat VHDXs. That’s fair. Let’s use a disk that has 10 times as many empty blocks (somewhere in the 600GB range). At about 4 minutes of expansion overhead per 60GB, that’s 40 minutes total to expand. If it lives three years, that’s 0.0025% of its time. The rest of the numbers will remain constant. Well, constant if you use the same crummy hardware that I did. Which you wouldn’t, would you?
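That projection assumes expansion overhead scales linearly with the number of blocks. Here’s a sketch of that assumption: Get-ProjectedExpansionOverhead is a hypothetical helper, and its defaults (about 241 seconds of overhead for roughly 1,920 blocks) come from my 60GB test on slow hardware, so your per-block cost will differ.

```powershell
# Hypothetical helper: project expansion overhead, assuming it scales linearly with block count.
function Get-ProjectedExpansionOverhead
{
    param
    (
        [double]$Blocks,                   # blocks the disk still has to expand
        [double]$MeasuredSeconds = 241.225, # overhead measured in the 60GB test
        [double]$MeasuredBlocks = 1920,     # approximate blocks in the 60GB test disk
        [double]$LifetimeYears = 3          # 365-day years
    )
    $seconds = $Blocks * ($MeasuredSeconds / $MeasuredBlocks)
    [PSCustomObject]@{
        Minutes       = $seconds / 60
        PercentOfLife = 100 * $seconds / ($LifetimeYears * 365 * 24 * 3600)
    }
}

Get-ProjectedExpansionOverhead -Blocks 19200   # ten times the 60GB test disk
```

For 19,200 blocks (roughly 600GB), it comes out to a little over 40 minutes, or about 0.0025% of three years.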

Scared yet? Didn’t think so.

But, More VMs! Scalability!

I expect the next retort to mention something about running more than one VM and how the aggregate will cause more performance hits than what I showed you. Well, no.

- Even if you have three hundred virtual machines running on the exact same hardware, none of the numbers above change at all. There may be contention, but the requirements remain precisely the same for each. I doubt anyone could even figure out how all of that would overlay in a real-world scenario, but I’m certain that no one can come up with a map that should cause any kind of fear.

- As you saw, writing to a fixed VHDX uses higher IOPS than writing to a dynamic VHDX with expansion. So, contention arguments wind up in favor of dynamic VHDX.

- The I/O tuner will remain active. Your other VMs will be fine.

- If you’re running even one virtual machine on hardware as slow as what I used, you have other problems.

The Test Isn’t Realistic

This test isn’t realistic, but that works in favor of dynamically-expanding disks.

- Almost no dynamically-expanding VHDXs will start empty. OS disks will be seeded with OS files.

- Far fewer dynamically-expanding VHDXs will ever reach maximum expansion. That OS disk that I started the article with? I would be surprised if it ever grows even 5GB from its current size.

- If a disk ever starts at zero and expands to maximum as quickly as possible in a real-world environment, something is badly broken. I mean, really broken. What I learned during this test is that Hyper-V 2016 will pause the runaway VM when the storage space approaches maximum capacity and leave the other ones up. So, as long as you are monitoring like you’re supposed to, casualties of a runaway expansion can be mitigated.
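“Monitoring like you’re supposed to” can start with something as simple as comparing how much your dynamic VHDXs could still grow against the free space on the volume that holds them. Here’s a minimal sketch: Test-ExpansionHeadroom is a hypothetical helper, and it assumes all of the disks you pass in live on the same host volume.

```powershell
# Hypothetical helper: could the volume absorb every listed VHDX growing to its maximum Size?
function Test-ExpansionHeadroom
{
    param
    (
        [object[]]$Disks,  # objects with Size and FileSize properties (e.g. Get-VHD output)
        [UInt64]$FreeBytes # free space on the host volume holding those disks
    )
    $growth = [double]0
    foreach ($d in $Disks)
    {
        # Never count negative growth (a file can be slightly larger than its Size)
        $growth += [Math]::Max([double]0, [double]$d.Size - [double]$d.FileSize)
    }
    [PSCustomObject]@{
        PotentialGrowthBytes = $growth
        FreeBytes            = $FreeBytes
        Overcommitted        = $growth -gt $FreeBytes
    }
}
```

On a Hyper-V host, the inputs could come from something like Get-VM | Get-VMHardDiskDrive | ForEach-Object { Get-VHD -Path $_.Path } | Where-Object VhdType -eq 'Dynamic' and (Get-Volume -DriveLetter C).SizeRemaining. An Overcommitted result isn’t an emergency, since most disks never reach maximum size, but it tells you how much room you’re counting on.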

The Script

This is the script that I used for the test. It’s very rough but it served its purpose. I’m providing it more so that my methodology is transparent than anything else. If you can tweak it for your purposes, great!

The execution call that I made was: Test-BlockWrites -BlockCount 0 -Path D:\testwrites. This forced it to use all remaining space. The path must already exist; if it doesn’t, the script quietly falls back to the TEMP folder.

Side note: there is a call to the random number generator to get seed data for a file. If you write an all-zero block to a dynamically-expanding VHDX, it just updates the BAT without writing anything to the data region. That causes any speed tests to be invalid (unless you’re specifically testing for that).

```powershell
#requires -Modules Storage

function Test-BlockWrites
{
    [CmdletBinding(ConfirmImpact='High', DefaultParameterSetName='BySize')]
    param
    (
        [Parameter()][UInt32]$BlockSize = 0,
        [Parameter(ParameterSetName='BySize')][UInt64]$ByteCount = 0,
        [Parameter(ParameterSetName='ByBlock')][UInt32]$BlockCount = 0,
        [Parameter()][String]$Path
    )
    process
    {
        # Fall back to the TEMP folder if no valid path was supplied
        if([String]::IsNullOrEmpty($Path) -or -not (Test-Path -Path $Path))
        {
            $Path = $env:TEMP
        }
        if($BlockSize -eq 0)
        {
            $BlockSize = 33554432 # default block size for VHDX (32MB)
        }
        $Path = (Resolve-Path -Path $Path).Path
        $TargetDrive = (Get-Item -Path $Path).PSDrive.Name
        $MaxSize = (Get-Volume -DriveLetter $TargetDrive).SizeRemaining
        # Round down so we never plan more blocks than the volume can hold
        $MaxBlocks = [UInt32][Math]::Floor($MaxSize / $BlockSize)
        switch($PSCmdlet.ParameterSetName)
        {
            'BySize' {
                if($ByteCount -lt $BlockSize) { $ByteCount = $BlockSize }
                if($ByteCount -gt $MaxSize) { $ByteCount = $MaxSize }
                $BlockCount = [UInt32][Math]::Floor($ByteCount / $BlockSize)
            }
            'ByBlock' {
                # 0 (or an oversized request) means "fill all remaining space"
                if($BlockCount -eq 0 -or $BlockCount -gt $MaxBlocks) { $BlockCount = $MaxBlocks }
            }
        }
        # Random payload: an all-zero block would only update the BAT
        $Payload = New-Object Byte[] $BlockSize
        $RNG = New-Object System.Random
        $RNG.NextBytes($Payload)
        $TempDir = New-Item -Path $Path -Name ('TestBlockWrites{0}' -f (Get-Date).Ticks) -ItemType Directory
        # Time only the write loop; Measure-Command outputs a TimeSpan
        Measure-Command {
            for($i = 0; $i -lt $BlockCount; $i++)
            {
                $FilePath = Join-Path -Path $TempDir.FullName -ChildPath ('Block{0:D16}.data' -f $i)
                [System.IO.File]::WriteAllBytes($FilePath, $Payload)
            }
        }
        # Clean up the test files
        Remove-Item -Path $TempDir.FullName -Recurse -Force
    }
}
```

4 thoughts on "Why Expansion for Hyper-V’s Dynamic VHDX Doesn’t Matter"

Thanks for the article.

I even put some SQL databases on a 600GB VHDX. The only problem I had was when I wanted to expand it to 800GB: I saw that a backup (dumps) was running as a scheduled task and generating a lot of I/O. Then I canceled the expansion, and from then on the drive was degraded and the Hyper-V settings were hanging/locked. Finally, I needed to reboot the host.