Save to My DOJO

In the past few years, sophisticated attackers have targeted vulnerabilities in CPU acceleration techniques. Cache side-channel attacks represent a significant danger. They magnify on a host running multiple virtual machines. One compromised virtual machine can potentially retrieve information held in cache for a thread owned by another virtual machine. To address such concerns, Microsoft developed its new “HyperClear” technology pack. HyperClear implements multiple mitigation strategies. Most of them work behind the scenes and require no administrative effort or education. However, HyperClear also includes the new “core scheduler”, which might need you to take action.

The Classic Scheduler

Now that Hyper-V has all new schedulers, its original has earned the “classic” label. I wrote an article on that scheduler some time ago. The advanced schedulers do not replace the classic scheduler so much as they hone it. So, you need to understand the classic scheduler in order to understand the core scheduler. A brief recap of the earlier article:

- You assign a specific number of virtual CPUs to a virtual machine. That sets the upper limit on how many threads the virtual machine can actively run.

- When a virtual machine assigns a thread to a virtual CPU, Hyper-V finds the next available logical processor to operate it.

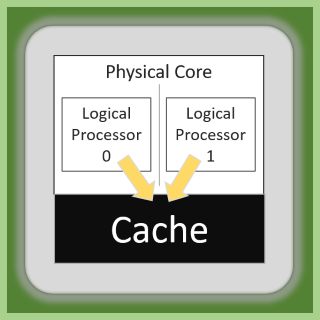

To keep it simple, imagine that Hyper-V assigns threads in round-robin fashion. Hyper-V does engage additional heuristics, such as trying to keep a thread with its owned memory in the same NUMA node. It also knows about simultaneous multi-threading (SMT) technologies, including Intel’s Hyper-Threading and AMD’s recent advances. That means that the classic scheduler will try to place threads where they can get the most processing power. Frequently, a thread shares a physical core with a completely unrelated thread — perhaps from a different virtual machine.

Risks with the Classic Scheduler

The classic scheduler poses a cross-virtual machine data security risk. It stems from the architectural nature of SMT: a single physical core can run two threads but has only one cache.

In my research, I discovered several attacks in which one thread reads cached information belonging to the other. I did not find any examples of one thread polluting the others’ data. I also did not see anything explicitly preventing that sort of assault.

In my research, I discovered several attacks in which one thread reads cached information belonging to the other. I did not find any examples of one thread polluting the others’ data. I also did not see anything explicitly preventing that sort of assault.

On a physically installed operating system, you can mitigate these risks with relative ease by leveraging antimalware and following standard defensive practices. Software developers can make use of fencing techniques to protect their threads’ cached data. Virtual environments make things harder because the guest operating systems and binary instructions have no influence on where the hypervisor places threads.

The Core Scheduler

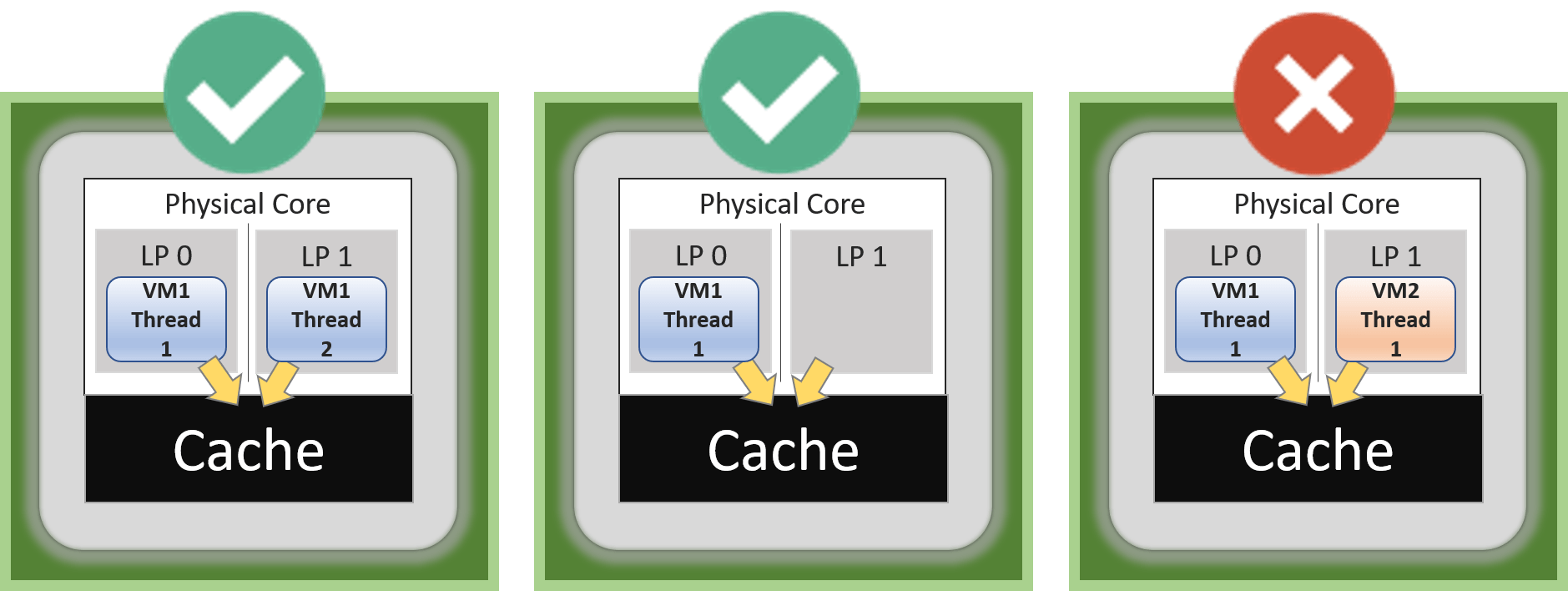

The core scheduler makes one fairly simple change to close the vulnerability of the classic scheduler: it never assigns threads from more than one virtual machine to any physical core. If it can’t assign a second thread from the same VM to the second logical processor, then the scheduler leaves it empty. Even better, it allows the virtual machine to decide which threads can run together.

We will move on through implementation of the scheduler before discussing its impact.

Implementing Hyper-V’s Core Scheduler

The core scheduler has two configuration points:

- Configure Hyper-V to use the core scheduler

- Configure virtual machines to use two threads per virtual core

Many administrators miss that second step. Without it, a VM will always use only one logical processor on its assigned cores. Each virtual machine has its own independent setting.

We will start by changing the scheduler. You can change the scheduler at a command prompt (cmd or PowerShell) or by using Windows Admin Center.

How to Use the Command Prompt to Enable and Verify the Hyper-V Core Scheduler

For Windows and Hyper-V Server 2019, you do not need to do anything at the hypervisor level. You still need to set the virtual machines. For Windows and Hyper-V Server 2016, you must manually switch the scheduler type.

You can make the change at an elevated command prompt (PowerShell prompt is fine):

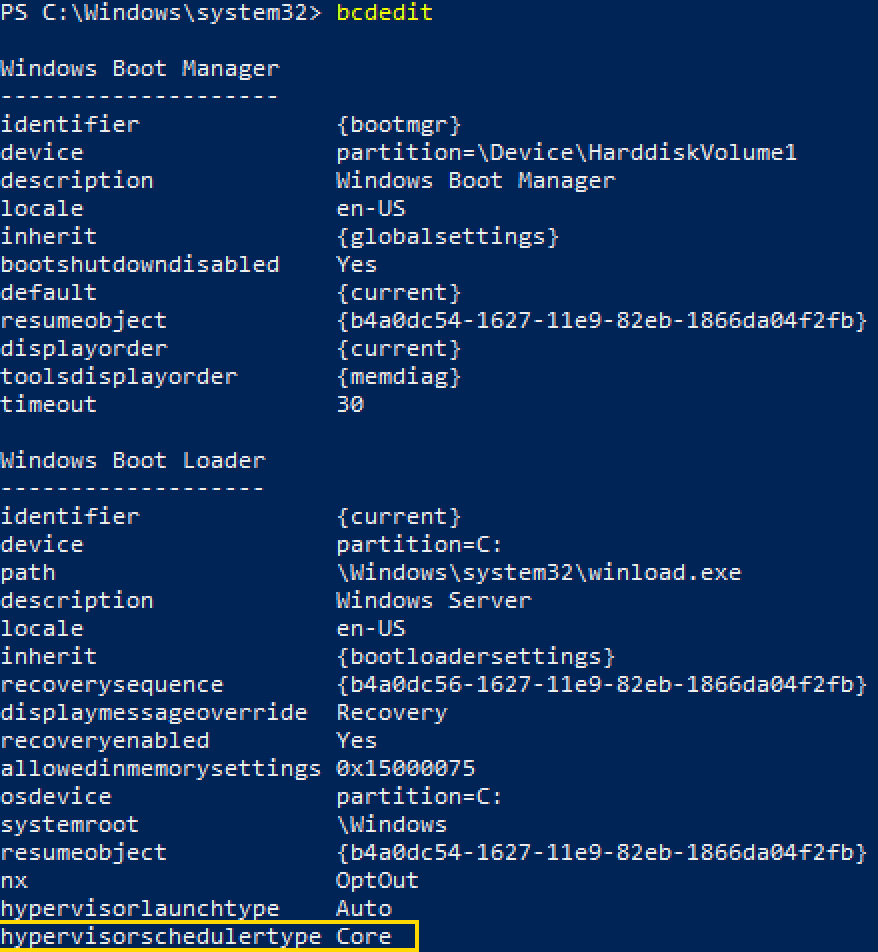

bcdedit /set hypervisorschedulertype core

Note: if bcdedit does not accept the setting, ensure that you have patched the operating system.

Reboot the host to enact the change. If you want to revert to the classic scheduler, use “classic” instead of “core”. You can also select the “root” scheduler, which is intended for use with Windows 10 and will not be discussed further here.

To verify the scheduler, just run bcdedit by itself and look at the last line:

bcdedit will show the scheduler type by name. It will always appear, even if you disable SMT in the host’s BIOS/UEFI configuration.

How to Use Windows Admin Center to Enable the Hyper-V Core Scheduler

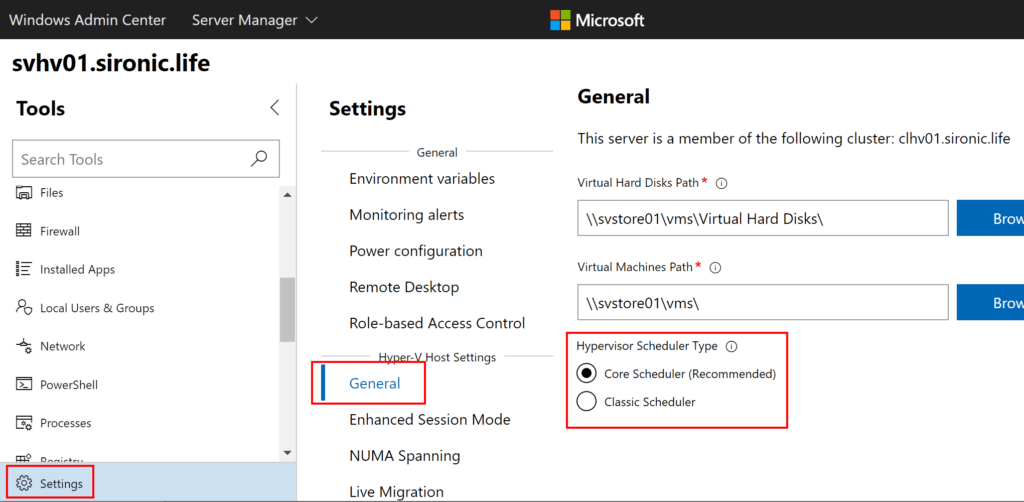

Alternatively, you can use Windows Admin Center to change the scheduler.

- Use Windows Admin Center to open the target Hyper-V host.

- At the lower left, click Settings. In most browsers, it will hide behind any URL tooltip you might have visible. Move your mouse to the lower left corner and it should reveal itself.

- Under Hyper-V Host Settings sub-menu, click General.

- Underneath the path options, you will see Hypervisor Scheduler Type. Choose your desired option. If you make a change, WAC will prompt you to reboot the host.

Note: If you do not see an option to change the scheduler, check that:

- You have a current version of Windows Admin Center

- The host has SMT enabled

- The host runs at least Windows Server 2016

The scheduler type can change even if SMT is disabled on the host. However, you will need to use bcdedit to see it (see previous sub-section).

Implementing SMT on Hyper-V Virtual Machines

With the core scheduler enabled, virtual machines can no longer depend on Hyper-V to make the choice to use a core’s second logical processor. Hyper-V will expect virtual machines to decide when to use the SMT capabilities of a core. So, you must enable or disable SMT capabilities on each virtual machine just like you would for a physical host.

Because of the way this technology developed, the defaults and possible settings may seem unintuitive. New in 2019, newly-created virtual machines can automatically detect the SMT status of the host and hypervisor and use that topology. Basically, they act like a physical host that ships with Hyper-Threaded CPUs — they automatically use it. Virtual machines from previous versions need a bit more help.

Every virtual machine has a setting named “HwThreadsPerCore”. The property belongs to the Msvm_ProcessorSettingData CIM class, which connects to the virtual machine via its Msvm_Processor associated instance. You can drill down through the CIM API using the following PowerShell (don’t forget to change the virtual machine name):

Get-CimInstance -Namespace root/virtualization/v2 -ClassName Msvm_ComputerSystem -Filter 'ElementName="svdc01"' | Get-CimAssociatedInstance -Namespace root/virtualization/v2 -ResultClassName Msvm_Processor | Get-CimAssociatedInstance -Namespace root/virtualization/v2 -ResultClassName Msvm_ProcessorSettingData | Select-Object -Property HwThreadsPerCore

The output of the cmdlet will present one line per virtual CPU. If you’re worried that you can only access them via this verbose technique hang in there! I only wanted to show you where this information lives on the system. You have several easier ways to get to and modify the data. I want to finish the explanation first.

The HwThreadsPerCore setting can have three values:

- 0 means inherit from the host and scheduler topology — limited applicability

- 1 means 1 thread per core

- 2 means 2 threads per core

The setting has no other valid values.

A setting of 0 makes everything nice and convenient, but it only works in very specific circumstances. Use the following to determine defaults and setting eligibility:

- VM config version < 8.0

- Setting is not present

- Defaults to 1 if upgraded to VM version 8.x

- Defaults to 0 if upgraded to VM version 9.0+

- VM config version 8.x

- Defaults to 1

- Cannot use a 0 setting (cannot inherit)

- Retains its setting if upgraded to VM version 9.0+

- VM config version 9.x

- Defaults to 0

I will go over the implications after we talk about checking and changing the setting.

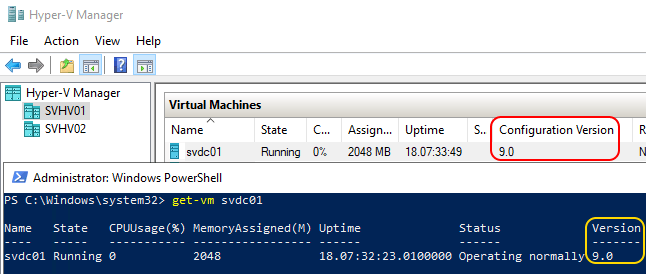

You can see a VM’s configuration version in Hyper-V Manager and PowerShell’s Get-VM :

The version does affect virtual machine mobility. I will come back to that topic toward the end of the article.

How to Determine a Virtual Machine’s Threads Per Core Count

Fortunately, the built-in Hyper-V PowerShell module provides direct access to the value via the *-VMProcessor cmdlet family. As a bonus, it simplifies the input and output to a single value. Instead of the above, you can simply enter:

Get-VMProcessor -VMName svdc01 | Select-Object -Property HwThreadCountPerCore

If you want to see the value for all VMs:

Get-VMProcessor -VMName * | Select-Object -Property VMName, HwThreadCountPerCore

You can leverage positional parameters and aliases to simplify these for on-the-fly queries:

Get-VMProcessor * | select VMName, HwThreadCountPerCore

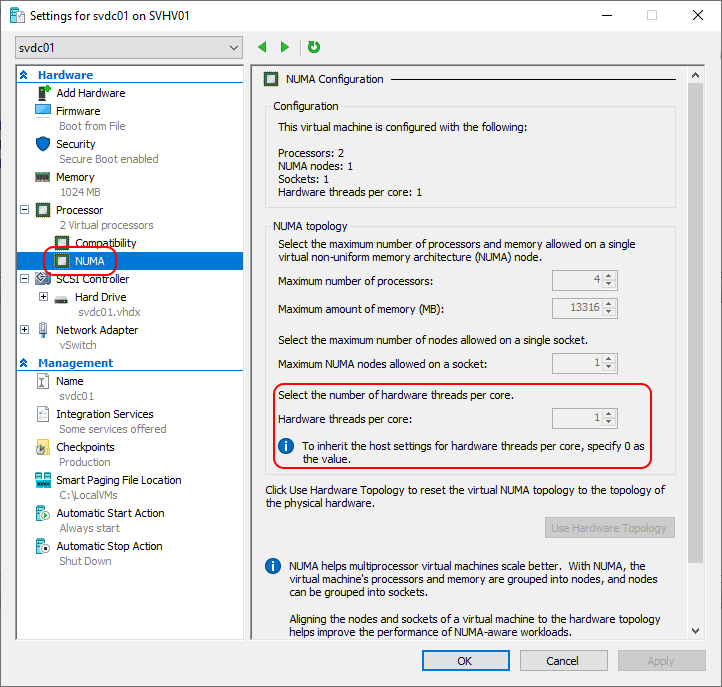

You can also see the setting in recent version of Hyper-V Manager (Windows Server 2019 and current versions of Windows 10). Look on the NUMA sub-tab of the Processor tab. Find the Hardware threads per core setting:

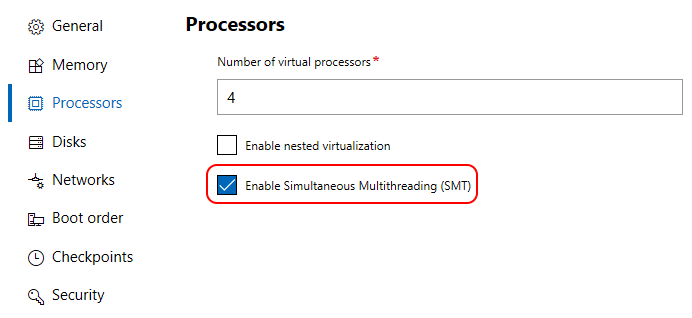

In Windows Admin Center, access a virtual machine’s Processor tab in its settings. Look for Enable Simultaneous Multithreading (SMT).

If the setting does not appear, then the host does not have SMT enabled.

How to Set a Virtual Machine’s Threads Per Core Count

You can easily change a virtual machine’s hardware thread count. For either the GUI or the PowerShell commands, remember that the virtual machine must be off and you must use one of the following values:

- 0 = inherit, and only works on 2019+ and current versions of Windows 10 and Windows Server SAC

- 1 = one thread per hardware core

- 2 = two threads per hardware core

- All values above 2 are invalid

To change the setting in the GUI or Windows Admin Center, access the relevant tab as shown in the previous section’s screenshots and modify the setting there. Remember that Windows Admin Center will hide the setting if the host does not have SMT enabled. Windows Admin Center does not allow you to specify a numerical value. If unchecked, it will use a value of 1. If checked, it will use a value of 2 for version 8.x VMs and 0 for version 9.x VMs.

To change the setting in PowerShell:

Set-VMProcessor -VMName svdc01 -HwThreadCountPerCore 2

To change the setting for all VMs in PowerShell:

Set-VMProcessor -VMName * -HwThreadCountPerCore 2

Note on the cmdlet’s behavior: If the target virtual machine is off, the setting will work silently with any valid value. If the target machine is on and the setting would have no effect, the cmdlet behaves as though it made the change. If the target machine is on and the setting would have made a change, PowerShell will error. You can include the -PassThru parameter to receive the modified vCPU object:

Set-VMProcessor -VMName * -HwThreadCountPerCore 2 -Passthru | select VMName, HwThreadCountPerCore

Considerations for Hyper-V’s Core Scheduler

I recommend using the core scheduler in any situation that does not explicitly forbid it. I will not ask you to blindly take my advice, though. The core scheduler’s security implications matter, but you also need to think about scalability, performance, and compatibility.

Security Implications of the Core Scheduler

This one change instantly nullifies several exploits that could cross virtual machines, most notably in the Spectre category. Do not expect it to serve as a magic bullet, however. In particular, remember that an exploit running inside a virtual machine can still try to break other processes in the same virtual machine. By extension, the core scheduler cannot protect against threats running in the management operating system. It effectively guarantees that these exploits cannot cross partition boundaries.

For the highest level of virtual machine security, use the core scheduler in conjunction with other hardening techniques, particularly Shielded VMs.

Scalability Impact of the Core Scheduler

I have spoken with one person who was left with the impression that the core scheduler does not allow for oversubscription. They called into Microsoft support, and the engineer agreed with that assessment. I reviewed Microsoft’s public documentation as it was at the time, and I understand how they reached that conclusion. Rest assured that you can continue to oversubscribe CPU in Hyper-V. The core scheduler prevents threads owned by separate virtual machines from running simultaneously on the same core. When it starts a thread from a different virtual machine on a core, the scheduler performs a complete context switch.

You will have some reduced scalability due to the performance impact, however.

Performance Impact of the Core Scheduler

On paper, the core scheduler presents severe deleterious effects on performance. It reduces the number of possible run locations for any given thread. Synthetic benchmarks also show a noticeable performance reduction when compared to the classic scheduler. A few points:

- Generic synthetic CPU benchmarks drive hosts to abnormal levels using atypical loads. In simpler terms, they do not predict real-world outcomes.

- Physical hosts with low CPU utilization will experience no detectable performance hits.

- Running the core scheduler on a system with SMT enabled will provide better performance than the classic scheduler on the same system with SMT disabled

Your mileage will vary. No one can accurately predict how a general-purpose system will perform after switching to the core scheduler. Even a heavily-laden processor might not lose anything. Remember that, even in the best case, an SMT-enabled core will not provide more than about a 25% improvement over the same core with SMT disabled. In practice, expect no more than a 10% boost. In the simplest terms: switching from the classic scheduler to the core scheduler might reduce how often you enjoy a 10% boost from SMT’s second logical processor. I expect few systems to lose much by switching to the core scheduler.

Some software vendors provide tools that can simulate a real-world load. Where possible, leverage those. However, unless you dedicate an entire host to guests that only operate that software, you still do not have a clear predictor.

Compatibility Concerns with the Core Scheduler

As you saw throughout the implementation section, a virtual machine’s ability to fully utilize the core scheduler depends on its configuration version. That impacts Hyper-V Replica, Live Migration, Quick Migration, virtual machine import, backup, disaster recovery, and anything else that potentially involves hosts with mismatched versions.

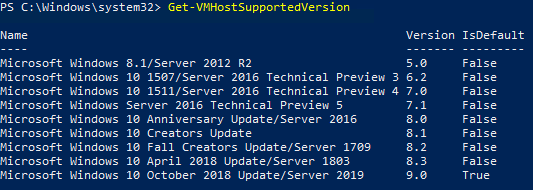

Microsoft drew a line with virtual machine version 5.0, which debuted with Windows Server 2012 R2 (and Windows 8.1). Any newer Hyper-V host can operate virtual machines of its version all the way down to version 5.0. On any system, run Get-VMHostSupportedVersion to see what it can handle. From a 2019 host:

So, you can freely move version 5.0 VMs between a 2012 R2 host and a 2016 host and a 2019 host. But, a VM must be at least version 8.0 to use the core scheduler at all. So, when a v5.0 VM lands on a host running the core scheduler, it cannot use SMT. I did not uncover any problems when testing an SMT-disabled guest on an SMT-enabled host or vice versa. I even set up two nodes in a cluster, one with Hyper-Threading on and the other with Hyper-Threading off, and moved SMT-enabled and SMT-disabled guests between them without trouble.

The final compatibility verdict: running old virtual machine versions on core-scheduled systems means that you lose a bit of density, but they will operate.

Summary of the Core Scheduler

This is a lot of information to digest, so let’s break it down to its simplest components. The core scheduler provides a strong inter-virtual machine barrier against cache side-channel attacks, such as the Spectre variants. Its implementation requires an overall reduction in the ability to use simultaneous multi-threaded (SMT) cores. Most systems will not suffer a meaningful performance penalty. Virtual machines have their own ability to enable or disable SMT when running on a core-scheduled system. All virtual machine versions prior to 8.0 (WS2016/W10 Anniversary) will only use one logical processor per core when running on a core-scheduled host.

Not a DOJO Member yet?

Join thousands of other IT pros and receive a weekly roundup email with the latest content & updates!

11 thoughts on "What is the Hyper-V Core Scheduler?"

Excellent article as always, Eric. I love the way you take your time to cover the fundamental technical details and build up from that, so the reader can get a good grasp of not just what is happening, but why.

Just a note for anyone limited to Hyper-V 2012/R2 (basically any version prior to 2016) that Microsoft’s HyperClear (of which the new Core Scheduler is a component) is not compatible and cannot be deployed, no matter your patch level – while Eric obviously covered the behaviour for 2016 & 2019, I wanted to explicitly point out that prior versions can’t implement these enhancements, leaving those hosts wide open for exploit by side-channel attacks. Hyper-V 2016 and 2019 are both 100% free though, so there’s no reason you can’t test upgrading to these in your environment and then upgrade your older hosts.

If you’re still running virtual machines on top of full versions of Server 2012/R2, this is a good argument for upgrading your license, as you simply can’t protect against side-channel exploits on those machines. Frankly considering the potential ramifications for a successful side-channel attack and the impact it will have on your business, it is well worth the cost of the new license(s).

So using the Core schedule, and have 2 threads per hardware core set, is assigning 2 vCPU the equivalent of 1 CPU core with SMT (2 threads), or 2 CPU cores with SMT (4 Threads)

Neither. The assigned vCPU count specifies how many logical processors the VM can access simultaneously.

A VM with 1 vCPU can use 1 logical processor, which practically means that it always uses 1 LP in a physical core while the other LP always sits idle. A VM with 2 vCPU can use 2 LPs, but the scheduler has discretion on placing them together on the same physical core or distributing them across two cores.

Edit, clarification on “it always uses 1 LP in a physical core while the other LP always sits idle”: The other LP always sits idle while that VM’s single thread executes. When the scheduler switches that CPU’s context to another VM, or to processes in the management OS, it might get a thread to execute.