Save to My DOJO

In this guide, we’ll cover how to create fault domains and configure them in Windows Server 2019. We will also run down the different layers of resiliency provided by Windows Server and fault domain awareness with Storage Spaces Direct. Let’s get started!

Resiliency and High-Availability

Many large organizations deploy their services across multiple data centers to not only provide high-availability but to also support disaster recovery (DR). This allows services to move from servers, virtualization hosts, cluster nodes or clusters in one site to hardware in a secondary location. Prior to the Windows Server 2016 release, this was usually done through deploying a multi-site (or “stretched”) failover cluster. This solution worked well, but it had some gaps in its manageability, namely that it was never easy to determine what hardware was running at each site. Virtual machines (VMs) were also limited to move between cluster nodes and sites, but had no other mobile granularity, even though most datacenters organize their hardware by chassis and racks. With Windows Server 2016 and 2019, Microsoft now provides organizations with the ability to not only have server high-availability but also resiliency to chassis or rack failures and integrated site awareness through “fault domains”.

What is a Fault Domain?

A fault domain is a set of hardware components that have a shared single point of failure, such as a single power source. To provide fault tolerance, you need to have multiple fault domains so that a VM or service can move from one fault domain to another fault domain, such as from one rack to another.

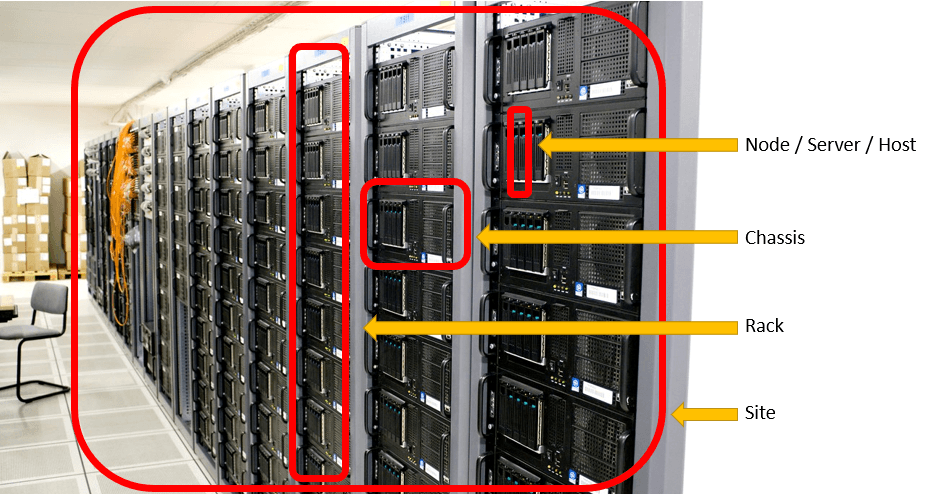

The following image helps you identify these various datacenter components.

Defining a Node, Chassis, Rack, and Site for Fault Domains

Source: https://ccsearch.creativecommons.org/photos/c461e460-6a99-4421-b5f1-906e74c9446b

Configuring Fault Domains in Windows Server 2019

First, let’s review the different layers of resiliency now provided by Windows Server. The following table shows the hierarchy of the Windows Server hardware stack:

| Fault Domain | High-Availability |

| Application | Failover clustering is used to automatically restart an application’s services or move it to another cluster node. If the application is running inside a virtual machine (VM) then guest clustering can be used which creates a cluster of virtualized hosts. |

| Virtual Machine | Virtual machines (VMs) run on a failover cluster and can be restarted or failover to another cluster node. A virtualized application can run inside the VM. |

| Node (Server / Host) | A server can move its application to another node on the same chassis using failover clustering. The server is the single point of failure, and this could be caused by an operating system crash. |

| Chassis | A server which has a chassis failure can move to another chassis in the same rack. A chassis is commonly used with blade servers and its single point of failure could be a single power source or fan. |

| Rack | A server which has a rack failure can move to another rack in the same site. A rack may have a single point of failure from its top of rack (TOR) switch. |

| Site | If an entire site is lost, such as from a natural disaster, a server can move to a secondary site (datacenter). |

This implementation of fault domains lets you move nodes between different chassis, racks, and sites. Remember that hardware components are only defined by the software, and they do not change the physical configuration of your datacenter. This means that if two nodes are in the same physical chassis and it fails, then both will go offline, even if you have declared them to be in different fault domains via the management interface.

This blog will specifically focus on the latest site availability and fault domain features, but check out Altaro’s blogs on Failover Clustering for more information. Additionally, as a side-note, Altaro VM Backup can provide DR and recovery functionality with its replication engine if desired.

Fault Domain Awareness with Storage Spaces Direct

A key scenario for using fault domains is to distribute your data, not just across different disks and nodes, but also across different chassis, racks and sites so that it is always available in case of an outage. Microsoft implements this using storage spaces direct (S2D) which divides cloned disks across these different fault domains. This allows you to deploy commodity storage drives in your datacenters, and its data is automatically replicated between each disk. In the initial release of S2D, the disks were mirrored between two cluster nodes, that if one failed, the data was already available on the second server. With the added layers of chassis and rack-awareness, additional disks can be created and distributed across different nodes, chassis, racks, and sites, providing granular resiliency throughout the different hardware layers. This means that if a node crashes, the data is still available elsewhere within the same chassis. If an entire chassis loses its power, a copy is on another chassis within the same rack. If a rack becomes unavailable due to a TOR switch misconfiguration, the data can be recovered from another rack. And if the datacenter fails, a copy of the disk is available at the secondary site.

One important consideration is that the site awareness and fault domains must be configured before storage spaces direct is set up. If your S2D cluster is already running and you are configuring fault domains later, you must manually move your nodes int the correct fault domains, first evicting the node from your cluster and its drive from your storage pool using the cmdlet:

PS C:> Remove-ClusterNode -CleanUpDisks

Creating Fault Domains

Once you have deployed your hardware and understand the different layers in your hardware stack you will need to enable fault domain awareness. Since a minority of Windows Server users have multiple datacenters, this must be enabled through a command run from any node. Microsoft wanted to avoid exposing it directly through the GUI interface so that inexperienced users did not accidentally turn it on and expect an operation that they lacked the hardware to support. Enable fault domains using the following PowerShell cmdlet:

PS C:> (Get-Cluster).AutoAssignNodeSite=1

Remember that this hardware configuration is hierarchical, so nodes are part of chassis, which are stored in racks, which reside in sites. Your nodes will use the actual node name, and this is set automatically, such as N1.contoso.com. Next, you can define all of the different chassis, racks, and sites in your environment using friendly names and descriptions. This is helpful because your event logs will reflect your naming conventions, making troubleshooting easy.

You can name each of your chassis to match your hardware specs, such as a “Chassis 1”.

PS C:> New-ClusterFaultDomain –Type Chassis -Name “C1” PS C:> New-ClusterFaultDomain –Type Chassis -Name “C2”

Next you can assign names to your racks, such as “Rack 1”.

PS C:> New-ClusterFaultDomain –Type Rack -Name “R1” PS C:> New-ClusterFaultDomain –Type Rack -Name “R2”

Finally define any sites you have and provide them with a friendly name, like “Primary” or “Seattle”.

PS C:> New-ClusterFaultDomain –Type Site –Name “Primary” PS C:> New-ClusterFaultDomain –Type Site –Name “Secondary”

For each of these types, you can also use the -Description or -Location switch to add additional contextual information which is displayed in event logs, making troubleshooting and hardware maintenance easier.

Configuring Fault Domains

Once you have defined the different fault domains, you can configure their hierarchical structure using a parent (and child) relationship. Starting with the node, you define which chassis they belong to and then move up the chain. For example, you may configure nodes N1 and N2 to be part of chassis C1 using PowerShell:

PS C:> Set-ClusterFaultDomain –Name “N1”,”N2” -Parent “C1”

Similarly, you may set chassis C1 and C2 to reside in rack R1:

PS C:> Set-ClusterFaultDomain –Name “C1”,”C2” -Parent “R1”

Then configure racks R1 and R2 within the primary datacenter using:

PS C:> Set-ClusterFaultDomain –Name “R1”,”R2” -Parent “Primary”

To view your configuration and these relationships, run the Get-ClusterFaultDomain cmdlet.

You can also define the relationship of the hardware in an XML file. This method is described in Microsoft’s Fault domain awareness page. If you want to dig deeper, check out the full PowerShell syntax.

Wrap-Up

Now you are able to take advantage of the latest site-awareness features for Windows Server Failover Clustering, giving you additional resiliency throughout your hardware stack. We’ll have further content focused on this area in the near future, so stay tuned!

Finally, what about you? Do you see this being useful in your organization? Do you see any barrier to implementation? Let us know in the comments section below!

Not a DOJO Member yet?

Join thousands of other IT pros and receive a weekly roundup email with the latest content & updates!

39 thoughts on "How to Customize Site-Aware Clusters and Fault Domains"

Symon, is it possible to apply fault domain on existing 2016 12 node HCI S2D Cluster? All CSV’s are 3way mirror, 3 Racks, 4 nodes each?

Hi Patrick,

Microsoft supports S2D fault domains in Windows Server 2016 and 2019, so this configuration should work. Make sure that you run cluster validation on the entire solution.

Per Microsoft Documentation:

Specify fault domains before enabling Storage Spaces Direct, if possible. This enables the automatic configuration to prepare the pool, tiers, and settings like resiliency and column count, for chassis or rack fault tolerance. Once the pool and volumes have been created, data will not retroactively move in response to changes to the fault domain topology. To move nodes between chassis or racks after enabling Storage Spaces Direct, you should first evict the node and its drives from the pool using Remove-ClusterNode -CleanUpDisks.

Source: https://docs.microsoft.com/en-us/windows-server/failover-clustering/fault-domains

Thanks,

Symon

Sorry, question was. Can Fault Domain be setup after the fact? If so what are the steps.

Hi Patrick,

This is possible, but requires some manual configuration, so it is not ideal. The guidance is as follows: If your S2D cluster is already running and you are configuring fault domains later, you must manually move your nodes into the correct fault domains, first evicting the node from your cluster and its drive from your storage pool using the cmdlet: PS C:> Remove-ClusterNode -CleanUpDisks

More info on this cmdlet is here: https://docs.microsoft.com/en-us/windows-server/storage/storage-spaces/remove-servers

Thanks,

Symon

An impressive share! I’ve just forwarded this onto a colleague who has been conducting a little research on this. And he in fact bought me dinner because I discovered it for him… lol. So allow me to reword this…. Thanks for the meal!! But yeah, thanks for spending some time to discuss this matter here on your web site.|