Hyper-V Storage is one of the most variegated subjects in all of computing. As with most other technologies, Hyper-V can use many different types of storage and methods of storage connectivity and configuration. This affords it a great deal of flexibility at the expense of presenting a maze of choices. Configuring and connecting storage is usually not the biggest challenge that administrators face. The focus of this article will be architecting a solid storage configuration for your Hyper-V infrastructure with best practices to help get you started.

One very real danger in storage design is over-engineering. It can be difficult to predict in advance what a virtualization platform will need. As a result, many institutions will purchase a cookie-cutter design from a vendor’s list of arbitrarily tiered and titled packages. In the most common cases, these designs will cost far more than a more realistic solution.

While rarer, some do not provide adequate performance. The danger with an underpowered solution is that it can cause an institutional revolt against virtualization in general, which in turn can cause management to demand an architectural reversion to non-virtualized systems. This is a more serious concern than over-engineering, as non-virtualized systems almost always have a higher overall cost to purchase and operate.

Another issue with storage is that, even with Hyper-V’s versatility, not all kinds will function well with Hyper-V. The easiest way to avoid this problem is to purchase equipment listed in the Windows Server Catalog. The site shows the logos that manufacturers are allowed to use if their hardware is certified to run with the operating system(s) that you will be using.

Assess Your Environment to Plan for Hyper-V’s Storage Needs

The best way to know what you’re going to need is to find out what you are using today. If you’ve already got monitoring systems in place, you may not need to do anything but aggregate and analyze the data that you’ve got on hand. If you don’t have monitoring in place, then you’ll need to get started as soon as possible. The longer the data collection phase is, the more accurate your predictions will be. What you’re primarily looking for is input/output operations per second (IOPS) and capacity. If possible, you will want to determine the percentage of IOPS that are read operations as opposed to write operations, as that balance can affect your solution.
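As a rough illustration of the analysis step, the sketch below summarizes a set of collected samples into average IOPS, peak IOPS, and read percentage. The sample format (one pair of reads per second and writes per second for each collection interval) and the function name are assumptions made for this example; adapt them to whatever your monitoring system exports.

```python
from statistics import mean


def summarize_io(samples):
    """Summarize (reads_per_sec, writes_per_sec) samples.

    Returns the average IOPS, the peak IOPS, and the share of
    operations that were reads. Expects at least one sample.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    totals = [reads + writes for reads, writes in samples]
    total_reads = sum(reads for reads, _ in samples)
    total_ops = sum(totals)
    read_pct = 100.0 * total_reads / total_ops if total_ops else 0.0
    return {
        "avg_iops": mean(totals),
        "peak_iops": max(totals),
        "read_pct": read_pct,
    }


# Hypothetical samples, one per collection interval.
samples = [(120, 40), (300, 100), (80, 80)]
summary = summarize_io(samples)
print(summary)
```

Sizing against the peak rather than the average is usually the safer choice, since storage that only meets the average will struggle during busy periods.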

If you’re working with a storage or virtualization solutions vendor, they may already have a storage assessment tool designed specifically for this purpose. This is usually your best option as it has been built for the specific purpose of automating what will be a tedious process. In a later article in this series, free tools will be explored that will enable you to perform similar actions yourself.

Storage Options for Hyper-V

Once you have an idea of what your deployment’s storage needs will be, the next portion will be to determine the best way to meet them. That requires an understanding of your options. They’ll be presented here in neatly defined categories, but there’s no need for you to make such a clean choice. If you have multiple workloads that vary in their needs, you’re certainly allowed to use multiple solutions with varied capabilities to match.

Disk Options

The most basic component of a storage system is its disks. Disks are common commodities, which means that there is a fairly direct correlation between price and capability. The two major capability metrics for drives are speed and capacity; as one rises, the other tends to decrease. Inexpensive drives can be slow and large or fast and small. Higher-priced drives offset some of the differences.

Most drives contain conventional rotating platters; the presence of the electric motor that spins them is the source of the word drive. These vary greatly in speed. These spinning drives are usually categorized by their rotational speeds, which range from 5400 to 15,000 RPM. Most server-class systems won’t use anything slower than 7200 RPM.

A much more recent addition to the storage field is solid state drives (SSD). These have no moving parts, so they are very fast. Unfortunately, this comes at a very low capacity per dollar in comparison to traditional spinning disks. SSDs also do not have the same length of history, so the technology is still maturing rapidly. If you choose to use SSD for your virtualization environment, take care to select SSDs that are certified for server loads.

To balance the speed of SSD against the capacity of spinning disks, new solid state hybrid drives (SSHDs) are appearing. They combine both technologies in the same package along with electronics that automatically place commonly-accessed data in solid state storage and more rarely accessed data on the slower spinning platters. The size of the SSD portion tends to be very small, making it little more than a cache. To date, these drives are most commonly found as singular units in desktop and laptop systems. They are gradually making their way into server-class systems. However, due to the less predictable access nature of virtualized environments, SSHDs are not a worthy investment for most situations.

As you design your storage system(s), keep one thing in mind: scaling out is usually more effective than scaling up. Costs escalate quickly when trying to find a faster or larger disk. Using multiple disks that are slower or smaller can address this cost issue. Scaling out spinning disks can greatly enhance their performance as the slow data seek process can be handled in parallel.
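To make the scale-out argument concrete, here is a minimal back-of-the-envelope sketch. It uses the common rule of thumb that a spinning disk's random IOPS is roughly one divided by the sum of its average seek time and its average rotational latency (half a revolution). The seek times below are illustrative assumptions, not vendor figures, and the calculation ignores caching and any RAID or Storage Spaces write penalty.

```python
import math


def spinning_disk_iops(rpm, avg_seek_ms):
    """Rough random IOPS estimate for a single spinning disk."""
    # On average the platter must turn half a revolution to reach the data.
    rotational_latency_ms = 0.5 * 60_000 / rpm
    return 1000.0 / (avg_seek_ms + rotational_latency_ms)


def disks_needed(target_iops, per_disk_iops):
    """Number of disks working in parallel needed to reach target_iops."""
    return math.ceil(target_iops / per_disk_iops)


# Assumed average seek times: 8.5 ms for 7200 RPM, 3.5 ms for 15,000 RPM.
iops_7200 = spinning_disk_iops(7200, 8.5)
iops_15k = spinning_disk_iops(15000, 3.5)

target = 1000  # hypothetical IOPS requirement from the assessment phase
print(round(iops_7200), disks_needed(target, iops_7200))
print(round(iops_15k), disks_needed(target, iops_15k))
```

With these assumed numbers, about thirteen 7200 RPM disks or six 15,000 RPM disks reach the same target, so the decision comes down to price per disk, available bays, and the capacity you need alongside the IOPS.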

Exactly how to scale out is a larger discussion. If you’re using Storage Spaces, you’ll likely use one of its protection schemes. For a traditional storage system, you’ll likely choose one of the RAID options. The following article includes a detailed discussion of these options: https://www.altaro.com/hyper-v/storage-and-hyper-v-part-2-drive-combinations/.

Local vs. Remote Storage

If you’re not planning to cluster your systems or if you’re going to restrict yourself to two hosts per cluster, you have the option to use locally-attached storage. The benefit of local storage is that it tends to be cheaper. If it’s attached to an internal system bus, its latency will be a fraction of what you can expect from any external storage. The downside of internal storage is that it will have the lowest maximum expansion capacity.

Somewhere between local and remote storage is external direct-attached storage. This often comes in the form of enclosures that allow connections from up to two separate hosts simultaneously. Beyond the ability to cluster two hosts, it also tends to allow for greater system expansion than internal storage. Many of these enclosures use very high speed data transfer cabling that places storage access speeds on par with internal storage.

Hyper-Converged Systems

A recent introduction into systems virtualization is the concept of “hyper-convergence”. This fancy term simply means that, instead of layered combinations of specialized systems, general purpose hosts in specialized chassis provide all of the necessary components for a complex system: compute, memory, disk, and networking. For example, a traditional cluster would require server-class computers to supply compute and memory, a SAN to provide storage, and several switches to provide network.

A hyper-converged solution is a single chassis with one or more blade-like inserts; each of these inserts provides CPU, memory, and disk for the entire chassis (and potentially other chassis) and the chassis provides networking connectivity between its contained hosts and the rest of the network. What sets a hyper-converged system apart from a standalone host is that these systems typically allow all of these resources to be shared, whether in a traditional cluster configuration or in some other form of a scale-out design. In order to make this work, all hyper-convergence solutions available today include some sort of management software component that presents the underlying components to the hypervisor.

Remote Storage Connectivity

If your disks are all internal or direct-attached, the operating system likely already sees them and you can continue on to the next section. If you are using a GUI environment, please read this article for instructions on connecting to storage using the visual tools. If you are using iSCSI, follow the same article. The iscsicpl.exe application is available even on Hyper-V Server and Windows Server in Core mode. Fiber channel connectivity is very dependent upon the manufacturer of the fiber channel adapter that is installed in the connecting host and upon the manufacturer of the target storage system. Consult their documentation for information.

SMB storage for virtual machines is a new feature whose introduction coincided with the inclusion of SMB version 3.0 in Server 2012. This feature allows commodity Windows Server systems to host the storage for Hyper-V Server using the familiar file share model.

Because the SMB format is published, third parties can also design hardware solutions that provide SMB connectivity. Performance with SMB 3 is on par with similar hardware using traditional connectivity methods such as iSCSI and fiber channel and is only dependent upon the capabilities of the hardware. The more pressing issue with SMB 3 is typically its dependence upon Active Directory and related permissions.

The simplest method is to grant computer account(s) for the host(s) running Hyper-V Full Control over the SMB share and its NTFS location. However, this can be an imperfect solution in remote control situations. You may find that you need to configure delegation for some situations and CredSSP for others.

- More information on SMB storage can be found on Jose Barreto’s blog.

- Information on configuring constrained delegation for Hyper-V (not just SMB) is available here.

- CredSSP for Hyper-V is well-explained here.

Remote Storage for Clusters Configuration Options

When clustering Hyper-V hosts, you have two additional options besides SMB 3 for virtual machine storage: cluster disks and Cluster Shared Volumes.

Cluster disks are the older technology and can be used for almost every application that Microsoft Failover Clustering can be applied to. Because multiple virtual machines can be running on unique nodes simultaneously, it can sometimes be forgotten that each role runs in an active/passive configuration, not active/active. Because of the active/passive design, a single role can be given its own remote disks, and they will be owned by the same cluster node that owns the role. You saw in section 3.4 how to assign a cluster disk directly to a virtual machine. Such disks will be automatically moved with the virtual machine when it fails over to other nodes.

Cluster Shared Volumes (CSVs) are a newer option for cluster storage. When they were introduced with the 2008 R2 server version, they were only authorized for use with Hyper-V. Now, they can be used with several other roles, such as the Scale-Out File Server. CSVs still only have one owner, just as cluster disks do, but they can be read from and written to by multiple nodes simultaneously. Instead of a single virtual machine having exclusive access to a disk, a disk can be shared between many virtual machines. This grants a much higher degree of flexibility, greatly improved space utilization, and easier provisioning.

CSVs do have a downside that makes them something of a mixed blessing. Because only one node can own the disk at any given time, that owner is responsible for tracking the metadata of every file that it contains. This means timestamps, owners, permissions, etc. There is a minor performance hit for every non-owner node. For Hyper-V, this is usually negligible. It can be mitigated by ensuring that networks designated for cluster usage have a solid, clear connection.

There is a benefit to CSV’s single-owner design, however: if any node loses direct connectivity to a CSV, its access can be brokered via the owner node. This is called Redirected Access Mode. If the owner node loses connectivity, ownership is transferred to another node without downtime.

Distribution of Virtual Machines across Separate Storage Locations

Smaller deployments are likely to place all of their virtual machines on the same storage system. There’s no requirement that you do so, even for a single virtual machine. You can mix local and remote storage. You can mix remote storage types. You can have a single virtual machine with multiple hard drives spread across different locations. It is not supported to have a clustered virtual machine that uses any local storage at all, even though the system will permit this configuration.

The purpose of such distribution is to apply hardware to the loads it is best suited to serve. Very fast storage tends to have relatively low capacity; the inverse is also true. Some storage provides an advantage to read operations while other storage provides more balanced I/O characteristics. From a single Hyper-V host or a single cluster, you can serve virtual machines with varying needs by utilizing a variety of back-end storage systems.

Pass-Through Disks

Hyper-V allows you to connect a virtual machine directly to a physical hard disk or a LUN. This is known as a pass-through disk. In early versions of Hyper-V, these were preferred for performance-intensive operations, like SQL servers. Microsoft has all but eliminated the performance difference. At this time, it is no longer recommended to use pass-through disks for any purpose. They lack the flexibility and feature set of virtual hard disks. This article on our blog includes a more thorough discussion of pass-through disks and the reasons not to use them.

Fixed, Dynamically Expanding, and Differencing Virtual Hard Disks

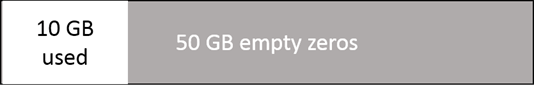

Hyper-V provides three virtual hard disk types. Fixed disks are the simplest. They are a file that contains a perfect bit-by-bit representation of a hard drive along with a bit of header information for Hyper-V (or even Windows) to work with. These are usually, but not always, the fastest-performing virtual disk files.

60 GB Fixed VHDX with 10GB data

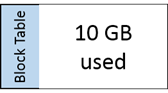

Dynamically expanding virtual disks are a bit more complicated. They start at only 4 kilobytes in size. As data is written to them, they expand to contain it. If they reach their maximum allotted capacity, they stop growing. The virtual hard disk file contains the same header information as its fixed-size cousin, but also includes a table to track which blocks are in use. This table must be referenced to locate blocks whenever the file is written to or read from. If data is deleted from the disk file, it can be shrunk, but must be taken offline to do so.

60 GB Dynamically Expanding VHDX with 10GB data
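The block-tracking table described above can be pictured with a small toy model. This is only a conceptual sketch: it does not reflect the actual VHDX on-disk format, and the class and method names are invented for illustration.

```python
class DynamicDisk:
    """Toy model of a dynamically expanding disk (not the real VHDX layout)."""

    def __init__(self, max_blocks):
        self.max_blocks = max_blocks  # the disk's maximum allotted capacity
        self.table = {}               # virtual block number -> index in store
        self.store = []               # backing file contents; grows on demand

    def _check(self, block):
        if not 0 <= block < self.max_blocks:
            raise IndexError("block is outside the disk's maximum size")

    def write(self, block, data):
        self._check(block)
        if block in self.table:
            # Block already allocated: overwrite in place, no growth.
            self.store[self.table[block]] = data
        else:
            # First write to this block: grow the file and record the mapping.
            self.table[block] = len(self.store)
            self.store.append(data)

    def read(self, block):
        self._check(block)
        index = self.table.get(block)  # every read consults the table
        return self.store[index] if index is not None else b"\x00"

    def allocated_blocks(self):
        return len(self.store)


disk = DynamicDisk(max_blocks=60)
disk.write(5, b"a")
disk.write(42, b"b")
disk.write(5, b"c")  # rewriting an allocated block does not grow the file
print(disk.allocated_blocks(), disk.read(5), disk.read(7))
```

The extra table lookup on every read and write is the source of the overhead attributed to this disk type, and the fact that the backing store only ever grows in this model mirrors why reclaiming space is a separate, offline operation.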

There is a large mass of literature devoted to warning against use of the dynamically expanding hard disk. Most of it is poorly researched and only anecdotally supported. Dynamically expanding hard disks are suitable for most virtual machines. Notable exceptions are database systems, often including e-mail servers (such as Microsoft Exchange). In Server versions 2012 and later, the performance gap between fixed and dynamically expanding disks has narrowed to nearly nothing; dynamically expanding disks actually provide better performance in some instances. The only meaningful risk in their usage is that of improper planning. Because they do have the ability to grow, it is possible for the physical space to become completely full as they expand. Be sure to properly plan and, more importantly, monitor space usage. A larger discussion of the dynamically expanding disk is available in this post: https://www.altaro.com/hyper-v/proper-use-of-hyper-v-dynamic-disks/.
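A simple way to reason about that planning risk is to compare what the disks occupy today with what they could occupy if every one of them grew to its maximum. The sketch below assumes you already have each disk's current file size and maximum size in gigabytes; the function name and the sample figures are hypothetical.

```python
def overcommit_report(physical_gb, disks):
    """Compare current and potential usage of dynamically expanding disks.

    disks is a list of (current_file_size_gb, maximum_size_gb) pairs.
    """
    used = sum(current for current, _ in disks)
    potential = sum(maximum for _, maximum in disks)
    return {
        "used_gb": used,
        "potential_gb": potential,
        "free_gb": physical_gb - used,
        # True when the disks could outgrow the physical space.
        "overcommitted": potential > physical_gb,
    }


# Hypothetical volume of 500 GB holding three dynamically expanding disks.
report = overcommit_report(500, [(10, 60), (40, 127), (200, 400)])
print(report)
```

An overcommitted volume is not automatically a problem, but it is exactly the situation in which free space needs to be monitored and alerted on.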

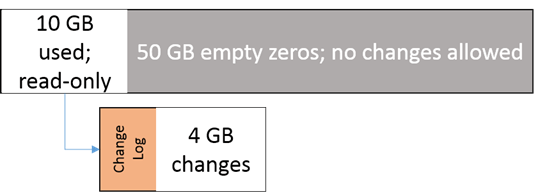

The final disk type is the differencing disk. This disk type requires a parent. The parent can be of any of the three types. Unlike the other two types, the guest operating system of a virtual machine does not distinguish the differencing disk. It simply writes to the assigned disk. Under the hood, Hyper-V ensures that all writes go only to the differencing disk whereas reads come from the newest data copy, whether it is in the differencing disk or its parent (or even further up the chain). Differencing disks are used when a virtual machine has a checkpoint. Since the parent disk is not modified, all of its data remains intact. If you choose to discard the changes in the differencing disk, the parent disk is left exactly as it was when the checkpoint was created.

60 GB Fixed VHDX with a Differencing Disk

Differencing disks are potentially dangerous. They can grow all the way up to the size of the parent disk, and since you can continue to chain into further differencing disks, there is no maximum limit on growth. Also, as the chain lengthens, read operations can require more time as the data is sought. Use differencing disks sparingly and with care.
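The read and write behavior of a differencing chain can be sketched with another toy model. As before, this is a conceptual illustration with invented names, not a description of how Hyper-V implements it internally.

```python
class VirtualDisk:
    """Toy disk that may have a parent, forming a differencing chain."""

    def __init__(self, parent=None):
        self.parent = parent
        self.blocks = {}  # only the blocks written to this particular disk

    def write(self, block, data):
        # Writes always land in this disk; the parent is never modified.
        self.blocks[block] = data

    def read(self, block):
        # Walk from the newest disk toward the root until the block is found.
        disk = self
        while disk is not None:
            if block in disk.blocks:
                return disk.blocks[block]
            disk = disk.parent
        return None  # never written anywhere in the chain

    def chain_length(self):
        length, disk = 0, self
        while disk is not None:
            length += 1
            disk = disk.parent
        return length


base = VirtualDisk()
base.write(0, "original")
base.write(1, "untouched")

child = VirtualDisk(parent=base)       # e.g. created by a checkpoint
child.write(0, "changed")

grandchild = VirtualDisk(parent=child)  # a second checkpoint

print(grandchild.read(0), grandchild.read(1), base.read(0))
print(grandchild.chain_length())
```

Reading block 1 from the newest disk has to walk through every disk in the chain before finding the data in the root, which is why long chains slow reads down.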

Virtual Machine Storage Needs

The largest files in a virtual machine are its virtual hard disks. The configuration files are comparatively tiny, needing only a few kilobytes. Other potentially large files are BIN files. A BIN file holds the active memory contents of a virtual machine while it is in a Saved State. It will equal the size of the virtual machine’s memory. If the virtual machine is not using Dynamic Memory, the BIN file will always equal the Startup value. If Dynamic Memory is enabled, the BIN file will resize itself to match the allocated number.

The BIN file always exists for a virtual machine that is in a Saved State. It also exists while the virtual machine is powered on if its Automatic Stop Action is set to its default of Save. This ensures that adequate space is available and speeds up the write process by pre-allocating it.
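For capacity planning, the rules above reduce to simple arithmetic. The sketch below is one hedged way to express them: it assumes that, for a virtual machine using Dynamic Memory, you plan for the configured maximum, since the BIN file follows the allocated amount and could grow that far. The function and parameter names are hypothetical.

```python
def planned_vm_storage_gb(vhd_gb, startup_gb, dynamic_max_gb=None,
                          reserves_bin=True):
    """Estimate the space to plan for one virtual machine.

    vhd_gb          total size of the virtual hard disks
    startup_gb      Startup memory
    dynamic_max_gb  maximum memory if Dynamic Memory is enabled, else None
    reserves_bin    True if the VM will be saved or uses the Save stop action
    """
    if not reserves_bin:
        bin_gb = 0
    elif dynamic_max_gb is not None:
        bin_gb = dynamic_max_gb  # assumption: plan for the worst case
    else:
        bin_gb = startup_gb      # static memory: BIN equals Startup
    return vhd_gb + bin_gb


static_vm = planned_vm_storage_gb(vhd_gb=60, startup_gb=4)
dynamic_vm = planned_vm_storage_gb(vhd_gb=60, startup_gb=2, dynamic_max_gb=8)
no_bin_vm = planned_vm_storage_gb(vhd_gb=60, startup_gb=4, reserves_bin=False)
print(static_vm, dynamic_vm, no_bin_vm)
```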

10 Hyper-V Storage Best Practices

There is no universally proper approach to designing and configuring storage for Hyper-V. There are a number of solid best practices to follow that will help you to get the most out of your equipment.

- The management operating system (whether Hyper-V Server or Windows Server) does not require a significant amount of disk space or speed to operate. Do not sacrifice drive bays or spindles that could be assigned to virtual machines just for the management operating system. Instead, use your storage system’s logical volume creation abilities or Windows’/Hyper-V Server’s ability to create partitions during installation. Place the management operating system in its own space (60 GB should be sufficient).

- Superior drive performance is best achieved by using multiple drives in parallel as opposed to using a small number of fast drives.

- Do not place virtualized domain controllers on SMB 3 storage. If the virtualization host or the storage host is unable to contact a domain controller, the SMB 3 channel cannot be authenticated and no connection is possible. This will prevent the virtualization host from being able to start the domain controller. Other connectivity types do not rely on Active Directory and are therefore a superior choice for domain controllers. If possible, keep at least one domain controller on local storage.

- Hyper-V does not support a loopback storage configuration. This is a situation in which a Hyper-V system attempts to provide its own “remote” storage. As an example, you cannot have Hyper-V Server connect to a virtual machine running on a share that the same Hyper-V system is hosting. It also means you cannot use a single cluster to host Hyper-V and the Scale-Out File Server role and connect its virtual machines to its own SOFS.

- Do not use a single large LUN or logical disk to hold all virtual machines. Divide virtual machine storage into at least a few smaller spaces even if it continues to use the same underlying physical system. This grants you the flexibility to use Storage Live Migration to address changing needs. For instance, moving virtual hard disks into a fresh space automatically defragments them. You may opt to begin using VDI on Storage Spaces, in which Microsoft’s deduplication is supported. Also, many hypervisor-aware backup solutions can leverage hardware snapshot providers, which will operate at the LUN level. Separate LUNs mean smaller snapshots and quicker operations.

- Use Performance Monitor or a similar tool to gather a baseline trace of storage performance after initial deployment and again once it has reached full production-level distribution. If you suspect performance issues at any point in the future, pull additional traces for comparison. In general, a Disk Queue Length of 2 or lower does not indicate a disk performance issue. If the queue length exceeds that number, first verify that no guests are performing excessive paging, as this indicates that insufficient memory is assigned to that guest.

- Do not optimize or select fast storage specifically to hold the page files of virtual machines. Even in active virtual machines, page files should contain rarely accessed information. Increase guest memory to solve page file performance issues instead of using fast storage.

- Use a monitoring system that is capable of actively warning you when storage is low on space or facing other issues.

- Where possible, use a dedicated space to hold checkpoints. If a checkpoint should fill all available space, only the virtual machines sharing that space will be affected.

- If you do not intend to change the default Automatic Stop Action of Save for virtual machines or if you will be saving them, ensure that you plan sufficient storage space for their BIN files.
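The queue length guideline from the list above can be applied to an exported trace with a few lines of code. This sketch assumes the trace has already been reduced to a plain list of Disk Queue Length samples. The threshold of 2 comes from the guideline, while the requirement of at least three consecutive samples is an assumption added here to filter out momentary spikes.

```python
def sustained_breaches(samples, threshold=2.0, min_run=3):
    """Find runs of samples above threshold lasting at least min_run samples.

    Returns a list of (start_index, run_length) tuples.
    """
    runs = []
    start = None
    for i, value in enumerate(samples):
        if value > threshold:
            if start is None:
                start = i  # a new run begins
        else:
            if start is not None and i - start >= min_run:
                runs.append((start, i - start))
            start = None
    # Handle a run that continues to the end of the trace.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs


# Hypothetical queue length samples taken at a fixed interval.
trace = [0.5, 1.0, 3.0, 1.5, 2.5, 4.0, 3.2, 2.1, 0.8]
breaches = sustained_breaches(trace)
print(breaches)
```

A run reported here is a prompt to investigate, starting with guest paging as described above, rather than proof of a storage bottleneck.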